• Extended version •

This repository will be continuously updated.

Step 1: Create a virtual environment

conda create -n nddepth python=3.8

conda activate nddepth

conda install pytorch=1.10.0 torchvision cudatoolkit=11.1

pip install matplotlib tqdm tensorboardX timm mmcv open3d
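
After the install commands above, a quick import check can catch a failed or partial install before training starts. The following is a minimal sketch and not part of the repository: the helper name `missing_packages` is hypothetical, and the import names are assumed to match the packages listed above (with `torch` and `torchvision` for the PyTorch install).

```python
import importlib.util

# Import names assumed to correspond to the packages installed above.
REQUIRED = ["torch", "torchvision", "matplotlib", "tqdm",
            "tensorboardX", "timm", "mmcv", "open3d"]


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are importable.")
```

Run it inside the activated `nddepth` environment; an empty result only means the modules can be located, not that the CUDA build matches your driver.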

Step 2: Download the modified scikit-image package, in which the input parameters of the Felzenszwalb algorithm have been changed to accommodate our method, then build and install it:

unzip scikit-image-0.17.2.zip

cd scikit-image-0.17.2

python setup.py build_ext -i

pip install -e .
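
Because the method relies on the modified scikit-image rather than a stock release, it can be worth confirming which copy Python actually imports. The sketch below is an illustration under assumptions: the helper `loaded_from` is hypothetical, and the directory name is taken from the unzip step above.

```python
from pathlib import Path


def loaded_from(module_file, source_dir):
    """Return True if module_file lives somewhere under source_dir."""
    module_path = Path(module_file).resolve()
    root = Path(source_dir).resolve()
    return root in module_path.parents


if __name__ == "__main__":
    try:
        import skimage
    except ImportError:
        print("scikit-image is not importable in this environment")
    else:
        # Assumes the script is run from the directory where the zip was extracted.
        ok = loaded_from(skimage.__file__, "scikit-image-0.17.2")
        print("version:", skimage.__version__)
        print("imported from the modified source tree:", ok)
```

If the second line prints `False`, another scikit-image installation is probably shadowing the editable install.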

Prepare the KITTI and NYUv2 datasets by following the instructions here, and download the SUN RGB-D dataset from here. Then modify the data path in the config files to point to your dataset locations.

You can download the generated surface normal ground truth for NYUv2 from here and for KITTI from here.

Before training, download the pretrained encoder backbone from here, then modify the pretrain path in the config files. If you want to train the KITTI_Official model, download the pretrained encoder backbone provided by MIM from here.
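
Editing the paths in several config files by hand is error-prone, so a small helper can do it. This is a minimal sketch that assumes each config file holds one `--flag value` pair per line (the convention used by BTS-style argument files); `set_config_value` is a hypothetical helper, `--data_path` is the flag shown in the test command further down, and `--pretrain` is an assumed flag name that should be checked against the actual config files.

```python
def set_config_value(config_text, flag, value):
    """Return config_text with the value of `flag` replaced, or appended if absent."""
    lines = config_text.splitlines()
    updated = False
    for i, line in enumerate(lines):
        parts = line.split()
        # Match only lines whose first token is exactly the requested flag.
        if parts and parts[0] == flag:
            lines[i] = f"{flag} {value}"
            updated = True
    if not updated:
        lines.append(f"{flag} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    example = "--dataset nyu\n--data_path /old/location\n"
    example = set_config_value(example, "--data_path", "/data/nyu")
    # "--pretrain" is an assumed flag name, used here for illustration only.
    example = set_config_value(example, "--pretrain", "/weights/backbone.pth")
    print(example, end="")
```

To apply it to a real file, read the file's text, pass it through the helper, and write the result back.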

Training the NYUv2 model:

python nddepth/train.py configs/arguments_train_nyu.txt

Training the KITTI_Eigen model:

python nddepth/train.py configs/arguments_train_kittieigen.txt

Training the KITTI_Official model:

python nddepth_kittiofficial/train.py configs/arguments_train_kittiofficial.txt
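
Each training command above passes a text file as its only argument. A common way to support this, used by BTS, is argparse's `fromfile_prefix_chars` option. The sketch below illustrates that pattern under the assumption that `train.py` follows it; it is not the repository's parser, and only flags that appear elsewhere in this README are declared.

```python
import argparse
import os
import tempfile


def convert_arg_line_to_args(arg_line):
    """Split a config line such as '--max_depth 80' into separate tokens."""
    for arg in arg_line.split():
        if arg.strip():
            yield arg


def build_parser():
    # '@file' on the command line makes argparse read arguments from that file.
    parser = argparse.ArgumentParser(fromfile_prefix_chars="@")
    parser.convert_arg_line_to_args = convert_arg_line_to_args
    parser.add_argument("--dataset", type=str, default="nyu")
    parser.add_argument("--data_path", type=str)
    parser.add_argument("--max_depth", type=float, default=10.0)
    return parser


def parse_config(path):
    """Parse a config file by handing it to argparse with the '@' prefix."""
    return build_parser().parse_args(["@" + path])


def demo():
    """Write a small config file to a temporary location and parse it."""
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as f:
        f.write("--dataset kitti\n--data_path /data/kitti\n--max_depth 80\n")
        path = f.name
    try:
        return parse_config(path)
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

The custom `convert_arg_line_to_args` matters because argparse by default treats each line of the file as a single argument, so a line holding both a flag and its value would not parse.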

Evaluate the NYUv2 model:

python nddepth/eval.py configs/arguments_eval_nyu.txt

Evaluate the KITTI_Eigen model:

python nddepth/eval.py configs/arguments_eval_kittieigen.txt

To generate KITTI Online evaluation data for the KITTI_Official model:

python nddepth_kittiofficial/test.py --data_path /path/to/dataset --filenames_file ./data_splits/kitti_official_test.txt --max_depth 80 --checkpoint_path /path/to/pretrained/checkpoint --dataset kitti --do_kb_crop
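
The `--do_kb_crop` flag in the command above is not described in this README. In BTS, which this code builds on, the flag of the same name crops each KITTI image to 352 x 1216 pixels, anchored at the bottom and centred horizontally. The sketch below reproduces that convention as an assumption about this repository's behaviour; `kb_crop_box` is a hypothetical helper.

```python
def kb_crop_box(height, width, crop_h=352, crop_w=1216):
    """Return (left, top, right, bottom) for a bottom-anchored, centred crop.

    The default crop size follows the BTS convention and is assumed,
    not confirmed, to match what --do_kb_crop does here.
    """
    top = int(height - crop_h)
    left = int((width - crop_w) / 2)
    return (left, top, left + crop_w, top + crop_h)


if __name__ == "__main__":
    # A typical KITTI frame is 375 x 1242 pixels.
    print(kb_crop_box(375, 1242))
```

The returned tuple uses the same ordering as the box argument of `PIL.Image.Image.crop`.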

You can download the qualitative depth results of NDDepth, IEBins, NeWCRFs, PixelFormer, AdaBins and BTS on the NYUv2 and KITTI_Eigen test sets from here, and the qualitative point cloud results of the same methods on the NYUv2 test set from here.

If you want to derive these results yourself, refer to test.py.

If you want to perform inference on a single image, run the following, setting --dataset to kitti or nyu:

python nddepth/inference_single_image.py --dataset kitti --image_path /path/to/image --checkpoint_path /path/to/pretrained/checkpoint

This produces the qualitative depth and surface normal results.
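
To run the single-image script over many files, or on paths that contain spaces, it is safer to build the command as an argument list than as one shell string. This is a minimal sketch using only the flags shown above; `build_inference_command` is a hypothetical helper and assumes the script is launched from the repository root.

```python
import sys


def build_inference_command(dataset, image_path, checkpoint_path):
    """Return the argument list for one call to inference_single_image.py."""
    if dataset not in ("kitti", "nyu"):
        raise ValueError("dataset must be 'kitti' or 'nyu'")
    return [
        sys.executable,
        "nddepth/inference_single_image.py",
        "--dataset", dataset,
        "--image_path", str(image_path),
        "--checkpoint_path", str(checkpoint_path),
    ]


if __name__ == "__main__":
    # Placeholder paths for illustration only.
    cmd = build_inference_command("nyu", "samples/my image.png", "checkpoints/nyu.pth")
    print(cmd)
```

Pass the resulting list to `subprocess.run(cmd, check=True)` to execute it; looping over the files in a directory then processes a whole folder.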

| Model | Abs Rel | Sq Rel | RMSE | a1 | a2 | a3 | Link |
|---|---|---|---|---|---|---|---|
| NYUv2 (Swin-L) | 0.087 | 0.041 | 0.311 | 0.936 | 0.991 | 0.998 | [Google] [Baidu] |
| KITTI_Eigen (Swin-L) | 0.050 | 0.141 | 2.025 | 0.978 | 0.998 | 0.999 | [Google] [Baidu] |

| Model | SILog | Abs Rel | Sq Rel | RMSE | a1 | a2 | a3 | Link |
|---|---|---|---|---|---|---|---|---|
| KITTI_Official (Swinv2-L) | 7.53 | 5.23 | 0.81 | 2.34 | 0.972 | 0.996 | 0.999 | [Google] |
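
The column names in the tables above are the standard monocular depth metrics. The sketch below computes them from their usual definitions on plain Python lists of valid depth values. It is an illustration rather than the repository's evaluation code, which may additionally crop, clamp or mask the depth maps, and `depth_metrics` is a hypothetical name.

```python
import math


def depth_metrics(gt, pred):
    """Compute common depth metrics for paired positive depth values."""
    n = len(gt)
    # Threshold accuracy: fraction of pixels whose ratio error is below 1.25^k.
    ratios = [max(g / p, p / g) for g, p in zip(gt, pred)]
    a1 = sum(r < 1.25 for r in ratios) / n
    a2 = sum(r < 1.25 ** 2 for r in ratios) / n
    a3 = sum(r < 1.25 ** 3 for r in ratios) / n

    abs_rel = sum(abs(g - p) / g for g, p in zip(gt, pred)) / n
    sq_rel = sum((g - p) ** 2 / g for g, p in zip(gt, pred)) / n
    rmse = math.sqrt(sum((g - p) ** 2 for g, p in zip(gt, pred)) / n)

    # Scale-invariant log error, multiplied by 100 as is common practice.
    d = [math.log(p) - math.log(g) for g, p in zip(gt, pred)]
    variance = sum(x * x for x in d) / n - (sum(d) / n) ** 2
    silog = math.sqrt(max(variance, 0.0)) * 100

    return {"abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse,
            "a1": a1, "a2": a2, "a3": a3, "silog": silog}


if __name__ == "__main__":
    print(depth_metrics([2.0, 4.0], [2.2, 3.5]))
```

Lower is better for Abs Rel, Sq Rel, RMSE and SILog; higher is better for a1, a2 and a3.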

If you find our work useful in your research, please consider citing our papers:

@inproceedings{shao2023nddepth,

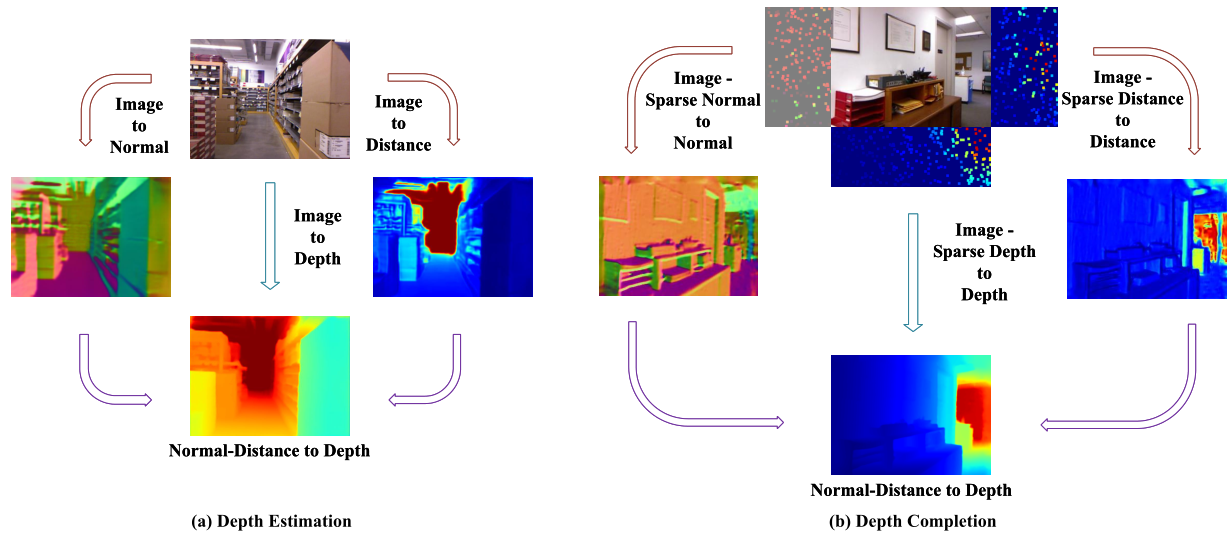

title={NDDepth: Normal-distance assisted monocular depth estimation},

author={Shao, Shuwei and Pei, Zhongcai and Chen, Weihai and Wu, Xingming and Li, Zhengguo},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={7931--7940},

year={2023}

}

@article{shao2023nddepth,

title={NDDepth: Normal-Distance Assisted Monocular Depth Estimation and Completion},

author={Shao, Shuwei and Pei, Zhongcai and Chen, Weihai and Chen, Peter CY and Li, Zhengguo},

journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},

year={2024}

}

If you have any questions, please feel free to contact swshao@buaa.edu.cn.

Our code is based on the implementations of NeWCRFs, BTS and StructDepth. We thank the authors for their excellent work.