This is an official repository of

Efficient Camera Exposure Control for Visual Odometry via Deep Reinforcement Learning, Shuyang Zhang, Jinhao He, Yilong Zhu, Jin Wu, and Jie Yuan.

This paper is currently under review at IEEE Robotics and Automation Letters (RAL).

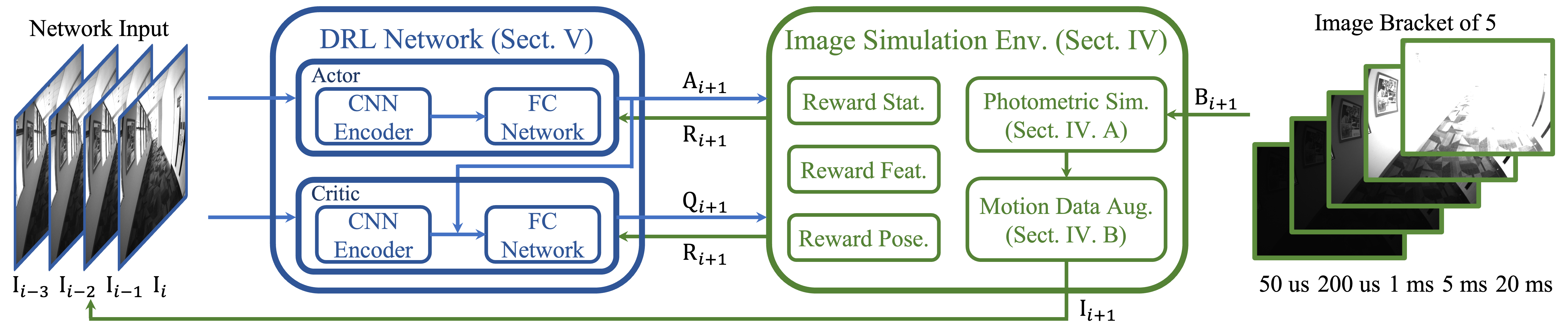

Training process.

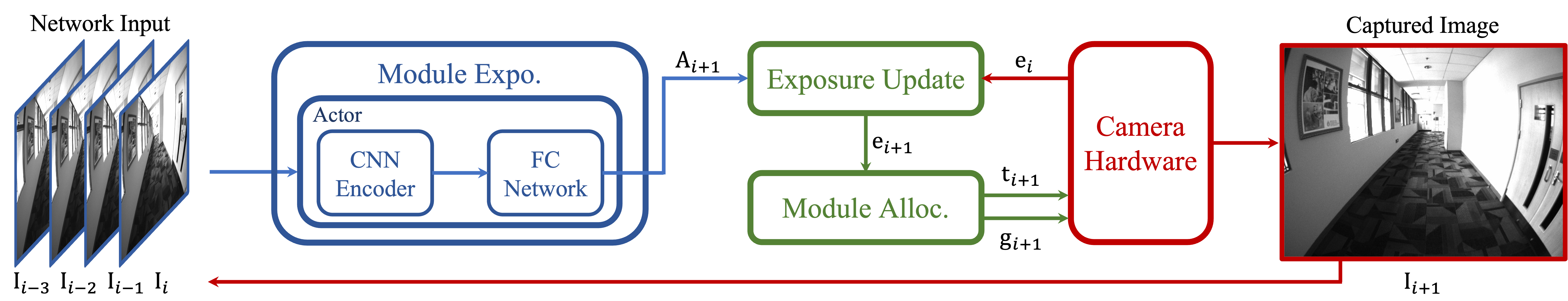

Inference process.

We want to implement an exposure control method based on deep reinforcement learning (DRL), which

- enables fast and convenient offline training via a simulation environment;

- adds high-level information that makes the agent aware of the subsequent visual task, which in this paper is visual odometry (VO).

- A DRL-based camera exposure control solution. The exposure control challenge is divided into two subtasks, enabling completely offline DRL operations without the need for online interaction.

- A lightweight image simulator based on imaging principles, which significantly improves data efficiency and simplifies DRL training.

- A study on reward function design with various levels of information. The trained agents acquire different levels of intelligence, enabling them to deliver exceptional performance in challenging scenarios.

- A thorough experimental evaluation, which demonstrates that our exposure control method improves the performance of VO tasks while achieving faster response and lower time consumption.
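To make the idea of an image simulator based on imaging principles more concrete, here is a minimal sketch of how an image captured at one exposure might be re-rendered at another. It is not the simulator shipped in this repository: the function name `simulate_exposure`, the `gamma` parameter, and the assumption of a noise-free camera whose linearized pixel value is proportional to exposure are all illustrative.

```python
import numpy as np


def simulate_exposure(image, src_exposure, dst_exposure, gamma=1.0):
    """Re-render an 8-bit image at a different exposure (illustrative only).

    Assumptions (not taken from the repository): the linearized pixel value
    is proportional to the exposure, there is no sensor noise, and the
    camera response is a simple gamma curve (gamma=1.0 means linear).
    """
    if src_exposure <= 0 or dst_exposure <= 0:
        raise ValueError("exposure values must be positive")

    # Normalize to [0, 1] and undo the assumed gamma curve.
    normalized = np.asarray(image, dtype=np.float64) / 255.0
    linear = normalized ** gamma

    # Scale by the exposure ratio, then saturate like a real sensor would.
    scaled = np.clip(linear * (dst_exposure / src_exposure), 0.0, 1.0)

    # Re-apply the gamma curve and quantize back to 8 bits.
    return np.round((scaled ** (1.0 / gamma)) * 255.0).astype(np.uint8)
```

Under these assumptions, doubling the exposure doubles every unsaturated pixel and clips the rest at 255, which is why a simulator of this kind can replay one recorded sequence under many exposure settings without new captures. Pixels that were already saturated in the source image cannot be recovered this way.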

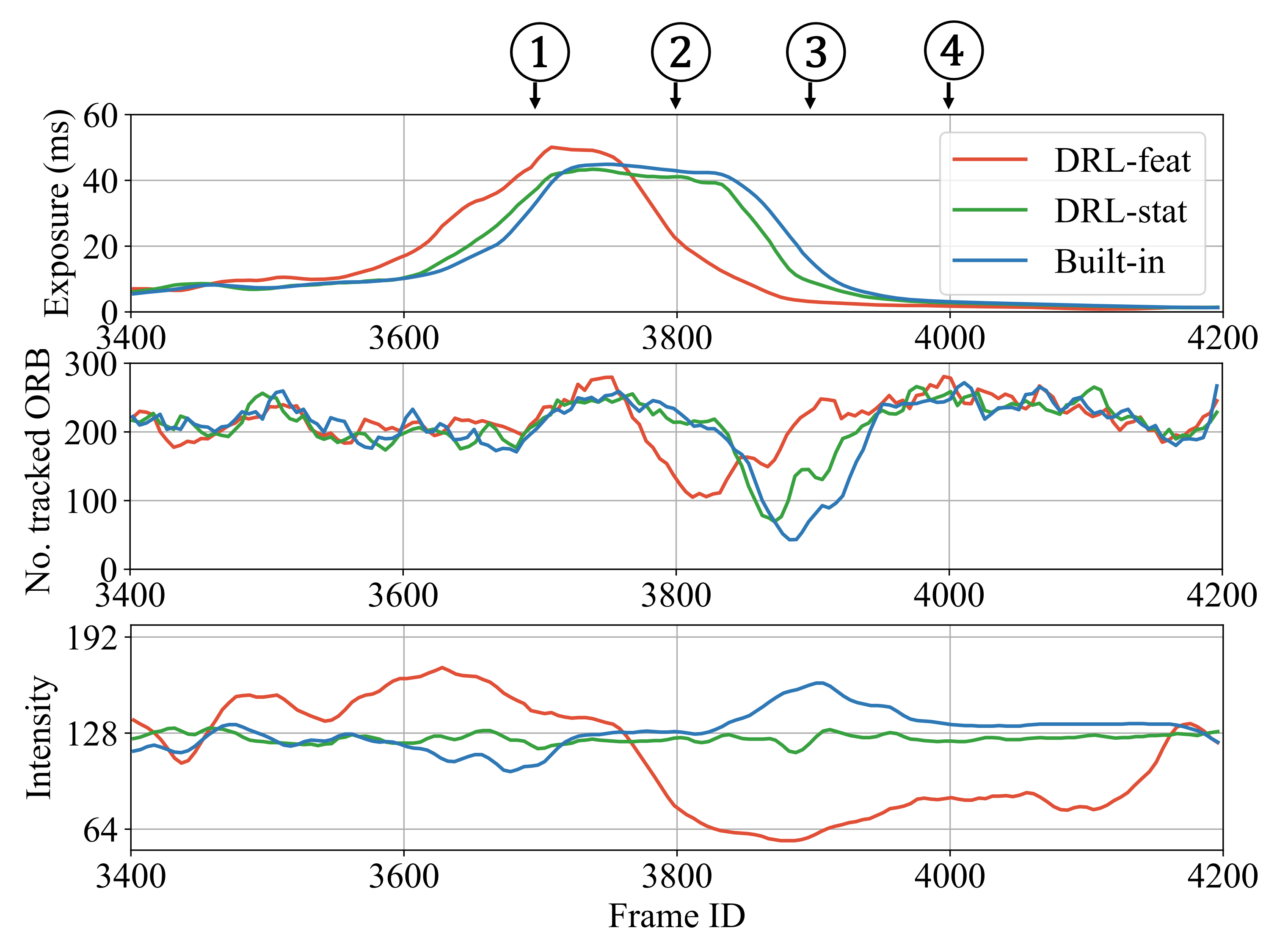

Our DRL-based method with feature-level rewards (DRL-feat) exhibits a high-level comprehension of lighting and motion. It predicts the impending over-exposure event and preemptively reduces the exposure. While this adjustment temporarily decreases the number of tracked feature points, it effectively prevents a more severe failure in subsequent frames.
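The difference between a reward built on image statistics and one built on feature-level information can be illustrated with two toy functions. Both are hypothetical: the names `stat_reward` and `feat_reward`, the target brightness of 128, and the normalization are assumptions made for this sketch and are not the reward definitions used in the paper or in `train.py`.

```python
from statistics import fmean


def stat_reward(pixels, target=128.0):
    """Hypothetical statistic-level reward in [0, 1].

    Rewards frames whose mean intensity (0-255) is close to `target`.
    It only looks at brightness, not at what the VO front end can track.
    """
    pixels = list(pixels)
    if not pixels:
        raise ValueError("pixels must not be empty")
    mean = fmean(pixels)
    # Largest possible distance between the mean and the target.
    worst = max(target, 255.0 - target)
    return 1.0 - abs(mean - target) / worst


def feat_reward(num_tracked, num_reference):
    """Hypothetical feature-level reward in [0, 1].

    Rewards frames in which many of the reference feature points are
    still tracked, which ties the reward to the downstream VO task.
    """
    if num_reference <= 0:
        raise ValueError("num_reference must be positive")
    return min(1.0, max(0.0, num_tracked / num_reference))
```

A reward of the second kind can prefer a darker frame if that keeps features trackable in the following frames, which matches the preemptive behavior described above.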

- Download our dataset. If you only want to run the agents with the pretrained model, please download the test dataset only. If you want to train with our data, please download the full datasets.

- Configure the environment. Our code is implemented in Python. You can use Conda and Pip to install all the required packages.

```bash
# create conda environment
conda create -n drl_expo_ctrl python=3.8
conda activate drl_expo_ctrl

# install required packages
pip install opencv-python pyyaml tensorboard

# install torch; following the official website guidelines for your CUDA version is recommended
pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
```

- Update configuration and parameters. After unzipping the datasets, please change the root directory (seqs_root) of the dataset in train.yaml and infer.yaml.

- Choose the sequence to replay. Change the sequence name (seq_name) in infer.yaml.

- Change the name of the pretrained model, which is loaded by PyTorch in infer.py.

- Run the inference

python infer.py
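If inference cannot find the data, a quick check of the configured paths may help. The sketch below validates a dictionary holding the `seqs_root` and `seq_name` values from infer.yaml. The helper name `check_infer_config` is hypothetical, and the assumption that each sequence lives at `seqs_root/seq_name` is a guess about the dataset layout, not something this repository documents.

```python
from pathlib import Path


def check_infer_config(cfg):
    """Return a list of problems found in an infer.yaml-style dict.

    Assumption (hypothetical layout): the sequence to replay is stored
    at <seqs_root>/<seq_name>. Adjust the check if the dataset differs.
    """
    problems = []
    for key in ("seqs_root", "seq_name"):
        if not cfg.get(key):
            problems.append(f"missing key: {key}")
    if problems:
        return problems

    root = Path(cfg["seqs_root"]).expanduser()
    if not root.is_dir():
        problems.append(f"seqs_root is not a directory: {root}")
    elif not (root / cfg["seq_name"]).exists():
        problems.append(f"sequence not found: {root / cfg['seq_name']}")
    return problems
```

The dictionary can be obtained with PyYAML, which is installed above, for example `cfg = yaml.safe_load(open("infer.yaml"))`. An empty list means both keys are set and the assumed sequence path exists.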

- Customize the parameters in train.py

- Run the training

python train.py

Corridor: Built-in | Shim | DRL-stat | DRL-feat

Parking: Built-in | Shim | DRL-stat | DRL-feat

The source code is released under the MIT license.