By Delong Zhu at the Robotics, Perception, and AI Laboratory, CUHK

A more efficient and accurate model, Cascaded OCR-RCNN, is now available! The large-scale dataset will also be available soon!

OCR-RCNN is designed for the elevator button recognition task. Based on Faster R-CNN, it consists of a Region Proposal Network (RPN), an Object Detection Network, and a Character Recognition Network. The framework aims to help service robots solve the inter-floor navigation problem.
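The order of the three stages can be sketched in plain Python as below. This is an illustrative sketch, not the repository's implementation: the stage callables `propose`, `classify`, and `read_characters`, the `(x1, y1, x2, y2)` box format, the score threshold, and the toy list-of-rows image are hypothetical stand-ins for the trained networks and real image tensors. Cropping image pixels for the recognizer is also an assumption made for simplicity; real Faster R-CNN style networks share convolutional features and process proposals in batches, so this loop only shows how data flows from one stage to the next.

```python
from typing import Callable, List, Tuple

# Hypothetical types for this sketch only.
Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels; x2 and y2 are exclusive
Image = List[List[int]]          # toy grayscale image: a list of pixel rows


def crop(image: Image, box: Box) -> Image:
    """Return the sub-image covered by box."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


def ocr_rcnn_pipeline(
    image: Image,
    propose: Callable[[Image], List[Box]],
    classify: Callable[[Image, Box], Tuple[Box, float]],
    read_characters: Callable[[Image], str],
    score_threshold: float = 0.5,
) -> List[Tuple[Box, float, str]]:
    """Hypothetical RPN -> detection -> character recognition cascade."""
    results = []
    # Stage 1 (RPN): class-agnostic candidate regions.
    for proposal in propose(image):
        # Stage 2 (detection network): refine the box and score it as a button.
        box, score = classify(image, proposal)
        if score < score_threshold:
            continue  # discard background / low-confidence regions
        # Stage 3 (character recognition network): read the button label.
        text = read_characters(crop(image, box))
        results.append((box, score, text))
    return results
```

With stub stages, only proposals whose score reaches `score_threshold` are passed on to the character recognizer, which mirrors the detect-then-read structure described above.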

In this package, a button recognition service is implemented based on a trained OCR-RCNN model. The service takes a raw image as input and returns the detection, localization, and character recognition results. In addition, a Multi-Tracker is implemented, which uses the outputs of the recognition service to initialize the tracking process and thereby enables on-line detection.
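The recognize-then-track scheme can be sketched as follows. This is a hedged, self-contained sketch rather than the package's ROS node: `Button`, `recognize_then_track`, the `(x, y, w, h)` box format, `min_score`, and the optional periodic re-detection (`redetect_every`) are hypothetical; the text above only states that recognition results initialize the trackers. Tracker objects are assumed to follow the `init(frame, box)` / `update(frame) -> (ok, box)` convention of OpenCV's tracking API (for example a KCF tracker from the contrib modules).

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Iterator, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h) in pixels, hypothetical format


@dataclass
class Button:
    """One recognized button (hypothetical stand-in for a recog_result entry)."""
    box: Box
    text: str
    score: float


def recognize_then_track(
    frames: Iterable[Any],
    recognize: Callable[[Any], List[Button]],
    make_tracker: Callable[[], Any],
    min_score: float = 0.5,
    redetect_every: Optional[int] = None,
) -> Iterator[Tuple[int, List[Tuple[Box, str]]]]:
    """Yield (frame_index, [(box, text), ...]) for every frame.

    Recognition initializes one tracker per confident button; later frames
    are handled by the trackers alone. make_tracker() must return an object
    with init(frame, box) and update(frame) -> (ok, box).
    """
    if redetect_every is not None and redetect_every <= 0:
        raise ValueError("redetect_every must be a positive integer or None")
    tracks: List[Tuple[Any, str]] = []  # (tracker, recognized text) pairs
    for i, frame in enumerate(frames):
        periodic = redetect_every is not None and i % redetect_every == 0
        if not tracks or periodic:
            # Slow path: run recognition and (re)initialize all trackers.
            buttons = [b for b in recognize(frame) if b.score >= min_score]
            tracks = []
            for b in buttons:
                tracker = make_tracker()
                tracker.init(frame, b.box)
                tracks.append((tracker, b.text))
            yield i, [(b.box, b.text) for b in buttons]
        else:
            # Fast path: only update the trackers and drop the ones that fail.
            survivors, outputs = [], []
            for tracker, text in tracks:
                ok, box = tracker.update(frame)
                if ok:
                    survivors.append((tracker, text))
                    outputs.append((box, text))
            tracks = survivors
            yield i, outputs
```

Running recognition only when no tracks survive keeps most frames on the cheap tracking path; setting `redetect_every` trades some of that speed for periodic correction of tracker drift.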

If you find this work helpful for your project, please consider citing our paper:

@inproceedings{zhu2018novel,
  title={A Novel OCR-RCNN for Elevator Button Recognition},
  author={Zhu, Delong and Li, Tingguang and Ho, Danny and Zhou, Tong and Meng, Max QH},
  booktitle={2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  pages={3626--3631},
  year={2018},
  organization={IEEE}
}

Requirements:

- Ubuntu 14.04
- ROS Indigo
- TensorFlow 1.4.1
- OpenCV 3.4.1, compiled with OpenCV's extra modules (opencv_contrib)
- GPU: GTX Titan X, with a total memory of 12288 MB

Installation:

- Download the OCR-RCNN model and put it in the folder `elevator_button_recognition/src/button_recognition/ocr_rcnn_model/`.
- Run `cd elevator_button_recognition`, then run `catkin_init_workspace`.
- Run `catkin_make -j[thread_number]`.
- Run `source elevator_button_recognition/devel/setup.bash`, or add this command to `~/.bashrc`.
- (Optional) Add the path of the OCR-RCNN model to the launch file in `elevator_button_recognition/src/button_recognition/launch`.
- (Optional) Add the path of the test samples to the launch file in `elevator_button_recognition/src/button_tracker/launch`.

- If `image_only == true`, the three panel images in `src/button_tracker/test_samples` will be tested.
- If `image_only == false`, a video in `src/button_tracker/test_samples` will be tested.

- Run `roslaunch button_recognition button_recognition.launch` to launch the recognition service, which receives a `sensor_msgs/CompressedImage` as input and returns a response of the self-defined type `recog_result`.
- Run `roslaunch button_tracker button_tracker.launch` to launch the tracking node, which calls the recognition service to initialize a `Multi-Tracker`.

- A simple KCF (Kernelized Correlation Filter) tracker is used in this implementation.
- Changing the multi-tracker to a single tracker will further increase the processing speed.
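A multi-tracker updates one KCF tracker per detected button on every frame, so the per-frame tracking cost grows roughly with the number of buttons on the panel. One way to switch to a single tracker is to keep only the button the robot actually needs, for example the one labeled with the target floor. The sketch below is hypothetical (`Detection` and `pick_target` are not part of the package) and only shows the selection step; the chosen box would then initialize a single tracker.

```python
from typing import List, NamedTuple, Optional, Tuple


class Detection(NamedTuple):
    """Hypothetical recognition output for one button."""
    box: Tuple[int, int, int, int]  # (x, y, w, h) in pixels
    text: str                       # recognized label, e.g. "3" or "B1"
    score: float                    # detection confidence in [0, 1]


def pick_target(detections: List[Detection], target_text: str,
                min_score: float = 0.5) -> Optional[Detection]:
    """Return the most confident detection whose label matches target_text.

    Returns None when the target button is not recognized confidently,
    in which case the caller should run recognition again.
    """
    candidates = [d for d in detections
                  if d.text == target_text and d.score >= min_score]
    if not candidates:
        return None
    return max(candidates, key=lambda d: d.score)
```

Tracking a single box keeps the per-frame tracking cost independent of how many buttons the panel has, at the price of losing the other labels between recognition calls.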

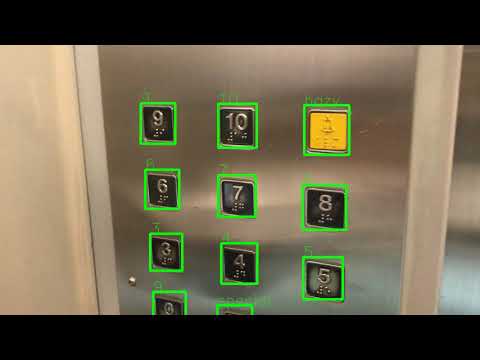

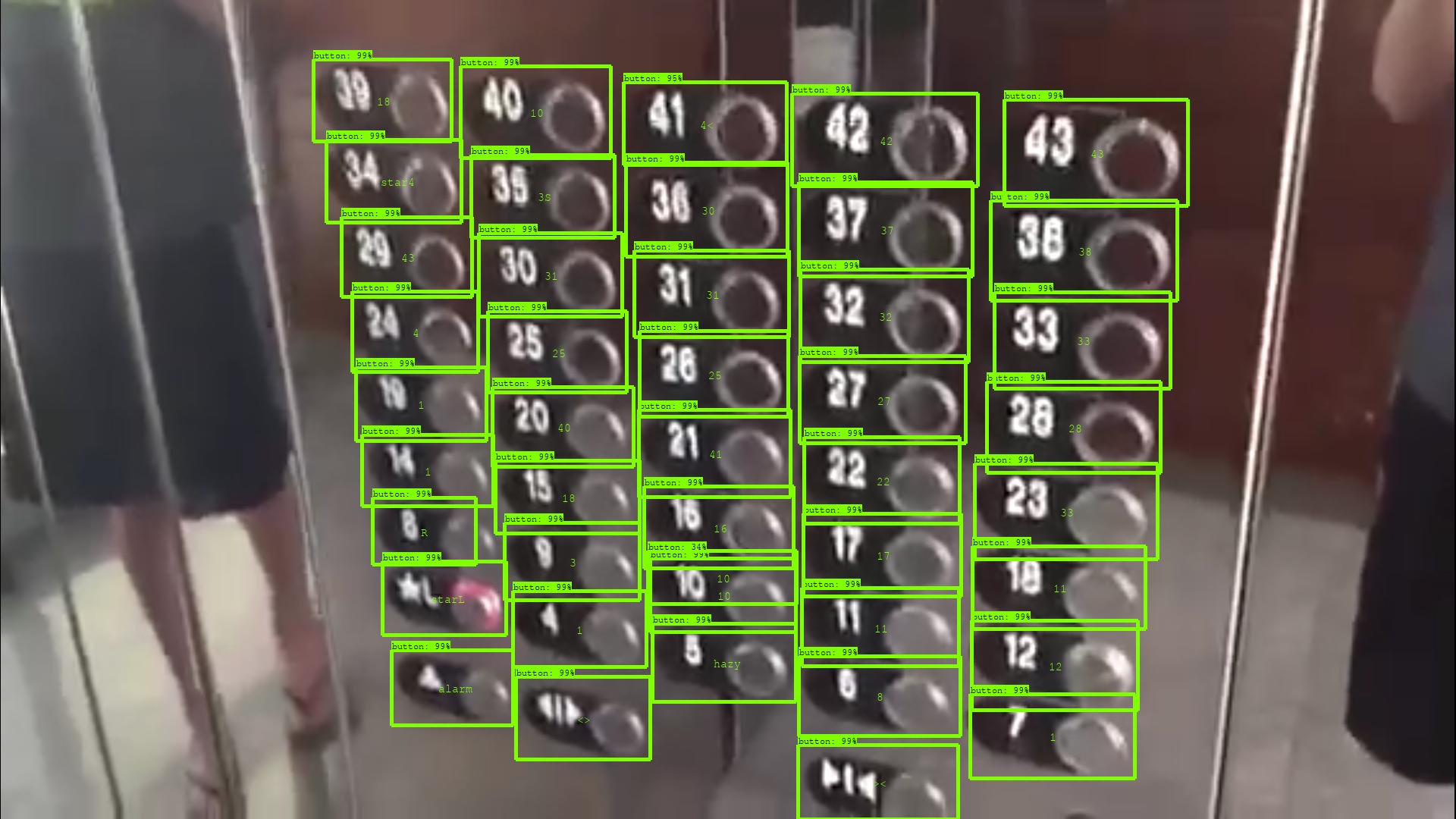

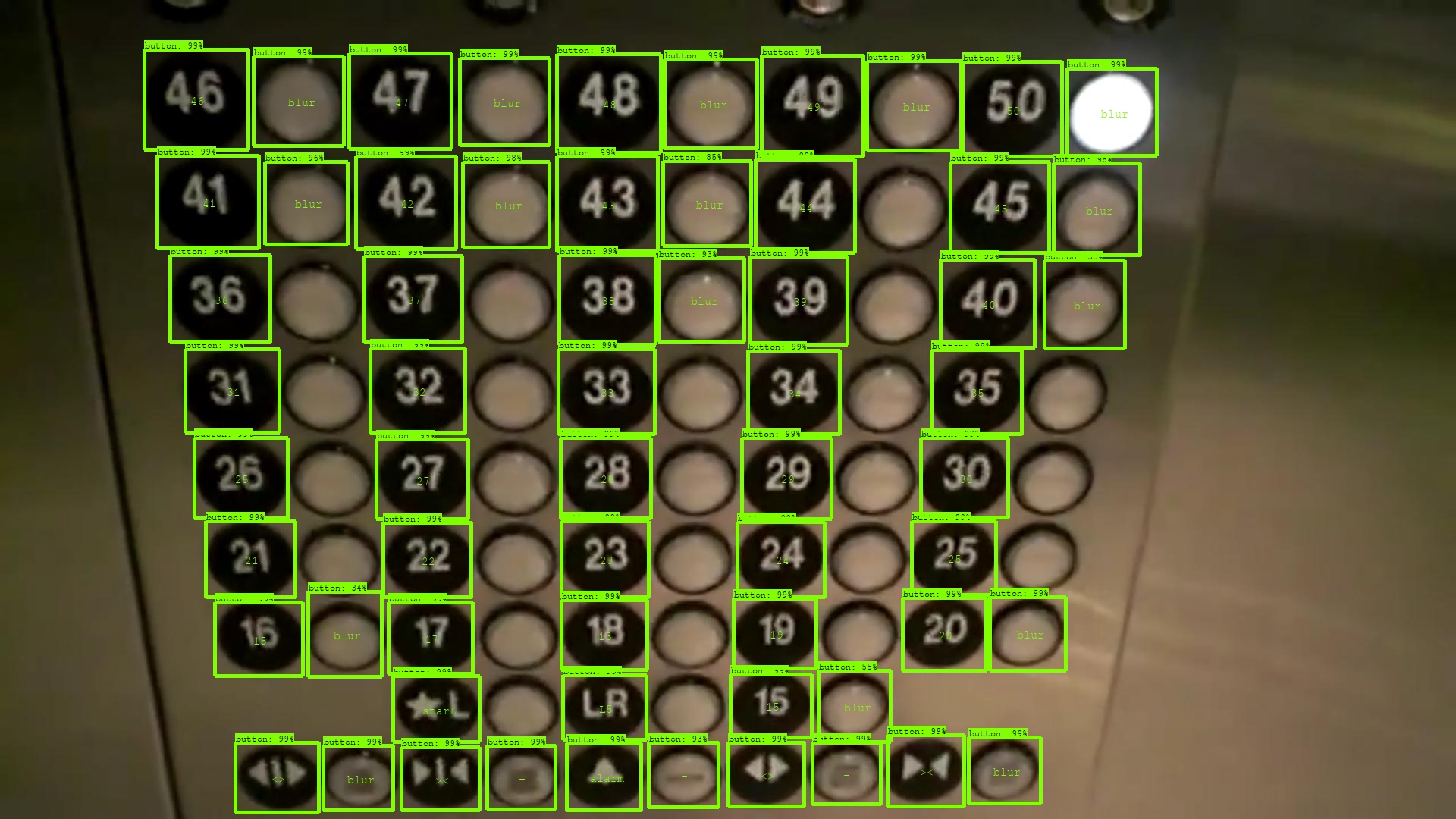

- Two demo images are listed as follows. They are screenshots from two YouTube videos, and the recognition results are visualized at the center of each bounding box.

Image Source: <https://www.youtube.com/watch?v=bQpEYpg1kLg&t=8s>

Image Source: <https://www.youtube.com/watch?v=k1bTibYQjTo&t=9s>

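Drawing the recognized text at the center of each bounding box requires offsetting the text origin by half the rendered text size. The helper below is a hypothetical sketch, not the package's drawing code: it assumes `(x, y, w, h)` boxes and a text size `(text_w, text_h)` such as the first element returned by OpenCV's `cv2.getTextSize`, and it returns the bottom-left origin that `cv2.putText` expects.

```python
from typing import Tuple


def centered_text_origin(box: Tuple[float, float, float, float],
                         text_size: Tuple[int, int]) -> Tuple[int, int]:
    """Return the bottom-left text origin that centers a label in box.

    box is (x, y, w, h) with y growing downward (image coordinates);
    text_size is (text_w, text_h) of the rendered label.
    """
    x, y, w, h = box
    text_w, text_h = text_size
    center_x = x + w / 2.0
    center_y = y + h / 2.0
    # Shift left by half the width and down by half the height, because the
    # origin passed to cv2.putText is the bottom-left corner of the text.
    return int(round(center_x - text_w / 2.0)), int(round(center_y + text_h / 2.0))
```

With OpenCV this would be used roughly as `(tw, th), _ = cv2.getTextSize(label, font, scale, thickness)` followed by `cv2.putText(image, label, centered_text_origin(box, (tw, th)), font, scale, color, thickness)`.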

- Two demo videos are also provided. The first is a composite video: each frame is processed off-line, and the processed frames are then composed into a complete video. It shows the recognition performance of OCR-RCNN in an elevator that was not seen during training. The second is a real-time video, in which OCR-RCNN and a `Multi-Tracker` are used together to carry out on-line recognition. Although the tracker increases the recognition speed, its accuracy and robustness are worse than those of the off-line version. It is therefore crucial to further improve the time efficiency of OCR-RCNN in the next stage!