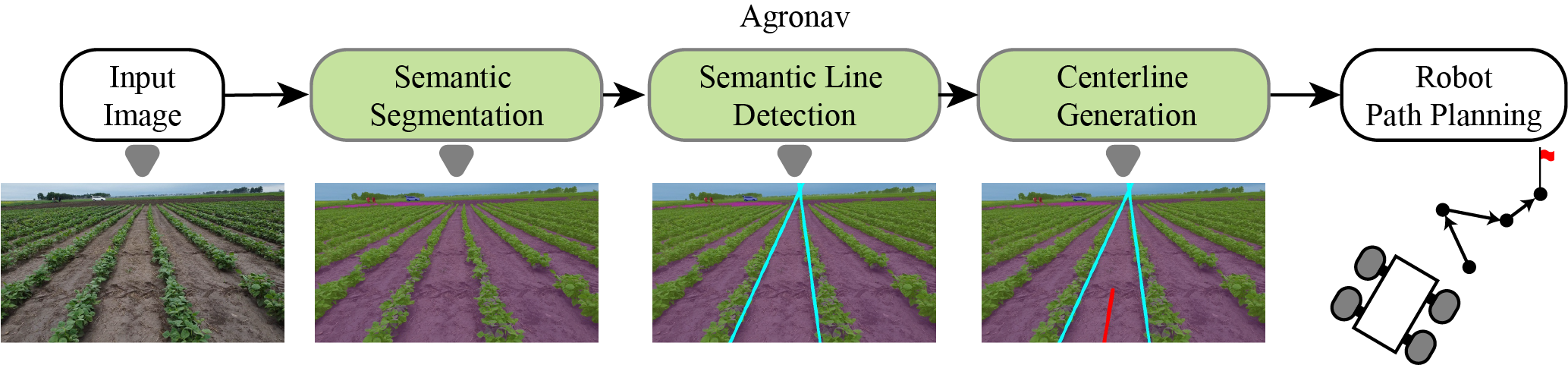

Agronav: Autonomous Navigation Framework for Agricultural Robots and Vehicles using Semantic Segmentation and Semantic Line Detection

- This repository contains the codebase for our work presented at the 4th International Workshop on AGRICULTURE-VISION: CHALLENGES & OPPORTUNITIES FOR COMPUTER VISION IN AGRICULTURE from CVPR 2023.

- The link to the original paper can be found here.

- Our work uses MMSegmentation to train our semantic segmentation model and Deep Hough Transform for semantic line detection.

Pipeline of the Agronav framework

- 06/22/2023: Revised instructions, tested image inference code.

This code has been tested on Python 3.8.

- After creating a virtual environment (Python 3.8), install PyTorch and the CUDA packages.

  Using conda: `conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.6 -c pytorch -c nvidia`

  Using pip: `pip install torch==1.13.1+cu116 torchvision==0.14.1+cu116 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu116`

- Install `mmcv`, then install the segmentation package from its directory:

  `pip install mmcv-full==1.7.1`

  `cd segmentation`

  `pip install -v -e .`

- Install other dependencies:

  `conda install numpy scipy scikit-image pqdm -y`

  `pip install opencv-python yml POT pudb`

- Install `deep-hough-transform`:

  `cd ../lineDetection`

  `cd model/_cdht`

  `python setup.py build`

  `python setup.py install --user`
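To confirm that the installation steps above took effect, the installed versions can be listed from Python. The following is a minimal sketch using only the standard library. The helper name `installed_versions` is illustrative and not part of this repository, and the sketch assumes that conda-installed packages expose standard Python package metadata (the conda package `pytorch` is usually registered as `torch`).

```python
from importlib import metadata  # part of the standard library since Python 3.8


def installed_versions(distributions):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in distributions:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Distribution names taken from the install commands in this README.
EXPECTED = [
    "torch", "torchvision", "torchaudio", "mmcv-full",
    "numpy", "scipy", "scikit-image", "pqdm", "opencv-python",
]

if __name__ == "__main__":
    for name, version in installed_versions(EXPECTED).items():
        print(f"{name:15s} {version or 'MISSING'}")
```

Any entry reported as `MISSING` points to an install command that should be re-run inside the active environment.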

- Download the Agroscapes Segmentation dataset from here and extract the images and labels to `data/agronav/images` and `data/agronav/labels`, respectively.

- Run `python train-agronav.py` to start training. Before doing so, check the file `agronav.py` for the training configuration (`cfg`); you may need to edit it to match the model you are training. In particular, set `cfg.load_from` to your checkpoint and `cfg.work_dir` to your output directory.
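Before starting segmentation training, it can help to check that every extracted image has a label and vice versa. The sketch below assumes that an image and its label share the same file stem (for example `0001.jpg` and `0001.png`); that naming convention is an assumption, not something this README states, and `unpaired_stems` is a hypothetical helper.

```python
from pathlib import Path


def unpaired_stems(images_dir, labels_dir):
    """Return (images without a label, labels without an image), by file stem."""
    image_stems = {p.stem for p in Path(images_dir).iterdir() if p.is_file()}
    label_stems = {p.stem for p in Path(labels_dir).iterdir() if p.is_file()}
    return sorted(image_stems - label_stems), sorted(label_stems - image_stems)


if __name__ == "__main__":
    images = Path("data/agronav/images")
    labels = Path("data/agronav/labels")
    # Only run the check when both directories from the README exist.
    if images.is_dir() and labels.is_dir():
        no_label, no_image = unpaired_stems(images, labels)
        print(f"{len(no_label)} image(s) without a label")
        print(f"{len(no_image)} label(s) without an image")
```

If the dataset uses a different naming scheme, the stem comparison would need to be adapted accordingly.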

- Download the AgroNav_LineDetection dataset from here and extract it to the `data/` directory. The dataset contains images and ground-truth annotations of the semantic lines. The images are the outputs of the semantic segmentation model, and each image contains a pair of semantic lines.

- Run the following commands for data augmentation and generation of the parametric-space labels:

  `cd lineDetection`

  `python data/prepare_data_NKL.py --root './data/agroNav_LineDetection' --label './data/agroNav_LineDetection' --save-dir './data/training/agroNav_LineDetection_resized_100_100' --fixsize 400`

- Run the following script to obtain a list of filenames of the training data:

  `python data/extractFilenameList.py`

  This creates a .txt file with the filenames inside `/training`. Divide the filenames into training and validation data:

  `agroNav_LineDetection_train.txt`

  `agroNav_LineDetection_val.txt`

- Specify the training and validation data paths in `config.yml`.

- Train the model:

  `python train.py`

- Download the pre-trained checkpoints for semantic segmentation [MobileNetV3, HRNet, ResNest]. Move the downloaded file to `./segmentation/checkpoint/`.

- Download the pre-trained checkpoints for semantic line detection here. Move the downloaded file to `../lineDetection/checkpoint/`.

- Move inference images to `./inference/input`.

- Run the following command to perform end-to-end inference on the test images. End-to-end inference begins with a raw RGB image and visualizes the centerlines.

  `python e2e_inference_image.py`

- The final results with the centerlines are saved in `./inference/output_ceterline`; the intermediate results are saved in `./inference/temp` and `./inference/output`.
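End-to-end inference depends on the checkpoint and input folders described above being populated. The sketch below checks for them before launching `e2e_inference_image.py`. It assumes that all three folders are resolved relative to the repository root, which is a guess given the mixed `./` and `../` prefixes used above; `missing_or_empty` is a hypothetical helper, not part of this codebase.

```python
from pathlib import Path

# Folders named in the inference steps; assumed relative to the repository root.
REQUIRED_DIRS = [
    "segmentation/checkpoint",
    "lineDetection/checkpoint",
    "inference/input",
]


def missing_or_empty(repo_root, required=REQUIRED_DIRS):
    """Return the required folders that are absent or contain no files."""
    root = Path(repo_root)
    problems = []
    for rel in required:
        folder = root / rel
        if not folder.is_dir() or not any(p.is_file() for p in folder.iterdir()):
            problems.append(rel)
    return problems


if __name__ == "__main__":
    problems = missing_or_empty(".")
    if problems:
        print("Missing or empty:", ", ".join(problems))
    else:
        print("Checkpoints and input images found.")
```

Run it from the repository root; an empty result means the folders contain at least one file each, not that the files are the correct checkpoints.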

To run the semantic segmentation and line detection models independently, use ./segmentation/inference_image.py and ./lineDetection/inference.py.
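For the line-detection training step that divides the generated filename list into `agroNav_LineDetection_train.txt` and `agroNav_LineDetection_val.txt`, a reproducible split can be sketched as follows. The one-filename-per-line format, the 80/20 ratio, and the helper names `split_filenames` and `write_split` are assumptions made for illustration; the name of the list file produced by `extractFilenameList.py` is not stated here, so it is left as a parameter.

```python
import random
from pathlib import Path


def split_filenames(names, val_fraction=0.2, seed=0):
    """Deduplicate, shuffle reproducibly, and return (train, val) lists."""
    unique = sorted(set(names))  # sorting makes the shuffle independent of input order
    random.Random(seed).shuffle(unique)
    n_val = max(1, round(len(unique) * val_fraction)) if unique else 0
    return unique[n_val:], unique[:n_val]


def write_split(list_file, out_dir, val_fraction=0.2, seed=0):
    """Read one filename per line and write the train/val list files."""
    lines = Path(list_file).read_text().splitlines()
    names = [line.strip() for line in lines if line.strip()]
    train, val = split_filenames(names, val_fraction, seed)
    out = Path(out_dir)
    (out / "agroNav_LineDetection_train.txt").write_text("\n".join(train) + "\n")
    (out / "agroNav_LineDetection_val.txt").write_text("\n".join(val) + "\n")
    return len(train), len(val)
```

Fixing the seed keeps the validation set stable across runs, so results from different training runs remain comparable.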

If you find this work helpful, please consider citing it:

    @InProceedings{Panda_2023_CVPR,
        author    = {Panda, Shivam K. and Lee, Yongkyu and Jawed, M. Khalid},
        title     = {Agronav: Autonomous Navigation Framework for Agricultural Robots and Vehicles Using Semantic Segmentation and Semantic Line Detection},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
        month     = {June},
        year      = {2023},
        pages     = {6271-6280}
    }