Modification: this version has only been run successfully in Docker.

Requirements:

- x86-64 (amd64) architecture

- NVIDIA GPU that supports CUDA 11.5

- Ubuntu

Dependencies:

- Docker

- NVIDIA Container Toolkit

- An SSH key registered with your GitHub account, which is needed to clone LIMAP while building the image. Follow this guide to generate a key, and then this guide to add the key to your GitHub account.
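Before building, a quick host check can catch most setup problems early. The sketch below assumes the requirements and dependencies listed above, plus the key path `~/.ssh/id_ed25519` used by the `docker build` command in the steps. `preflight` and `read_os_id` are hypothetical helpers, not part of LIMAP. The check cannot confirm CUDA 11.5 support: finding `nvidia-smi` only shows that an NVIDIA driver is installed, and `nvidia-ctk` is the CLI shipped with recent NVIDIA Container Toolkit releases, so older setups may lack it even when the toolkit is present.

```python
import platform
import shutil
from pathlib import Path


def read_os_id(os_release_path="/etc/os-release"):
    """Return the ID field of os-release (e.g. "ubuntu"), or "" if it cannot be read."""
    try:
        text = Path(os_release_path).read_text()
    except OSError:
        return ""
    for line in text.splitlines():
        if line.startswith("ID="):
            return line.split("=", 1)[1].strip().strip('"')
    return ""


def preflight(machine=None, os_id=None, which=shutil.which, ssh_key=None):
    """Return a list of problems; an empty list means every check passed.

    The arguments exist only so the checks can be exercised with fake values.
    """
    machine = platform.machine() if machine is None else machine
    os_id = read_os_id() if os_id is None else os_id
    ssh_key = Path.home() / ".ssh" / "id_ed25519" if ssh_key is None else Path(ssh_key)

    problems = []
    if machine.lower() not in ("x86_64", "amd64"):
        problems.append(f"unsupported architecture: {machine}")
    if os_id != "ubuntu":
        problems.append(f"not Ubuntu (os-release ID={os_id!r})")
    if which("docker") is None:
        problems.append("docker not found on PATH")
    # Only proves a driver is installed, not that it supports CUDA 11.5.
    if which("nvidia-smi") is None:
        problems.append("nvidia-smi not found (NVIDIA driver missing?)")
    # Assumption: a recent NVIDIA Container Toolkit, which installs nvidia-ctk.
    if which("nvidia-ctk") is None:
        problems.append("nvidia-ctk not found (NVIDIA Container Toolkit missing?)")
    if not ssh_key.is_file():
        problems.append(f"SSH key not found: {ssh_key}")
    return problems


if __name__ == "__main__":
    issues = preflight()
    print("\n".join(issues) if issues else "all checks passed")
```

Run it on the host, not inside the container.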

Steps:

- Download the Dockerfile.

- For GUI support, allow connections to your X server:

  xhost +

- Build the Docker image:

  docker build --build-arg SSH_PRIVATE_KEY="$(cat ~/.ssh/id_ed25519)" -t="limap" .

- Run the container:

  docker run \
    --rm \
    -it \
    --gpus all \
    --shm-size 50G \
    --device=/dev/dri:/dev/dri \
    -v /tmp/.X11-unix:/tmp/.X11-unix:rw \
    -e DISPLAY=$DISPLAY \
    -e QT_X11_NO_MITSHM=1 \
    --net=host \
    limap bash
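To make each flag of the `docker run` command explicit, the sketch below builds the same argument list in Python with one comment per flag. The comments give the usual reasons for these flags in GPU and X11 containers; they are not stated by this project. `docker_run_args` is a hypothetical helper.

```python
import os
import shlex


def docker_run_args(image="limap", display=None, shm_size="50G", command=("bash",)):
    """Build the argument list for the `docker run` command in the steps above."""
    display = os.environ.get("DISPLAY", "") if display is None else display
    return [
        "docker", "run",
        "--rm",                       # delete the container when it exits
        "-it",                        # interactive session with a terminal
        "--gpus", "all",              # expose all GPUs (needs NVIDIA Container Toolkit)
        "--shm-size", shm_size,       # larger /dev/shm, e.g. for PyTorch data-loader workers
        "--device=/dev/dri:/dev/dri",                # DRI render devices for OpenGL
        "-v", "/tmp/.X11-unix:/tmp/.X11-unix:rw",    # host X11 socket for GUI windows
        "-e", f"DISPLAY={display}",   # which X display GUI programs should use
        "-e", "QT_X11_NO_MITSHM=1",   # keep Qt from using X shared memory (MIT-SHM)
        "--net=host",                 # share the host network stack
        image, *command,
    ]


if __name__ == "__main__":
    # Print the equivalent shell command instead of launching the container.
    print(shlex.join(docker_run_args()))
```

Passing the returned list to `subprocess.run(..., check=True)` would start the container.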

Note:

- Python pdb debugging commands:

  - s: step into
  - n: next line (step over)
  - c: continue

-

For VSCode Debug

- python path

/opt/venv/bin/python3.9

- python path

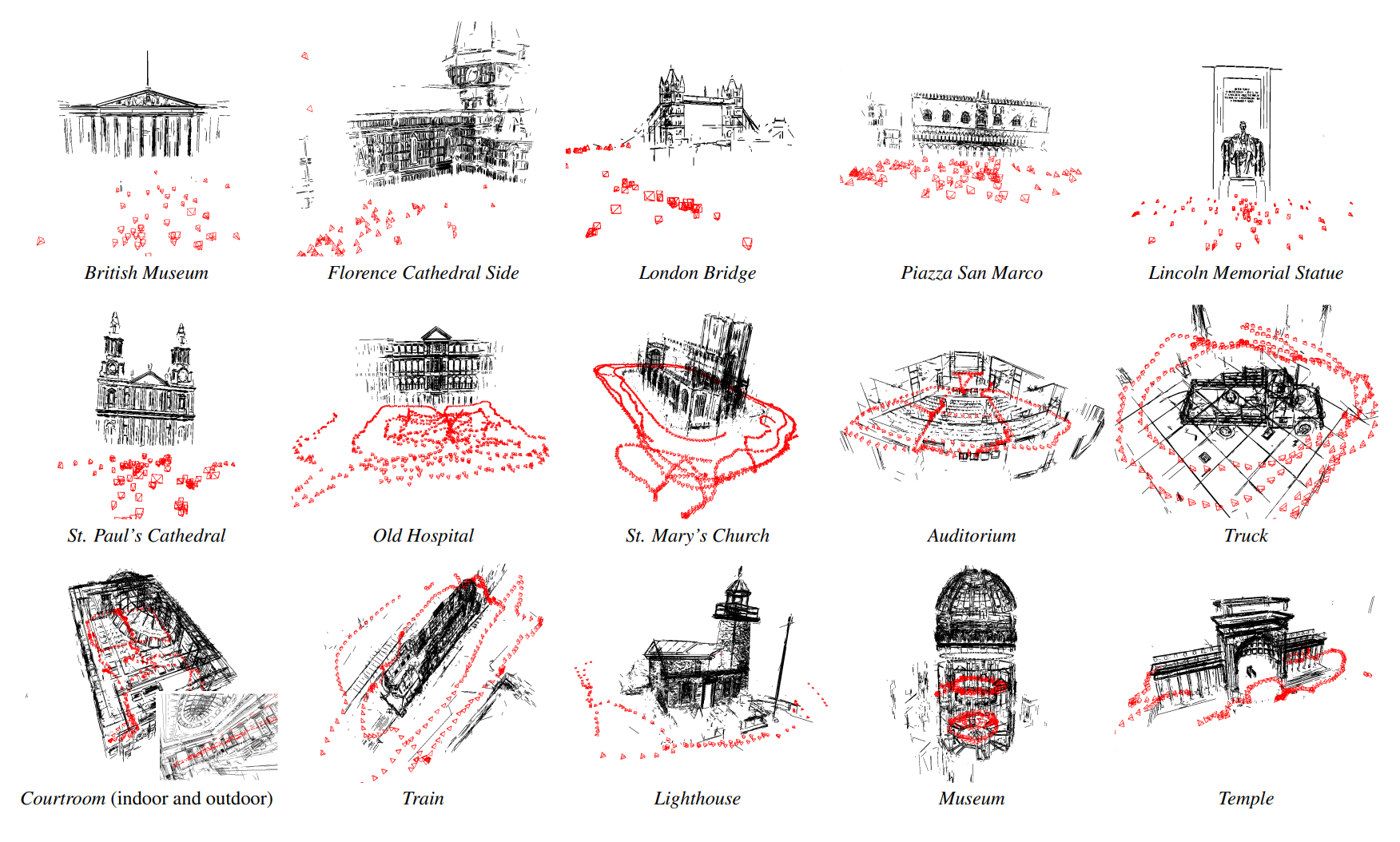
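In practice you type the pdb commands `s`, `n`, and `c` at the `(Pdb)` prompt after calling `breakpoint()` in your code. To show what each one does without an interactive terminal, the sketch below feeds them to `pdb.Pdb` from a string. `scale`, `pipeline`, and `run_scripted_session` are made-up example functions.

```python
import io
import pdb


def scale(values, factor):
    # `s` on the call site below stops here, at the start of this function.
    return [v * factor for v in values]


def pipeline(values):
    doubled = scale(values, 2)  # the debugger first stops on this line
    return sum(doubled)


def run_scripted_session(commands, func, *args):
    """Run func under pdb, reading debugger commands from a string instead of the keyboard."""
    out = io.StringIO()
    debugger = pdb.Pdb(stdin=io.StringIO(commands), stdout=out, readrc=False, nosigint=True)
    result = debugger.runcall(func, *args)
    return result, out.getvalue()


if __name__ == "__main__":
    # s: step into scale(); n: run one line inside scale(); c: continue to the end
    result, transcript = run_scripted_session("s\nn\nc\n", pipeline, [1, 2, 3])
    print(transcript)
    print("result:", result)
```

The transcript shows where the debugger stopped after each command; `c` runs to the end because no breakpoints are set.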

LIMAP is a toolbox for mapping and localization with line features. The system was initially described in the highlight paper 3D Line Mapping Revisited at CVPR 2023 in Vancouver, Canada. Contributors to this project are from the Computer Vision and Geometry Group at ETH Zurich.

In this project, we provide interfaces for various geometric operations on 2D/3D lines. We support off-the-shelf SfM software, including VisualSfM, Bundler, and COLMAP, for initializing the camera poses used to build 3D line maps on the database. The line detectors, matchers, and vanishing point estimators are abstracted so that recent advances and future developments can be plugged in flexibly.
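One common way to realize this kind of abstraction is a shared interface plus a registry that maps a method name (such as the `lsd` passed to `--line2d.detector.method` in the tips below) to an implementation. The sketch illustrates that pattern only. `BaseLineDetector`, `DummyDetector`, `register`, and `create_detector` are hypothetical names, not LIMAP's API.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Tuple, Type

Segment = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

_DETECTORS: Dict[str, Type["BaseLineDetector"]] = {}


def register(name):
    """Class decorator that makes a detector selectable by name."""
    def wrap(cls):
        _DETECTORS[name] = cls
        return cls
    return wrap


class BaseLineDetector(ABC):
    """Common interface: every backend turns an image into 2D line segments."""

    def __init__(self, **options):
        self.options = options

    @abstractmethod
    def detect(self, image) -> List[Segment]:
        ...


@register("dummy")
class DummyDetector(BaseLineDetector):
    """Stand-in backend that returns the top row of the image as one segment."""

    def detect(self, image):
        width = len(image[0])
        return [(0.0, 0.0, float(width - 1), 0.0)]


def create_detector(method, **options) -> BaseLineDetector:
    """Look up a backend by name, e.g. the value given on the command line."""
    if method not in _DETECTORS:
        raise ValueError(f"unknown detector {method!r}; available: {sorted(_DETECTORS)}")
    return _DETECTORS[method](**options)
```

For example, `create_detector("dummy").detect([[0] * 4] * 3)` returns `[(0.0, 0.0, 3.0, 0.0)]`. Adding a new backend only requires a new registered subclass; code that calls `detect` stays unchanged.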

Next step: Hybrid incremental SfM is under development and will be included in the next release.

Install the dependencies as follows:

sudo apt-get install libhdf5-dev

- Python 3.9 + required packages

git submodule update --init --recursive

# Refer to https://pytorch.org/get-started/previous-versions/ to install pytorch compatible with your CUDA

python -m pip install torch==1.12.0 torchvision==0.13.0

python -m pip install -r requirements.txt

To install the LIMAP Python package:

python -m pip install -Ive .

To double check if the package is successfully installed:

python -c "import limap"
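The import check above only confirms that `limap` can be imported. A slightly broader sketch, assuming the Python 3.9 environment described in this section, also reports whether `torch` and `torchvision` are installed and whether PyTorch can see a CUDA device. `check_environment` is a hypothetical helper.

```python
import importlib.util
import sys


def check_environment(required=(3, 9)):
    """Report which parts of the LIMAP Python environment are available."""
    report = {"python_version_ok": sys.version_info[:2] == required}
    for name in ("torch", "torchvision", "limap"):
        # find_spec only locates the module; it does not import it.
        report[name] = importlib.util.find_spec(name) is not None
    if report["torch"]:
        import torch
        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key:>18}: {value}")
```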

Download the test scene (100 images) with the following command.

bash scripts/quickstart.sh

To run Fitnmerge (line mapping with available depth maps) on Hypersim (visualization is enabled by default):

python runners/hypersim/fitnmerge.py --output_dir outputs/quickstart_fitnmerge

To run Line Mapping (RGB-only) on Hypersim (visualization is enabled by default):

python runners/hypersim/triangulation.py --output_dir outputs/quickstart_triangulation

To visualize the 3D line maps after the reconstruction:

python visualize_3d_lines.py --input_dir outputs/quickstart_triangulation/finaltracks
# add the camera frustums with "--imagecols outputs/quickstart_triangulation/imagecols.npy"

[Tips] Options are stored in the config folder: cfgs. You can easily change the options with the Python argument parser. The following shows an example:

python runners/hypersim/triangulation.py --sfm.hloc.descriptor sift --line2d.detector.method lsd \
    --line2d.visualize --triangulation.IoU_threshold 0.2 \
    --skip_exists --n_visible_views 5

In particular, --skip_exists is a very useful option to avoid re-running point-based SfM and line detection/description on every pass.
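The idea behind `--skip_exists` is result caching: an expensive stage is skipped when its output from an earlier pass is already on disk. The minimal sketch below shows that pattern, assuming each stage writes a single JSON file. `run_stage` is a hypothetical helper, not how LIMAP actually stores its intermediate results.

```python
import json
from pathlib import Path


def run_stage(output_path, compute, skip_exists=True):
    """Return the stage result, recomputing only when needed.

    If skip_exists is set and output_path already exists, the cached result
    is loaded instead of calling compute() again.
    """
    output_path = Path(output_path)
    if skip_exists and output_path.exists():
        return json.loads(output_path.read_text())
    result = compute()
    output_path.parent.mkdir(parents=True, exist_ok=True)
    output_path.write_text(json.dumps(result))
    return result
```

Calling `run_stage("outputs/lines.json", detect)` twice runs the hypothetical `detect` only once. With `skip_exists=False` the stage is always recomputed, which is what you want after changing options that affect its output.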

Also, the combination LSD detector + Endpoints NN matcher can be enabled with --default_config_file cfgs/triangulation/default_fast.yaml for high efficiency (at the cost of non-negligible performance degradation).
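Options therefore come in layers: a default config file (from cfgs, or the one passed via `--default_config_file`) supplies nested values, and dotted flags such as `--line2d.detector.method lsd` override individual entries. The minimal sketch below shows that layering with plain dictionaries, assuming the YAML file has already been parsed into a dict. `apply_overrides`, `parse_value`, and the rule that a bare flag means `True` are hypothetical, not LIMAP's argument parser.

```python
import ast
from typing import Any, Dict, List


def parse_value(text: str) -> Any:
    """Turn '0.2' into 0.2, '5' into 5, 'True' into True; keep other text as a string."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError, TypeError):
        return text


def apply_overrides(config: Dict[str, Any], argv: List[str]) -> Dict[str, Any]:
    """Apply '--a.b.c value' pairs and bare '--flag' switches to a nested dict in place."""
    i = 0
    while i < len(argv):
        if not argv[i].startswith("--"):
            raise ValueError(f"expected an option, got {argv[i]!r}")
        key = argv[i][2:]
        has_value = i + 1 < len(argv) and not argv[i + 1].startswith("--")
        value = parse_value(argv[i + 1]) if has_value else True
        *parents, leaf = key.split(".")
        node = config
        for name in parents:
            node = node.setdefault(name, {})  # create missing levels
        node[leaf] = value
        i += 2 if has_value else 1
    return config
```

Applied to the example command in the tips, `apply_overrides` sets `config["sfm"]["hloc"]["descriptor"]` to `"sift"`, `config["triangulation"]["IoU_threshold"]` to `0.2`, and `config["skip_exists"]` to `True`, leaving all other defaults untouched.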

We provide two query examples for localization from the Stairs scene in the 7Scenes Dataset, where traditional point-based methods normally struggle due to the repeated steps and lack of texture. The examples are provided in .npy files: runners/tests/localization/localization_test_data_stairs_[1|2].npy, which contain the necessary 2D-3D point and line correspondences along with the necessary configurations.

To run the examples, for instance the first one:

python runners/tests/localization.py --data runners/tests/localization/localization_test_data_stairs_1.npy

The script prints the pose error estimated with point-only localization (hloc) and the pose error estimated by our hybrid point-line localization framework. In addition, two images are created in the output folder (defaults to outputs/test/localization). Each shows the inlier point and line correspondences of hybrid localization, projected onto the query image using one of the two estimated camera poses (point-only or point+line), with the 2D point and line detections marked. The hybrid point-line method is expected to show improved accuracy.
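The exact error definition used by the script is not stated here. A common convention, assumed in this sketch, is the angle of the relative rotation plus the distance between the two camera centers, with poses given world-to-camera (x_cam = R x_world + t). `pose_error` is a hypothetical helper that requires NumPy.

```python
import numpy as np


def pose_error(R_est, t_est, R_gt, t_gt):
    """Rotation error (degrees) and camera-center distance between two poses.

    Assumes world-to-camera poses: x_cam = R @ x_world + t, so the camera
    center is c = -R^T t. The distance is in the units of the 3D map.
    """
    R_rel = R_est.T @ R_gt
    # Angle of the relative rotation from its trace; clip guards against rounding.
    cos_angle = np.clip((np.trace(R_rel) - 1.0) / 2.0, -1.0, 1.0)
    rot_err_deg = np.degrees(np.arccos(cos_angle))
    c_est = -R_est.T @ t_est
    c_gt = -R_gt.T @ t_gt
    trans_err = np.linalg.norm(c_est - c_gt)
    return float(rot_err_deg), float(trans_err)
```

For example, an estimate rotated 10 degrees about the z-axis, with its camera center at (0.3, 0.4, 0) against an identity ground-truth pose at the origin, gives a rotation error of 10 degrees and a translation error of 0.5.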

Methods marked "separate installation needed" are not installed with LIMAP; if you wish to use them, install them yourself by following the corresponding guides. This avoids potential issues during the LIMAP installation and ensures a quicker start.

Note: PRs integrating new features are very welcome.

The following line detectors are currently supported:

The following line descriptors/matchers are currently supported:

- LBD (separate installation needed [Guide])

- SOLD2

- LineTR

- L2D2

- Endpoint matching with SuperPoint + Nearest Neighbors

- Endpoint matching with SuperPoint + SuperGlue

- GlueStick

The following vanishing point estimators are currently supported:

- JLinkage

- Progressive-X (separate installation needed [Guide])

If you use this code in your project, please consider citing the following paper:

@InProceedings{Liu_2023_LIMAP,
    author = {Liu, Shaohui and Yu, Yifan and Pautrat, Rémi and Pollefeys, Marc and Larsson, Viktor},
    title = {3D Line Mapping Revisited},
    booktitle = {Computer Vision and Pattern Recognition (CVPR)},
    year = {2023},
}

This project is mainly developed and maintained by Shaohui Liu, Yifan Yu, Rémi Pautrat, and Viktor Larsson. Issues and contributions are very welcome at any time.