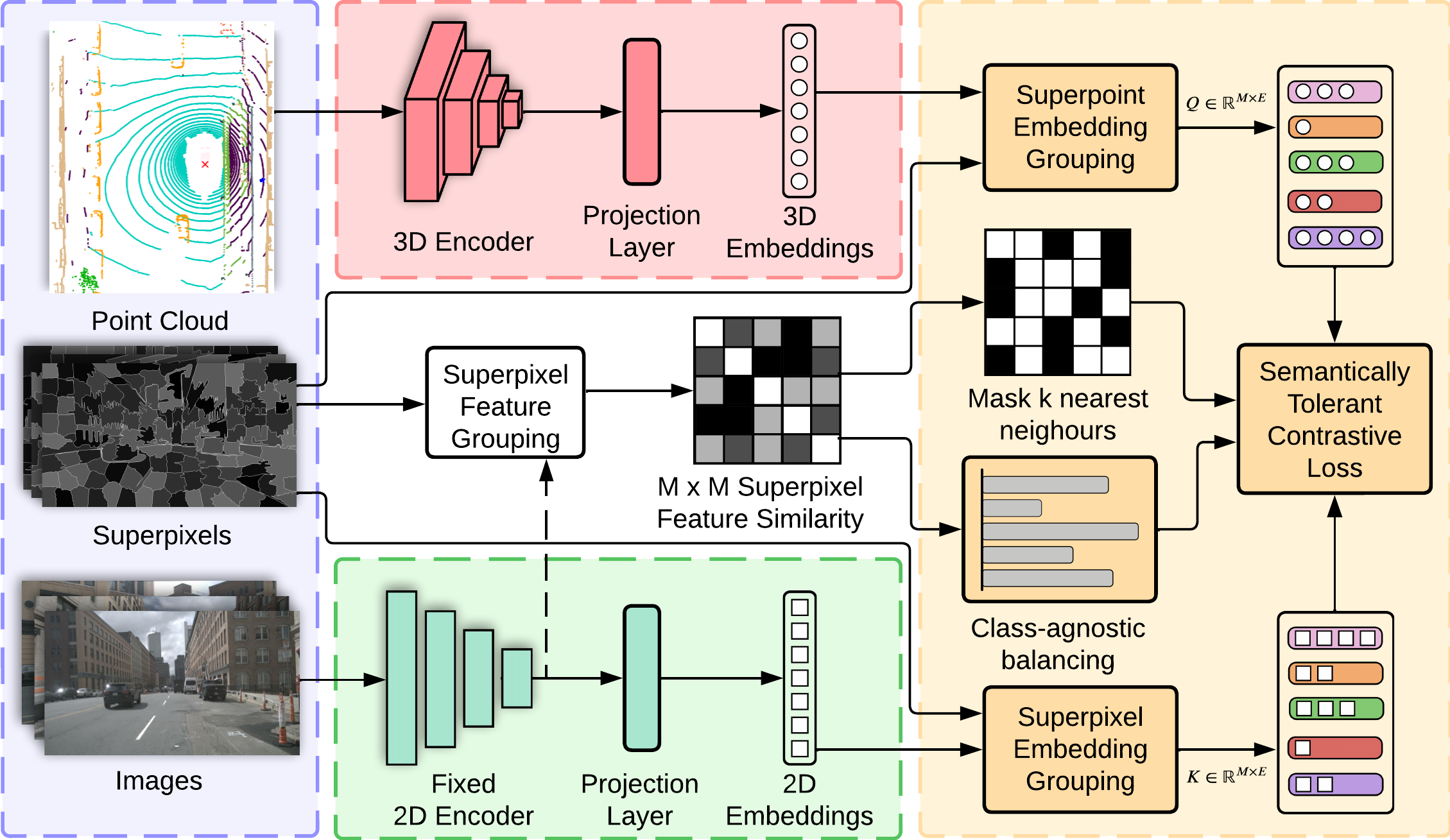

ST-SLidR is a 2D-to-3D distillation framework for autonomous driving scenes. This repository is based on [SLidR].

Self-Supervised Image-to-Point Distillation via Semantically Tolerant Contrastive Loss

Anas Mahmoud, Jordan Hu, Tianshu Kuai, Ali Harakeh, Liam Paull, Steven Waslander

[Paper]

Please refer to INSTALL.md for ST-SLidR installation instructions.

All results are obtained by pre-training on the nuScenes dataset. Each pre-training experiment is run three times, and the table reports the average performance for linear probing with 100% of the labelled point cloud data and for finetuning with 1% of the labelled point cloud data.

| Method | Self-supervised Encoder | Linear Probing (100%) | Finetuning (1%) |
|---|---|---|---|
| SLidR | MoCoV2 | 38.08 | 40.01 |
| ST-SLidR | MoCoV2 | 40.56 | 41.13 |
| SLidR | SwAV | 38.96 | 40.01 |
| ST-SLidR | SwAV | 40.36 | 41.20 |
| SLidR | DINO | 38.29 | 39.86 |
| ST-SLidR | DINO | 40.36 | 41.07 |
| SLidR | oBoW | 37.41 | 39.51 |
| ST-SLidR | oBoW | 40.00 | 40.87 |
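
To read the table as per-encoder gains, the short sketch below subtracts the SLidR score from the ST-SLidR score in each column. It is illustrative only and not part of the repository: the `RESULTS` dictionary and the `gains` helper are hypothetical names, and the numbers are copied directly from the table above.

```python
# Scores copied from the results table above, stored as
# (linear probing with 100% labels, finetuning with 1% labels).
# RESULTS and gains() are hypothetical names used only for this illustration.
RESULTS = {
    "MoCoV2": {"SLidR": (38.08, 40.01), "ST-SLidR": (40.56, 41.13)},
    "SwAV": {"SLidR": (38.96, 40.01), "ST-SLidR": (40.36, 41.20)},
    "DINO": {"SLidR": (38.29, 39.86), "ST-SLidR": (40.36, 41.07)},
    "oBoW": {"SLidR": (37.41, 39.51), "ST-SLidR": (40.00, 40.87)},
}


def gains(results):
    """Return, per encoder, the (linear probing, finetuning) gain of ST-SLidR over SLidR."""
    out = {}
    for encoder, scores in results.items():
        baseline = scores["SLidR"]
        ours = scores["ST-SLidR"]
        # Round to two decimals to match the precision reported in the table.
        out[encoder] = tuple(round(o - b, 2) for o, b in zip(ours, baseline))
    return out


if __name__ == "__main__":
    for encoder, (linear_probing, finetuning) in gains(RESULTS).items():
        print(f"{encoder:8s} linear probing +{linear_probing:.2f}  finetuning +{finetuning:.2f}")
```

Under these numbers, the gain is positive for every encoder in both evaluation settings.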

If you use ST-SLidR in your research, please consider citing:

    @inproceedings{ST-SLidR,
        title = {Self-Supervised Image-to-Point Distillation via Semantically Tolerant Contrastive Loss},
        author = {Anas Mahmoud and Jordan Hu and Tianshu Kuai and Ali Harakeh and Liam Paull and Steven Waslander},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        year = {2023}
    }

ST-SLidR is released under the Apache 2.0 license.