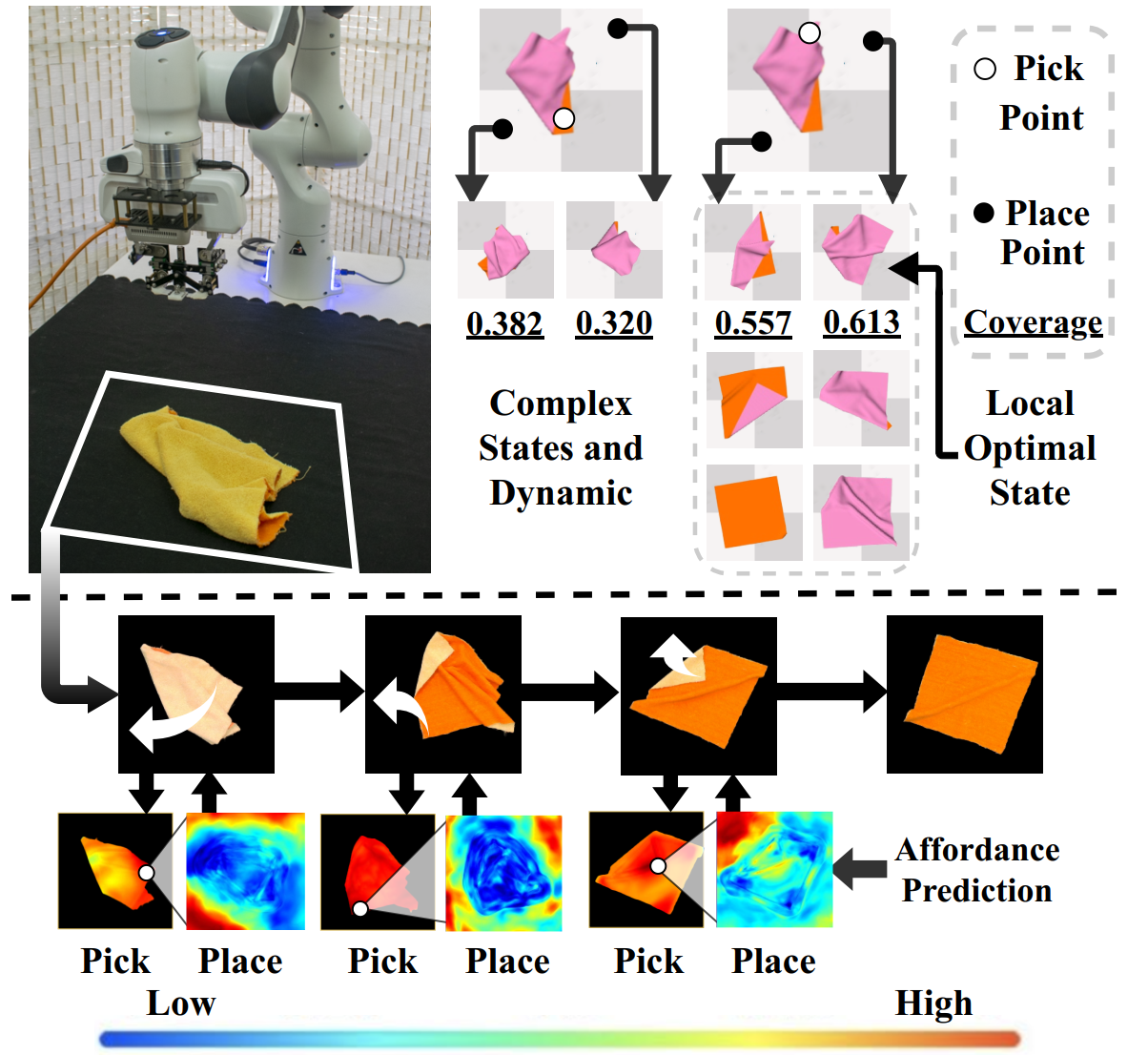

This is the official implementation of the paper: Learning Foresightful Dense Visual Affordance for Deformable Object Manipulation (ICCV 2023).

Project | Paper | ArXiv | Video | Video (real world)

- Install SoftGym simulator

Please follow the SoftGym installation instructions at https://github.com/Xingyu-Lin/softgym.

- Install other dependencies

Our environment dependencies are listed in the "environment.yml" file. You can install them when you create the new conda environment in the first step (the SoftGym installation).
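A minimal sketch, assuming `conda` is on your `PATH`, that you run it from the repository root, and that you create the environment directly from this repository's `environment.yml`. The helper `env_name_from_yml` is hypothetical (not part of this repository). It only reads the top-level `name:` field of the file so you do not have to hard-code the environment name.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of this repository): print the value of the
# top-level "name:" key of a conda environment file such as environment.yml.
env_name_from_yml() {
  local yml_file="$1"
  # Keep the first top-level "name:" line, drop the key and following spaces,
  # then strip surrounding quotes and any Windows carriage return.
  sed -n 's/^name:[[:space:]]*//p' "$yml_file" | head -n 1 | tr -d "\"'\r"
}

# Typical usage from the repository root, in an interactive shell where
# `conda init` has been run (commented out so the helper has no side effects):
#   conda env create -f environment.yml
#   conda activate "$(env_name_from_yml environment.yml)"
```

`conda activate` relies on conda's shell integration, so it is meant for an interactive shell rather than a plain non-interactive script.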

To collect data for the SpreadCloth task, run:

bash scripts/collect_cloth-flatten.sh

To collect data for the RopeConfiguration task, run:

bash scripts/collect_rope-configuration.sh

Our models are trained in a reversed, step-by-step manner, starting from the final manipulation step and working backward. We provide bash scripts that handle this multi-stage training process.

To train models for SpreadCloth, please run:

bash scripts/train_cloth-flatten.sh

To train models for RopeConfiguration, please run:

bash scripts/train_rope-configuration.sh

We provide bash scripts to test the final model. You can change the arguments in these scripts to test the other models described in our paper.

To test models for SpreadCloth, please run:

bash scripts/test-cloth-flatten.sh

To test models for RopeConfiguration, please run:

bash scripts/test-rope-configuration.sh
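The collection, training, and testing steps above can be chained for one task. The sketch below is a hypothetical convenience wrapper, not one of the repository's scripts: it only calls the bash files listed in this README, in order, and stops at the first failure. It assumes you run it from the repository root. Note that the test scripts use a hyphen (`test-cloth-flatten.sh`) while the collection and training scripts use an underscore (`collect_cloth-flatten.sh`, `train_cloth-flatten.sh`), so the wrapper keeps each script's exact prefix.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper (not part of this repository): run data collection,
# training, and testing for one task by calling the provided scripts in order.
# Assumes the current directory is the repository root.
run_pipeline() {
  local task="$1"  # "cloth-flatten" (SpreadCloth) or "rope-configuration" (RopeConfiguration)
  case "$task" in
    cloth-flatten|rope-configuration) ;;
    *) echo "unknown task: $task" >&2; return 1 ;;
  esac
  # Script names as listed in this README: collect_ and train_ use an
  # underscore, test- uses a hyphen.
  local script
  for script in "scripts/collect_${task}.sh" \
                "scripts/train_${task}.sh" \
                "scripts/test-${task}.sh"; do
    echo "==> ${script}"
    # Stop immediately if a stage fails, so later stages never run on bad inputs.
    bash "$script" || { echo "failed: ${script}" >&2; return 1; }
  done
}

# Example:
#   run_pipeline cloth-flatten
```

Stopping at the first failing stage avoids, for example, training on an incomplete dataset after data collection has crashed.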

The manipulation results (.gif files) are saved in the "test_video" directory.
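To check which results were written, here is a small sketch that lists the saved GIFs. It assumes "test_video" is relative to the directory you ran the test script from; the function name `list_result_gifs` is hypothetical and not part of this repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of this repository): list the .gif results
# written by the test scripts.
list_result_gifs() {
  local dir="${1:-test_video}"  # default matches the directory named in this README
  if [ ! -d "$dir" ]; then
    echo "no such directory: $dir" >&2
    return 1
  fi
  # Print every .gif file under the directory (including subdirectories), sorted by path.
  find "$dir" -type f -name '*.gif' | sort
}

# Example: count the saved results
#   list_result_gifs | wc -l
```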

If you find this paper useful, please consider citing:

@InProceedings{Wu_2023_ICCV,
  author = {Wu, Ruihai and Ning, Chuanruo and Dong, Hao},
  title = {Learning Foresightful Dense Visual Affordance for Deformable Object Manipulation},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2023},
  pages = {10947-10956}
}

If you have any questions, please feel free to contact Ruihai Wu (wuruihai_at_pku_edu_cn) or Chuanruo Ning (chuanruo_at_stu_pku_edu_cn).