The Aktienführer-Datenarchiv includes company data from the "Hoppenstedt Aktienführer", a yearly publication about companies traded on German stock exchanges. The database contains data from 1956-2016 and was created by different workflows and tools, which are described below:

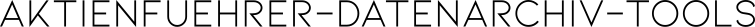

- Until 1999, the data was published in printed books, which were first digitized. Then:

  - The data from 1956-1975 was recorded automatically by OCR and subsequent parsing; see the workflow for the books for more details.

  - The data from 1976-1999 was recorded manually using a "double keying" method. (This was done in the first project phase.)

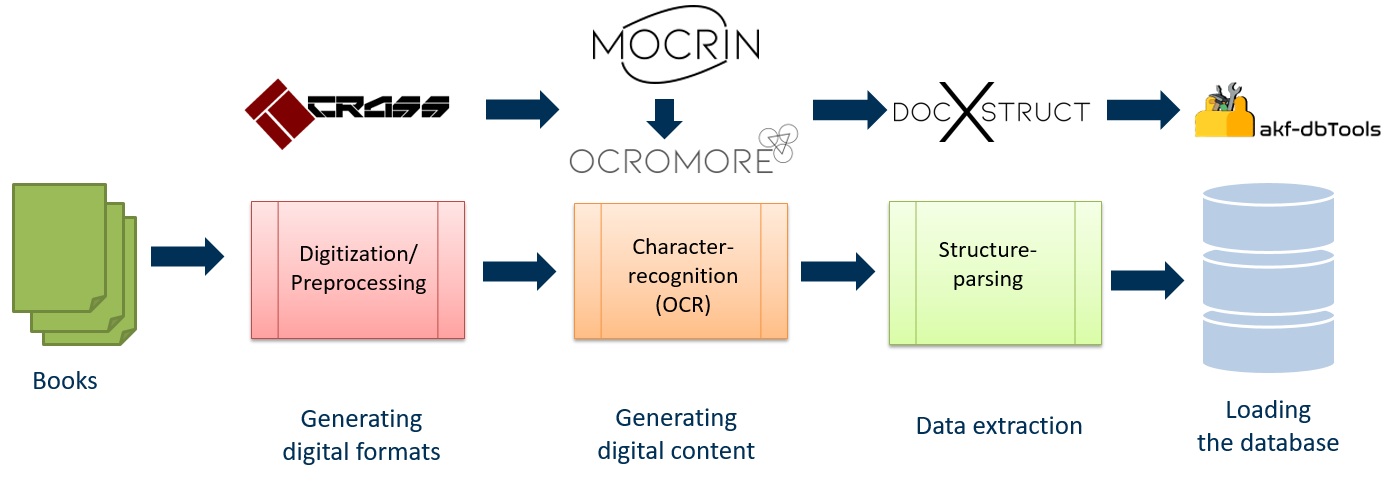

- The data from 2000-2016 was published on CDs/DVDs and recorded by analyzing and parsing the HTML pages on the discs; see the workflow for the CDs for more details.

The extracted data together with the tools were created in a project funded by the Deutsche Forschungsgemeinschaft (DFG).

crass is a command-line post-processing tool for scanned sheets of paper. It crops segments based on separator lines and then splices them together in a certain order to obtain standalone, single-column text sections. This simplifies layout analysis in the following OCR steps and groups related text sections. In an additional pre-processing step called deskewing, crass can also correct the rotation of the page.
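The crop-and-splice idea can be illustrated without any image library. The following is a minimal sketch, not crass's actual implementation: it assumes the page is already binarized and given as a list of equally long strings (`#` for ink, `.` for background), and that a separator is a column that is ink in almost every row. The function names and the `min_fill` threshold are hypothetical.

```python
def find_separator_columns(page, min_fill=0.9):
    """Return x positions whose column is ink ('#') in at least min_fill of the rows."""
    height = len(page)
    width = len(page[0])
    columns = []
    for x in range(width):
        ink = sum(1 for row in page if row[x] == "#")
        if ink / height >= min_fill:
            columns.append(x)
    return columns


def splice_columns(page, min_fill=0.9):
    """Cut the page at vertical separator lines and stack the pieces top to bottom."""
    separators = find_separator_columns(page, min_fill)
    segments = []
    start = 0
    for x in separators + [len(page[0])]:
        if x > start:  # skip empty slices between adjacent separator pixels
            segments.append([row[start:x] for row in page])
        start = x + 1
    # Assumed reading order is left to right: each segment goes below the previous one.
    spliced = []
    for segment in segments:
        spliced.extend(segment)
    return spliced
```

For a two-column page, the result is a single column in which the left column's rows come first and the right column's rows follow.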

mocrin is a command-line processing tool for multiple OCR engines. Its main purpose is to drive multiple OCR engines through one interface for a clean and uniform workflow. It is also intended to serve as part of a self-configuration process that finds the best settings for different OCR engines (not fully implemented yet). At the moment, you can store multiple configuration files for the OCR engines and modify them manually, which lets you keep a large collection of preconfigured settings for different input data. Furthermore, it can cut areas out of images using regular expressions; these cutouts can be used as training datasets for NN models.
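One way to picture "one interface, many engines, stored configurations" is a set of argument templates per engine. This is only a sketch under assumptions: the profile layout, the function names and the `example-ocr` engine are hypothetical and do not reflect mocrin's real configuration format. The `tesseract` entry uses that program's usual command-line form (`-l` for the language, `--psm` for the page segmentation mode, `hocr` as output config).

```python
import json

# Hypothetical profile store; in practice each engine would have its own config files.
PROFILES_JSON = """
{
  "tesseract": {
    "default": ["tesseract", "{image}", "{outbase}", "-l", "deu", "--psm", "4", "hocr"]
  },
  "example-ocr": {
    "default": ["example-ocr", "--input", "{image}", "--output", "{outbase}.hocr"]
  }
}
"""


def build_command(engine, image, outbase, profile="default", profiles=None):
    """Fill the argument template of one engine profile with concrete paths."""
    profiles = profiles if profiles is not None else json.loads(PROFILES_JSON)
    try:
        template = profiles[engine][profile]
    except KeyError as exc:
        raise ValueError(f"unknown engine or profile: {engine}/{profile}") from exc
    return [arg.format(image=image, outbase=outbase) for arg in template]


def build_all(image, outdir, profiles=None):
    """Build one command per configured engine, each with its own output base."""
    profiles = profiles if profiles is not None else json.loads(PROFILES_JSON)
    return {
        engine: build_command(engine, image, f"{outdir}/{engine}", profiles=profiles)
        for engine in profiles
    }
```

Each returned list could be handed to `subprocess.run`, so that adding an engine or a preconfigured setting only means adding another template.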

ocromore unites the best parts of the individual OCR outputs to produce an optimal outcome. In the first step, it parses the different OCR output files into a SQLite database. This database serves as an exchange and storage platform, with pandas as the handler. Combining pandas and the dataframe-objectifier enables a wide range of high-performance use cases such as multi segment alignment (msa). The combined output can be stored as a new OCR result or as a plain text file. To evaluate the result, you can either use the standard ISRI tool to generate an accuracy report or do a visual comparison with a diff tool (default: "meld").
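The store-then-combine idea can be sketched as follows. This is a simplified illustration, not ocromore's algorithm: it uses the standard `sqlite3` module directly instead of pandas, the table layout and function names are assumptions, and it presumes that the engines' lines and words already line up one-to-one, which a real alignment step would have to establish first.

```python
import sqlite3
from collections import Counter, defaultdict


def store_lines(conn, engine, lines):
    """Insert the recognized lines of one engine into a shared table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ocr_lines (engine TEXT, line_no INTEGER, text TEXT)"
    )
    conn.executemany(
        "INSERT INTO ocr_lines VALUES (?, ?, ?)",
        [(engine, i, text) for i, text in enumerate(lines)],
    )


def vote(conn):
    """Combine engines by majority vote per word position within each line."""
    by_line = defaultdict(list)
    query = "SELECT line_no, text FROM ocr_lines ORDER BY line_no, engine"
    for line_no, text in conn.execute(query):
        by_line[line_no].append(text.split())
    combined = []
    for line_no in sorted(by_line):
        candidates = by_line[line_no]
        length = max(len(words) for words in candidates)
        voted = []
        for pos in range(length):
            # Only engines that produced a word at this position take part in the vote.
            words = [c[pos] for c in candidates if pos < len(c)]
            voted.append(Counter(words).most_common(1)[0][0])
        combined.append(" ".join(voted))
    return combined
```

With three engines, a misread word in one output is outvoted by the other two, which is the basic reason for combining several OCR results.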

docxstruct analyses, categorizes and segments the data from text-based documents. At the moment, it is specialized for the hOCR file format. The data contained in each segment is parsed in a structured manner into a JSON file.
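As a rough illustration of going from hOCR to segmented JSON, the sketch below collects the text of every `ocr_line` element and starts a new segment whenever a line begins with a known label. The labels, class and function names are hypothetical; the real segmentation rules of docxstruct are not described here.

```python
import json
from html.parser import HTMLParser


class HocrLineParser(HTMLParser):
    """Collect the text of every element whose class list contains 'ocr_line'."""

    def __init__(self):
        super().__init__()
        self.lines = []
        self._depth = 0  # > 0 while inside an ocr_line element

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self._depth:
            self._depth += 1
        elif "ocr_line" in classes:
            self._depth = 1
            self.lines.append("")

    def handle_endtag(self, tag):
        if self._depth:
            self._depth -= 1

    def handle_data(self, data):
        if self._depth:
            self.lines[-1] += data


def segment(lines, labels):
    """Start a new segment at each line beginning with '<label>:'; earlier lines are dropped."""
    segments = {}
    current = None
    for line in lines:
        text = " ".join(line.split())
        for label in labels:
            if text.startswith(label + ":"):
                current = label
                text = text[len(label) + 1:].strip()
                segments[current] = []
                break
        if current is not None and text:
            segments[current].append(text)
    return segments


def hocr_to_json(hocr, labels):
    """Parse an hOCR string and return the labelled segments as a JSON string."""
    parser = HocrLineParser()
    parser.feed(hocr)
    return json.dumps(segment(parser.lines, labels), ensure_ascii=False, indent=2)
```

Lines without a label are appended to the most recent segment, which covers entries that continue over several lines.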

akf-dbTools is a collection of small scripts that modify and update certain parts of the akf-database. The scripts are customized to the requirements of the project. Among other things, they load the JSON files from docxstruct into the database and normalize and deduplicate the data in the database.
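The load, normalize and deduplicate steps might look roughly like this. It is a sketch under assumptions: the `company` table, its columns, the JSON record layout and the single normalization rule are invented for illustration and are not the akf-database schema.

```python
import json
import sqlite3


def normalize_name(name):
    """Collapse whitespace and unify one legal-form spelling (illustrative rule only)."""
    name = " ".join(name.split())
    if name.endswith(" Aktiengesellschaft"):
        name = name[: -len("Aktiengesellschaft")] + "AG"
    return name


def load_records(conn, json_text, year):
    """Insert the records of one JSON document; duplicates are skipped via the primary key."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS company ("
        "name TEXT, year INTEGER, seat TEXT, PRIMARY KEY (name, year))"
    )
    rows = [
        (normalize_name(rec["name"]), year, rec.get("seat"))
        for rec in json.loads(json_text)
    ]
    conn.executemany("INSERT OR IGNORE INTO company VALUES (?, ?, ?)", rows)
    conn.commit()
    # Return the total number of rows now stored in the table.
    return conn.execute("SELECT COUNT(*) FROM company").fetchone()[0]
```

Normalizing before the insert is what makes the deduplication work: two spellings of the same company collapse to one key and the second insert is ignored.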

akf-cdparser analyses, categorizes and segments the HTML files from the Aktienführer CDs. The data contained in each segment is parsed in a structured manner into a JSON file.
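To give an impression of turning an HTML page into structured JSON, the sketch below reads two-column table rows as label/value pairs. The page structure is an assumption made for the example; the actual layout of the CD pages and the parsing rules of akf-cdparser are not shown here, and all names are hypothetical.

```python
import json
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the cell texts of every table row as a list of strings."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th") and self.rows:
            self.rows[-1].append("")
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.rows[-1][-1] += data


def html_to_json(html):
    """Turn two-cell rows into label/value pairs; rows of any other shape are skipped."""
    parser = TableRowParser()
    parser.feed(html)
    record = {}
    for row in parser.rows:
        cells = [" ".join(cell.split()) for cell in row]
        if len(cells) == 2 and cells[0]:
            record[cells[0].rstrip(":")] = cells[1]
    return json.dumps(record, ensure_ascii=False, indent=2)
```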

akf-dbTools is a collection of small scripts that modify and update certain parts of the database. Among other things, they load the JSON files from akf-cdparser into the database and normalize and deduplicate the data in the database.

Note that the automatic processing will sometimes need some manual adjustments.

This section lists additional tools that were also created or modified in the Aktienführer-Datenarchiv project.

akf-corelib is a library of core functionality that is used by multiple projects in the Aktienführer-Datenarchiv work process.

Betrial is a tool that reduces the effort needed to perform a Bernoulli trial for OCR validation. Given a pool of recognized pages, it randomly selects characters, cuts out the whole line and the corresponding recognized text, and generates an HTML validation page.
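The statistics behind such a validation can be sketched in a few lines. This is an illustration under assumptions, not Betrial's code: pages are given as lists of text lines, whitespace is excluded from sampling, and the interval uses the simple normal approximation. All function names are hypothetical.

```python
import math
import random


def sample_characters(pages, n, seed=0):
    """Pick up to n distinct (page, line, column) positions among all non-space characters."""
    positions = [
        (pi, li, ci)
        for pi, page in enumerate(pages)
        for li, line in enumerate(page)
        for ci, char in enumerate(line)
        if not char.isspace()
    ]
    rng = random.Random(seed)  # fixed seed so a validation run can be repeated
    return rng.sample(positions, min(n, len(positions)))


def accuracy_estimate(verdicts, z=1.96):
    """Return the observed accuracy and a normal-approximation confidence interval."""
    n = len(verdicts)
    p = sum(verdicts) / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)
```

Each sampled character is judged correct or incorrect by a person, and the list of verdicts (`True` or `False`) is then passed to `accuracy_estimate`; `z=1.96` corresponds to a 95% interval.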

hocr-parser is a modified third-party library used by modules of the Aktienführer-Datenarchiv work process; it can also be used independently. It was originally written by Athento.

Copyright (c) 2017 Universitätsbibliothek Mannheim

Author:

The tools are Free Software. You may use them under the terms of the Apache 2.0 License. See LICENSE for details.