This ROS package projects RGB camera imagery onto 3D depth pointclouds of a scene, where the imagery and the depth data come from separate sensors (for example, a regular camera and a LIDAR unit), "painting" the clouds into RGBXYZ clouds. Several image formats are supported. So far, flat 2D rasters and spherical camera imagery are implemented, and the spherical case supports both equisolid and equidistant projections.

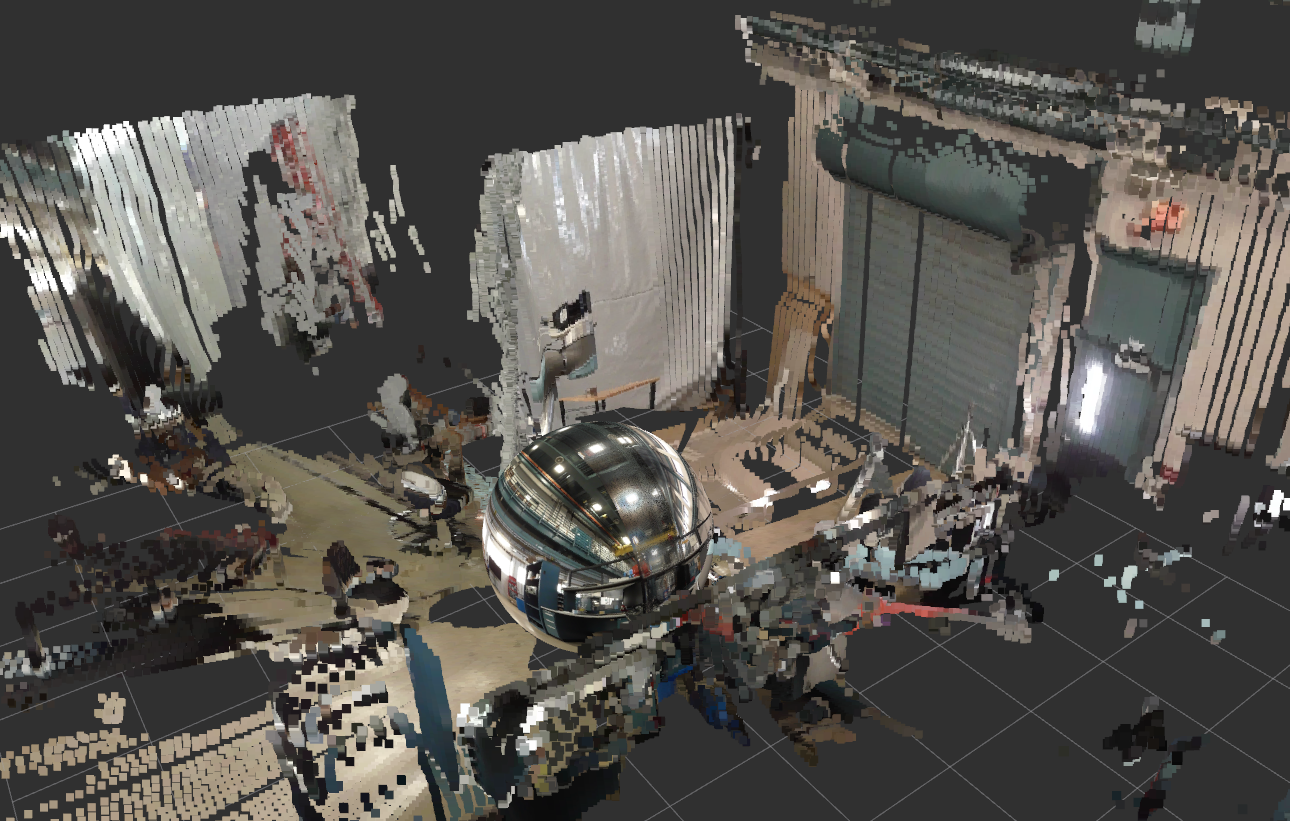

The above images illustrate a painted pointcloud of an example scene, with a panoramic camera image of the same scene displayed below for comparison. The panorama was taken a few days after the pointcloud was painted, so a few temporary objects have moved in the latter scene.

First, the RGB raster image is converted into a pointcloud and projected onto the unit sphere centered on the target frame. A copy of the depth cloud is projected onto the same sphere. For each point in the projected depth cloud, a nearest-neighbor search finds the k closest points in the projected RGB cloud (k is user-specified; see neighbor_search_count below), and the depth point is assigned an inverse-distance-weighted average of their colors. These colors are then transferred to the original, unprojected depth cloud to yield an RGBXYZ cloud. Downsampling options at several stages of this process can be used to improve speed.

Commercial stereo vision and structure-from-motion sensors estimate RGB and XYZ data for an environment simultaneously. However, these approaches depend on finding feature correspondences between images, so they perform poorly on surfaces that are smooth, untextured, and highly self-similar. Unlike time-of-flight approaches such as LIDAR, they do not measure distance directly. This package was originally designed to generate highly accurate and precise RGBXYZ images of scenes that are difficult for stereo vision, such as large, regular, flat concrete facades, and it performs very well on such scenes.

This approach is best suited to painting large surfaces in uncluttered scenes with little foreground/background layering. Because the depth and RGB sensors view the scene from different positions, a near object can occlude different parts of the background from each sensor, which leads to painting errors in very cluttered scenes. These errors shrink as the offset between the sensors decreases and as objects get farther away. We aim to develop a sensor tree that places the two sensors essentially on top of one another, which should largely eliminate this problem.

The pointcloud_painter is controlled by parameters specified in a YAML file in param/. These settings are loaded on the client side, not in the pointcloud_painter service node itself, so if you write a new client for a custom application, replicate the parameter handling from src/painter_client.cpp there.

- painter_service_name: the name of the service to be called by an external client

- should_loop: whether or not the client side should loop

- max_lens_angle: the maximum lens angle visible through the camera

- projection_type: the type of projection used - see srv/pointcloud_painter_srv.srv for projection type designations

- neighbor_search_count: the number of color neighbors (k) to search for around each depth point to be painted

- flat_voxel_size: the voxel size used to downsample the RGB image input in planar cloud space

- spherical_voxel_size: the voxel size used to downsample the RGB image input in spherical cloud space

- compress_image: whether or not to lossily compress the input raster image

- image_compression_ratio: if compress_image is true, the factor by which the image is compressed

- camera_frame_front: the name of the frame used for the front camera

- camera_frame_rear: the name of the frame used for the rear camera

- target_frame: the name of the target frame in which the output is published

Out of the box, the program can be run by launching the launch/pointcloud_painter.launch file:

```bash
roslaunch pointcloud_painter pointcloud_painter.launch
```

You can also write your own client to interface with the pointcloud_painter server. In that case, it should only be necessary to run your client (along with any YAML files or parameter loading it requires) and the pointcloud_painter service node itself:

```bash
rosrun pointcloud_painter pointcloud_painter
```

More information about this package is available in the paper Improved Situational Awareness in ROS Using Panospheric Vision and Virtual Reality. If you use this software, please cite it in your publication:

```bibtex
@INPROCEEDINGS{VunderSA2018,
  author={V. Vunder and R. Valner and C. McMahon and K. Kruusamäe and M. Pryor},
  booktitle={2018 11th International Conference on Human System Interaction (HSI)},
  title={Improved Situational Awareness in ROS Using Panospheric Vision and Virtual Reality},
  year={2018},
  pages={471-477},
  keywords={Robots;Data visualization;Cameras;Headphones;Lenses;Distortion;Rendering (computer graphics);situational awareness;human-robot interaction;virtual reality;user interfaces;panospheric vision;telerobotics;ROS;RViz},
  doi={10.1109/HSI.2018.8431062},
  month={July},
}
```