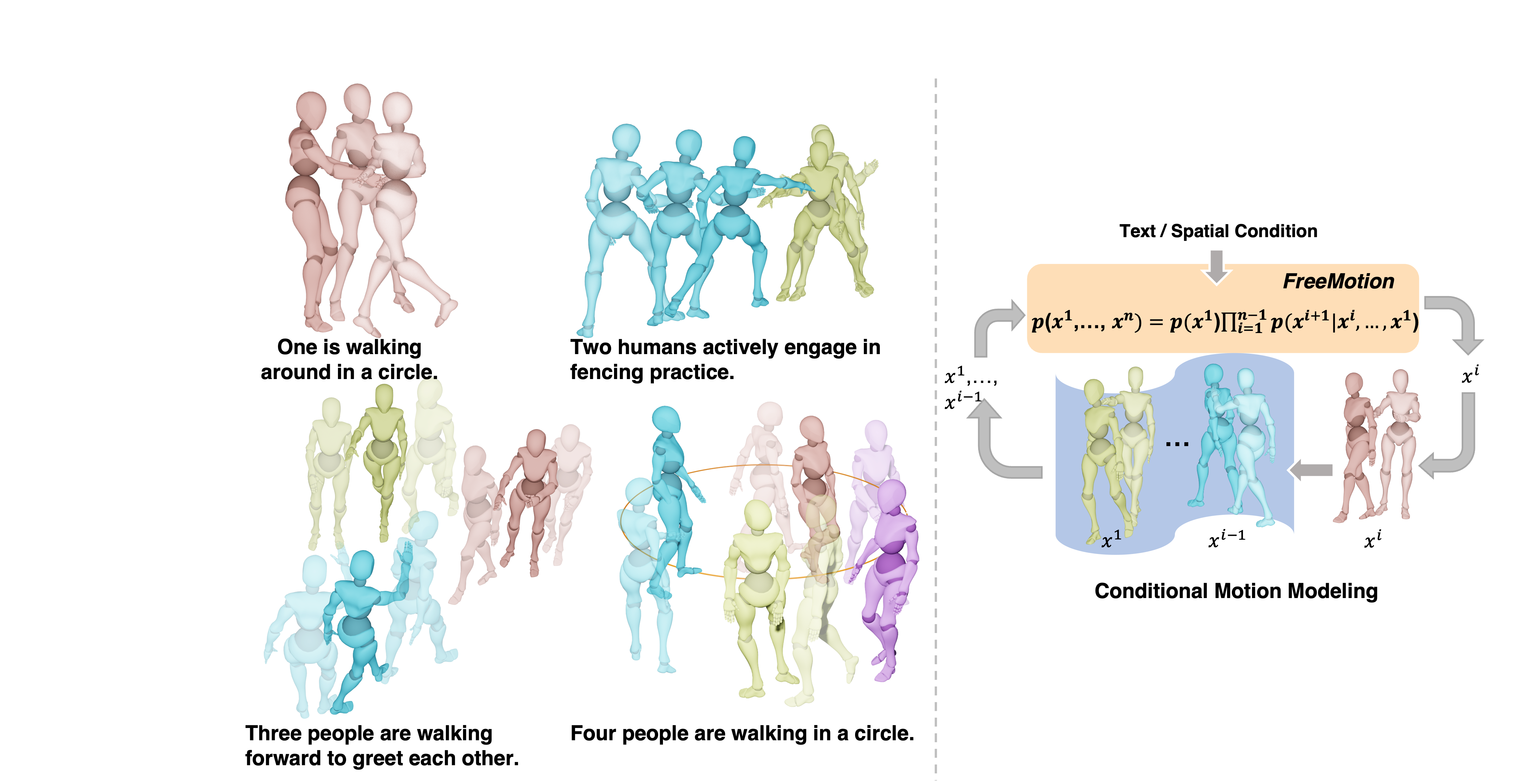

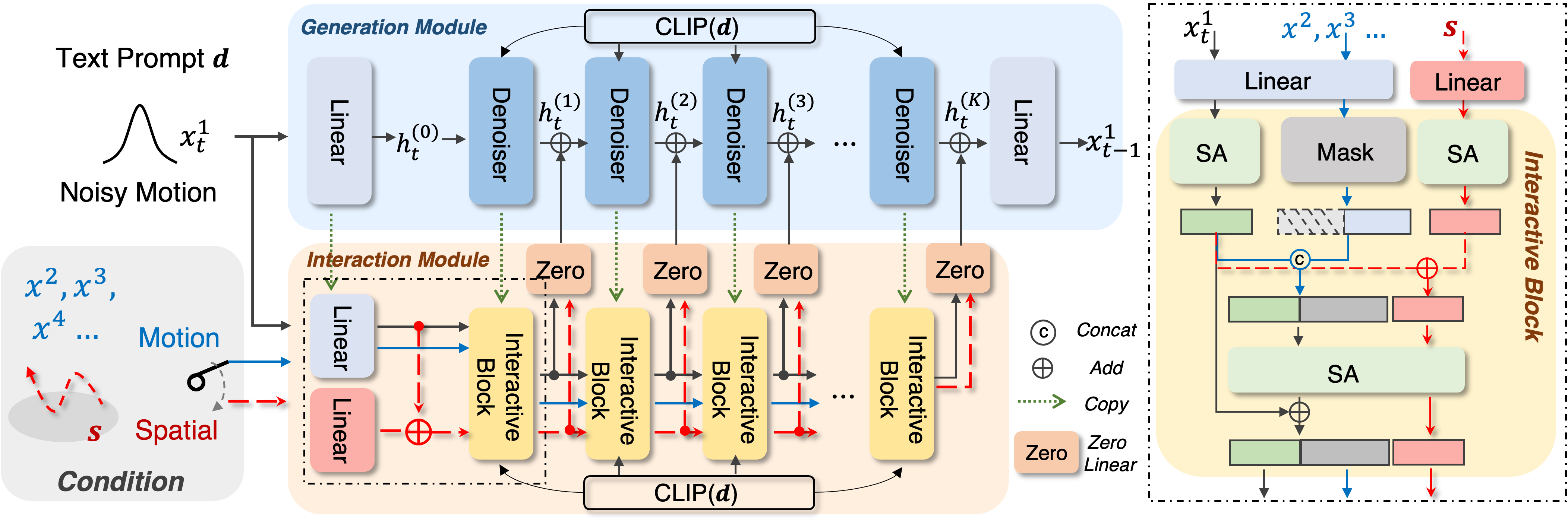

Text-to-motion synthesis is a crucial task in computer vision. Existing methods are limited in their universality: they are tailored to single-person or two-person scenarios and cannot be applied to generate motions for more individuals. To achieve number-free motion synthesis, this paper reconsiders motion generation and proposes to unify single- and multi-person motion through the conditional motion distribution. Furthermore, a generation module and an interaction module are designed for our FreeMotion framework to decouple the process of conditional motion generation and ultimately support number-free motion synthesis. In addition, existing single-person spatial control methods can be seamlessly integrated into our framework, enabling precise control of multi-person motion. Extensive experiments demonstrate the superior performance of our method and its ability to infer single- and multi-human motions simultaneously.

- [x] Release the FreeMotion training.

- [x] Release the FreeMotion evaluation.

- [ ] Release the separate_annots dataset.

- [ ] Release the FreeMotion checkpoints.

conda create python=3.8 --name freemotion

conda activate freemotion

pip install -r requirements.txt

bash prepare/download_evaluation_model.sh

Download the data from the InterGen webpage and put it into ./data/.

Download the data from our webpage and put it into ./data/.

<DATA-DIR>

./annots //Natural language annotations where each file consists of three sentences.

./motions //Raw motion data standardized as SMPL, which is similar to AMASS.

./motions_processed //Processed motion data with joint positions and rotations (6D representation) of SMPL 22 joints kinematic structure.

./split //Train-val-test split.

./separate_annots //Annotations for each person's motion

sh train_single.sh

sh train_inter.sh

sh test.sh
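Before running the scripts above, a quick check of the data layout can save a failed run. The following is a minimal sketch, not part of the FreeMotion code base: the subdirectory names come from the layout listed in this README, while the helper name `missing_subdirs` and the default root `./data` are assumptions made for illustration.

```python
from pathlib import Path
import sys

# Subdirectory names as listed in this README.
# Their exact location under ./data is an assumption.
EXPECTED_SUBDIRS = [
    "annots",
    "motions",
    "motions_processed",
    "split",
    "separate_annots",
]


def missing_subdirs(data_root):
    """Return the expected subdirectories that are absent under data_root."""
    root = Path(data_root)
    return [name for name in EXPECTED_SUBDIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    # Hypothetical usage: python check_data.py <DATA-DIR>
    root = sys.argv[1] if len(sys.argv) > 1 else "./data"
    missing = missing_subdirs(root)
    if missing:
        print(f"Missing under {root}: {', '.join(missing)}")
    else:
        print(f"All expected subdirectories found under {root}")
```

Note that the checklist in this README marks the separate_annots dataset as not yet released, so that entry may be reported as missing until it becomes available.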

If you find our code or paper helpful, please consider citing:

@article{fan2024freemotion,

title={FreeMotion: A Unified Framework for Number-free Text-to-Motion Synthesis},

author={Ke Fan and Junshu Tang and Weijian Cao and Ran Yi and Moran Li and Jingyu Gong and Jiangning Zhang and Yabiao Wang and Chengjie Wang and Lizhuang Ma},

year={2024},

eprint={2405.15763},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Thanks to interhuman and MotionGPT; our code partially borrows from them.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.