Vision-Centric BEV Perception: A Survey

Homography-based PV2BEV (perspective view to BEV):

Public Papers:

- IPM: Inverse perspective mapping simplifies optical flow computation and obstacle detection (Biological Cybernetics'91) [paper]

- DSM: Automatic Dense Visual Semantic Mapping from Street-Level Imagery (IROS'12) [paper]

- MapV: Learning to map vehicles into bird’s eye view (ICIAP'17) [paper]

- BridgeGAN: Generative Adversarial Frontal View to Bird View Synthesis (3DV'18) [paper] [project page]

- VPOE: Deep learning based vehicle position and orientation estimation via inverse perspective mapping image (IV'19) [paper]

- 3D-LaneNet: End-to-End 3D Multiple Lane Detection (ICCV'19) [paper]

- The Right (Angled) Perspective: Improving the Understanding of Road Scenes Using Boosted Inverse Perspective Mapping (IV'19) [paper]

- Cam2BEV: A Sim2Real Deep Learning Approach for the Transformation of Images from Multiple Vehicle-Mounted Cameras to a Semantically Segmented Image in Bird’s Eye View (ITSC'20) [paper] [project page]

- MonoLayout: Amodal Scene Layout from a Single Image (WACV'20) [paper] [project page]

- MVDet: Multiview Detection with Feature Perspective Transformation (ECCV'20) [paper] [project page]

- OGMs: Driving among Flatmobiles: Bird-Eye-View occupancy grids from a monocular camera for holistic trajectory planning (WACV'21) [paper] [project page]

- TrafCam3D: Monocular 3D Vehicle Detection Using Uncalibrated Traffic Cameras through Homography (IROS'21) [paper] [project page]

- SHOT: Stacked Homography Transformations for Multi-View Pedestrian Detection (ICCV'21) [paper]

- HomoLoss: Homography Loss for Monocular 3D Object Detection (CVPR'22) [paper]
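Several entries above build on inverse perspective mapping or a ground-plane homography between the image and the BEV plane. The sketch below illustrates that idea in plain Python, assuming a pinhole camera with known intrinsics `K`, a world-to-camera pose `(R, t)`, and a perfectly flat ground at Z = 0. The helper names (`ground_homography`, `project_ground_point`, `ipm`) are hypothetical and do not come from any listed codebase.

```python
# Minimal inverse perspective mapping (IPM) sketch. Assumes a pinhole camera with
# intrinsics K, a world-to-camera pose (R, t), and a flat ground plane at Z = 0.
# All helper names are hypothetical illustrations.

def matmul(A, B):
    """Plain-Python matrix product of nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def ground_homography(K, R, t):
    """For ground points (X, Y, 0), K [R | t] [X, Y, 0, 1]^T equals K [r1 r2 t] [X, Y, 1]^T,
    so the 3x3 homography keeps the first two columns of R plus the translation."""
    Rt = [[R[i][0], R[i][1], t[i]] for i in range(3)]
    return matmul(K, Rt)


def project_ground_point(H, X, Y):
    """Map a ground-plane point to pixel coordinates (u, v), or None if behind the camera."""
    u, v, w = (H[i][0] * X + H[i][1] * Y + H[i][2] for i in range(3))
    if w <= 0:
        return None
    return u / w, v / w


def ipm(image, H, xs, ys):
    """Build a BEV grid (one row per Y, one column per X) by nearest-neighbour
    sampling of `image` (a list of pixel rows); cells that fall outside the image
    or behind the camera are None."""
    height, width = len(image), len(image[0])
    bev = []
    for Y in ys:
        row = []
        for X in xs:
            p = project_ground_point(H, X, Y)
            if p is None:
                row.append(None)
                continue
            u, v = round(p[0]), round(p[1])
            row.append(image[v][u] if 0 <= u < width and 0 <= v < height else None)
        bev.append(row)
    return bev


# Example: camera 1.5 m above the ground, looking along world +X
# (world: X forward, Y left, Z up; camera: x right, y down, z forward).
K = [[100, 0, 50], [0, 100, 50], [0, 0, 1]]
R = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
t = [0, 1.5, 0]
H = ground_homography(K, R, t)
print(project_ground_point(H, 10, 0))  # (50.0, 65.0): 10 m ahead, below the image centre
```

The flat-ground assumption is what makes a single 3x3 matrix sufficient; anything above the ground plane (vehicles, pedestrians) gets smeared along its viewing ray, which is the distortion several of the listed works try to correct.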

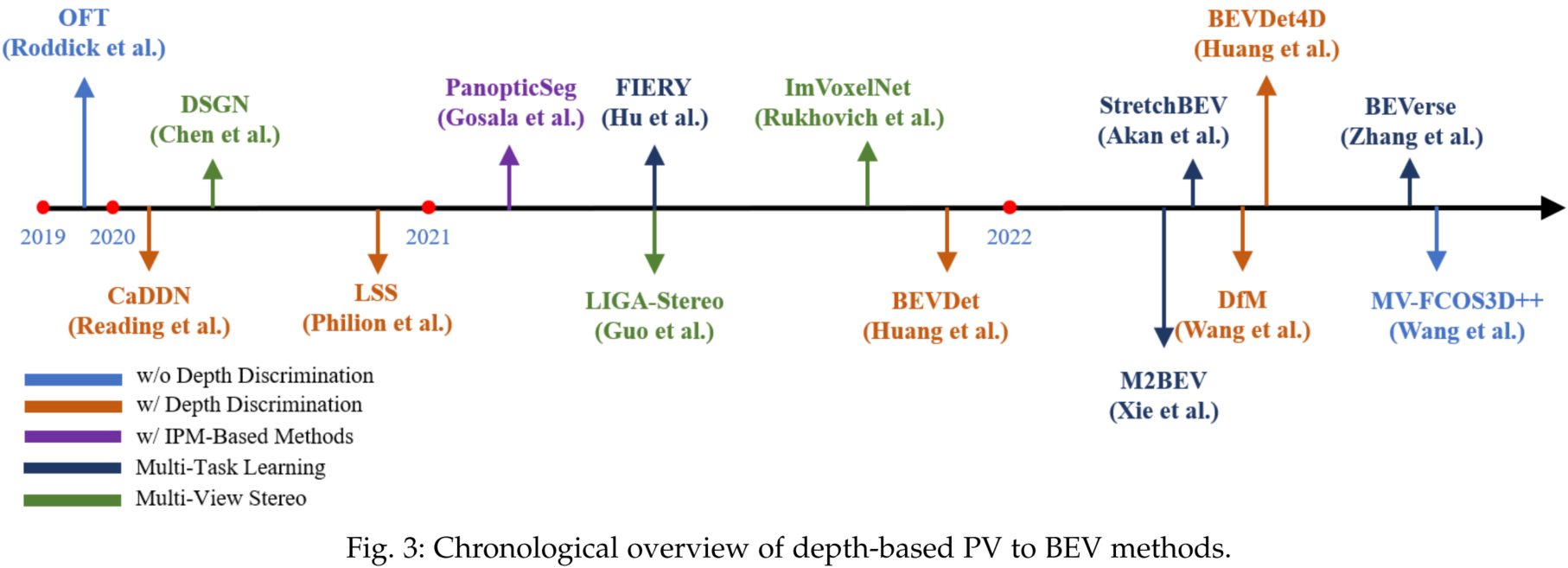

Chronological Overview:

Depth-based PV2BEV:

Public Papers:

- OFT: Orthographic Feature Transform for Monocular 3D Object Detection (BMVC'19) [paper] [project page]

- CaDDN: Categorical Depth Distribution Network for Monocular 3D Object Detection (CVPR'21) [paper] [project page]

- DSGN: Deep Stereo Geometry Network for 3D Object Detection (CVPR'20) [paper] [project page]

- Lift, Splat, Shoot: Encoding Images From Arbitrary Camera Rigs by Implicitly Unprojecting to 3D (ECCV'20) [paper] [project page]

- PanopticSeg: Bird’s-Eye-View Panoptic Segmentation Using Monocular Frontal View Images (RA-L'22) [paper] [project page]

- FIERY: Future Instance Prediction in Bird’s-Eye View from Surround Monocular Cameras (ICCV'21) [paper] [project page]

- LIGA-Stereo: Learning LiDAR Geometry Aware Representations for Stereo-based 3D Detector (ICCV'21) [paper] [project page]

- ImVoxelNet: Image to Voxels Projection for Monocular and Multi-View General-Purpose 3D Object Detection (WACV'22) [paper] [project page]

- BEVDet: High-performance Multi-camera 3D Object Detection in Bird-Eye-View (Arxiv'21) [paper] [project page]

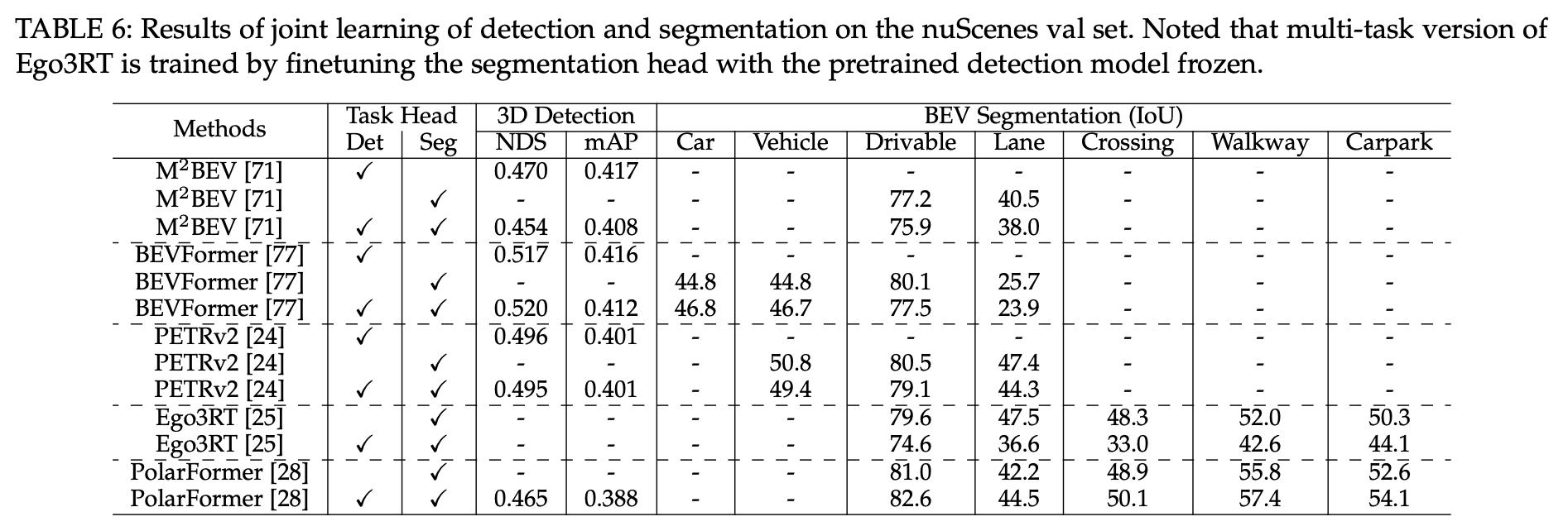

- M^2BEV: Multi-Camera Joint 3D Detection and Segmentation with Unified Bird’s-Eye View Representation (Arxiv'22) [paper] [project page]

- StretchBEV: Stretching Future Instance Prediction Spatially and Temporally (ECCV'22) [paper] [project page]

- DfM: Monocular 3D Object Detection with Depth from Motion (ECCV'22) [paper] [project page]

- BEVDet4D: Exploit Temporal Cues in Multi-camera 3D Object Detection (Arxiv'22) [paper] [project page]

- BEVerse: Unified Perception and Prediction in Birds-Eye-View for Vision-Centric Autonomous Driving (Arxiv'22) [paper] [project page]

- MV-FCOS3D++: Multi-View Camera-Only 4D Object Detection with Pretrained Monocular Backbones (Arxiv'22) [paper] [project page]

- BEV: Putting People in their Place: Monocular Regression of 3D People in Depth (CVPR'22) [paper] [project page] [code] [video] [RH dataset]
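Depth-based methods such as Lift, Splat, Shoot and CaDDN predict a categorical depth distribution for each pixel and take its outer product with the pixel's image feature, then pool the resulting frustum points into BEV cells. The following is a minimal plain-Python sketch of that "lift" step plus a sum-pooling "splat", assuming the pixel-to-BEV-cell assignment `cell_index` has already been computed from camera geometry. The function names are hypothetical; the real methods operate on batched tensors with efficient pooling kernels.

```python
import math

# Minimal "lift" and "splat" sketch in the spirit of Lift, Splat, Shoot / CaDDN.
# Assumptions (hypothetical, for illustration): features are plain lists per pixel,
# and `cell_index` (which BEV cell each pixel's d-th depth bin lands in) has already
# been computed from the camera intrinsics/extrinsics.

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def lift(depth_logits, context):
    """Per pixel, take the outer product of the categorical depth distribution
    (D bins) and the context feature (C channels), giving a D x C frustum."""
    frustum = []
    for logits, feat in zip(depth_logits, context):
        probs = softmax(logits)
        frustum.append([[p * c for c in feat] for p in probs])
    return frustum


def splat(frustum, cell_index, num_cells):
    """Sum-pool every frustum point into its BEV cell; points mapped to None
    (outside the BEV grid) are dropped."""
    num_channels = len(frustum[0][0])
    bev = [[0.0] * num_channels for _ in range(num_cells)]
    for pixel, per_depth in enumerate(frustum):
        for d, feat in enumerate(per_depth):
            cell = cell_index[pixel][d]
            if cell is None:
                continue
            for ch, value in enumerate(feat):
                bev[cell][ch] += value
    return bev


# Two pixels, two depth bins, one channel; uniform depth logits split each
# feature evenly between its two depth bins.
frustum = lift([[0.0, 0.0], [0.0, 0.0]], [[2.0], [4.0]])
print(splat(frustum, [[0, 1], [1, None]], num_cells=2))  # [[1.0], [3.0]]
```

Because the depth probabilities sum to one, a pixel whose frustum stays inside the grid contributes exactly its feature mass to the BEV map, just spread across the cells along its ray.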

Chronological Overview:

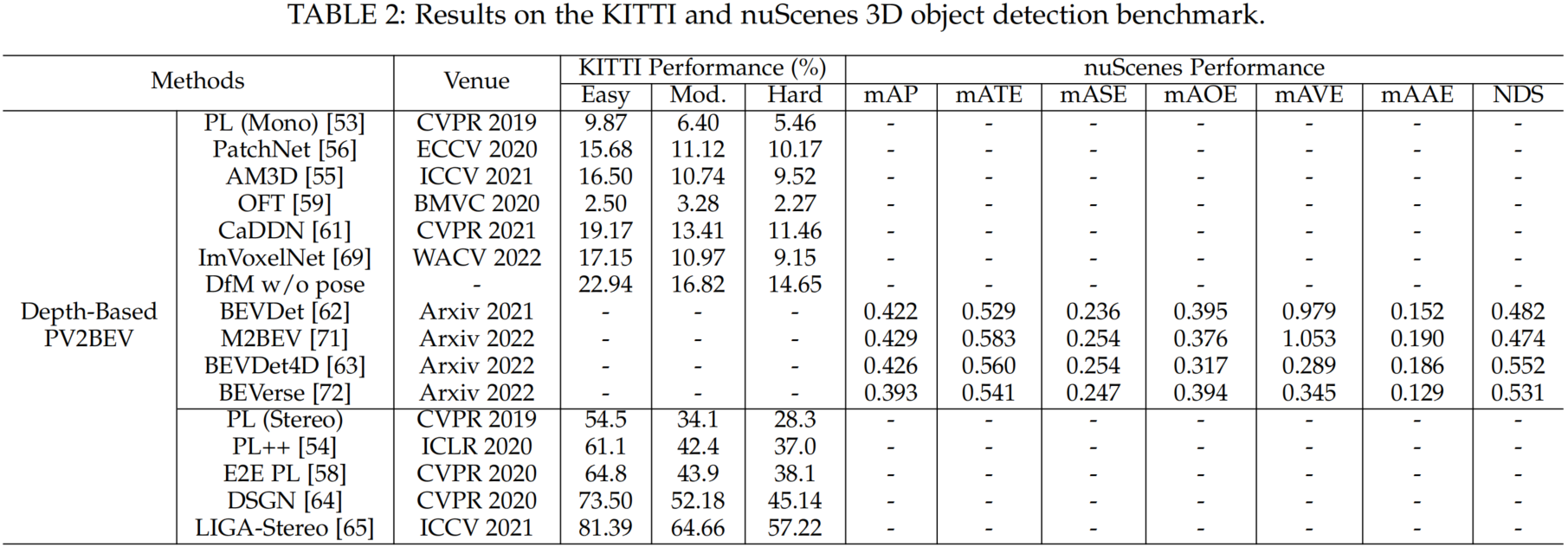

Benchmark Results:

MLP-based PV2BEV:

Public Papers:

- VED: Monocular Semantic Occupancy Grid Mapping with Convolutional Variational Encoder-Decoder Networks (RA-L'19) [paper] [project page]

- VPN: Cross-view Semantic Segmentation for Sensing Surroundings (IROS'20) [paper] [project page]

- FishingNet: Future Inference of Semantic Heatmaps In Grids (Arxiv'20) [paper]

- PON: Predicting Semantic Map Representations from Images using Pyramid Occupancy Networks (CVPR'20) [paper] [project page]

- STA-ST: Enabling spatio-temporal aggregation in Birds-Eye-View Vehicle Estimation (ICRA'21) [paper]

- HDMapNet: An Online HD Map Construction and Evaluation Framework (ICRA'22) [paper] [project page]

- Projecting Your View Attentively: Monocular Road Scene Layout Estimation via Cross-view Transformation (CVPR'21) [paper] [project page]

- HFT: Lifting Perspective Representations via Hybrid Feature Transformation (Arxiv'22) [paper] [project page]
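MLP-based methods such as VPN learn the perspective-to-BEV mapping with fully connected layers instead of explicit camera geometry. The sketch below shows that idea in plain Python, loosely in the spirit of VPN's view transformer: each channel's flattened image map passes through a small MLP whose output is reshaped into a BEV map. The two-layer structure, the ReLU, sharing the MLP across channels, and all sizes are assumptions for illustration, not the exact configuration of any listed paper.

```python
# Minimal MLP-based view transform sketch. Assumptions: a two-layer MLP with ReLU,
# shared across channels, mapping a flattened H*W image map to a flattened
# bev_h*bev_w map. Function names are hypothetical.

def linear(x, weight, bias):
    """Fully connected layer; weight has shape out_dim x in_dim."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def mlp_view_transform(feature_map, w1, b1, w2, b2, bev_h, bev_w):
    """feature_map: C x H x W nested lists. Returns C x bev_h x bev_w.
    Because the layers are fully connected over all H*W positions, every BEV cell
    can draw on every image location; no camera geometry is used."""
    out = []
    for channel in feature_map:
        flat = [v for row in channel for v in row]
        hidden = relu(linear(flat, w1, b1))
        bev_flat = linear(hidden, w2, b2)
        assert len(bev_flat) == bev_h * bev_w, "w2 must output bev_h * bev_w values"
        out.append([bev_flat[r * bev_w:(r + 1) * bev_w] for r in range(bev_h)])
    return out


# A 1-channel 1x2 image map, an identity hidden layer and an output layer that
# swaps the two positions into a 2x1 BEV map.
identity, zeros = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]
swap = [[0.0, 1.0], [1.0, 0.0]]
print(mlp_view_transform([[[1.0, 2.0]]], identity, zeros, swap, zeros, bev_h=2, bev_w=1))
# [[[2.0], [1.0]]]
```

The trade-off this illustrates: the mapping is fully learnable and needs no calibration at inference, but the weight count grows with the product of image and BEV resolutions, and the learned mapping is tied to the camera setup seen during training.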

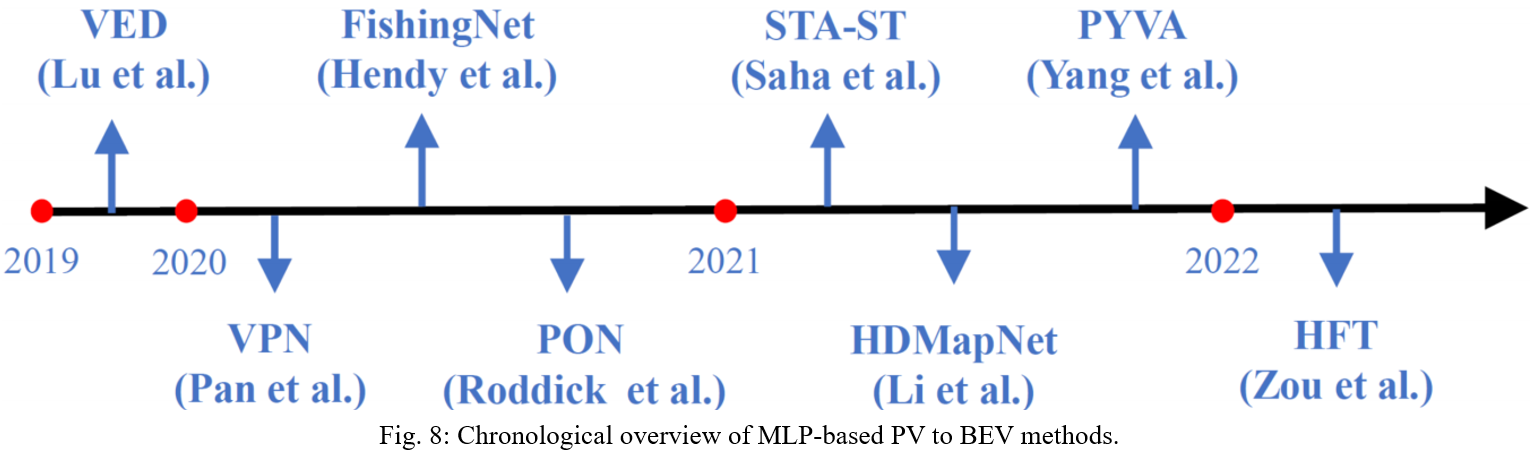

Chronological Overview:

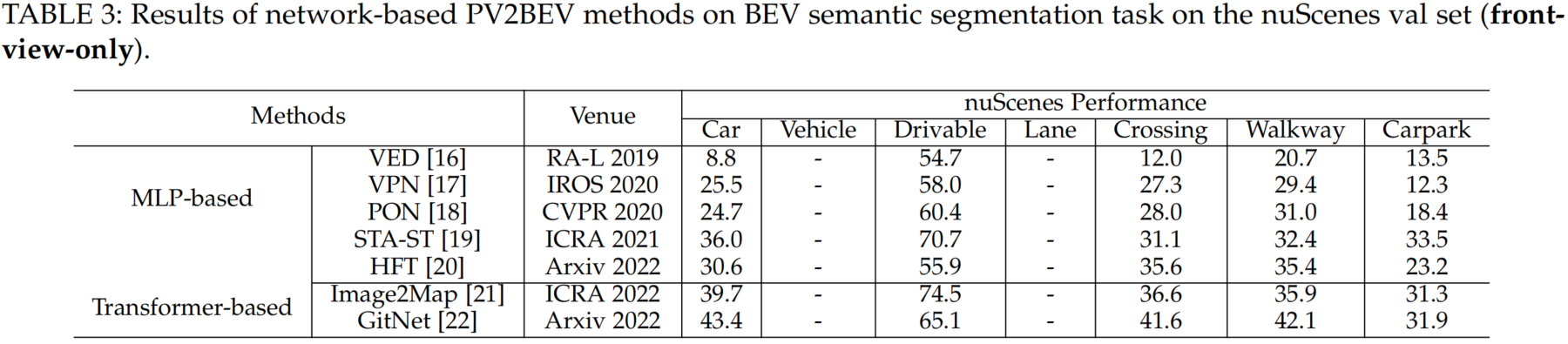

Benchmark Results:

Transformer-based PV2BEV:

Public Papers:

- STSU: Structured Bird’s-Eye-View Traffic Scene Understanding from Onboard Images (ICCV'21) [paper] [project page]

- Image2Map: Translating Images into Maps (ICRA'22) [paper] [project page]

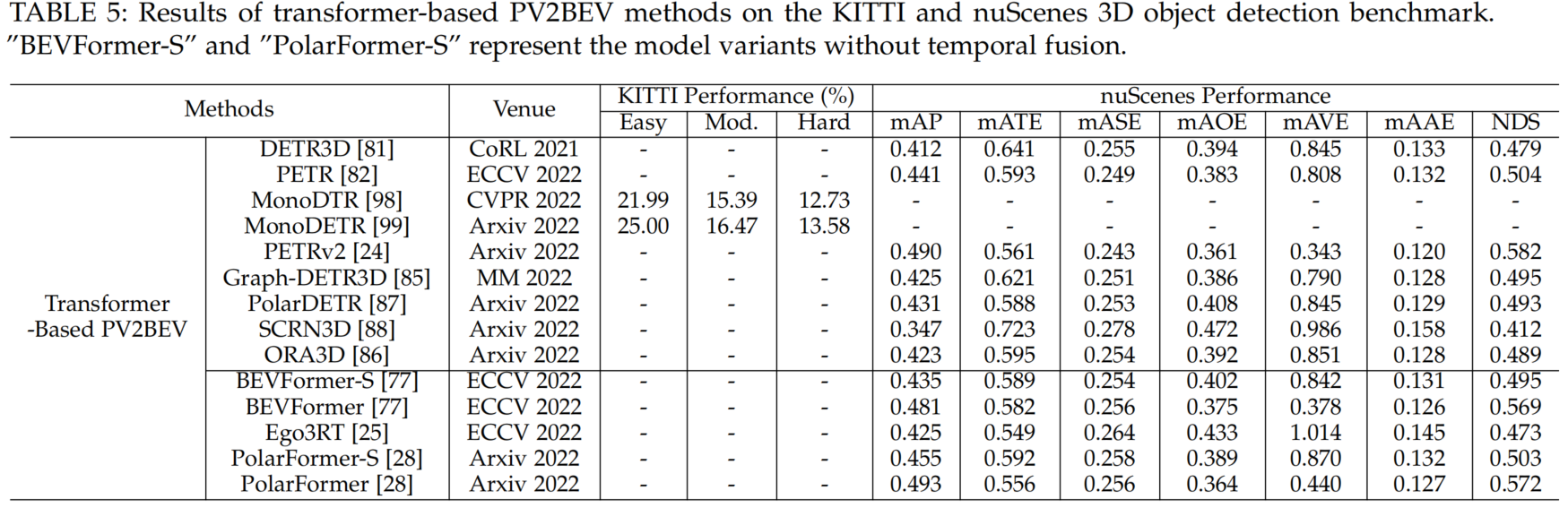

- DETR3D: 3D Object Detection from Multi-view Images via 3D-to-2D Queries (CoRL'21) [paper] [project page]

- TopologyPL: Topology Preserving Local Road Network Estimation from Single Onboard Camera Image (CVPR'22) [paper] [project page]

- PETR: Position Embedding Transformation for Multi-View 3D Object Detection (ECCV'22) [paper] [project page]

- BEVSegFormer: Bird's Eye View Semantic Segmentation From Arbitrary Camera Rigs (Arxiv'22) [paper]

- PersFormer: a New Baseline for 3D Laneline Detection (ECCV'22) [paper] [project page]

- MonoDTR: Monocular 3D Object Detection with Depth-Aware Transformer (CVPR'22) [paper] [project page]

- MonoDETR: Depth-guided Transformer for Monocular 3D Object Detection (Arxiv'22) [paper] [project page]

- BEVFormer: Learning Bird's-Eye-View Representation from Multi-Camera Images via Spatiotemporal Transformers (ECCV'22) [paper] [project page]

- GitNet: Geometric Prior-based Transformation for Birds-Eye-View Segmentation (ECCV'22) [paper]

- Graph-DETR3D: Rethinking Overlapping Regions for Multi-View 3D Object Detection (MM'22) [paper]

- CVT: Cross-view Transformers for real-time Map-view Semantic Segmentation (CVPR'22) [paper] [project page]

- PETRv2: A Unified Framework for 3D Perception from Multi-Camera Images (Arxiv'22) [paper] [project page]

- Ego3RT: Learning Ego 3D Representation as Ray Tracing (ECCV'22) [paper] [project page]

- GKT: Efficient and Robust 2D-to-BEV Representation Learning via Geometry-guided Kernel Transformer (Arxiv'22) [paper] [project page]

- PolarDETR: Polar Parametrization for Vision-based Surround-View 3D Detection (Arxiv'22) [paper] [project page]

- LaRa: Latents and Rays for Multi-Camera Bird’s-Eye-View Semantic Segmentation (Arxiv'22) [paper]

- SRCN3D: Sparse R-CNN 3D Surround-View Cameras 3D Object Detection and Tracking for Autonomous Driving (Arxiv'22) [paper] [project page]

- PolarFormer: Multi-camera 3D Object Detection with Polar Transformers (Arxiv'22) [paper] [project page]

- ORA3D: Overlap Region Aware Multi-view 3D Object Detection (Arxiv'22) [paper]

- CoBEVT: Cooperative Bird's Eye View Semantic Segmentation with Sparse Transformers (Arxiv'22) [paper]
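Query-based detectors such as DETR3D avoid building a dense BEV map: each object query yields a 3D reference point, which is projected into every camera, and image features are sampled at the projected locations. The following plain-Python sketch illustrates only that projection-and-sampling step, assuming single-channel, single-scale feature maps, known 3x4 projection matrices, and simple averaging over the views that see the point. The helper names are hypothetical, and the learned query refinement is omitted.

```python
import math

# Minimal sketch of the 3D-to-2D query step used by DETR3D-style detectors: a 3D
# reference point is projected into every camera, image features are bilinearly
# sampled there, and the samples from views that actually see the point are averaged.
# Single-channel, single-scale feature maps and the helper names are assumptions.

def project(P, point):
    """P: 3x4 projection matrix (intrinsics @ [R | t]); returns (u, v) or None if behind."""
    x, y, z = point
    u, v, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in P)
    if w <= 1e-6:
        return None
    return u / w, v / w


def bilinear(fmap, u, v):
    """Bilinear sample of a list-of-rows map; None if the 2x2 neighbourhood is out of bounds."""
    h, w = len(fmap), len(fmap[0])
    u0, v0 = math.floor(u), math.floor(v)
    if u0 < 0 or v0 < 0 or u0 + 1 >= w or v0 + 1 >= h:
        return None
    du, dv = u - u0, v - v0
    top = fmap[v0][u0] * (1 - du) + fmap[v0][u0 + 1] * du
    bottom = fmap[v0 + 1][u0] * (1 - du) + fmap[v0 + 1][u0 + 1] * du
    return top * (1 - dv) + bottom * dv


def sample_reference_point(point, cameras):
    """cameras: list of (P, fmap) pairs. Returns the mean feature over valid views,
    or 0.0 if no camera sees the point."""
    values = []
    for P, fmap in cameras:
        uv = project(P, point)
        if uv is None:
            continue
        value = bilinear(fmap, *uv)
        if value is not None:
            values.append(value)
    return sum(values) / len(values) if values else 0.0


# Front camera sees the point; a camera rotated 180 degrees about its y-axis does not.
front = [[10, 0, 2, 0], [0, 10, 2, 0], [0, 0, 1, 0]]
rear = [[-10, 0, -2, 0], [0, 10, -2, 0], [0, 0, -1, 0]]
ramp = [[float(u) for u in range(5)] for _ in range(5)]  # feature value = column index
print(sample_reference_point((0.05, 0.0, 1.0), [(front, ramp), (rear, ramp)]))  # 2.5
```

Averaging only over valid views is what lets a single query gather evidence from whichever cameras overlap its location, the same overlap issue that Graph-DETR3D and ORA3D revisit.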

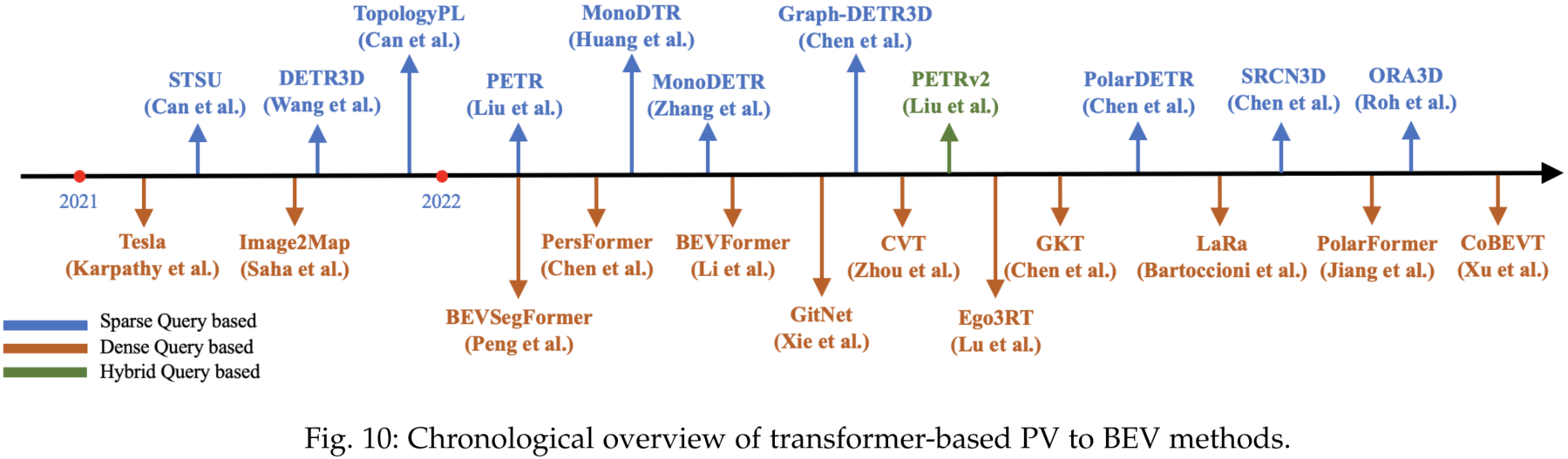

Chronological Overview:

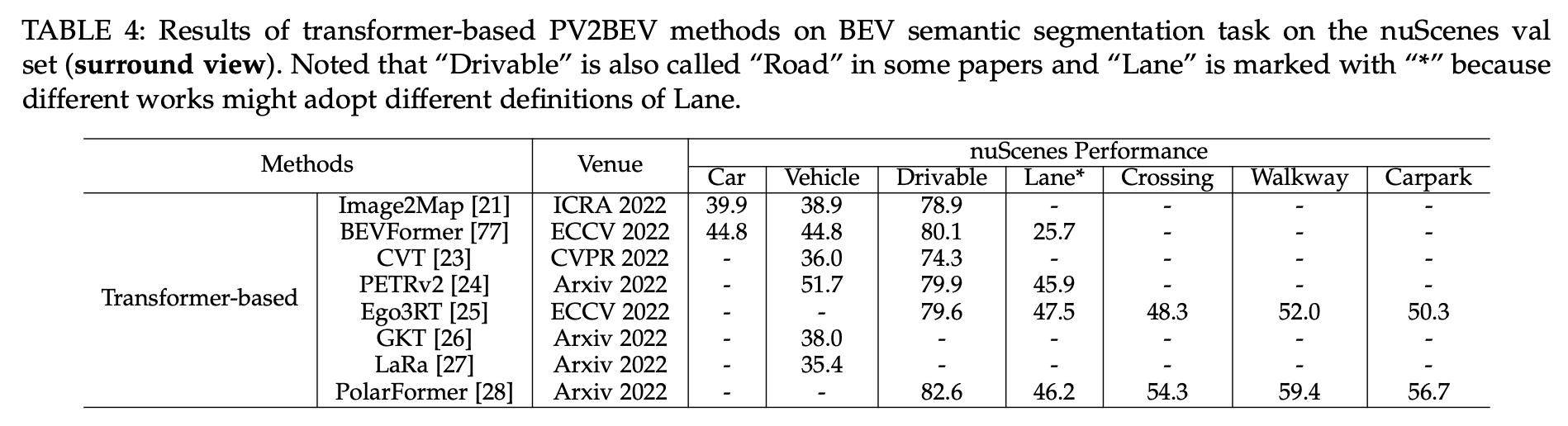

Benchmark Results:

Multi-Task Learning:

- FIERY: Future Instance Prediction in Bird’s-Eye View from Surround Monocular Cameras (ICCV'21) [paper] [project page]

- StretchBEV: Stretching Future Instance Prediction Spatially and Temporally (ECCV'22) [paper] [project page]

- BEVerse: Unified Perception and Prediction in Birds-Eye-View for Vision-Centric Autonomous Driving (Arxiv'22) [paper] [project page]

- M^2BEV: Multi-Camera Joint 3D Detection and Segmentation with Unified Bird’s-Eye View Representation (Arxiv'22) [paper] [project page]

- STSU: Structured Bird’s-Eye-View Traffic Scene Understanding from Onboard Images (ICCV'21) [paper] [project page]

- BEVFormer: Learning Bird's-Eye-View Representation from Multi-Camera Images via Spatiotemporal Transformers (ECCV'22) [paper] [project page]

- Ego3RT: Learning Ego 3D Representation as Ray Tracing (ECCV'22) [paper] [project page]

- PETRv2: A Unified Framework for 3D Perception from Multi-Camera Images (Arxiv'22) [paper] [project page]

- PolarFormer: Multi-camera 3D Object Detection with Polar Transformers (Arxiv'22) [paper] [project page]

Multi-Modality Fusion:

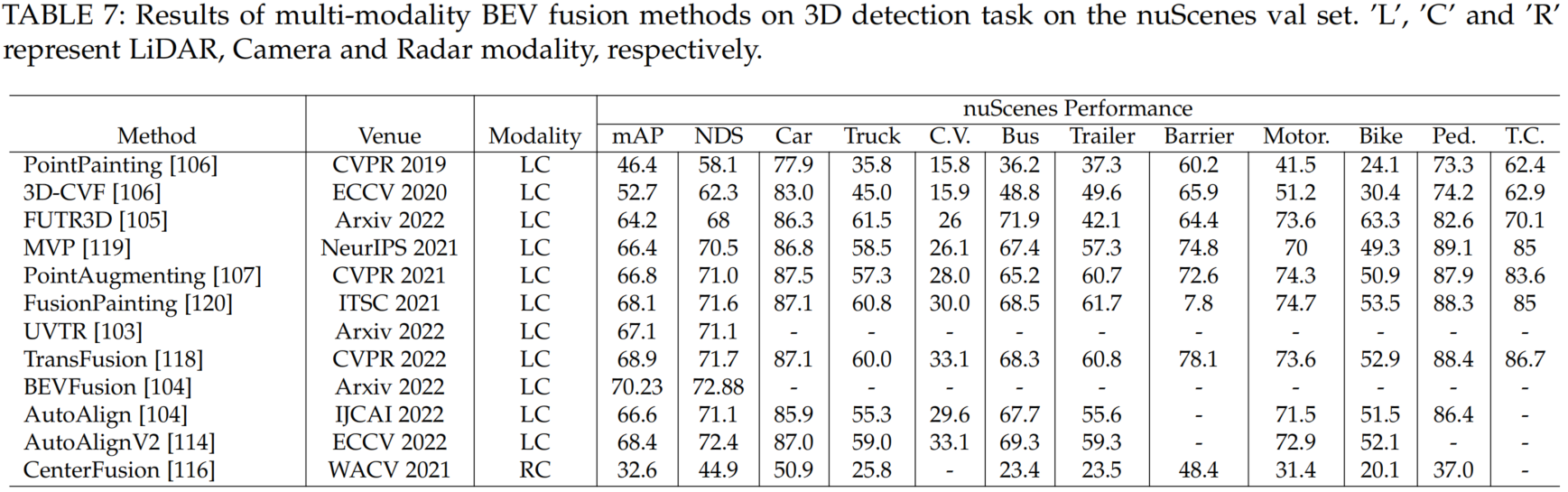

- PointPainting: Sequential Fusion for 3D Object Detection (CVPR'20) [paper] [project page]

- 3D-CVF: Generating Joint Camera and LiDAR Features Using Cross-View Spatial Feature Fusion for 3D Object Detection (ECCV'20) [paper] [project page]

- FUTR3D: A Unified Sensor Fusion Framework for 3D Detection (Arxiv'22) [paper] [project page]

- MVP: Multimodal Virtual Point 3D Detection (NeurIPS'21) [paper] [project page]

- PointAugmenting: Cross-Modal Augmentation for 3D Object Detection (CVPR'21) [paper] [project page]

- FusionPainting: Multimodal Fusion with Adaptive Attention for 3D Object Detection (ITSC'21) [paper] [project page]

- UVTR: Unifying Voxel-based Representation with Transformer for 3D Object Detection (Arxiv'22) [paper] [project page]

- TransFusion: Robust LiDAR-Camera Fusion for 3D Object Detection with Transformers (CVPR'22) [paper] [project page]

- AutoAlign: Pixel-Instance Feature Aggregation for Multi-Modal 3D Object Detection (IJCAI'22) [paper] [project page]

- AutoAlignV2: Deformable Feature Aggregation for Dynamic Multi-Modal 3D Object Detection (ECCV'22) [paper] [project page]

- CenterFusion: Center-based Radar and Camera Fusion for 3D Object Detection (WACV'21) [paper] [project page]

- MSMDFusion: Fusing LiDAR and Camera at Multiple Scales with Multi-Depth Seeds for 3D Object Detection (Arxiv'22) [paper] [project page]
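PointPainting is a sequential fusion scheme: an image segmentation network runs first, and each LiDAR point is then "painted" with the class scores of the pixel it projects onto before being passed to a LiDAR detector. The plain-Python sketch below shows the painting step under stated assumptions: a single camera, a known 3x4 LiDAR-to-pixel projection matrix, nearest-pixel lookup, and all-zero scores for points the camera does not see. `paint_points` is a hypothetical name.

```python
import math

# Minimal PointPainting-style sketch: each LiDAR point is projected into the image
# and extended with the semantic segmentation scores of the pixel it lands on.
# Assumptions: a single camera, a known 3x4 LiDAR-to-pixel projection matrix,
# nearest-pixel lookup, and all-zero scores for points the camera does not see.

def paint_points(points, P, seg_scores):
    """points: list of (x, y, z); seg_scores: H x W x num_classes nested lists.
    Returns tuples (x, y, z, score_0, ..., score_{K-1})."""
    h, w = len(seg_scores), len(seg_scores[0])
    num_classes = len(seg_scores[0][0])
    painted = []
    for x, y, z in points:
        u, v, depth = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in P)
        scores = [0.0] * num_classes
        if depth > 1e-6:  # in front of the camera
            col, row = math.floor(u / depth), math.floor(v / depth)
            if 0 <= col < w and 0 <= row < h:
                scores = list(seg_scores[row][col])
        painted.append((x, y, z, *scores))
    return painted


P = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]  # toy projection: pixel = (x/z, y/z)
seg = [[[0.9, 0.1], [0.2, 0.8]],
       [[0.5, 0.5], [0.3, 0.7]]]  # 2x2 image, 2 classes
print(paint_points([(1.0, 0.0, 1.0), (0.0, 0.0, -1.0)], P, seg))
# [(1.0, 0.0, 1.0, 0.2, 0.8), (0.0, 0.0, -1.0, 0.0, 0.0)]
```

Since the painted points keep the original point-cloud format with a few extra channels, the downstream LiDAR detector only needs a wider input layer, which is the main appeal of this sequential design.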

Temporal Fusion:

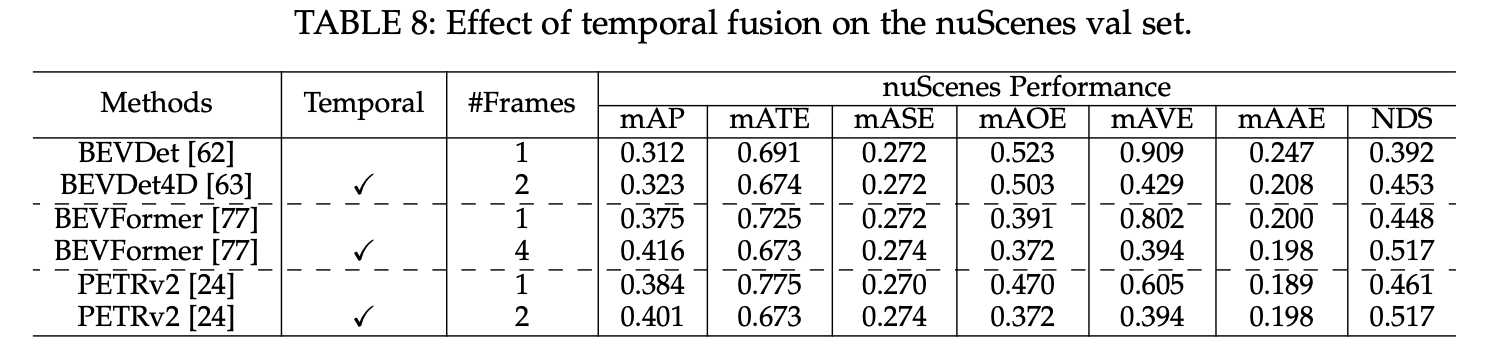

- BEVDet4D: Exploit Temporal Cues in Multi-camera 3D Object Detection (Arxiv'22) [paper] [project page]

- Image2Map: Translating Images into Maps (ICRA'22) [paper] [project page]

- FIERY: Future Instance Prediction in Bird’s-Eye View from Surround Monocular Cameras (ICCV'21) [paper] [project page]

- Ego3RT: Learning Ego 3D Representation as Ray Tracing (ECCV'22) [paper] [project page]

- PolarFormer: Multi-camera 3D Object Detection with Polar Transformers (Arxiv'22) [paper] [project page]

- BEVStitch: Understanding Bird’s-Eye View of Road Semantics using an Onboard Camera (ICRA'22) [paper] [project page]

- PETRv2: A Unified Framework for 3D Perception from Multi-Camera Images (Arxiv'22) [paper] [project page]

- BEVFormer: Learning Bird's-Eye-View Representation from Multi-Camera Images via Spatiotemporal Transformers (ECCV'22) [paper] [project page]

- UniFormer: Unified Multi-view Fusion Transformer for Spatial-Temporal Representation in Bird’s-Eye-View (Arxiv'22) [paper]

- DfM: Monocular 3D Object Detection with Depth from Motion (ECCV'22) [paper] [project page]
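Before BEV features from different timestamps can be fused, they must be expressed in the same ego frame; BEVDet4D, for example, aligns the previous frame's BEV features using ego-motion and concatenates them with the current ones. The plain-Python sketch below illustrates that align-then-concatenate pattern under stated assumptions: a 2D rigid transform `(yaw, tx, ty)` mapping current-frame coordinates to previous-frame coordinates, an ego-centred grid with x along columns and y along rows, nearest-neighbour resampling, and zero features where the previous frame has no data. The function names are hypothetical; tensor-based implementations typically use bilinear grid sampling instead.

```python
import math

# Minimal sketch of ego-motion alignment before temporal fusion. Assumptions:
# (yaw, tx, ty) maps current-frame coordinates to previous-frame coordinates,
# the grid is ego-centred with x along columns and y along rows, sampling is
# nearest-neighbour, and cells with no source data get zero features.

def align_previous_bev(prev_bev, yaw, tx, ty, res):
    h, w = len(prev_bev), len(prev_bev[0])
    channels = len(prev_bev[0][0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    aligned = []
    for row in range(h):
        out_row = []
        for col in range(w):
            # Metric centre of this cell in the current ego frame.
            x, y = (col - cx) * res, (row - cy) * res
            # Same location expressed in the previous ego frame.
            xp = cos_y * x - sin_y * y + tx
            yp = sin_y * x + cos_y * y + ty
            src_col, src_row = round(xp / res + cx), round(yp / res + cy)
            if 0 <= src_row < h and 0 <= src_col < w:
                out_row.append(list(prev_bev[src_row][src_col]))
            else:
                out_row.append([0.0] * channels)
        aligned.append(out_row)
    return aligned


def fuse_by_concat(curr_bev, aligned_prev):
    """Concatenate current and aligned previous features cell by cell."""
    return [[cur + prev for cur, prev in zip(cur_row, prev_row)]
            for cur_row, prev_row in zip(curr_bev, aligned_prev)]


# 3x3 grid with 0.5 m cells; the ego moved 0.5 m along +x between frames.
prev = [[[float(r * 3 + c)] for c in range(3)] for r in range(3)]
curr = [[[9.0] for _ in range(3)] for _ in range(3)]
fused = fuse_by_concat(curr, align_previous_bev(prev, yaw=0.0, tx=0.5, ty=0.0, res=0.5))
print(fused[1])  # [[9.0, 4.0], [9.0, 5.0], [9.0, 0.0]]
```

Without this alignment, a static object would appear to move by exactly the ego displacement between frames, so the fused features would mix ego-motion into the apparent motion of every object.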

Multi-agent Fusion:

- CoBEVT: Cooperative Bird's Eye View Semantic Segmentation with Sparse Transformers (Arxiv'22) [paper]

If you find our work useful in your research, please consider citing:

@inproceedings{Ma2022VisionCentricBP,
  title={Vision-Centric BEV Perception: A Survey},
  author={Yuexin Ma and Tai Wang and Xuyang Bai and Huitong Yang and Yuenan Hou and Yaming Wang and Y. Qiao and Ruigang Yang and Dinesh Manocha and Xinge Zhu},
  year={2022}
}

Please feel free to submit a pull request to add new papers or related project pages.