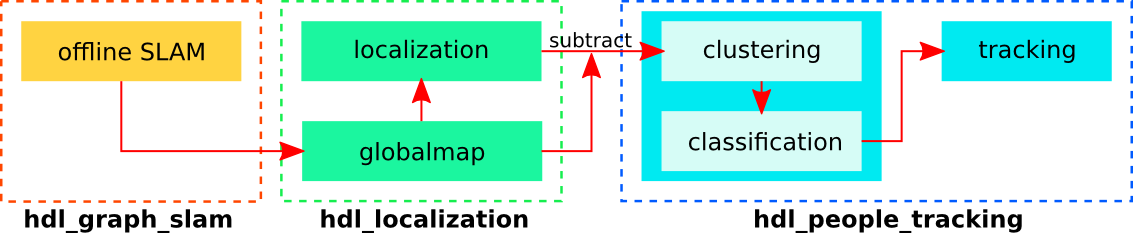

hdl_graph_slam is an open source ROS package for real-time 6DOF SLAM using a 3D LIDAR. It is based on 3D Graph SLAM with NDT scan matching-based odometry estimation and loop detection. It also supports several graph constraints, such as GPS, IMU acceleration (gravity vector), IMU orientation (magnetic sensor), and floor plane (detected in a point cloud). We have tested this package with Velodyne (HDL32e, VLP16) and RoboSense (16 channels) sensors in indoor and outdoor environments.

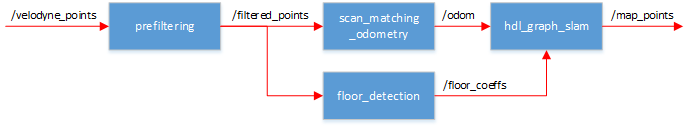

hdl_graph_slam consists of four nodelets.

- prefiltering_nodelet

- scan_matching_odometry_nodelet

- floor_detection_nodelet

- hdl_graph_slam_nodelet

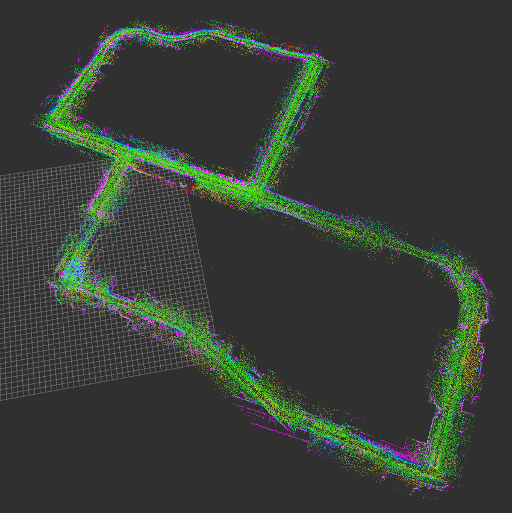

The input point cloud is first downsampled by prefiltering_nodelet and then passed to the next nodelets. While scan_matching_odometry_nodelet estimates the sensor pose by iteratively applying scan matching between consecutive frames (i.e., odometry estimation), floor_detection_nodelet detects floor planes by RANSAC. The estimated odometry and the detected floor planes are sent to hdl_graph_slam_nodelet. To compensate for the accumulated error of the scan matching, it performs loop detection and optimizes a pose graph that takes various constraints into account.
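The first stage of this pipeline, downsampling, can be pictured as a voxel-grid filter. The sketch below assumes voxel-centroid downsampling (the same idea as PCL's VoxelGrid filter): every point that falls into the same cubic cell is replaced by that cell's centroid. The function name and the `leaf_size` parameter are hypothetical and not part of hdl_graph_slam; the actual prefiltering_nodelet may use a different filter and may apply other filtering steps as well.

```python
import math
from collections import defaultdict


def voxel_grid_downsample(points, leaf_size):
    """Replace all points that fall into the same cubic voxel by their centroid.

    points: iterable of (x, y, z) tuples in meters.
    leaf_size: voxel edge length in meters (hypothetical name for the downsampling resolution).
    """
    if leaf_size <= 0:
        raise ValueError("leaf_size must be positive")
    # Per-voxel accumulator: [sum_x, sum_y, sum_z, count].
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        # Integer index of the voxel that contains this point.
        key = (math.floor(x / leaf_size), math.floor(y / leaf_size), math.floor(z / leaf_size))
        acc = sums[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    # One centroid per occupied voxel, in first-seen order.
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums.values()]
```

For example, `voxel_grid_downsample([(0.01, 0.01, 0.0), (0.03, 0.01, 0.0), (0.5, 0.5, 0.5)], 0.1)` keeps two points, because the first two inputs share one 10 cm voxel.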

You can enable or disable each constraint by changing parameters in the launch file, and you can also change the weight (`*_stddev`) and the robust kernel (`*_robust_kernel`) of each constraint.
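To make the role of `*_stddev` and `*_robust_kernel` more concrete, here is a minimal sketch of how a graph optimizer such as g2o typically turns them into a cost. It assumes the textbook convention of an isotropic information matrix equal to 1/σ² times the identity (hdl_graph_slam's exact scaling may differ) and a Huber kernel written the way g2o's `RobustKernelHuber` defines it on the squared error. All function names are hypothetical.

```python
import math


def information_matrix(stddev, dim):
    """Isotropic information matrix (inverse covariance) for a given standard deviation."""
    w = 1.0 / (stddev * stddev)
    return [[w if i == j else 0.0 for j in range(dim)] for i in range(dim)]


def chi2(residual, info):
    """Squared Mahalanobis error r^T * Omega * r."""
    n = len(residual)
    return sum(residual[i] * info[i][j] * residual[j] for i in range(n) for j in range(n))


def huber(e2, delta):
    """Huber robust kernel applied to a squared error e2.

    Quadratic (unchanged) below delta^2, then grows only linearly in sqrt(e2),
    which limits the influence of outlier edges such as false loop closures.
    """
    if e2 <= delta * delta:
        return e2
    return 2.0 * delta * math.sqrt(e2) - delta * delta
```

A residual of 1.0 with σ = 0.5 gives a squared error of 4.0; a Huber kernel with δ = 1.0 reduces its cost to 3.0, while a small squared error such as 0.25 passes through unchanged.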

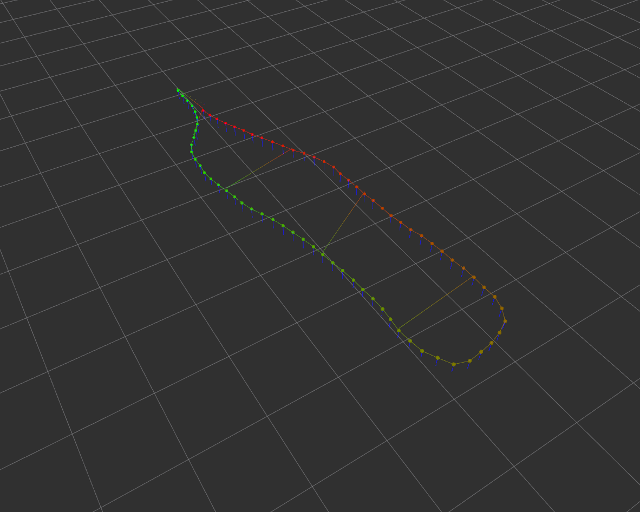

- Odometry

- Loop closure

- GPS

- /gps/geopoint (geographic_msgs/GeoPointStamped)

- /gps/navsat (sensor_msgs/NavSatFix)

- /gpsimu_driver/nmea_sentence (nmea_msgs/Sentence)

hdl_graph_slam supports several GPS message types. All the supported types contain latitude, longitude, and altitude. hdl_graph_slam converts them into UTM coordinates and adds them to the graph as 3D position constraints. If the altitude is set to NaN, the GPS data is treated as a 2D constraint. GeoPoint is the most basic type and consists of only (lat, lon, alt). Although NavSatFix provides much more information, we use only (lat, lon, alt) and ignore all other data. If you're using an HDL32e, you can directly connect hdl_graph_slam with velodyne_driver via /gpsimu_driver/nmea_sentence.
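The conversion into UTM coordinates can be illustrated with the classic Transverse Mercator series from Snyder's USGS manual "Map Projections: A Working Manual", evaluated on the WGS84 ellipsoid. This is a standalone sketch, not the package's code path (the package depends on the geodesy ROS package installed below); the function names are hypothetical and the Norway/Svalbard zone exceptions are ignored. `gps_constraint` mirrors the rule described above: a NaN altitude yields a 2D constraint.

```python
import math

# WGS84 ellipsoid constants.
A = 6378137.0                # semi-major axis [m]
F = 1.0 / 298.257223563      # flattening
E2 = F * (2.0 - F)           # first eccentricity squared
EP2 = E2 / (1.0 - E2)        # second eccentricity squared
K0 = 0.9996                  # UTM scale factor on the central meridian


def latlon_to_utm(lat_deg, lon_deg):
    """Convert WGS84 latitude/longitude in degrees to (zone, easting, northing) in meters."""
    zone = min(int((lon_deg + 180.0) // 6.0) + 1, 60)
    lon0 = math.radians((zone - 1) * 6 - 180 + 3)  # central meridian of the zone
    phi = math.radians(lat_deg)
    lam = math.radians(lon_deg)

    sin_phi, cos_phi, tan_phi = math.sin(phi), math.cos(phi), math.tan(phi)
    n = A / math.sqrt(1.0 - E2 * sin_phi ** 2)  # radius of curvature in the prime vertical
    t = tan_phi ** 2
    c = EP2 * cos_phi ** 2
    a = cos_phi * (lam - lon0)

    # Meridional arc length from the equator to latitude phi.
    m = A * ((1 - E2 / 4 - 3 * E2 ** 2 / 64 - 5 * E2 ** 3 / 256) * phi
             - (3 * E2 / 8 + 3 * E2 ** 2 / 32 + 45 * E2 ** 3 / 1024) * math.sin(2 * phi)
             + (15 * E2 ** 2 / 256 + 45 * E2 ** 3 / 1024) * math.sin(4 * phi)
             - (35 * E2 ** 3 / 3072) * math.sin(6 * phi))

    easting = K0 * n * (a + (1 - t + c) * a ** 3 / 6
                        + (5 - 18 * t + t ** 2 + 72 * c - 58 * EP2) * a ** 5 / 120) + 500000.0
    northing = K0 * (m + n * tan_phi * (a ** 2 / 2
                                        + (5 - t + 9 * c + 4 * c ** 2) * a ** 4 / 24
                                        + (61 - 58 * t + t ** 2 + 600 * c - 330 * EP2) * a ** 6 / 720))
    if lat_deg < 0:
        northing += 10000000.0  # false northing for the southern hemisphere
    return zone, easting, northing


def gps_constraint(lat, lon, alt):
    """Return a 2D (x, y) constraint if the altitude is NaN, otherwise a 3D (x, y, z) one."""
    _, x, y = latlon_to_utm(lat, lon)
    if math.isnan(alt):
        return (x, y)
    return (x, y, alt)
```

On a zone's central meridian at the equator, e.g. `latlon_to_utm(0.0, 3.0)`, the result is zone 31 with easting 500000 m and northing 0 m, the UTM false origin.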

- IMU acceleration (gravity vector)

- /gpsimu_driver/imu_data (sensor_msgs/Imu)

This constraint rotates each pose node so that the acceleration vector associated with the node becomes vertical (aligned with the gravity vector). This is useful to compensate for accumulated tilt rotation errors of the scan matching. Since acceleration caused by sensor motion is ignored, you should not give this constraint a large weight.
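A minimal sketch of the residual that a gravity-vector constraint penalizes: the measured acceleration, rotated into the map frame by the node's orientation, should point straight up. It assumes that a static accelerometer reports +g along the up axis (the reaction to gravity) and that the node orientation is a sensor-to-map rotation matrix; the function names are hypothetical, and hdl_graph_slam's actual g2o edge may be formulated differently. Because motion-induced acceleration also tilts the measured vector, this residual is noisy while the sensor accelerates, which is why a small weight is advisable.

```python
import math


def rot_x(angle):
    """Rotation matrix about the x axis (row-major nested lists)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def gravity_residual(rotation, acc):
    """Tilt error (rad) between the rotated acceleration direction and the map's +z axis.

    rotation: 3x3 node orientation (sensor frame -> map frame).
    acc: accelerometer reading in the sensor frame (assumed to point up at rest).
    """
    norm = math.sqrt(sum(a * a for a in acc))
    if norm == 0.0:
        raise ValueError("acceleration vector must be non-zero")
    up = mat_vec(rotation, [a / norm for a in acc])
    # atan2(horizontal part, vertical part) stays well conditioned near zero error.
    return math.atan2(math.hypot(up[0], up[1]), up[2])
```

With a sensor tilted 0.1 rad about x, `acc = mat_vec(rot_x(-0.1), [0.0, 0.0, 9.81])` yields a residual of about 0.1 rad for an untilted node (`rot_x(0.0)`) and about 0 for the correctly tilted node (`rot_x(0.1)`).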

- IMU orientation (magnetic sensor)

- /gpsimu_driver/imu_data (sensor_msgs/Imu)

If your IMU has a reliable magnetic orientation sensor, you can add orientation data to the graph as 3D rotation constraints. Note that magnetic orientation sensors can be affected by external magnetic disturbances. In such cases, this constraint should be disabled.
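As a sketch of what a 3D rotation constraint measures, the angle of the relative rotation between a node's estimated orientation and the IMU orientation can be computed from quaternions. The (w, x, y, z) component order, the assumption of unit quaternions, and the function names are choices made for this example (geometry_msgs/Quaternion, used by sensor_msgs/Imu, stores the fields as x, y, z, w); hdl_graph_slam's own g2o edge may parameterize the error differently. A magnetic disturbance shows up here as a large, spurious residual, which is why the constraint should be disabled in such environments.

```python
import math


def quat_mul(p, q):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (pw * qw - px * qx - py * qy - pz * qz,
            pw * qx + px * qw + py * qz - pz * qy,
            pw * qy - px * qz + py * qw + pz * qx,
            pw * qz + px * qy - py * qx + pz * qw)


def quat_conj(q):
    """Conjugate, which equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def orientation_residual(q_node, q_imu):
    """Smallest rotation angle (rad) between two unit quaternions (w, x, y, z)."""
    w, x, y, z = quat_mul(quat_conj(q_node), q_imu)
    # abs(w) folds q and -q (the same rotation) onto one result in [0, pi].
    return 2.0 * math.atan2(math.sqrt(x * x + y * y + z * z), abs(w))


def yaw_quat(yaw):
    """Unit quaternion for a rotation of `yaw` radians about the z axis."""
    return (math.cos(yaw / 2.0), 0.0, 0.0, math.sin(yaw / 2.0))
```

Near the ±180° wrap-around, `orientation_residual(yaw_quat(math.radians(170)), yaw_quat(math.radians(-170)))` reports 20° (in radians) rather than 340°.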

- Floor plane

- /floor_detection/floor_coeffs (hdl_graph_slam/FloorCoeffs)

This constraint optimizes the graph so that the floor planes (detected by RANSAC) of the pose nodes become the same. It is designed to compensate for the accumulated rotation error of the scan matching in large, flat indoor environments.
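The floor planes used by this constraint come from RANSAC in floor_detection_nodelet. The sketch below is a plain RANSAC plane fit in pure Python, assuming the floor is described by plane coefficients (a, b, c, d) with a unit, upward-pointing normal so that a*x + b*y + c*z + d = 0. The function names, the inlier threshold, and the iteration count are hypothetical, and the actual nodelet may apply extra checks (for example on how far the normal tilts from vertical) before publishing floor_coeffs.

```python
import math
import random


def plane_from_points(p1, p2, p3):
    """Plane (a, b, c, d) with a unit normal through three points, or None if they are collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None
    n = [c / norm for c in n]
    if n[2] < 0:  # orient the normal upward so floor coefficients are comparable
        n = [-c for c in n]
    d = -sum(n[i] * p1[i] for i in range(3))
    return (n[0], n[1], n[2], d)


def ransac_plane(points, threshold, iterations=200, seed=0):
    """Return (plane, inliers) for the sampled plane with the most points within `threshold`."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        # Minimal sample: three random points define a candidate plane.
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        # Inliers are points whose distance to the plane is below the threshold.
        inliers = [p for p in points if abs(a * p[0] + b * p[1] + c * p[2] + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

On a 10 x 10 grid of points at z = 0 plus a few points stacked above it, `ransac_plane(points, threshold=0.05)` returns a plane close to (0, 0, 1, 0) with the 100 grid points as inliers.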

All the configurable parameters are listed in launch/hdl_graph_slam.launch as ros params.

- /hdl_graph_slam/dump (hdl_graph_slam/DumpGraph)

- save all the internal data (point clouds, floor coeffs, odoms, and pose graph) to a directory.

- /hdl_graph_slam/save_map (hdl_graph_slam/SaveMap)

- save the generated map as a PCD file.

hdl_graph_slam requires the following libraries:

- OpenMP

- PCL

- g2o

- suitesparse

The following ROS packages are required:

For ROS melodic:

sudo apt-get install ros-melodic-geodesy ros-melodic-pcl-ros ros-melodic-nmea-msgs ros-melodic-libg2o

cd catkin_ws/src

git clone https://github.com/koide3/ndt_omp.git -b melodic

git clone https://github.com/SMRT-AIST/fast_gicp.git --recursive

git clone https://github.com/koide3/hdl_graph_slam

cd .. && catkin_make -DCMAKE_BUILD_TYPE=Release

For ROS noetic:

sudo apt-get install ros-noetic-geodesy ros-noetic-pcl-ros ros-noetic-nmea-msgs ros-noetic-libg2o

cd catkin_ws/src

git clone https://github.com/koide3/ndt_omp.git

git clone https://github.com/SMRT-AIST/fast_gicp.git --recursive

git clone https://github.com/koide3/hdl_graph_slam

cd .. && catkin_make -DCMAKE_BUILD_TYPE=Release

[optional] bag_player.py script requires ProgressBar2.

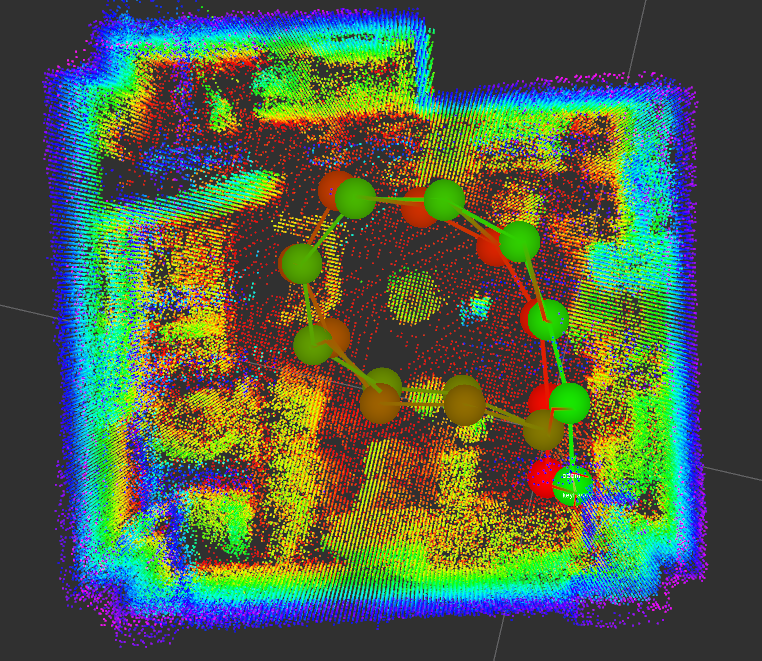

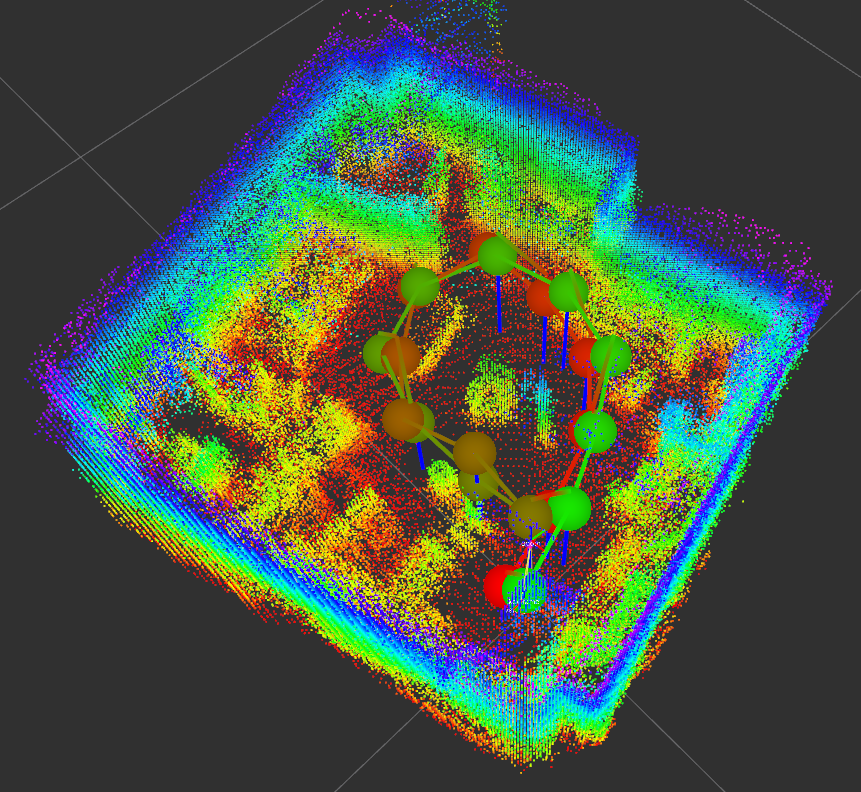

sudo pip install ProgressBar2

Bag file (recorded in a small room):

- hdl_501.bag.tar.gz (raw data, 344MB)

- hdl_501_filtered.bag.tar.gz (downsampled data, 57MB, Recommended!)

rosparam set use_sim_time true

roslaunch hdl_graph_slam hdl_graph_slam_501.launch

roscd hdl_graph_slam/rviz

rviz -d hdl_graph_slam.rviz

rosbag play --clock hdl_501_filtered.bag

We also provide bag_player.py which automatically adjusts the playback speed and processes data as fast as possible.

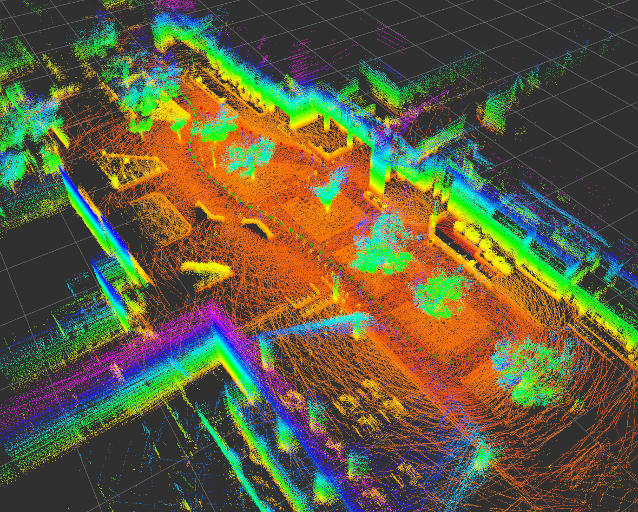

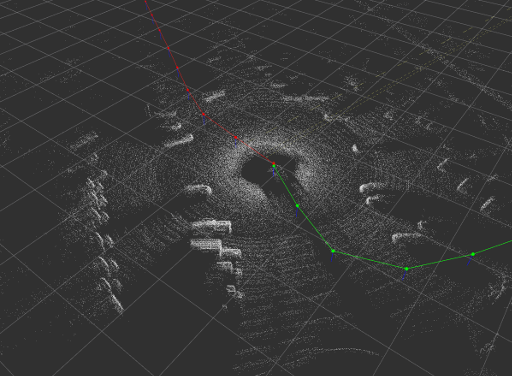

rosrun hdl_graph_slam bag_player.py hdl_501_filtered.bag

You'll see a point cloud like:

You can save the generated map by:

rosservice call /hdl_graph_slam/save_map "resolution: 0.05

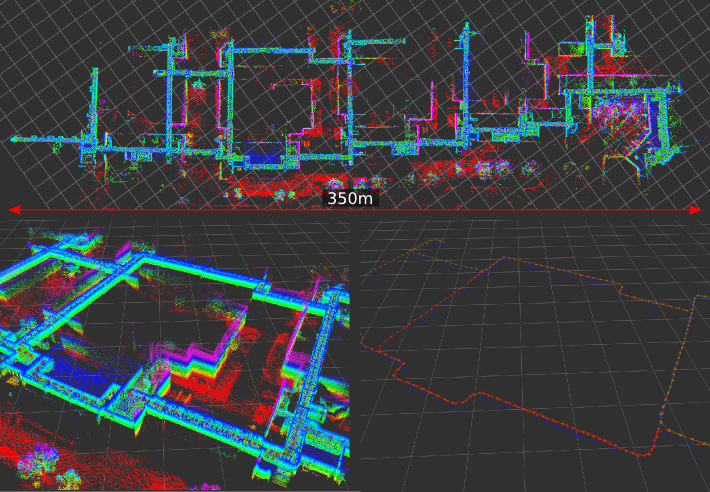

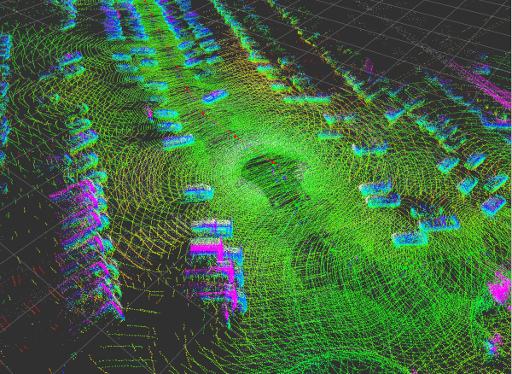

destination: '/full_path_directory/map.pcd'"Bag file (recorded in an outdoor environment):

- hdl_400.bag.tar.gz (raw data, about 900MB)

rosparam set use_sim_time true

roslaunch hdl_graph_slam hdl_graph_slam_400.launch

roscd hdl_graph_slam/rviz

rviz -d hdl_graph_slam.rviz

rosbag play --clock hdl_400.bag

Ford Campus Vision and Lidar Data Set [URL]

The following script converts the Ford Lidar Dataset to a rosbag and plays it. In this example, hdl_graph_slam utilizes the GPS data to correct the pose graph.

cd IJRR-Dataset-2

rosrun hdl_graph_slam ford2bag.py dataset-2.bag

rosrun hdl_graph_slam bag_player.py dataset-2.bag

- Define the transformation between your sensors (LIDAR, IMU, GPS) and the base_link of your system using static_transform_publisher (see line #11, hdl_graph_slam.launch). All the sensor data will be transformed into the common base_link frame and then fed to the SLAM algorithm.

- Remap the point cloud topic of prefiltering_nodelet, for example:

<node pkg="nodelet" type="nodelet" name="prefiltering_nodelet" ...

<remap from="/velodyne_points" to="/rslidar_points"/>

...

The mapping quality largely depends on the parameter settings. In particular, scan matching parameters have a big impact on the result. Tune the parameters according to the following instructions:

- registration_method [updated]: In short, use FAST_GICP for most cases, and FAST_VGICP or NDT_OMP if processing speed matters. This parameter selects the registration method used for odometry estimation and loop detection. Note that GICP in PCL 1.7 (ROS kinetic) or earlier has a bug in the initial guess handling. If you are on ROS kinetic or earlier, do not use GICP.

- ndt_resolution: This parameter determines the voxel size of NDT. Typically, larger values work better for outdoor environments (0.5 - 2.0 [m] for indoor, 2.0 - 10.0 [m] for outdoor). If you choose NDT or NDT_OMP, tweak this parameter to obtain a good odometry estimation result.

- other parameters: All the configurable parameters are available in the launch file. Copy a template launch file (hdl_graph_slam_501.launch for indoor, hdl_graph_slam_400.launch for outdoor) and tweak the parameters in it to adapt it to your application.
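To see why ndt_resolution matters, recall that NDT divides the target point cloud into voxels of this size and summarizes each voxel by the mean and covariance of the points inside it; scan matching then aligns the source scan against these local Gaussians. The sketch below covers only that voxelization step, not the registration itself, and the function name and the `min_points` cutoff are assumptions for illustration.

```python
import math
from collections import defaultdict


def ndt_voxel_stats(points, resolution, min_points=3):
    """Group points into cubic voxels of edge `resolution`; return {voxel index: (mean, covariance)}.

    Voxels with fewer than `min_points` points are skipped because their covariance is unreliable.
    """
    cells = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / resolution) for c in p)
        cells[key].append(p)

    stats = {}
    for key, pts in cells.items():
        n = len(pts)
        if n < min_points:
            continue
        mean = [sum(p[i] for p in pts) / n for i in range(3)]
        # Unbiased sample covariance (n - 1 denominator).
        cov = [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in pts) / (n - 1)
                for j in range(3)] for i in range(3)]
        stats[key] = (mean, cov)
    return stats
```

With four points spaced 0.2 m apart, a resolution of 1.0 m puts them all into one voxel with a usable covariance, whereas 0.2 m leaves every voxel with a single point and nothing usable. This is the intuition behind choosing larger values outdoors, where LIDAR points are sparser.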

This package is released under the BSD-2-Clause License.

Note that the cholmod solver in g2o is licensed under the GPL. You may need to build g2o without the cholmod dependency to avoid the GPL.

Kenji Koide, Jun Miura, and Emanuele Menegatti, A Portable 3D LIDAR-based System for Long-term and Wide-area People Behavior Measurement, Advanced Robotic Systems, 2019 [link].

Kenji Koide, k.koide@aist.go.jp, https://staff.aist.go.jp/k.koide

Active Intelligent Systems Laboratory, Toyohashi University of Technology, Japan [URL]

Mobile Robotics Research Team, National Institute of Advanced Industrial Science and Technology (AIST), Japan [URL]