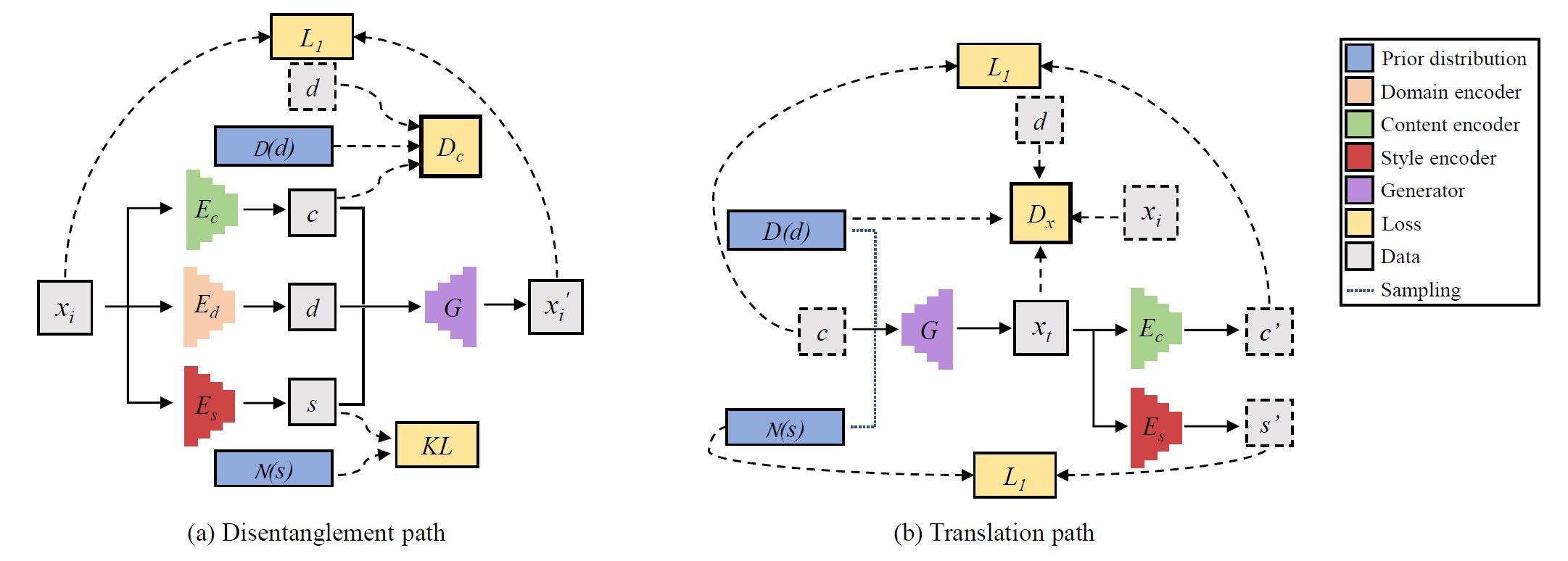

PyTorch implementation of our paper: "Multi-mapping Image-to-Image Translation via Learning Disentanglement".

You can install all the dependencies with:

```bash
pip install -r requirements.txt
```

Download and unzip preprocessed datasets by

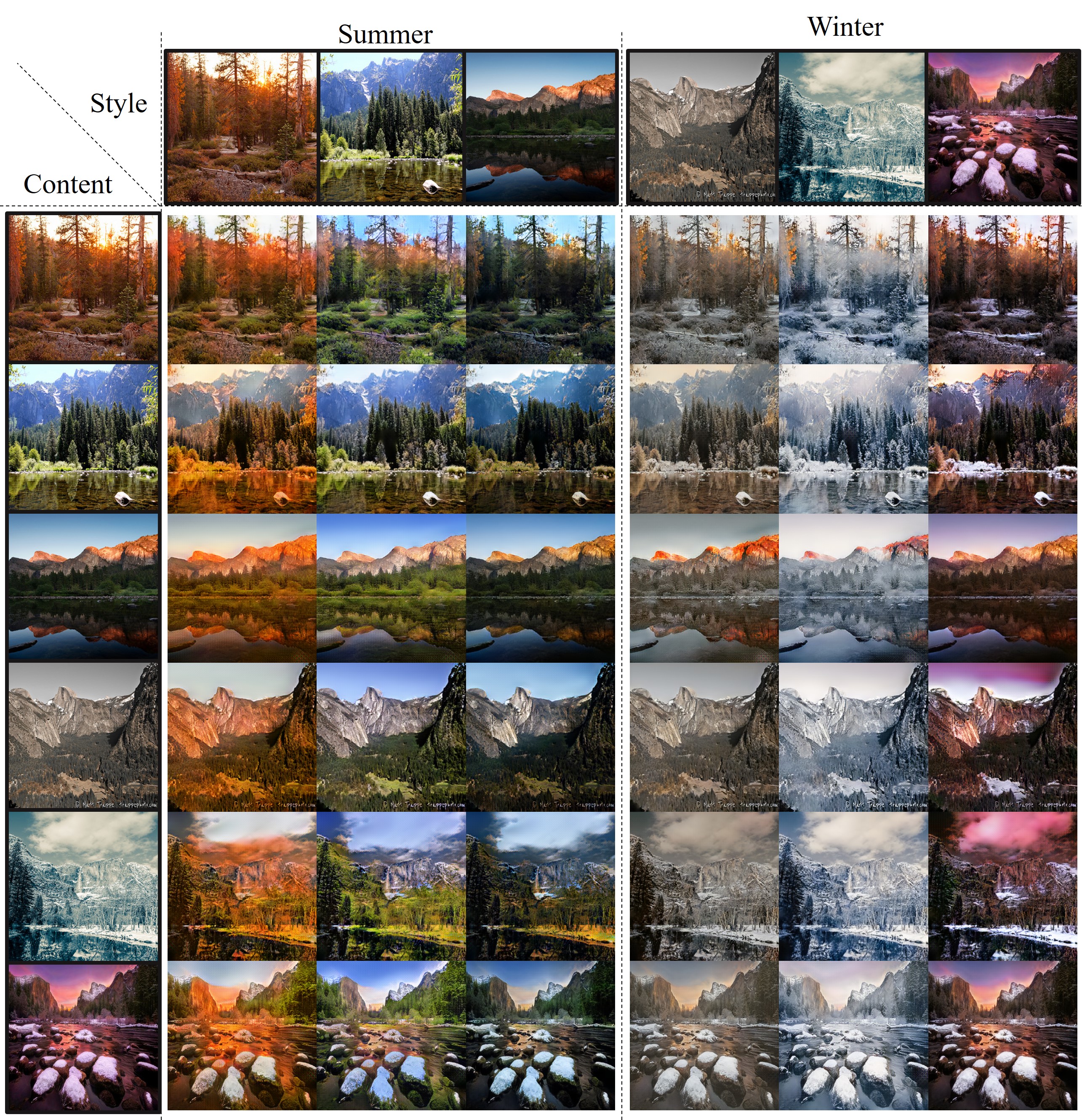

- Season Transfer

bash ./scripts/download_datasets.sh summer2winter_yosemite - Semantic Image Synthesis

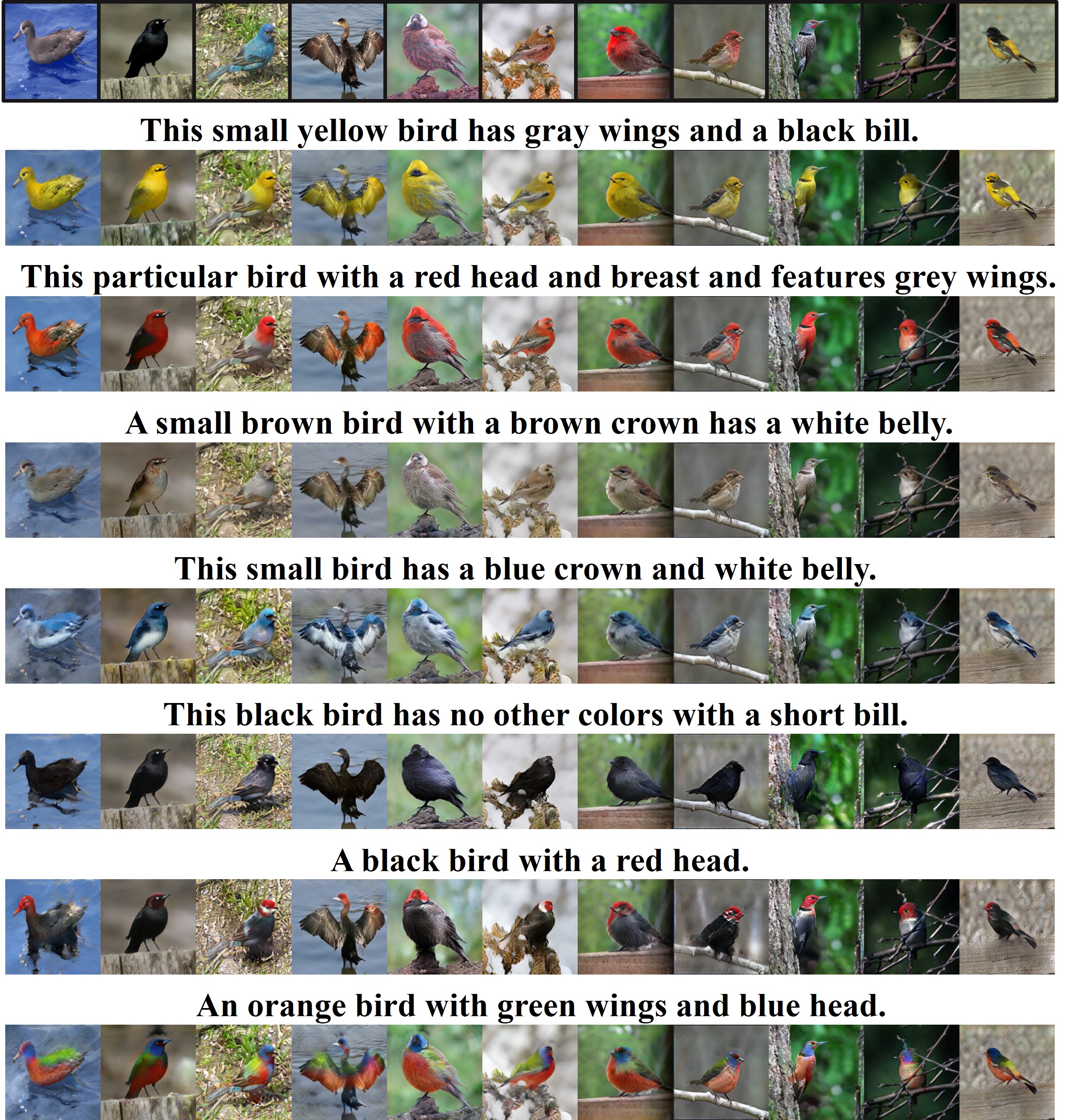

bash ./scripts/download_datasets.sh birds

- Season Transfer

-

Or you can manually download them from CycleGAN and AttnGAN.
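After downloading, a quick sanity check can confirm that a dataset was extracted where you expect it. This is a minimal sketch under stated assumptions: it assumes (hypothetically) that the download script extracts each dataset to `./datasets/<name>` and that the season-transfer data uses the CycleGAN-style `trainA`/`trainB`/`testA`/`testB` folders. The birds data from AttnGAN is organized differently, so `EXPECTED_SUBDIRS` would need adjusting for it. The function name `check_unpaired_dataset` is illustrative, not part of this repository.

```python
from pathlib import Path

# Assumed (hypothetical) layout: CycleGAN-style unpaired data, one folder per domain and split.
EXPECTED_SUBDIRS = ("trainA", "trainB", "testA", "testB")
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def check_unpaired_dataset(root):
    """Return {subdir: image_count}; raise if a subdir is missing or has no images.

    `root` is assumed to be something like ./datasets/summer2winter_yosemite.
    """
    root = Path(root)
    counts = {}
    for name in EXPECTED_SUBDIRS:
        sub = root / name
        if not sub.is_dir():
            raise FileNotFoundError(f"missing folder: {sub}")
        # Count regular files with an image extension (case-insensitive).
        n = sum(1 for p in sub.iterdir() if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)
        if n == 0:
            raise ValueError(f"no images found in {sub}")
        counts[name] = n
    return counts
```

For example, `check_unpaired_dataset("./datasets/summer2winter_yosemite")` returns the number of images in each folder, or raises if a folder is missing or empty.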

To train a model:

- Season Transfer

  ```bash
  bash ./scripts/train_season_transfer.sh
  ```

- Semantic Image Synthesis

  ```bash
  bash ./scripts/train_semantic_image_synthesis.sh
  ```

- To view training results and loss plots, run `python -m visdom.server` and open the URL http://localhost:8097. More intermediate results can be found in the visdom environment `exp_name`.

To test a model:

- Run the test script for the corresponding task:

  ```bash
  bash ./scripts/test_season_transfer.sh
  bash ./scripts/test_semantic_image_synthesis.sh
  ```

- The test results will be saved in the `checkpoints/{exp_name}/results` directory.

Pretrained models can be downloaded from Google Drive or from Baidu Wangpan (extraction code: 59tm).

You can implement your own `Dataset` and `SubModel` to start a new experiment.
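As a rough starting point for custom data, here is a hypothetical map-style dataset for unpaired two-domain images. It is not this repository's `Dataset` interface, which is not described here; it only implements `__len__` and `__getitem__`, which is what a PyTorch `DataLoader` needs from a map-style dataset. The class name, constructor arguments, and returned keys (`"A"`, `"B"`, `"path_A"`, `"path_B"`) are illustrative assumptions, so adapt them to the repository's base class before use.

```python
import random
from pathlib import Path


class UnpairedTwoDomainDataset:
    """Hypothetical map-style dataset that pairs images from two domains at random.

    Only __len__ and __getitem__ are implemented; the returned keys are
    illustrative and not the repository's actual interface.
    """

    def __init__(self, dir_a, dir_b, load_fn=None, suffixes=(".jpg", ".jpeg", ".png"), seed=None):
        self.paths_a = self._list_images(dir_a, suffixes)
        self.paths_b = self._list_images(dir_b, suffixes)
        if not self.paths_a or not self.paths_b:
            raise ValueError("both domains need at least one image")
        # load_fn turns a path into a sample (e.g. open an image and apply transforms);
        # by default the path itself is returned.
        self.load_fn = load_fn or (lambda p: p)
        self.rng = random.Random(seed)

    @staticmethod
    def _list_images(directory, suffixes):
        return sorted(p for p in Path(directory).iterdir()
                      if p.is_file() and p.suffix.lower() in suffixes)

    def __len__(self):
        # One epoch visits every image of the larger domain once.
        return max(len(self.paths_a), len(self.paths_b))

    def __getitem__(self, index):
        path_a = self.paths_a[index % len(self.paths_a)]
        # Domain B is sampled at random so the pairs are unaligned.
        path_b = self.paths_b[self.rng.randrange(len(self.paths_b))]
        return {"A": self.load_fn(path_a), "B": self.load_fn(path_b),
                "path_A": str(path_a), "path_B": str(path_b)}
```

With multiple `DataLoader` workers, each worker receives its own copy of `self.rng`, so you may want per-worker seeding if the B-side sampling should differ across workers.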

If this work is useful for your research, please consider citing:

```bibtex
@inproceedings{yu2019multi,
  title={Multi-mapping Image-to-Image Translation via Learning Disentanglement},
  author={Yu, Xiaoming and Chen, Yuanqi and Liu, Shan and Li, Thomas and Li, Ge},
  booktitle={Advances in Neural Information Processing Systems},
  year={2019}
}
```

The code used in this research is inspired by BicycleGAN, MUNIT, DRIT, AttnGAN, and SingleGAN.

The diversity regularization used in the current version is inspired by DSGAN and MSGAN.
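For reference, MSGAN's mode-seeking term encourages diversity by maximizing the ratio between the distance of two outputs generated from the same input and the distance of the latent codes that produced them. The sketch below follows that formulation, using mean absolute difference for both distances and minimizing `1 / (ratio + eps)` as the loss. It is a plain-Python illustration on flattened values, not the exact regularization implemented in this repository, which may differ in its distance measure and weighting; the function names are hypothetical.

```python
def mean_abs_diff(x, y):
    """Mean absolute difference between two equally sized, flattened sequences."""
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)


def mode_seeking_loss(out1, out2, z1, z2, eps=1e-5):
    """MSGAN-style diversity loss (a sketch, not necessarily this repo's exact loss).

    out1, out2: flattened generator outputs for the same input, produced with
    latent codes z1 and z2. A larger output distance per unit of latent distance
    gives a smaller loss, so minimizing it pushes the outputs apart.
    """
    ratio = mean_abs_diff(out1, out2) / mean_abs_diff(z1, z2)
    return 1.0 / (ratio + eps)
```

In a training loop the same computation would be written with tensor operations on the generator outputs so that it can be back-propagated. The ratio is undefined when `z1` and `z2` are identical, so the two codes should be sampled independently.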

Feel free to contact me if you have any questions (xiaomingyu@pku.edu.cn).