An RL benchmark that is easy to use, experiment with, and extend, with built-in visualization and comparison of results.

- Model-free
  - Value-based
    - DQN (2013)
    - Double DQN (2015)
    - Dueling DQN (2015)
  - Policy-based
    - Reinforce (1992)
    - Actor-Critic (2000)
    - TRPO (2015)
    - DDPG (2015)
    - A2C (2016)
    - A3C (2016)
    - ACKTR (2017)
    - PPO (2017)
    - SAC (2018)
    - TD3 (2018)
- Model-based

Use `python run.py -h` to see the available parameters and get help.

To run a demo, simply run `python run.py`.

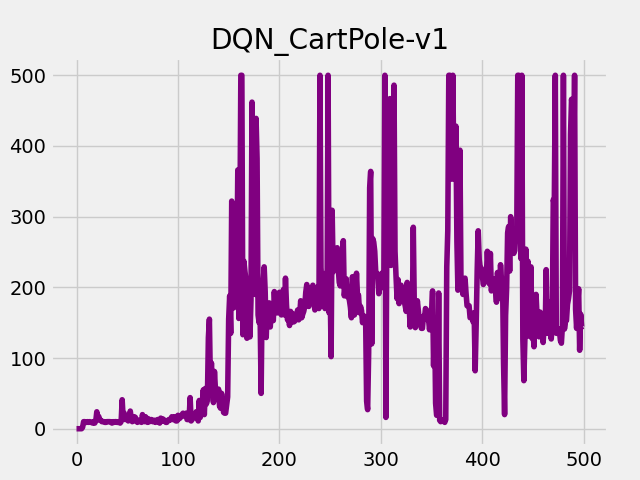

- Replay buffer: use `deque`.
- Target network: hard update (load the online network's state dict every N iterations).
- Only one hidden layer.
- The DQN update uses `gather` in PyTorch to pick the Q-value of each action actually taken.
- Data type: `torch.float` and `np.float`.
- Fixed epsilon (no decay schedule).
- `target_update=100` performs worse than `target_update=10`.
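The notes above can be sketched in PyTorch as follows. This is a minimal sketch, not this repository's code: the names `ReplayBuffer`, `QNet`, `dqn_update` and `take_action` are hypothetical, and the ReLU activation, MSE loss and vector-valued states (as in CartPole) are assumptions.

```python
import random
from collections import deque

import torch
import torch.nn as nn
import torch.nn.functional as F


class ReplayBuffer:
    """Hypothetical FIFO replay buffer backed by collections.deque."""

    def __init__(self, max_capacity):
        # maxlen makes the deque drop the oldest transition once it is full
        self.buffer = deque(maxlen=max_capacity)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        batch = random.sample(self.buffer, batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return (
            torch.tensor(states, dtype=torch.float),
            torch.tensor(actions, dtype=torch.long).view(-1, 1),
            torch.tensor(rewards, dtype=torch.float).view(-1, 1),
            torch.tensor(next_states, dtype=torch.float),
            torch.tensor(dones, dtype=torch.float).view(-1, 1),
        )

    def __len__(self):
        return len(self.buffer)


class QNet(nn.Module):
    """Q-network with a single hidden layer (ReLU is an assumption)."""

    def __init__(self, state_dim, hidden, action_dim):
        super().__init__()
        self.fc1 = nn.Linear(state_dim, hidden)
        self.fc2 = nn.Linear(hidden, action_dim)

    def forward(self, x):
        return self.fc2(F.relu(self.fc1(x)))


def take_action(q_net, state, epsilon, action_dim):
    """Epsilon-greedy action selection with a fixed epsilon."""
    if random.random() < epsilon:
        return random.randrange(action_dim)
    with torch.no_grad():
        return q_net(torch.tensor([state], dtype=torch.float)).argmax(dim=1).item()


def dqn_update(q_net, target_net, optimizer, batch, gamma, step, target_update):
    states, actions, rewards, next_states, dones = batch
    # gather selects Q(s, a) for the action taken in each sampled transition
    q_values = q_net(states).gather(1, actions)
    with torch.no_grad():
        max_next_q = target_net(next_states).max(dim=1, keepdim=True)[0]
        q_targets = rewards + gamma * max_next_q * (1 - dones)
    loss = F.mse_loss(q_values, q_targets)  # MSE loss is an assumption
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    # hard update: copy the online weights into the target network every N steps
    if step % target_update == 0:
        target_net.load_state_dict(q_net.state_dict())
    return loss.item()
```

With CartPole-v1 (4-dimensional state, 2 actions), one would build `QNet(4, 128, 2)` twice, use `Adam` with `lr=0.002`, and call `dqn_update(..., gamma=0.95, step=i, target_update=10)` once the buffer holds at least `batch_size` transitions.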

Parameters:

`Namespace(batch_size=64, benchmark='DQN', device='cuda:0', env='CartPole-v1', epoch=500, epsilon=0.01, gamma=0.95, hidden=128, lr=0.002, max_capacity=1000, plot=True, save=True, seed=0, target_update=10)`

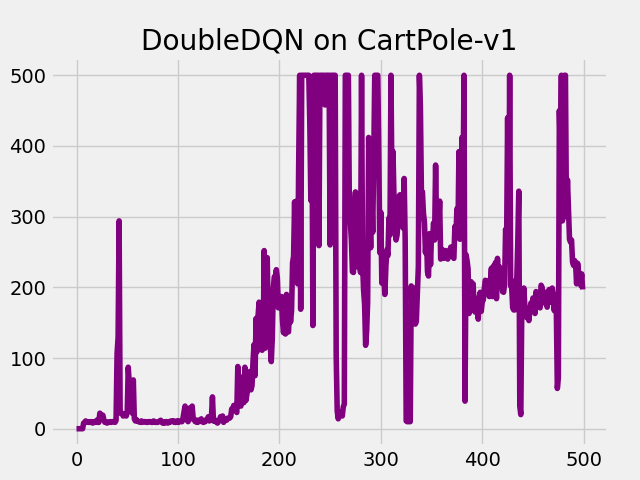

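- Compared with DQN, only the way the Q target is computed changes: the next action is selected by the online (origin) network and evaluated by the target network.

$$r + \gamma Q_{target}(s', a_{target}) \rightarrow r + \gamma Q_{target}(s', a_{origin}), \quad a_{target} = \arg\max_{a} Q_{target}(s', a), \quad a_{origin} = \arg\max_{a} Q_{origin}(s', a)$$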
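The difference between the two targets can be sketched side by side in PyTorch. This is a minimal sketch under assumed tensor shapes (`rewards` and `dones` of shape `(B, 1)`, `next_states` of shape `(B, state_dim)`); the function names `dqn_targets` and `double_dqn_targets` are hypothetical. Here $a_{origin}$ is the greedy action of the online network and $a_{target}$ the greedy action of the target network.

```python
import torch
import torch.nn as nn


def dqn_targets(target_net, rewards, next_states, dones, gamma):
    """Vanilla DQN: the target network both selects and evaluates a_target."""
    with torch.no_grad():
        next_q = target_net(next_states).max(dim=1, keepdim=True)[0]
        return rewards + gamma * next_q * (1 - dones)


def double_dqn_targets(q_net, target_net, rewards, next_states, dones, gamma):
    """Double DQN: the online network selects a_origin, the target network evaluates it."""
    with torch.no_grad():
        a_origin = q_net(next_states).argmax(dim=1, keepdim=True)
        next_q = target_net(next_states).gather(1, a_origin)
        return rewards + gamma * next_q * (1 - dones)
```

Decoupling action selection from evaluation is what reduces the overestimation bias of the plain `max` in `dqn_targets`; everything else in the DQN update stays the same.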

Parameters:

`Namespace(batch_size=64, benchmark='DoubleDQN', device='cuda:0', env='CartPole-v1', epoch=500, epsilon=0.01, gamma=0.95, hidden=128, lr=0.001, max_capacity=1000, plot=True, save=True, seed=0, target_update=10)`

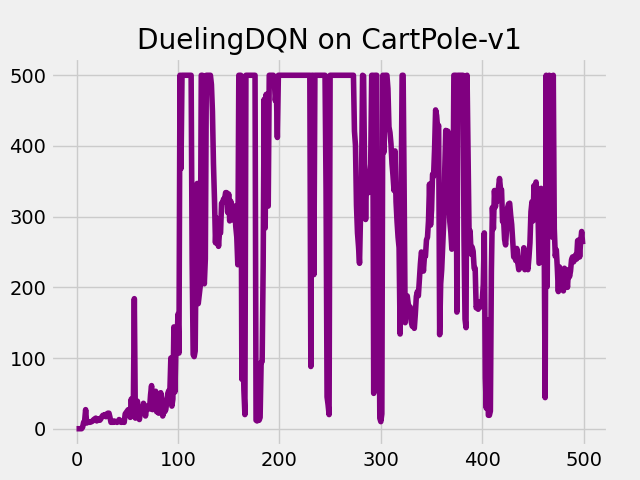

- Compared with DQN, only the network changes: add an advantage-function layer and a value-function layer.
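A dueling Q-network can be sketched as a shared hidden layer feeding two heads. This is a minimal sketch: the class name `VANet` is hypothetical, and subtracting the mean advantage is the common aggregation choice from the Dueling DQN paper, assumed here rather than confirmed from this repository.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class VANet(nn.Module):
    """Hypothetical dueling Q-network: Q(s, a) = V(s) + A(s, a) - mean_a A(s, a)."""

    def __init__(self, state_dim, hidden, action_dim):
        super().__init__()
        self.fc1 = nn.Linear(state_dim, hidden)    # shared hidden layer
        self.fc_A = nn.Linear(hidden, action_dim)  # advantage head A(s, a)
        self.fc_V = nn.Linear(hidden, 1)           # value head V(s)

    def forward(self, x):
        h = F.relu(self.fc1(x))
        A = self.fc_A(h)
        V = self.fc_V(h)
        # subtracting the mean advantage keeps V and A identifiable
        return V + A - A.mean(dim=1, keepdim=True)
```

Because the output keeps the shape `(batch, action_dim)`, `VANet` can replace the plain Q-network in the DQN update without other changes.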

Parameters:

`Namespace(batch_size=64, benchmark='DuelingDQN', device='cuda:0', env='CartPole-v1', epoch=500, epsilon=0.01, gamma=0.95, hidden=128, lr=0.001, max_capacity=1000, plot=True, save=True, seed=0, target_update=10)`

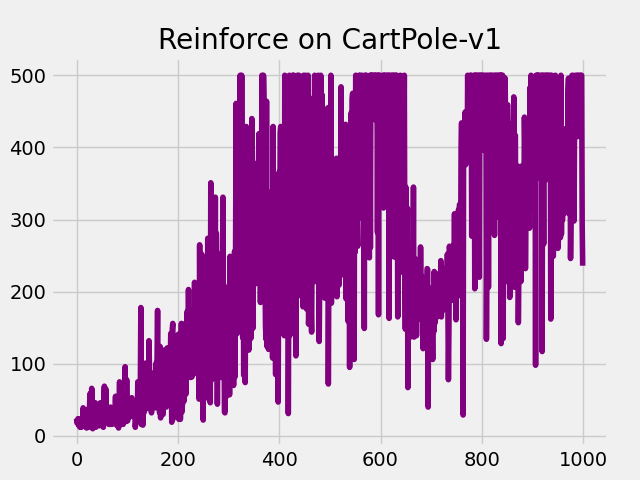

- Use `action_dist = torch.distributions.Categorical(probs)` to sample actions.
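A REINFORCE step built around `torch.distributions.Categorical` can be sketched as follows. This is a minimal sketch, not this repository's implementation: `PolicyNet`, `take_action`, `discounted_returns` and `reinforce_update` are hypothetical names, and the update is vectorized over one complete episode.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PolicyNet(nn.Module):
    """Hypothetical policy network producing action probabilities."""

    def __init__(self, state_dim, hidden, action_dim):
        super().__init__()
        self.fc1 = nn.Linear(state_dim, hidden)
        self.fc2 = nn.Linear(hidden, action_dim)

    def forward(self, x):
        return F.softmax(self.fc2(F.relu(self.fc1(x))), dim=1)


def take_action(policy, state):
    probs = policy(torch.tensor([state], dtype=torch.float))
    action_dist = torch.distributions.Categorical(probs)
    return action_dist.sample().item()


def discounted_returns(rewards, gamma):
    """G_t = r_t + gamma * G_{t+1}, computed backwards over the episode."""
    returns, G = [], 0.0
    for r in reversed(rewards):
        G = r + gamma * G
        returns.append(G)
    return returns[::-1]


def reinforce_update(policy, optimizer, states, actions, rewards, gamma):
    returns = torch.tensor(discounted_returns(rewards, gamma), dtype=torch.float)
    probs = policy(torch.tensor(states, dtype=torch.float))
    log_probs = torch.distributions.Categorical(probs).log_prob(torch.tensor(actions))
    # minimizing -sum(G_t * log pi(a_t|s_t)) performs gradient ascent on the return
    loss = -(log_probs * returns).sum()
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item()
```

For example, `discounted_returns([1.0, 1.0, 1.0], 0.5)` gives `[1.75, 1.5, 1.0]`.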

Parameters:

`Namespace(batch_size=64, benchmark='Reinforce', device='cuda:0', env='CartPole-v1', epoch=1000, epsilon=0.01, gamma=0.98, hidden=128, lr=0.001, max_capacity=1000, plot=True, save=True, seed=0, target_update=10)`

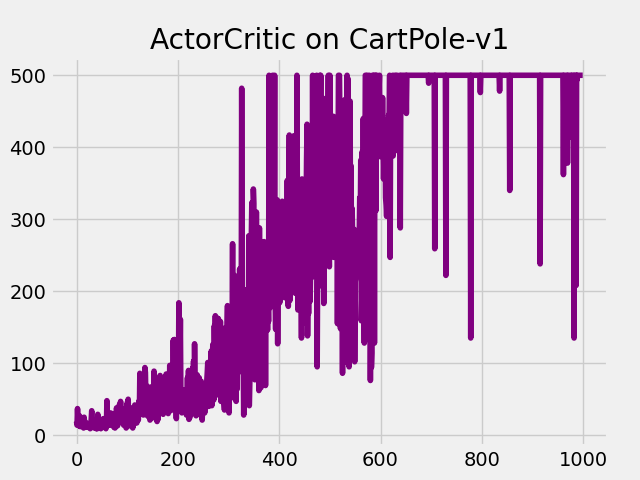

- Use the TD error as the weighting factor of the policy-gradient objective.
- Use `detach()` to cut off backpropagation through the TD target and the TD error (very important!).
- Two-timescale update: learning rate `1e-3` for the actor and `1e-2` for the critic (this choice affects performance significantly).
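These three points can be sketched as a single update step. This is a minimal sketch under stated assumptions: the class names `PolicyNet`, `ValueNet` and `ActorCritic` are hypothetical, Adam is assumed as the optimizer, and the batch is one trajectory of vector-valued states.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PolicyNet(nn.Module):
    def __init__(self, state_dim, hidden, action_dim):
        super().__init__()
        self.fc1 = nn.Linear(state_dim, hidden)
        self.fc2 = nn.Linear(hidden, action_dim)

    def forward(self, x):
        return F.softmax(self.fc2(F.relu(self.fc1(x))), dim=1)


class ValueNet(nn.Module):
    def __init__(self, state_dim, hidden):
        super().__init__()
        self.fc1 = nn.Linear(state_dim, hidden)
        self.fc2 = nn.Linear(hidden, 1)

    def forward(self, x):
        return self.fc2(F.relu(self.fc1(x)))


class ActorCritic:
    """Hypothetical agent; two optimizers give the two-timescale update."""

    def __init__(self, state_dim, hidden, action_dim, actor_lr=1e-3, critic_lr=1e-2, gamma=0.99):
        self.actor = PolicyNet(state_dim, hidden, action_dim)
        self.critic = ValueNet(state_dim, hidden)
        self.actor_optimizer = torch.optim.Adam(self.actor.parameters(), lr=actor_lr)
        self.critic_optimizer = torch.optim.Adam(self.critic.parameters(), lr=critic_lr)
        self.gamma = gamma

    def update(self, states, actions, rewards, next_states, dones):
        states = torch.tensor(states, dtype=torch.float)
        actions = torch.tensor(actions, dtype=torch.long).view(-1, 1)
        rewards = torch.tensor(rewards, dtype=torch.float).view(-1, 1)
        next_states = torch.tensor(next_states, dtype=torch.float)
        dones = torch.tensor(dones, dtype=torch.float).view(-1, 1)

        td_target = rewards + self.gamma * self.critic(next_states) * (1 - dones)
        td_delta = td_target - self.critic(states)  # TD error
        log_probs = torch.log(self.actor(states).gather(1, actions))
        # detach(): the TD error is a fixed weight for the actor, no gradient flows into the critic
        actor_loss = torch.mean(-log_probs * td_delta.detach())
        # detach(): the critic regresses toward a fixed TD target
        critic_loss = F.mse_loss(self.critic(states), td_target.detach())

        self.actor_optimizer.zero_grad()
        self.critic_optimizer.zero_grad()
        actor_loss.backward()
        critic_loss.backward()
        self.actor_optimizer.step()
        self.critic_optimizer.step()
        return actor_loss.item(), critic_loss.item()
```

Without the first `detach()`, the actor loss would also push gradients into the critic; without the second, the critic would chase a target that moves with its own gradient step.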

Parameters:

`Namespace(actor_lr=0.001, batch_size=64, benchmark='ActorCritic', critic_lr=0.01, device='cuda:0', env='CartPole-v1', epoch=1000, epsilon=0.01, gamma=0.99, hidden=128, lr=0.001, max_capacity=1000, plot=True, save=True, seed=0, target_update=10)`