Paper | Video | Project Page

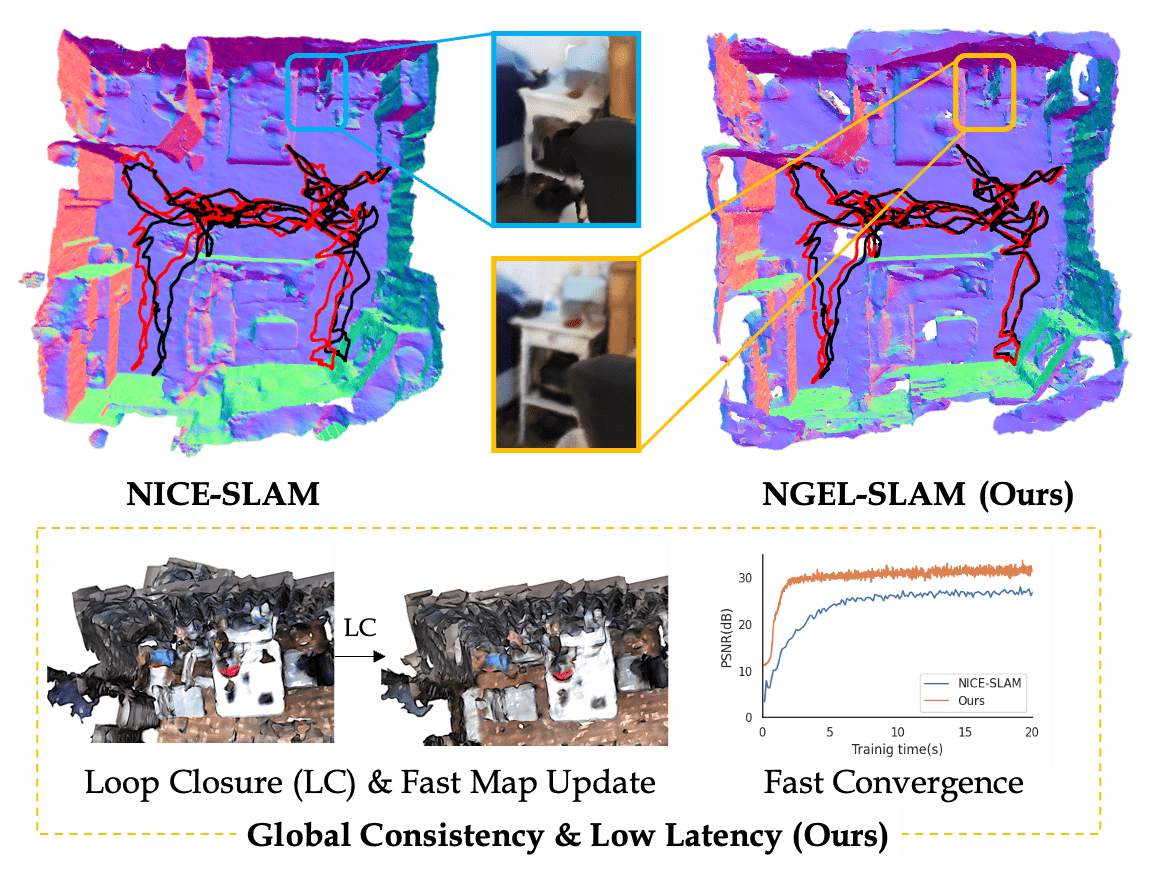

NGEL-SLAM: Neural Implicit Representation-based Global Consistent Low-Latency SLAM System

Yunxuan Mao, Xuan Yu, Kai Wang, Yue Wang, Rong Xiong, Yiyi Liao

Winner of ICRA 2024 Best Paper Award in Robot Vision

Please follow the instructions below to install the repo and dependencies.

mkdir catkin_ws && cd catkin_ws

mkdir src && cd src

git clone https://github.com/YunxuanMao/ngel_slam.git

cd ..

catkin_make

Our modified ORB-SLAM3-ROS can be downloaded here: https://drive.google.com/drive/folders/1RvMYtInNbKuP8XBV2_Z4iEpCbDixKxLE?usp=drive_link

conda create -n ngel python=3.8

conda activate ngel

pip install torch==1.10.1+cu113 torchvision==0.11.2+cu113 torchaudio==0.10.1 -f https://download.pytorch.org/whl/cu113/torch_stable.html

pip install -r requirements.txt

cd yx_kaolin

python setup.py develop

Our modified kaolin-wisp can be downloaded here: https://drive.google.com/drive/folders/1RvMYtInNbKuP8XBV2_Z4iEpCbDixKxLE?usp=drive_link

Put your data in the data folder, following the NICE-SLAM layout, and generate a rosbag for ORB-SLAM3:

python write_bag.py --input_folder '{PATH_TO_INPUT_FOLDER}' --output '{PATH_TO_ROSBAG}' --frame_id 'FRAME_ID_TO_DATA'

You should change the intrinsics manually in write_bag.py.
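As a rough guide to which values need editing, the sketch below shows the usual pinhole intrinsics (fx, fy, cx, cy) arranged as a 3x3 matrix K, plus the 9-element row-major form that ROS camera info messages use. The function names and the numeric values are illustrative assumptions, not taken from write_bag.py; check the script for the variable names it actually uses and substitute your own calibration.

```python
def intrinsic_matrix(fx, fy, cx, cy):
    """Build the 3x3 pinhole intrinsic matrix K as nested lists."""
    return [
        [fx, 0.0, cx],
        [0.0, fy, cy],
        [0.0, 0.0, 1.0],
    ]


def flatten_row_major(K):
    """Flatten K into a 9-element row-major list."""
    return [value for row in K for value in row]


def project_point(K, point):
    """Project a 3D point (x, y, z) in the camera frame to pixel (u, v)."""
    x, y, z = point
    u = K[0][0] * x / z + K[0][2]
    v = K[1][1] * y / z + K[1][2]
    return (u, v)


# Placeholder values for illustration only; replace with your camera's calibration.
K = intrinsic_matrix(fx=600.0, fy=600.0, cx=599.5, cy=339.5)
K_flat = flatten_row_major(K)
```

A point on the optical axis, such as (0, 0, 1), projects to the principal point (cx, cy), which is a quick sanity check after editing the values.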

First start ORB-SLAM3-ROS, then run the command below:

python main.py --config '{PATH_TO_CONFIG}' --input_folder '{PATH_TO_INPUT_FOLDER}' --output '{PATH_TO_OUTPUT}'

If you find our code or paper useful for your research, please consider citing:

@article{mao2023ngel,

title={Ngel-slam: Neural implicit representation-based global consistent low-latency slam system},

author={Mao, Yunxuan and Yu, Xuan and Wang, Kai and Wang, Yue and Xiong, Rong and Liao, Yiyi},

journal={arXiv preprint arXiv:2311.09525},

year={2023}

}

For large-scale mapping work, see NF-Atlas.

Thanks for the source code of orb-slam3-ros and kaolin-wisp.