[Paper] [arXiv] [Project Page] [Video]

Abstract

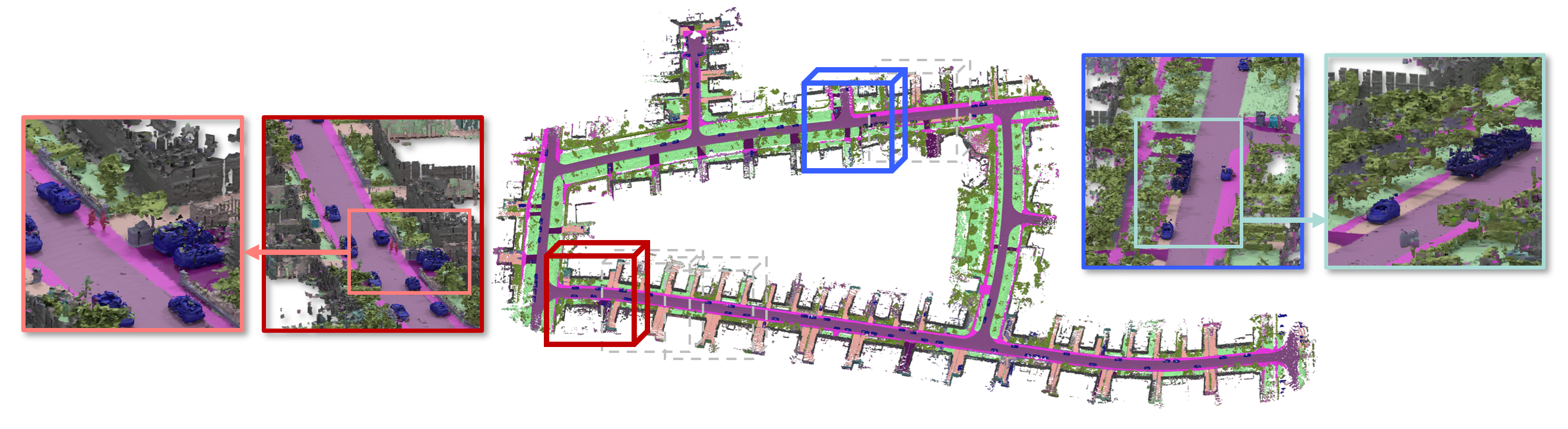

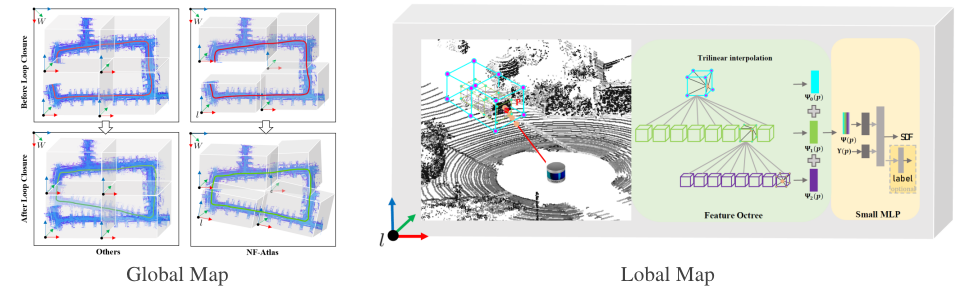

LiDAR Mapping has been a long-standing problem in robotics. Recent progress in neural implicit representation has brought new opportunities to robotic mapping. In this paper, we propose the multi-volume neural feature fields, called NF-Atlas, which bridge the neural feature volumes with pose graph optimization. By regarding the neural feature volume as pose graph nodes and the relative pose between volumes as pose graph edges, the entire neural feature field becomes both locally rigid and globally elastic. Locally, the neural feature volume employs a sparse feature Octree and a small MLP to encode the submap SDF with an option of semantics. Learning the map using this structure allows for end-to-end solving of maximum a posteriori (MAP) based probabilistic mapping. Globally, the map is built volume by volume independently, avoiding catastrophic forgetting when mapping incrementally. Furthermore, when a loop closure occurs, with the elastic pose graph based representation, only updating the origin of neural volumes is required without remapping. Finally, these functionalities of NF-Atlas are validated. Thanks to the sparsity and the optimization based formulation, NF-Atlas shows competitive performance in terms of accuracy, efficiency and memory usage on both simulation and real-world datasets.

- python 3.8

- pytorch 1.11.0

- CUDA 11.3

conda create -n nfatlas python=3.8

conda activate nfatlas

#Pytorch version depends on your device

conda install pytorch==1.11.0 torchvision==0.12.0 torchaudio==0.11.0 cudatoolkit=11.3 -c pytorch

pip install -r requirements.txt

cd third-party

./setup.sh

KITTI-360 Dataset

├── data_3d_raw

│ ├── 2013_05_28_drive_0000_sync

│ │ ├── velodyne_points

│ │ │ ├── data

│ │ │ │ ├── 0000000000.bin

│ │ │ │ ├── ...

│ │ │ ├── timestamps.txt

├──data_3d_semantics

│ ├── train

│ │ ├── 2013_05_28_drive_0000_sync

│ │ │ ├── static

│ │ │ │ ├──0000000002_0000000385.ply

│ │ │ │ ├── ...

├──data_poses

│ ├── 2013_05_28_drive_0000_sync

│ │ ├──cam0_to_world.txt

├──calibration

│ ├── perspective.txt

│ ├── calib_cam_to_velo.txt

Reconstruction

train a single volume without mesh output:

python main.py --DATA_PATH [YOUR/PATH/KITTI-360] --config_file config/kitti360.yaml

train milti-volume without mesh output(if train 2 volume):

python main.py --config_file config/kitti360.yaml --VOLUME_NUM 2

train two volumes with separate mesh output:

python main.py --config_file config/kitti360.yaml --VOLUME_NUM 2 --VIS_FLAG Ture --MESH_SIZE -1 1 -1 1 -0.1 0.2 --MESH_REVU 30

mesh(w/o sem) is installed in proj_dir/mesh/ (set in config/kitti360.yaml)

Semantic Map

The default version includes the semantic term. If you don't want to train with semantic loss, you can change the setting in config/kitti360.yaml:

loss_term:

semantic2d: False

semantic3d: False

Loop Updating

Due to the large number of volumes in a loop, we provide a demo of two volumes at the closed loop port. Install 'minisam' for Loop Updating.

python loop_demo.py

Render views

Generate depth images, semantic images, and normal images:

python render/render_imgs.py

Generate the Hole Mesh

generate a mesh (NO.0 and NO.1 volumes)

python hole_mesh_generation.py --START 0 --CUBE 2

We learned a lot from the following projects when developing NF-Atlas.

If you find this work useful, please cite:

@ARTICLE{10197558,

author={Yu, Xuan and Liu, Yili and Mao, Sitong and Zhou, Shunbo and Xiong, Rong and Liao, Yiyi and Wang, Yue},

journal={IEEE Robotics and Automation Letters},

title={NF-Atlas: Multi-Volume Neural Feature Fields for Large Scale LiDAR Mapping},

year={2023},

volume={8},

number={9},

pages={5870-5877},

doi={10.1109/LRA.2023.3300281}}For RGB-D SLAM work, you can refer to NGEL-SLAM

The code is released under the AGPL License.