PyTorch implementation of the paper "CLRNet: Cross Layer Refinement Network for Lane Detection" (accepted at CVPR 2022).

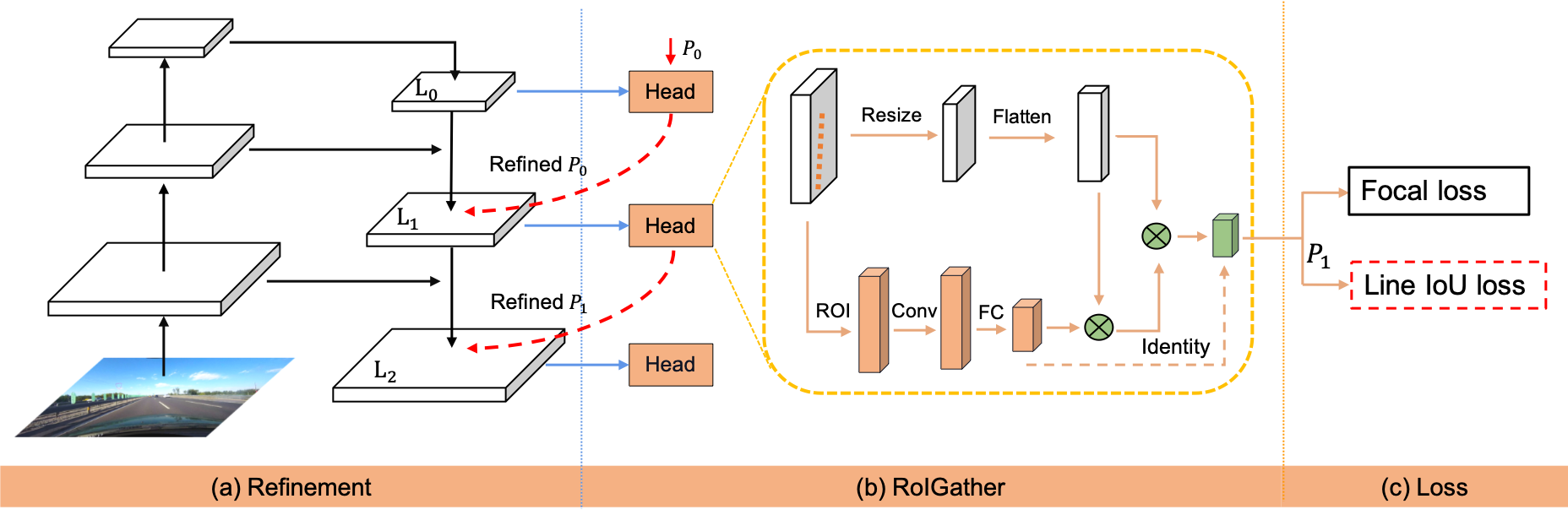

- CLRNet exploits more contextual information to detect lanes while leveraging local detailed lane features to improve localization accuracy.

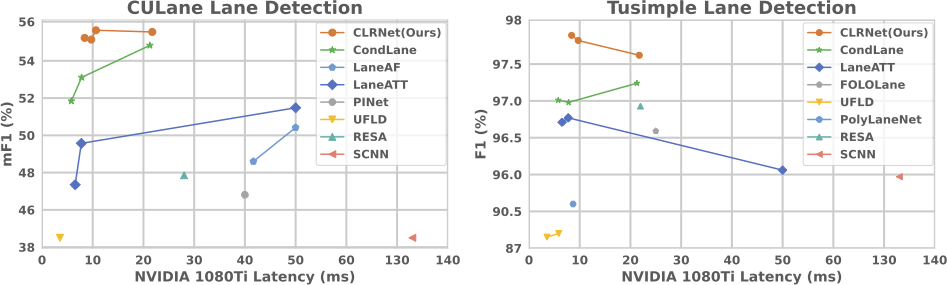

- CLRNet achieves state-of-the-art results on the CULane, Tusimple, and LLAMAS datasets.

Tested only on Ubuntu 18.04 and 20.04 with:

- Python >= 3.8 (tested with Python 3.8)

- PyTorch >= 1.6 (tested with PyTorch 1.6)

- CUDA (tested with CUDA 10.2)

- Other dependencies described in requirements.txt

Clone this code to your workspace.

We refer to this directory as $CLRNET_ROOT.

git clone https://github.com/Turoad/clrnet

conda create -n clrnet python=3.8 -y

conda activate clrnet

# Install PyTorch first; the cudatoolkit version should match the CUDA version on your system.

conda install pytorch torchvision cudatoolkit=10.1 -c pytorch

# Or you can install via pip

pip install torch==1.8.0 torchvision==0.9.0

# Install python packages

python setup.py build develop

Download CULane, then extract it to $CULANEROOT. Create a link to the data directory.

cd $CLRNET_ROOT

mkdir -p data

ln -s $CULANEROOT data/CULane

For CULane, you should have a structure like this:

$CULANEROOT/driver_xx_xxframe # data folders x6

$CULANEROOT/laneseg_label_w16 # lane segmentation labels

$CULANEROOT/list # data lists
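The `mkdir -p data` and `ln -s` steps can also be done from Python, which may be convenient when setting up several datasets. The following is a minimal sketch, not part of the repository: the helper name `link_dataset` is hypothetical, and the link names (`CULane`, `tusimple`, `llamas`) are the ones used in the commands in this README.

```python
import os
from pathlib import Path


def link_dataset(clrnet_root, dataset_root, link_name):
    """Create <clrnet_root>/data/<link_name> as a symlink to dataset_root.

    Hypothetical helper mirroring `mkdir -p data` and `ln -s` from the README.
    """
    data_dir = Path(clrnet_root) / "data"
    data_dir.mkdir(parents=True, exist_ok=True)  # same effect as `mkdir -p data`

    link = data_dir / link_name
    target = Path(dataset_root).resolve()

    if link.is_symlink():
        # Re-running is harmless if the link already points at this dataset.
        if link.resolve() != target:
            raise FileExistsError(f"{link} already points to {link.resolve()}")
        return link
    if link.exists():
        raise FileExistsError(f"{link} exists and is not a symlink")

    os.symlink(target, link, target_is_directory=True)  # same effect as `ln -s`
    return link
```

For example, `link_dataset(os.environ["CLRNET_ROOT"], os.environ["CULANEROOT"], "CULane")` would create `data/CULane`, assuming both environment variables are set.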

Download Tusimple, then extract it to $TUSIMPLEROOT. Create a link to the data directory.

cd $CLRNET_ROOT

mkdir -p data

ln -s $TUSIMPLEROOT data/tusimple

For Tusimple, you should have a structure like this:

$TUSIMPLEROOT/clips # data folders

$TUSIMPLEROOT/label_data_xxxx.json # label json file x4

$TUSIMPLEROOT/test_tasks_0627.json # test tasks json file

$TUSIMPLEROOT/test_label.json # test label json file

For Tusimple, segmentation annotations are not provided, so they must be generated from the JSON annotations.

python tools/generate_seg_tusimple.py --root $TUSIMPLEROOT

# this will generate the seg_label directory

Download LLAMAS, then extract it to $LLAMASROOT. Create a link to the data directory.

cd $CLRNET_ROOT

mkdir -p data

ln -s $LLAMASROOT data/llamas

Unzip both files (color_images.zip and labels.zip) into the same directory (e.g., data/llamas/), which becomes the dataset root. For LLAMAS, you should have a structure like this:

$LLAMASROOT/color_images/train # data folders

$LLAMASROOT/color_images/test # data folders

$LLAMASROOT/color_images/valid # data folders

$LLAMASROOT/labels/train # labels folders

$LLAMASROOT/labels/valid # labels folders
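Before training, it can help to confirm that each dataset root matches the layouts listed above. The sketch below is an illustration only: `EXPECTED_LAYOUT` and `missing_entries` are hypothetical names, the keys follow the link names under `data/`, and entries whose names contain placeholders in this README (`driver_xx_xxframe`, `label_data_xxxx.json`) are deliberately left out rather than guessed.

```python
from pathlib import Path

# Fixed-name entries copied from the directory listings in this README.
# Placeholder names (driver_xx_xxframe, label_data_xxxx.json) are omitted.
EXPECTED_LAYOUT = {
    "CULane": ["laneseg_label_w16", "list"],
    "tusimple": ["clips", "test_tasks_0627.json", "test_label.json"],
    "llamas": [
        "color_images/train",
        "color_images/test",
        "color_images/valid",
        "labels/train",
        "labels/valid",
    ],
}


def missing_entries(dataset_root, dataset):
    """Return the expected entries that do not exist under dataset_root."""
    root = Path(dataset_root)
    return [entry for entry in EXPECTED_LAYOUT[dataset] if not (root / entry).exists()]
```

An empty list from `missing_entries("data/llamas", "llamas")` suggests the fixed-name folders are in place; it does not validate the contents of those folders.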

For training, run

python main.py [configs/path_to_your_config] --gpus [gpu_num]

For example, run

python main.py configs/clrnet/clr_resnet18_culane.py --gpus 0

For testing, run

python main.py [configs/path_to_your_config] --[test|validate] --load_from [path_to_your_model] --gpus [gpu_num]

For example, run

python main.py configs/clrnet/clr_dla34_culane.py --validate --load_from culane_dla34.pth --gpus 0

This code can also output visualization results when testing; just add --view.

The visualization results are saved in work_dirs/xxx/xxx/visualization.

Results on CULane:

| Backbone | mF1 | F1@50 | F1@75 |
|---|---|---|---|
| ResNet-18 | 55.23 | 79.58 | 62.21 |
| ResNet-34 | 55.14 | 79.73 | 62.11 |
| ResNet-101 | 55.55 | 80.13 | 62.96 |
| DLA-34 | 55.64 | 80.47 | 62.78 |

Results on Tusimple:

| Backbone | F1 | Acc | FDR | FNR |
|---|---|---|---|---|
| ResNet-18 | 97.89 | 96.84 | 2.28 | 1.92 |
| ResNet-34 | 97.82 | 96.87 | 2.27 | 2.08 |
| ResNet-101 | 97.62 | 96.83 | 2.37 | 2.38 |

Results on LLAMAS:

| Backbone | valid mF1 | valid F1@50 | valid F1@75 | test F1@50 |
|---|---|---|---|---|
| ResNet-18 | 70.83 | 96.93 | 85.23 | 96.00 |
| DLA-34 | 71.57 | 97.06 | 85.43 | 96.12 |

"F1@50" refers to the official metric, i.e., the F1 score when the IoU threshold between the ground truth and the prediction is 0.5. "F1@75" is the F1 score when the IoU threshold is 0.75.
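To make the thresholded F1 concrete, here is a small sketch for a single image. It is not the official evaluation code: it assumes the IoU between every predicted lane and every ground-truth lane has already been computed, it uses a simple greedy one-to-one matching, and it treats an IoU equal to the threshold as a match. The function name `f1_at_iou` is hypothetical.

```python
def f1_at_iou(iou, threshold):
    """F1 score for one image.

    iou[p][g] is the IoU between predicted lane p and ground-truth lane g.
    Matching is greedy (highest IoU first) and one-to-one; this is a
    simplification, not the official matching procedure.
    """
    num_pred = len(iou)
    num_gt = len(iou[0]) if iou else 0

    # All (IoU, prediction, ground truth) candidates, best IoU first.
    pairs = sorted(
        ((iou[p][g], p, g) for p in range(num_pred) for g in range(num_gt)),
        reverse=True,
    )

    used_pred, used_gt = set(), set()
    tp = 0
    for score, p, g in pairs:
        if score < threshold:
            break  # remaining candidates are all below the threshold
        if p in used_pred or g in used_gt:
            continue  # each lane can be matched at most once
        used_pred.add(p)
        used_gt.add(g)
        tp += 1

    fp = num_pred - tp  # predictions without a matching ground-truth lane
    fn = num_gt - tp    # ground-truth lanes that were not detected
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

With three predictions and two ground-truth lanes, `iou = [[0.8, 0.1], [0.2, 0.6], [0.3, 0.4]]`, two predictions match at threshold 0.5 (F1 = 0.8), but only one matches at 0.75 (F1 = 0.4), which illustrates why F1@75 is the stricter number in the tables.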

1. Convert to an ONNX model

cd deploy

python torch2onnx.py ../configs/clrnet/clr_resnet18_seasky.py --load_from ../ckpts/checkpoint.pth

This generates the file seasky_r18.onnx in the deploy directory.

2. Test the ONNX model

python demo_onnx.py

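If everything works, this generates the image output_onnx.png in the deploy directory, which can be used to verify the lane detection results.

3. Convert ONNX to TensorRT

(1). Install the required dependencies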

pip install nvidia-pyindex

pip install polygraphy

pip install onnx-graphsurgeon

pip install pycuda

(2). Run

polygraphy surgeon sanitize seasky_r18.onnx --fold-constants --output seasky_r18.onnx

(3). Convert to TensorRT to obtain seasky_r18.engine

trtexec --onnx=seasky_r18.onnx --saveEngine=seasky_r18.engine --verbose

(4). Test the TensorRT demo

python demo_trt.py
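Steps (2) and (3) reuse the same file names, so when converting a differently named model it may be easier to generate both commands from one place. The sketch below only assembles the exact `polygraphy` and `trtexec` invocations shown above. The helper names `deployment_commands` and `run_all` are hypothetical, and nothing is executed unless `dry_run=False` is passed.

```python
import subprocess


def deployment_commands(onnx_path="seasky_r18.onnx", engine_path="seasky_r18.engine"):
    """Return the sanitize and engine-build commands from steps (2) and (3)."""
    return [
        # Step (2): fold constants and overwrite the ONNX file in place.
        ["polygraphy", "surgeon", "sanitize", onnx_path,
         "--fold-constants", "--output", onnx_path],
        # Step (3): build the TensorRT engine from the sanitized ONNX file.
        ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}", "--verbose"],
    ]


def run_all(commands, dry_run=True):
    """Print each command; run it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step
```

Calling `run_all(deployment_commands())` from the deploy directory prints the two commands for review; `run_all(deployment_commands(), dry_run=False)` runs them, assuming `polygraphy` and `trtexec` are on the PATH.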

If our paper and code are beneficial to your work, please consider citing:

@InProceedings{Zheng_2022_CVPR,

author = {Zheng, Tu and Huang, Yifei and Liu, Yang and Tang, Wenjian and Yang, Zheng and Cai, Deng and He, Xiaofei},

title = {CLRNet: Cross Layer Refinement Network for Lane Detection},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {898-907}

}