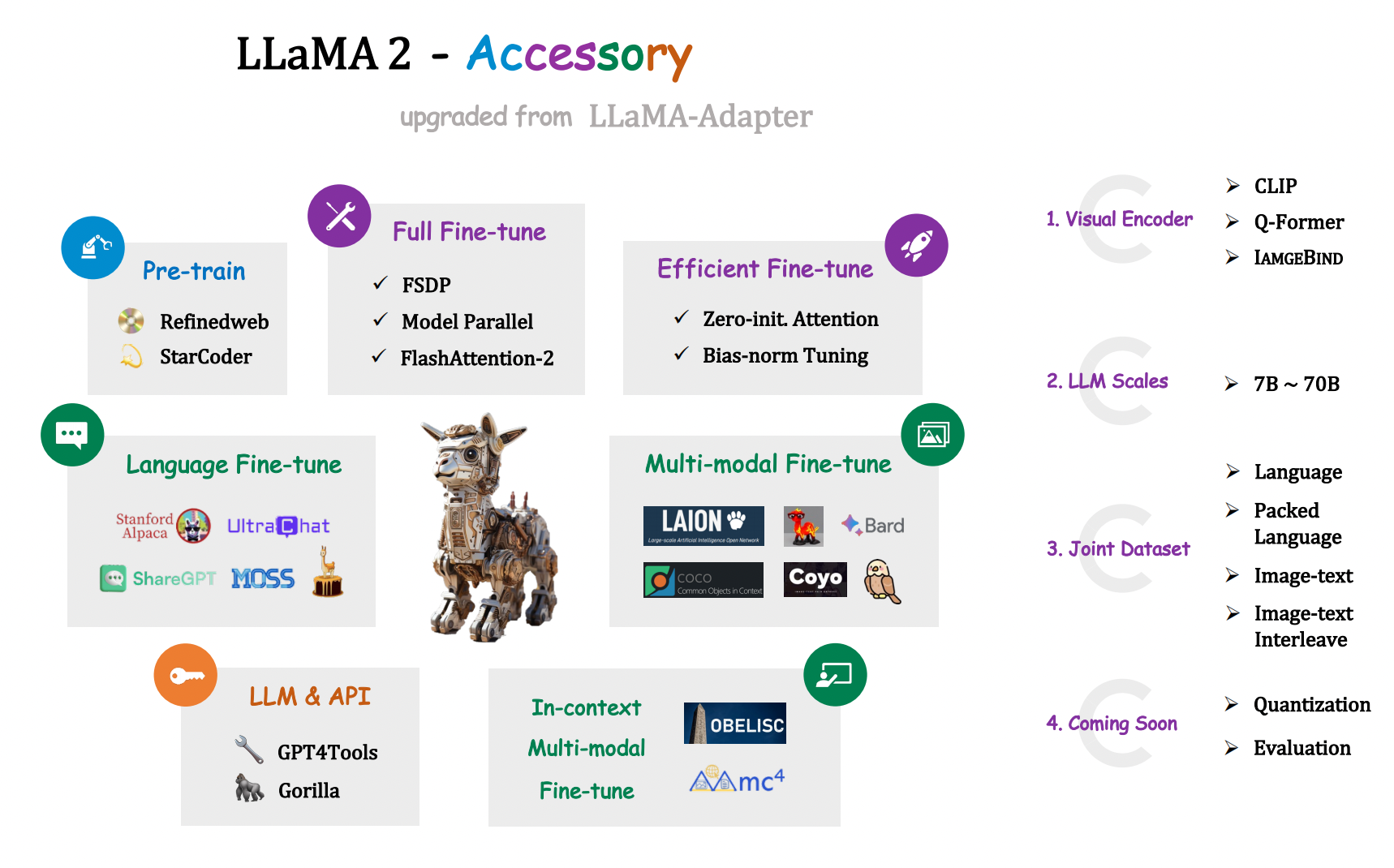

LLaMA2-Accessory is an open-source toolkit for pre-training, fine-tuning, and deploying Large Language Models (LLMs) and multimodal LLMs. This repo is mainly inherited from LLaMA-Adapter, with more advanced features.

- [2023.07.23] Initial release 📌


Support More Datasets and Tasks

- Pre-training with RefinedWeb and StarCoder.

- Single-modal fine-tuning with Alpaca, ShareGPT, LIMA, UltraChat and MOSS.

- Multi-modal fine-tuning with image-text pairs (LAION, COYO, and more), interleaved image-text data (MMC4 and OBELISC), and visual instruction data (LLaVA, Shikra, Bard).

- LLM for API Control (GPT4Tools and Gorilla).


Efficient Optimization and Deployment

- Parameter-efficient fine-tuning with Zero-init Attention and Bias-norm Tuning.

- Fully Sharded Data Parallel (FSDP), Flash Attention 2 and QLoRA.
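Zero-init Attention is only named in the list above. The following is a minimal sketch of the general idea as described for LLaMA-Adapter, not LLaMA2-Accessory's actual code. Learnable adaption-prompt keys and values get their own softmax, and their contribution is scaled by a gate initialised to zero, so at the start of fine-tuning the layer behaves exactly like the frozen model's attention. It handles a single query in pure Python. The function and argument names are hypothetical, and squashing the gate with `tanh` is an assumption (some implementations use the raw gate value instead).

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def weighted_sum(weights: Vector, values: List[Vector]) -> Vector:
    # Mix the value vectors using the attention weights.
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def zero_init_gated_attention(
    query: Vector,
    token_keys: List[Vector],
    token_values: List[Vector],
    prompt_keys: List[Vector],
    prompt_values: List[Vector],
    gate: float = 0.0,  # hypothetical learnable scalar, initialised to zero
) -> Vector:
    scale = 1.0 / math.sqrt(len(query))

    # Ordinary scaled dot-product attention over the input tokens.
    token_weights = softmax([dot(query, k) * scale for k in token_keys])
    token_out = weighted_sum(token_weights, token_values)

    # Adaption prompts use their own softmax, so they cannot steal
    # probability mass from the tokens' attention distribution.
    prompt_weights = softmax([dot(query, k) * scale for k in prompt_keys])
    prompt_out = weighted_sum(prompt_weights, prompt_values)

    # With gate == 0, tanh(gate) == 0 and the prompts contribute nothing.
    g = math.tanh(gate)
    return [t + g * p for t, p in zip(token_out, prompt_out)]
```

For example, with one token (key `[1.0, 0.0]`, value `[2.0, 3.0]`) and one prompt (value `[10.0, 10.0]`), a gate of `0.0` returns `[2.0, 3.0]`, the plain attention output. As training moves the gate away from zero, the prompt signal is blended in gradually instead of disturbing the pretrained model from the first step.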


Support More Visual Encoders and LLMs

For installation instructions, see docs/install.md.

For fine-tuning instructions, see docs/finetune.md.

- Instruction-tuned LLaMA2: alpaca.

- Chatbot LLaMA2: dialog_sharegpt, dialog_lima, and llama2-chat.

Chris Liu, Ziyi Lin, Guian Fang, Jiaming Han, Renrui Zhang, Wenqi Shao, Peng Gao


- @facebookresearch for ImageBind & LIMA

- @Instruction-Tuning-with-GPT-4 for GPT-4-LLM

- @tatsu-lab for stanford_alpaca

- @tloen for alpaca-lora

- @lm-sys for FastChat

- @domeccleston for sharegpt

- @karpathy for nanoGPT

- @Dao-AILab for flash-attention

- @NVIDIA for apex & Megatron-LM

- @Vision-CAIR for MiniGPT-4

- @haotian-liu for LLaVA

- @huggingface for peft & OBELISC

- @Lightning-AI for lit-gpt & lit-llama

- @allenai for mmc4

- @StevenGrove for GPT4Tools

- @ShishirPatil for gorilla

- @OpenLMLab for MOSS

- @thunlp for UltraChat

- @LAION-AI for LAION-5B

- @shikras for shikra

- @kakaobrain for coyo-dataset

- @salesforce for LAVIS

- @openai for CLIP

- @bigcode-project for starcoder

- @tiiuae for falcon-refinedweb

- @microsoft for DeepSpeed

- @declare-lab for flacuna

- @Google for Bard