cleanlab helps you clean data and labels by automatically detecting issues in an ML dataset. To facilitate machine learning with messy, real-world data, this data-centric AI package uses your existing models to estimate dataset problems that can be fixed to train even better models.

```python
import cleanlab

# cleanlab works with **any classifier**. Yup, you can use PyTorch/TensorFlow/OpenAI/XGBoost/etc.
# (YourFavoriteClassifier, data, labels, and test_data are placeholders for your own model and data.)
cl = cleanlab.classification.CleanLearning(sklearn.YourFavoriteClassifier())

# cleanlab finds data and label issues in **any dataset**... in ONE line of code!
label_issues = cl.find_label_issues(data, labels)

# cleanlab trains a robust version of your model that works more reliably with noisy data.
cl.fit(data, labels)

# cleanlab estimates the predictions you would have gotten if you had trained with *no* label issues.
cl.predict(test_data)

# A universal data-centric AI tool, cleanlab quantifies class-level issues and overall data quality, for any dataset.
cleanlab.dataset.health_summary(labels, confident_joint=cl.confident_joint)
```

Get started with: tutorials, documentation, examples, and blogs.

- Learn to run cleanlab on your data in 5 minutes for: image, text, audio, or tabular data.

- Use cleanlab to automatically: detect data issues (outliers, duplicates, label errors, etc.), train robust models, infer consensus and annotator quality for multi-annotator data, and suggest data to (re)label next (active learning).
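Several of these checks rely on feature embeddings from a model. As a rough illustration of the general nearest-neighbor idea (a minimal standard-library sketch, not cleanlab's implementation): an example that sits far from its k nearest neighbors looks atypical, and two examples at (near) zero distance from each other look like duplicates. The `features` list is hypothetical toy data standing in for real embeddings, and `tol` is an assumed threshold.

```python
import math


def knn_outlier_scores(points, k):
    """Mean Euclidean distance from each point to its k nearest other points.

    Larger scores mean the point is more atypical relative to the rest.
    """
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


def near_duplicate_pairs(points, tol=1e-6):
    """Index pairs (i, j), i < j, whose Euclidean distance is at most tol."""
    return [
        (i, j)
        for i in range(len(points))
        for j in range(i + 1, len(points))
        if math.dist(points[i], points[j]) <= tol
    ]


# Hypothetical 2-D "embeddings": a tight cluster, one exact duplicate, one far-away point.
features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (0.1, 0.1), (5.0, 5.0)]
scores = knn_outlier_scores(features, k=2)
outlier = max(range(len(features)), key=scores.__getitem__)
print("most atypical example:", outlier)                   # 5
print("near duplicates:", near_duplicate_pairs(features))  # [(3, 4)]
```

This brute-force version compares every pair of points, which is fine for a toy example; large datasets need an approximate or indexed nearest-neighbor search.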

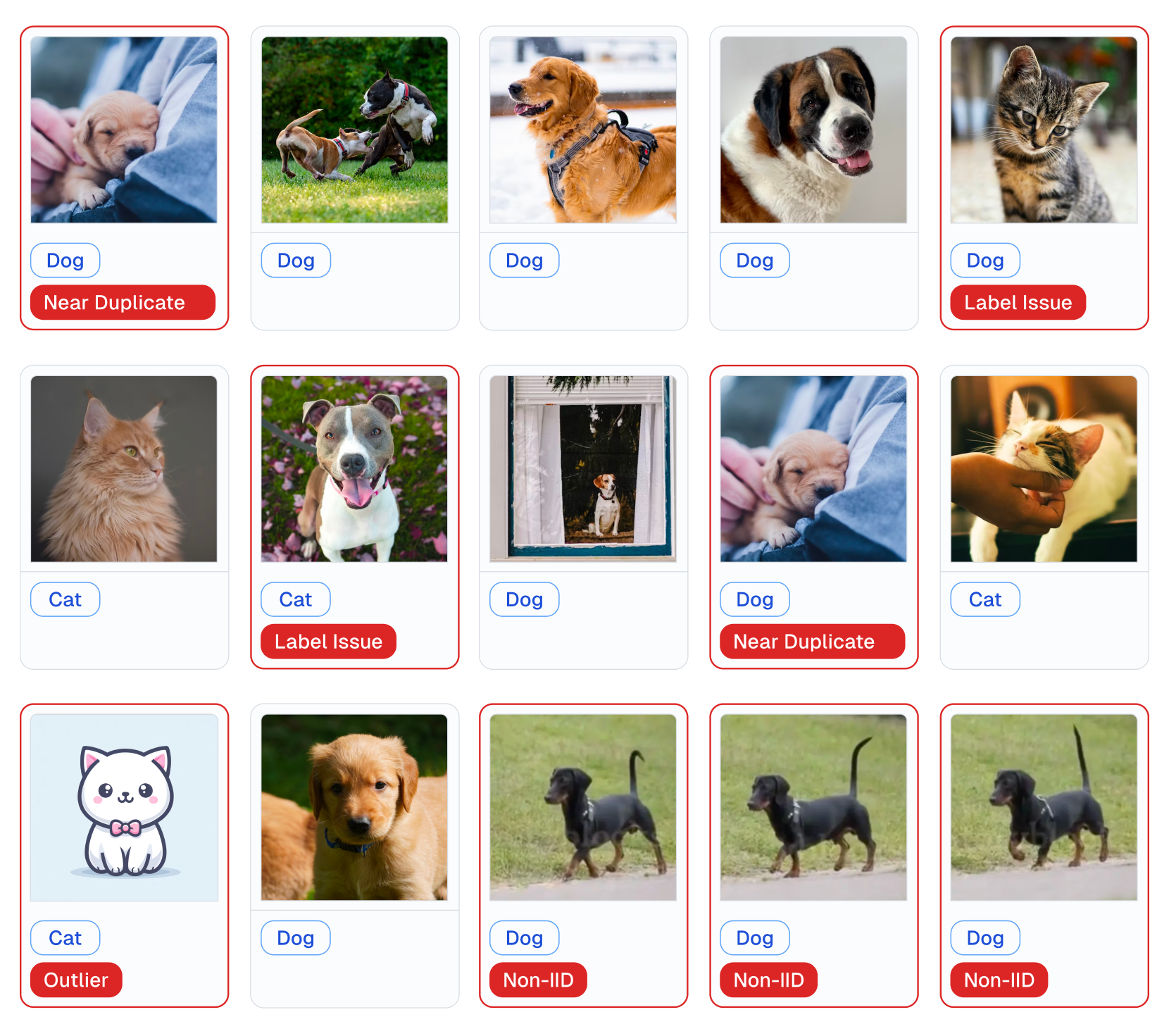

Examples of various issues in a Cat/Dog dataset automatically detected by cleanlab via this code:

```python
import cleanlab

lab = cleanlab.Datalab(data=dataset, label_name="column_name_for_labels")
# Fit any ML model, get its feature_embeddings & pred_probs for your data
lab.find_issues(features=feature_embeddings, pred_probs=pred_probs)
lab.report()
```

cleanlab cleans your data's labels via state-of-the-art confident learning algorithms, published in this paper and blog. See some of the datasets cleaned with cleanlab at labelerrors.com. This data-centric AI tool helps you find data and label issues, so you can train reliable ML models.
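To give a feel for confident learning, here is a simplified, standard-library sketch of its core counting step as described in the paper: each class j gets a threshold equal to the average predicted probability of class j among examples labeled j; each example is counted under the most probable class whose threshold it meets, and examples whose confident class differs from their given label are flagged. This is not cleanlab's implementation (which adds calibration, several pruning methods, and ranking), and the `labels` and `pred_probs` values are toy data.

```python
def confident_joint(labels, pred_probs):
    """Simplified confident-learning count.

    labels: given integer labels in range(K).
    pred_probs: per-example lists of K predicted probabilities
        (ideally out-of-sample, e.g. from cross-validation).
    Returns (K x K count matrix indexed [given][confident], flagged indices).
    """
    k = len(pred_probs[0])
    # Per-class threshold: mean probability of class j among examples labeled j.
    thresholds = []
    for j in range(k):
        probs_j = [p[j] for p, y in zip(pred_probs, labels) if y == j]
        thresholds.append(sum(probs_j) / len(probs_j) if probs_j else float("inf"))

    joint = [[0] * k for _ in range(k)]
    flagged = []
    for idx, (y, p) in enumerate(zip(labels, pred_probs)):
        confident = [j for j in range(k) if p[j] >= thresholds[j]]
        if not confident:
            continue  # not confidently in any class, so not counted
        guess = max(confident, key=lambda j: p[j])
        joint[y][guess] += 1
        if guess != y:
            flagged.append(idx)  # off-diagonal entry: likely label issue
    return joint, flagged


labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.9, 0.1], [0.8, 0.2], [0.1, 0.9],  # third example looks like class 1
    [0.05, 0.95], [0.3, 0.7], [0.15, 0.85],
]
joint, issues = confident_joint(labels, pred_probs)
print(joint)   # [[2, 1], [0, 2]]
print(issues)  # [2]
```

Off-diagonal entries of `joint` count examples whose given label disagrees with the confidently predicted one; the paper calibrates this count matrix to estimate the joint distribution of given and true labels.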

cleanlab is:

- backed by theory -- with provable guarantees of exact label noise estimation, even with imperfect models.

- fast -- code is parallelized and scalable.

- easy to use -- one line of code to find mislabeled data, bad annotators, outliers, or train noise-robust models.

- general -- works with any dataset (text, image, tabular, audio,...) + any model (PyTorch, OpenAI, XGBoost,...)

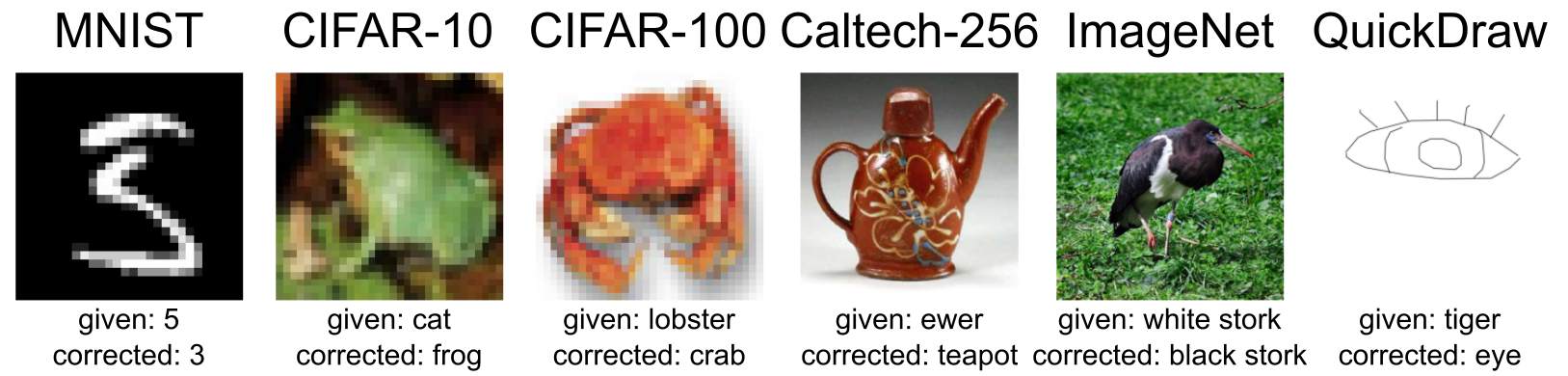

Examples of incorrect given labels in various image datasets found and corrected using cleanlab.

cleanlab supports Linux, macOS, and Windows and runs on Python 3.8+.

- Get started here! Install via pip or conda as described here.

- Developers who install the bleeding-edge from source should refer to this master branch documentation.

- For help, check out our detailed FAQ, GitHub Issues, or Slack. We welcome any questions!

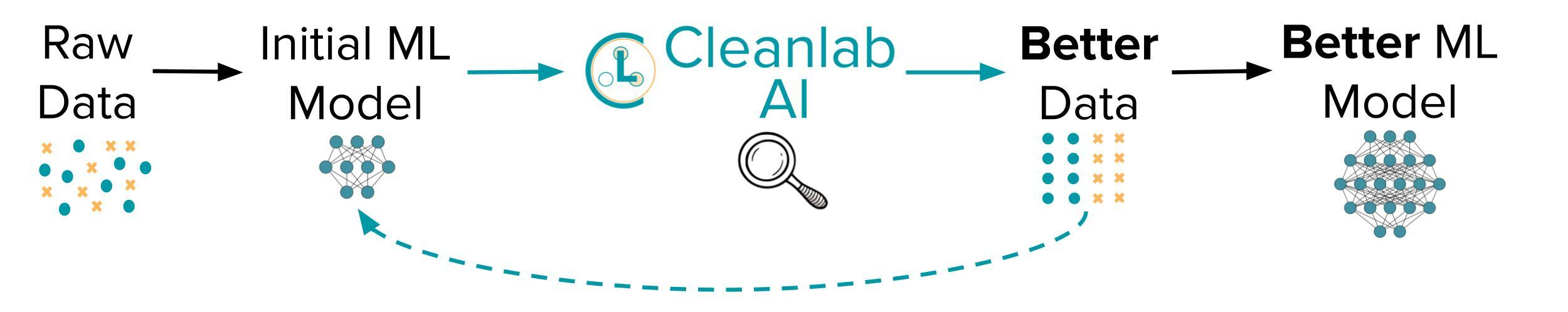

Practicing data-centric AI can look like this:

1. Train initial ML model on original dataset.

2. Utilize this model to diagnose data issues (via cleanlab methods) and improve the dataset.

3. Train the same model on the improved dataset.

4. Try various modeling techniques to further improve performance.

Most folks jump from Step 1 → 4, but you may achieve big gains without any change to your modeling code by using cleanlab! Continuously boost performance by iterating Steps 2 → 4 (and try to evaluate with cleaned data).
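Steps 1 to 3 can be illustrated end to end on toy data. The sketch below is plain Python, not cleanlab code: the nearest-centroid "model", the hypothetical `flag_disagreements` rule, and the data are all made up for illustration. A real Step 2 would use cleanlab with out-of-sample predicted probabilities rather than this simple in-sample disagreement check.

```python
def fit_centroids(xs, ys):
    """Toy 1-D 'model': the mean feature value of each class."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Predict the class whose centroid is nearest to x."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


def accuracy(centroids, xs, ys):
    return sum(predict(centroids, x) == y for x, y in zip(xs, ys)) / len(ys)


def flag_disagreements(centroids, xs, ys):
    """Hypothetical stand-in for Step 2: flag examples whose given label
    disagrees with the model's prediction."""
    return [i for i, (x, y) in enumerate(zip(xs, ys)) if predict(centroids, x) != y]


# Toy data: class 0 lives near 0, class 1 near 10; the last two train labels are wrong.
train_x = [0.0, 1.0, 2.0, 8.0, 9.0, 10.0, 9.5, 10.5]
train_y = [0, 0, 0, 1, 1, 1, 0, 0]
test_x, test_y = [0.5, 3.0, 6.0, 9.5], [0, 0, 1, 1]

model = fit_centroids(train_x, train_y)                # Step 1: train on original data
flagged = flag_disagreements(model, train_x, train_y)  # Step 2: diagnose issues
keep = [i for i in range(len(train_x)) if i not in flagged]
cleaned = fit_centroids([train_x[i] for i in keep], [train_y[i] for i in keep])  # Step 3

noisy_acc = accuracy(model, test_x, test_y)
clean_acc = accuracy(cleaned, test_x, test_y)
print(flagged, noisy_acc, clean_acc)  # [6, 7] 0.75 1.0
```

The modeling code (`fit_centroids`) is identical in Steps 1 and 3; only the training data changes, and both models are evaluated on the same held-out test set.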

All features of cleanlab work with any dataset and any model. Yes, any model: PyTorch, TensorFlow, Keras, JAX, HuggingFace, OpenAI, XGBoost, scikit-learn, etc. If you use a sklearn-compatible classifier, all cleanlab methods work out-of-the-box.

It’s also easy to use your favorite non-sklearn-compatible model.

cleanlab can find label issues from any model's predicted class probabilities if you can produce them yourself.
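cleanlab's documentation recommends that these predicted probabilities be out-of-sample, for example computed with K-fold cross-validation, so that no example is scored by a model that was trained on it. A minimal standard-library sketch of that procedure follows; the toy `fit` and `predict_proba` functions (a 1-D nearest-centroid model with inverse-distance probabilities) are hypothetical stand-ins for your own model, and the round-robin fold assignment is an arbitrary choice.

```python
def cross_val_pred_probs(xs, ys, fit, predict_proba, n_folds=3):
    """Out-of-sample predicted probabilities via K-fold cross-validation.

    Each example is scored by a model trained only on the other folds.
    Folds are assigned round-robin (example i goes to fold i % n_folds).
    """
    pred_probs = [None] * len(xs)
    for fold in range(n_folds):
        train_idx = [i for i in range(len(xs)) if i % n_folds != fold]
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        for i in range(fold, len(xs), n_folds):  # held-out examples of this fold
            pred_probs[i] = predict_proba(model, xs[i])
    return pred_probs


def fit(xs, ys):
    """Toy model: per-class mean of 1-D features.
    Assumes classes are 0..K-1 and all appear in each training split."""
    classes = sorted(set(ys))
    return [sum(x for x, y in zip(xs, ys) if y == c) / ys.count(c) for c in classes]


def predict_proba(centroids, x):
    """Inverse-distance weights to each class centroid, normalized to sum to 1."""
    weights = [1.0 / (1.0 + abs(x - c)) for c in centroids]
    total = sum(weights)
    return [w / total for w in weights]


xs = [0.0, 1.0, 2.0, 8.0, 9.0, 10.0]
ys = [0, 0, 0, 1, 1, 1]
pred_probs = cross_val_pred_probs(xs, ys, fit, predict_proba)
for label, probs in zip(ys, pred_probs):
    print(label, [round(p, 2) for p in probs])
```

The resulting rows can be stacked into an (N, K) NumPy array and passed as `pred_probs` to cleanlab functions such as `cleanlab.filter.find_label_issues(labels, pred_probs)`.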

Some cleanlab functionality may require your model to be sklearn-compatible.

There's nothing you need to do if your model already has .fit(), .predict(), and .predict_proba() methods.

Otherwise, just wrap your custom model into a Python class that inherits from sklearn.base.BaseEstimator:

```python
from sklearn.base import BaseEstimator

class YourFavoriteModel(BaseEstimator):  # Inherits sklearn's base estimator
    def __init__(self):
        pass  # ensure this re-initializes parameters for neural net models

    def fit(self, X, y, sample_weight=None):
        pass

    def predict(self, X):
        pass

    def predict_proba(self, X):
        pass

    def score(self, X, y, sample_weight=None):
        pass
```

This inheritance lets you apply a wide range of sklearn functionality, like hyperparameter optimization, to your custom model. Now you can use your model with every method in cleanlab. Here's one example:

```python
from cleanlab.classification import CleanLearning

cl = CleanLearning(clf=YourFavoriteModel())  # has all the same methods of YourFavoriteModel
cl.fit(train_data, train_labels_with_errors)
cl.predict(test_data)
```

Want to see a working example? Here’s a compliant PyTorch MNIST CNN class.

More details are provided in the documentation of cleanlab.classification.CleanLearning.

Note that some libraries give you sklearn compatibility for free. For PyTorch, check out the skorch Python library, which will wrap your PyTorch model into a sklearn-compatible model (example). For TensorFlow/Keras, check out our Keras wrapper. Many libraries also already offer a special scikit-learn API, for example XGBoost and LightGBM.
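To make the `YourFavoriteModel` template concrete, the hypothetical `NearestCentroidModel` below (a toy, not part of cleanlab) fills in every method. It is a minimal sketch under stated assumptions: it omits the `BaseEstimator` parent so that it runs with only the Python standard library (add it back, as the template shows, before using the model with sklearn utilities or `CleanLearning`), it assumes integer labels 0..K-1, and it returns plain lists where a real model would typically return NumPy arrays.

```python
import math


class NearestCentroidModel:
    """Hypothetical toy classifier with fit/predict/predict_proba/score.

    Assumes integer labels 0..K-1, all present in the training data,
    so column j of predict_proba corresponds to class j.
    """

    def __init__(self):
        pass  # no hyperparameters; all learned state is (re)created in fit()

    def fit(self, X, y, sample_weight=None):
        # sample_weight is accepted for interface compatibility but ignored.
        self.centroids_ = []
        for c in sorted(set(y)):
            rows = [x for x, label in zip(X, y) if label == c]
            self.centroids_.append([sum(col) / len(rows) for col in zip(*rows)])
        return self

    def predict_proba(self, X):
        # Inverse-distance weights to each centroid, normalized per example.
        probs = []
        for x in X:
            weights = [1.0 / (1.0 + math.dist(x, c)) for c in self.centroids_]
            total = sum(weights)
            probs.append([w / total for w in weights])
        return probs

    def predict(self, X):
        # Most probable class index for each example.
        return [max(range(len(p)), key=p.__getitem__) for p in self.predict_proba(X)]

    def score(self, X, y, sample_weight=None):
        # Plain accuracy; sample_weight is ignored in this sketch.
        preds = self.predict(X)
        return sum(p == t for p, t in zip(preds, y)) / len(y)


X = [[0.0, 0.0], [0.2, 0.1], [5.0, 5.0], [5.2, 4.9]]
y = [0, 0, 1, 1]
model = NearestCentroidModel().fit(X, y)
print(model.predict([[0.1, 0.1], [4.8, 5.1]]))  # [0, 1]
print(model.score(X, y))                        # 1.0
```

Because `fit` rebuilds `centroids_` from scratch on every call, refitting the same instance never leaks state from a previous fit, which is the property the template's `__init__` comment asks for.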

cleanlab is useful across a wide variety of machine learning tasks. Tasks for which this data-centric AI solution offers dedicated functionality include:

- Binary and multi-class classification

- Multi-label classification (e.g. image/document tagging)

- Token classification (e.g. entity recognition in text)

- Regression (predicting a numerical column in a dataset)

- Image segmentation (images with per-pixel annotations)

- Object detection (images with bounding box annotations)

- Classification with data labeled by multiple annotators

- Active learning with multiple annotators (suggest which data to label or re-label to improve model most)

- Outlier detection (identify atypical data that appears out of distribution)

For other ML tasks, cleanlab can still help you improve your dataset if appropriately applied. Many practical applications are demonstrated in our Example Notebooks.
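For data labeled by multiple annotators, the simplest baseline is majority-vote consensus, with each annotator scored by how often they agree with it. The CROWDLAB method (see the citations below) goes further by also using a trained classifier's predictions; this standard-library sketch shows only the majority-vote baseline, and its input format (one dict per example mapping a hypothetical annotator ID to that annotator's label) is an assumption for illustration.

```python
from collections import Counter


def majority_vote(annotations):
    """Majority-vote consensus plus per-annotator agreement rates.

    annotations: one dict per example, mapping annotator ID -> given label
    (annotators may skip examples). Returns (consensus labels, {annotator: rate}).
    """
    consensus = []
    for votes in annotations:
        # Ties go to the label seen first; real methods handle ties more carefully.
        consensus.append(Counter(votes.values()).most_common(1)[0][0])

    agree, total = Counter(), Counter()
    for votes, label in zip(annotations, consensus):
        for annotator, given in votes.items():
            total[annotator] += 1
            agree[annotator] += int(given == label)
    rates = {a: agree[a] / total[a] for a in total}
    return consensus, rates


annotations = [
    {"ann_a": 0, "ann_b": 0, "ann_c": 1},
    {"ann_a": 1, "ann_b": 1},
    {"ann_a": 2, "ann_b": 2, "ann_c": 0},
    {"ann_b": 1, "ann_c": 1},
]
consensus, rates = majority_vote(annotations)
print(consensus)  # [0, 1, 2, 1]
print(rates)      # {'ann_a': 1.0, 'ann_b': 1.0, 'ann_c': 0.3333333333333333}
```

A weakness of this baseline is that every annotator's vote counts equally and examples with a single annotation get no second opinion, which is part of why model-assisted approaches exist.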

cleanlab is based on peer-reviewed research. Here are relevant papers to cite if you use this package:

Confident Learning (JAIR '21)

```bibtex
@article{northcutt2021confidentlearning,
  title={Confident Learning: Estimating Uncertainty in Dataset Labels},
  author={Curtis G. Northcutt and Lu Jiang and Isaac L. Chuang},
  journal={Journal of Artificial Intelligence Research (JAIR)},
  volume={70},
  pages={1373--1411},
  year={2021}
}
```

Rank Pruning (UAI '17)

```bibtex
@inproceedings{northcutt2017rankpruning,
  author={Northcutt, Curtis G. and Wu, Tailin and Chuang, Isaac L.},
  title={Learning with Confident Examples: Rank Pruning for Robust Classification with Noisy Labels},
  booktitle={Proceedings of the Thirty-Third Conference on Uncertainty in Artificial Intelligence},
  series={UAI'17},
  year={2017},
  location={Sydney, Australia},
  numpages={10},
  url={http://auai.org/uai2017/proceedings/papers/35.pdf},
  publisher={AUAI Press},
}
```

Label Quality Scoring (ICML '22)

```bibtex
@inproceedings{kuan2022labelquality,
  title={Model-agnostic label quality scoring to detect real-world label errors},
  author={Kuan, Johnson and Mueller, Jonas},
  booktitle={ICML DataPerf Workshop},
  year={2022}
}
```

Out-of-Distribution Detection (ICML '22)

```bibtex
@inproceedings{kuan2022ood,
  title={Back to the Basics: Revisiting Out-of-Distribution Detection Baselines},
  author={Kuan, Johnson and Mueller, Jonas},
  booktitle={ICML Workshop on Principles of Distribution Shift},
  year={2022}
}
```

Token Classification Label Errors (NeurIPS '22)

```bibtex
@inproceedings{wang2022tokenerrors,
  title={Detecting label errors in token classification data},
  author={Wang, Wei-Chen and Mueller, Jonas},
  booktitle={NeurIPS Workshop on Interactive Learning for Natural Language Processing (InterNLP)},
  year={2022}
}
```

CROWDLAB for Data with Multiple Annotators (NeurIPS '22)

```bibtex
@inproceedings{goh2022crowdlab,
  title={CROWDLAB: Supervised learning to infer consensus labels and quality scores for data with multiple annotators},
  author={Goh, Hui Wen and Tkachenko, Ulyana and Mueller, Jonas},
  booktitle={NeurIPS Human in the Loop Learning Workshop},
  year={2022}
}
```

ActiveLab: Active learning with data re-labeling (ICLR '23)

```bibtex
@inproceedings{goh2023activelab,
  title={ActiveLab: Active Learning with Re-Labeling by Multiple Annotators},
  author={Goh, Hui Wen and Mueller, Jonas},
  booktitle={ICLR Workshop on Trustworthy ML},
  year={2023}
}
```

Incorrect Annotations in Multi-Label Classification (ICLR '23)

```bibtex
@inproceedings{thyagarajan2023multilabel,
  title={Identifying Incorrect Annotations in Multi-Label Classification Data},
  author={Thyagarajan, Aditya and Snorrason, Elías and Northcutt, Curtis and Mueller, Jonas},
  booktitle={ICLR Workshop on Trustworthy ML},
  year={2023}
}
```

Detecting Dataset Drift and Non-IID Sampling (ICML '23)

```bibtex
@inproceedings{cummings2023drift,
  title={Detecting Dataset Drift and Non-IID Sampling via k-Nearest Neighbors},
  author={Cummings, Jesse and Snorrason, Elías and Mueller, Jonas},
  booktitle={ICML Workshop on Data-centric Machine Learning Research},
  year={2023}
}
```

Detecting Errors in Numerical Data (ICML '23)

```bibtex
@inproceedings{zhou2023errors,
  title={Detecting Errors in Numerical Data via any Regression Model},
  author={Zhou, Hang and Mueller, Jonas and Kumar, Mayank and Wang, Jane-Ling and Lei, Jing},
  booktitle={ICML Workshop on Data-centric Machine Learning Research},
  year={2023}
}
```

ObjectLab: Mislabeled Images in Object Detection Data (ICML '23)

```bibtex
@inproceedings{tkachenko2023objectlab,
  title={ObjectLab: Automated Diagnosis of Mislabeled Images in Object Detection Data},
  author={Tkachenko, Ulyana and Thyagarajan, Aditya and Mueller, Jonas},
  booktitle={ICML Workshop on Data-centric Machine Learning Research},
  year={2023}
}
```

Label Errors in Segmentation Data (ICML '23)

```bibtex
@inproceedings{lad2023segmentation,
  title={Estimating label quality and errors in semantic segmentation data via any model},
  author={Lad, Vedang and Mueller, Jonas},
  booktitle={ICML Workshop on Data-centric Machine Learning Research},
  year={2023}
}
```

To understand/cite other cleanlab functionality not described above, check out our additional publications.

- Example Notebooks demonstrating practical applications of this package

- NeurIPS 2021 paper: Pervasive Label Errors in Test Sets Destabilize Machine Learning Benchmarks

- Cleanlab Studio: No-code Data Improvement

While this open-source library finds data issues, its utility depends on you having a decent existing ML model and an interface to efficiently fix these issues in your dataset. Providing all these pieces, Cleanlab Studio is a no-code platform to find and fix problems in real-world ML datasets. Cleanlab Studio automatically runs optimized versions of the algorithms from this open-source library on top of AutoML & Foundation models fit to your data, and presents detected issues in a smart data editing interface. It's a data cleaning assistant to quickly turn unreliable data into reliable models/insights (via AI/automation + streamlined UX). Try it for free!

- The best place to learn is our Slack community.

- Have ideas for the future of cleanlab? How are you using cleanlab? Join the discussion and check out our active/planned Projects and what we could use your help with.

- Interested in contributing? See the contributing guide and ideas on useful contributions. We welcome your help building a standard open-source platform for data-centric AI!

- Have code improvements for cleanlab? See the development guide.

- Have an issue with cleanlab? Search our FAQ and existing issues, or submit a new issue.

- Need professional help with cleanlab? Join our #help Slack channel and message us there, or reach out via email: team@cleanlab.ai

Copyright (c) 2017 Cleanlab Inc.

cleanlab is free software: you can redistribute it and/or modify it under the terms of the GNU Affero General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

cleanlab is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

See GNU Affero General Public LICENSE for details. You can email us to discuss licensing: team@cleanlab.ai

Commercial licensing is available for teams and enterprises that want to use cleanlab in production workflows, but are unable to open-source their code as is required by the current license. Please email us: team@cleanlab.ai