Overview [paper]

This repo contains a learning method for denoising the gyroscopes of Inertial Measurement Units (IMUs) using ground-truth data. For attitude dead-reckoning, the resulting algorithm outperforms top-ranked visual-inertial odometry systems [3-5] in attitude estimation, although it only uses signals from a low-cost IMU. This performance comes from a well-chosen model and a proper loss function for orientation increments. Our approach builds upon a neural network based on dilated convolutions and does not require any recurrent neural network.
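Dilated convolutions let a purely feed-forward network look far into the past without recurrence: a layer with kernel size k and dilation d extends the window of past samples by (k - 1)·d. The framework-free sketch below illustrates this with causal 1D convolutions in plain Python. The kernel sizes and dilations are hypothetical, not this repo's configuration, and the repo builds its network with PyTorch rather than with loops like these.

```python
from typing import List, Sequence


def causal_dilated_conv1d(x: Sequence[float], weights: Sequence[float], dilation: int) -> List[float]:
    """Causal dilated 1D convolution: out[t] = sum_i weights[i] * x[t - i * dilation].

    Samples before the start of the signal are treated as zeros (left zero-padding),
    so out[t] never depends on future samples.
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(weights):
            idx = t - i * dilation  # look back i * dilation samples
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


def receptive_field(kernel_sizes: Sequence[int], dilations: Sequence[int]) -> int:
    """Number of input samples (current one included) that influence one output sample."""
    return 1 + sum((k - 1) * d for k, d in zip(kernel_sizes, dilations))


# Hypothetical configuration: 4 layers with kernel size 7 and growing dilation.
kernel_sizes = [7, 7, 7, 7]
dilations = [1, 4, 16, 64]
rf = receptive_field(kernel_sizes, dilations)
print(rf)  # 511

# Empirical check: push an impulse through the stack (all-ones weights, no nonlinearity).
signal = [0.0] * 600
signal[0] = 1.0
for k, d in zip(kernel_sizes, dilations):
    signal = causal_dilated_conv1d(signal, [1.0] * k, d)
last_nonzero = max(t for t, v in enumerate(signal) if v != 0.0)
print(last_nonzero + 1 == rf)  # True: the impulse spreads over exactly rf samples
```

In this single-channel sketch, four layers with 28 weights in total already cover 511 past samples, which is why such a network can exploit a long window of IMU data without a recurrent state.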

Our implementation is based on Python 3 and PyTorch. We tested the code under Ubuntu 16.04, Python 3.5, and PyTorch 1.5. The codebase is licensed under the MIT License.

- Install the correct version of PyTorch (the command below installs a nightly build for CUDA 10.1)

```bash
pip install --pre torch -f https://download.pytorch.org/whl/nightly/cu101/torch_nightly.html
```

- Clone this repo and create empty directories

```bash
git clone https://github.com/mbrossar/denoise-imu-gyro.git
mkdir denoise-imu-gyro/data
mkdir denoise-imu-gyro/results
```

- Install the required Python packages, e.g., with pip

```bash
pip install -r denoise-imu-gyro/requirements.txt
```

- Download the EuRoC [1] and TUM-VI [2] datasets, reformatted as pickle files, at this url; extract them and copy them into the `data` folder.

```bash
wget "https://cloud.mines-paristech.fr/index.php/s/MRXzSMDX829Qb6k/download"
unzip download -d denoise-imu-gyro/data
rm download
```

These files can alternatively be generated from the original EuRoC and TUM-VI datasets: after downloading them, provide their data paths and launch the main file, which generates the files.

- Download the optimized parameters at this url, extract them, and copy them into the `results` folder.

```bash
wget "https://cloud.mines-paristech.fr/index.php/s/AMe4V1KYvyHtvEC/download"
unzip download -d denoise-imu-gyro/results
rm download
```

- Test on the dataset of your choice!

```bash
cd denoise-imu-gyro
python3 main_EUROC.py
# or alternatively
# python3 main_TUMVI.py
```

You can then compare results with the evaluation toolbox of [3].

You can train the method by uncommenting the two lines after `# train` in the main files. Then edit the configuration to obtain results with other sets of parameters. Training takes roughly 5 minutes per dataset with a decent GPU.

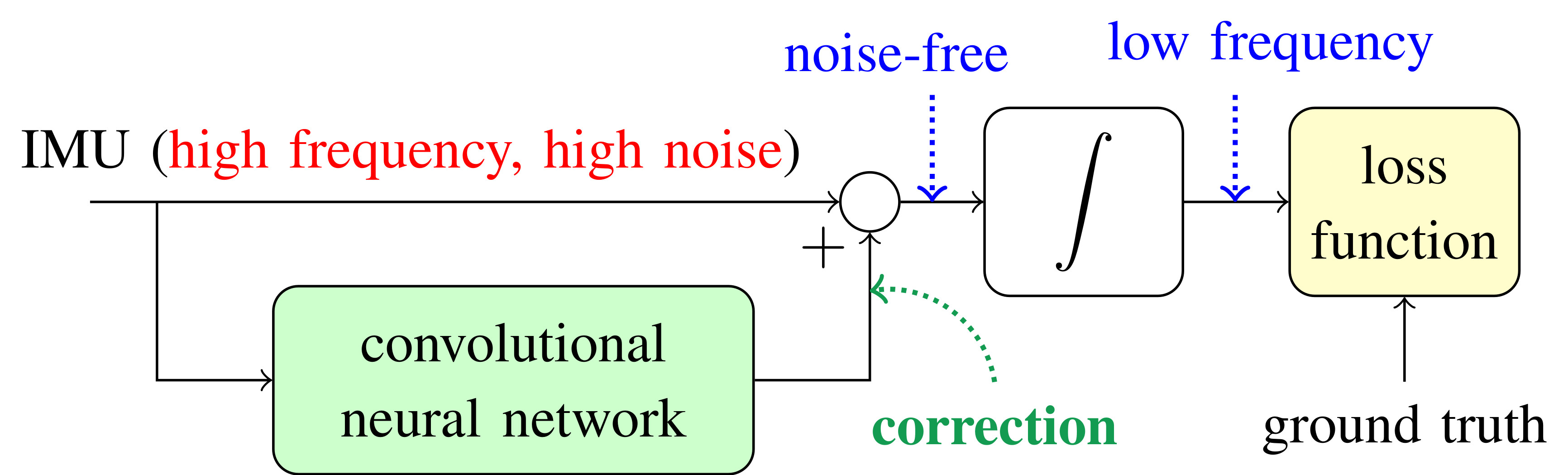

The convolutional neural network computes gyro corrections (based on past IMU measurements) that filter undesirable errors out of the raw IMU signals. We then perform open-loop time integration on the corrected measurements to regress low-frequency errors between ground-truth and estimated orientation increments.
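The chain described above (corrected gyro rates, open-loop integration, comparison of orientation increments) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the repo's implementation: orientations are 3×3 rotation matrices stored as nested lists, integration uses the standard update R_{n+1} = R_n exp(ω_n dt) with the Rodrigues formula, and the sampling period, gyro bias, and "correction" (a constant that cancels the bias, standing in for the network output) are hypothetical. The increment error is measured as the rotation angle of δR_gt^T δR_est; the repo's actual loss may be defined differently.

```python
import math
from typing import Dict, List, Sequence

Mat3 = List[List[float]]


def skew(v: Sequence[float]) -> Mat3:
    """Skew-symmetric matrix [v]_x, so that [v]_x u equals the cross product v x u."""
    x, y, z = v
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a: Mat3) -> Mat3:
    return [[a[j][i] for j in range(3)] for i in range(3)]


def so3_exp(phi: Sequence[float]) -> Mat3:
    """Rodrigues formula: rotation matrix exp([phi]_x) of a rotation vector phi (rad)."""
    theta = math.sqrt(sum(p * p for p in phi))
    K = skew(phi)
    K2 = matmul(K, K)
    if theta < 1e-8:
        a, b = 1.0, 0.5  # limits of sin(t)/t and (1 - cos(t))/t^2 as t -> 0
    else:
        a, b = math.sin(theta) / theta, (1.0 - math.cos(theta)) / theta**2
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j] for j in range(3)] for i in range(3)]


def so3_angle(R: Mat3) -> float:
    """Rotation angle of R in [0, pi], i.e. the norm of log(R)."""
    c = (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0  # cos(angle)
    s = 0.5 * math.sqrt((R[2][1] - R[1][2]) ** 2 + (R[0][2] - R[2][0]) ** 2 + (R[1][0] - R[0][1]) ** 2)  # sin(angle)
    return math.atan2(s, c)


def integrate(gyro: Sequence[Sequence[float]], dt: float) -> List[Mat3]:
    """Open-loop integration R_{n+1} = R_n exp(omega_n * dt), starting from the identity."""
    Rs = [so3_exp([0.0, 0.0, 0.0])]
    for w in gyro:
        Rs.append(matmul(Rs[-1], so3_exp([wi * dt for wi in w])))
    return Rs


def increment(Rs: List[Mat3], i: int, j: int) -> Mat3:
    """Orientation increment dR_ij = R_i^T R_j; it does not depend on the initial orientation."""
    return matmul(transpose(Rs[i]), Rs[j])


dt = 0.005  # hypothetical 200 Hz IMU
n = 400  # 2 s of data
gyro_true = [[0.0, 0.0, 0.5]] * n  # constant yaw rate (rad/s)
bias = [0.01, -0.02, 0.03]  # hypothetical constant gyro bias (rad/s)
gyro_raw = [[w + b for w, b in zip(wn, bias)] for wn in gyro_true]
corrections = [[-b for b in bias]] * n  # hypothetical stand-in for the network output
gyro_corr = [[w + c for w, c in zip(wn, cn)] for wn, cn in zip(gyro_raw, corrections)]

dR_gt = increment(integrate(gyro_true, dt), 0, n)
errors: Dict[str, float] = {}
for name, gyro in [("raw", gyro_raw), ("corrected", gyro_corr)]:
    dR_est = increment(integrate(gyro, dt), 0, n)
    errors[name] = so3_angle(matmul(transpose(dR_gt), dR_est))  # angle of dR_gt^T dR_est
print(errors)
```

With the raw signal, the bias accumulates into an increment error of roughly 0.07 rad over 2 s, while the corrected signal brings it down to numerical noise. Comparing increments rather than absolute orientations keeps the error independent of the initial attitude.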

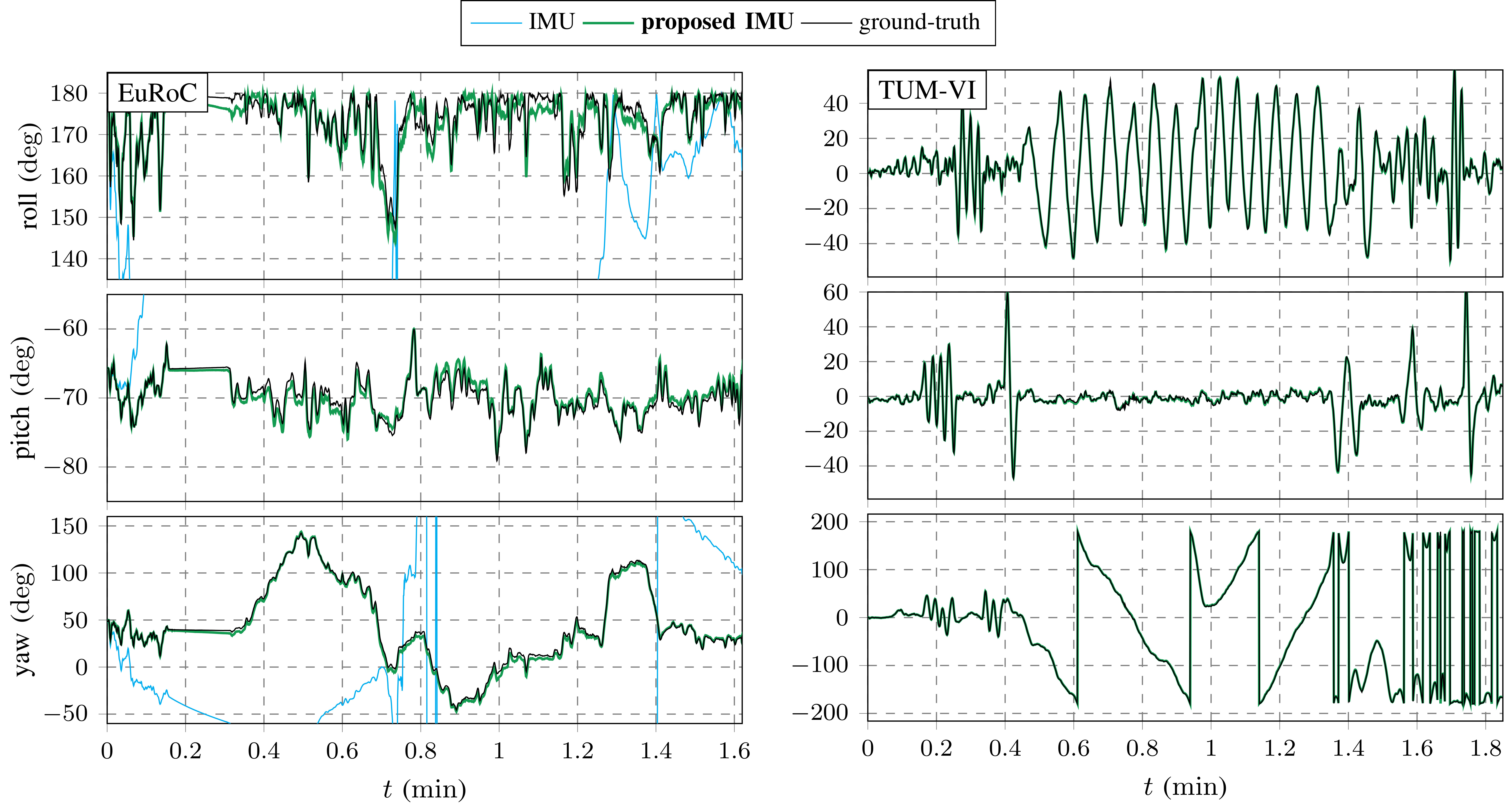

Orientation estimates on the test sequences MH 04 difficult of [1] (left) and room 4 of [2] (right). Our method removes errors from the IMU measurements.

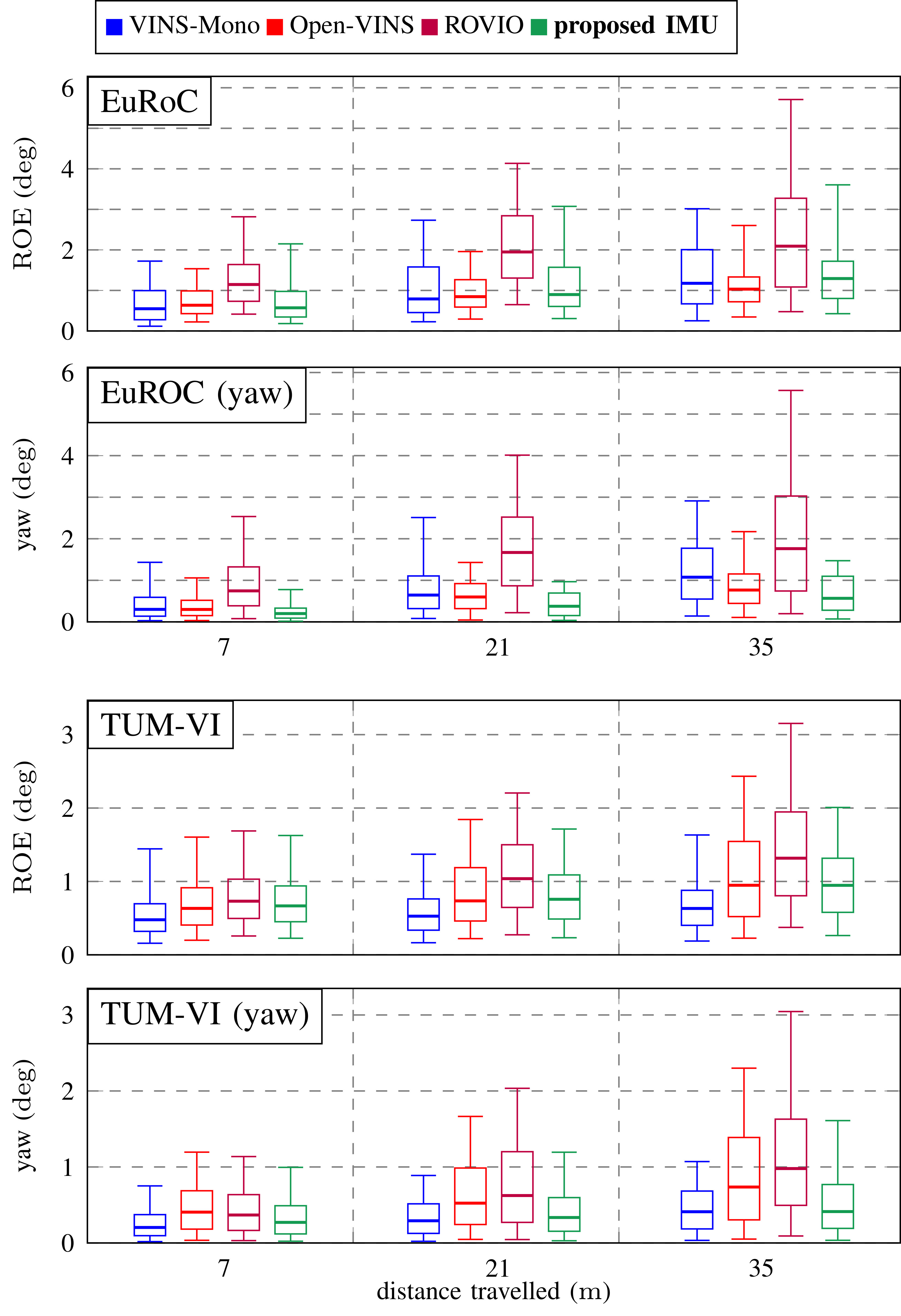

Relative Orientation Error (ROE) in terms of 3D orientation and yaw errors on the test sequences. Our method competes with VIO methods although it relies only on IMU signals.
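The Relative Orientation Error compares orientation increments between pairs of timestamps, so a constant offset in the initial attitude does not count as error. Below is a minimal sketch under stated assumptions: orientations are unit quaternions (w, x, y, z), pairs are a fixed number of samples apart (evaluation toolboxes such as the one of [3] typically select pairs by traveled distance instead), and the yaw error is taken as the yaw component (ZYX convention) of the error rotation. The helper names and the synthetic data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit norm


def q_mul(p: Quat, q: Quat) -> Quat:
    """Hamilton product p * q."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def q_conj(q: Quat) -> Quat:
    """Conjugate, i.e. the inverse rotation for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_angle(q: Quat) -> float:
    """Rotation angle of a unit quaternion, in [0, pi] (handles the q / -q ambiguity)."""
    w, x, y, z = q
    return 2.0 * math.atan2(math.sqrt(x * x + y * y + z * z), abs(w))


def q_yaw(q: Quat) -> float:
    """Yaw (rotation about z) of a unit quaternion, ZYX Euler convention."""
    w, x, y, z = q
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def relative_orientation_errors(
    q_gt: Sequence[Quat], q_est: Sequence[Quat], delta: int
) -> Tuple[List[float], List[float]]:
    """3D and yaw errors (rad) of the relative rotations between samples i and i + delta."""
    err_3d, err_yaw = [], []
    for i in range(len(q_gt) - delta):
        d_gt = q_mul(q_conj(q_gt[i]), q_gt[i + delta])  # ground-truth increment
        d_est = q_mul(q_conj(q_est[i]), q_est[i + delta])  # estimated increment
        e = q_mul(q_conj(d_gt), d_est)  # error rotation between the two increments
        err_3d.append(q_angle(e))
        err_yaw.append(abs(q_yaw(e)))
    return err_3d, err_yaw


def yaw_quat(psi: float) -> Quat:
    """Unit quaternion of a pure rotation by psi (rad) about the z axis."""
    return (math.cos(psi / 2.0), 0.0, 0.0, math.sin(psi / 2.0))


# Hypothetical data: the estimate overestimates the yaw rate by 10%.
q_gt = [yaw_quat(0.01 * n) for n in range(100)]
q_est = [yaw_quat(0.011 * n) for n in range(100)]
e3d, eyaw = relative_orientation_errors(q_gt, q_est, delta=10)
print(sum(e3d) / len(e3d), sum(eyaw) / len(eyaw))  # both about 0.01 rad
```

Here every 10-sample increment is off by 0.01 rad about the z axis, so the 3D and yaw errors coincide; a roll or pitch error would raise only the 3D error.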

The paper related to this repo, Denoising IMU Gyroscope with Deep Learning for Open-Loop Attitude Estimation, by M. Brossard, S. Bonnabel and A. Barrau (2020), is available at this url.

If you use this code in your research, please cite:

```bibtex
@article{brossard2020denoising,
  author = {Martin Brossard and Silv\`ere Bonnabel and Axel Barrau},
  title = {{Denoising IMU Gyro with Deep Learning for Open-Loop Orientation Estimation}},
  year = {2020}
}
```

This code was written by the Centre for Robotics at MINES ParisTech, Paris, France.

Martin Brossard^, Axel Barrau^ and Silvère Bonnabel^.

^MINES ParisTech, PSL Research University, Centre for Robotics, 60 Boulevard Saint-Michel, 75006 Paris, France.

[1] M. Burri, J. Nikolic, P. Gohl, T. Schneider, J. Rehder, S. Omari, M. W. Achtelik, and R. Siegwart, "The EuRoC Micro Aerial Vehicle Datasets", The International Journal of Robotics Research, vol. 35, no. 10, pp. 1157–1163, 2016.

[2] D. Schubert, T. Goll, N. Demmel, V. Usenko, J. Stückler, and D. Cremers, "The TUM VI Benchmark for Evaluating Visual-Inertial Odometry", in International Conference on Intelligent Robots and Systems (IROS), IEEE, pp. 1680–1687, 2018.

[3] P. Geneva, K. Eckenhoff, W. Lee, Y. Yang, and G. Huang, "OpenVINS: A Research Platform for Visual-Inertial Estimation", IROS Workshop on Visual-Inertial Navigation: Challenges and Applications, 2019.

[4] T. Qin, P. Li, and S. Shen, "VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator", IEEE Transactions on Robotics, vol. 34, no. 4, pp. 1004–1020, 2018.

[5] M. Bloesch, M. Burri, S. Omari, M. Hutter, and R. Siegwart, "Iterated Extended Kalman Filter Based Visual-Inertial Odometry Using Direct Photometric Feedback", The International Journal of Robotics Research, vol. 36, no. 10, pp. 1053–1072, 2017.