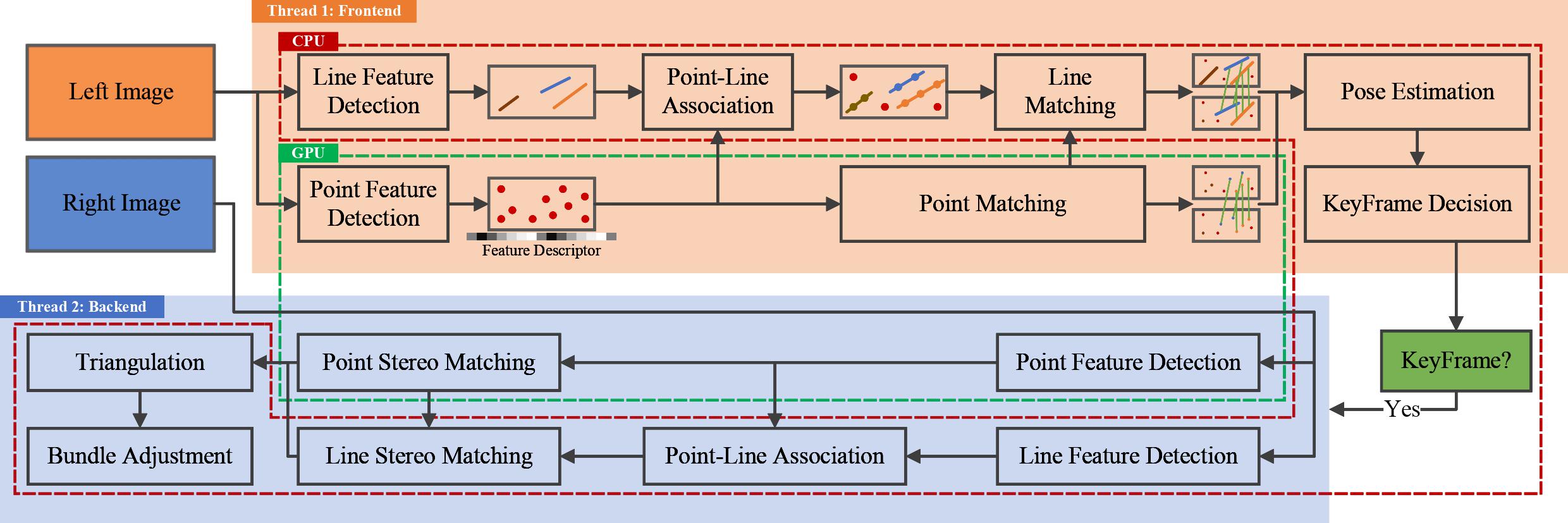

AirVO is an illumination-robust and accurate stereo visual odometry (VO) system based on point and line features. It is a hybrid VO system that combines the efficiency of traditional optimization techniques with the robustness of learning-based methods. To be robust to illumination variation, we introduce learning-based feature extraction (SuperPoint) and matching (SuperGlue) into the system. Moreover, we propose a new line processing pipeline for VO that associates 2D lines with learning-based 2D points on the image, leading to more robust feature matching and triangulation. This method improves the accuracy and reliability of VO, especially in illumination-challenging environments. By accelerating the CNN and GNN parts with the Nvidia TensorRT Toolkit, our point feature detection and matching run more than 5× faster than the original code. The system runs at about 15 Hz on the Nvidia Jetson AGX Xavier (a low-power embedded device) and 40 Hz on a notebook PC.
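The idea of associating 2D lines with 2D points can be illustrated with a minimal sketch. It assumes a simple rule that is not stated in this README: a keypoint is attached to a line segment when its pixel distance to the segment is below a threshold. The function names and the 3-pixel default are hypothetical, chosen only for illustration, and are not taken from the AirVO code.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def point_to_segment_distance(p: Point, seg: Segment) -> float:
    """Euclidean distance from point p to the closest point on segment seg."""
    (x1, y1), (x2, y2) = seg
    dx, dy = x2 - x1, y2 - y1
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        # Degenerate segment: both endpoints coincide.
        return math.hypot(p[0] - x1, p[1] - y1)
    # Projection parameter of p onto the segment, clamped to [0, 1].
    t = ((p[0] - x1) * dx + (p[1] - y1) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (x1 + t * dx), p[1] - (y1 + t * dy))


def associate_points_with_lines(
    points: Sequence[Point],
    segments: Sequence[Segment],
    max_distance: float = 3.0,  # assumed threshold in pixels
) -> List[List[int]]:
    """For each segment, list the indices of points within max_distance of it."""
    return [
        [i for i, p in enumerate(points)
         if point_to_segment_distance(p, seg) <= max_distance]
        for seg in segments
    ]
```

Once points are attached to lines in this way, matched points in two images can vote for which lines correspond, which is one plausible reason such an association helps line matching under changing illumination.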

Authors: Kuan Xu, Yuefan Hao, Shenghai Yuan, Chen Wang, and Lihua Xie

AirVO: An Illumination-Robust Point-Line Visual Odometry, Kuan Xu, Yuefan Hao, Shenghai Yuan, Chen Wang and Lihua Xie, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023.

If you use AirVO, please cite:

@inproceedings{xu2023airvo,

title={AirVO: An Illumination-Robust Point-Line Visual Odometry},

author={Xu, Kuan and Hao, Yuefan and Yuan, Shenghai and Wang, Chen and Xie, Lihua},

booktitle={IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

year={2023}

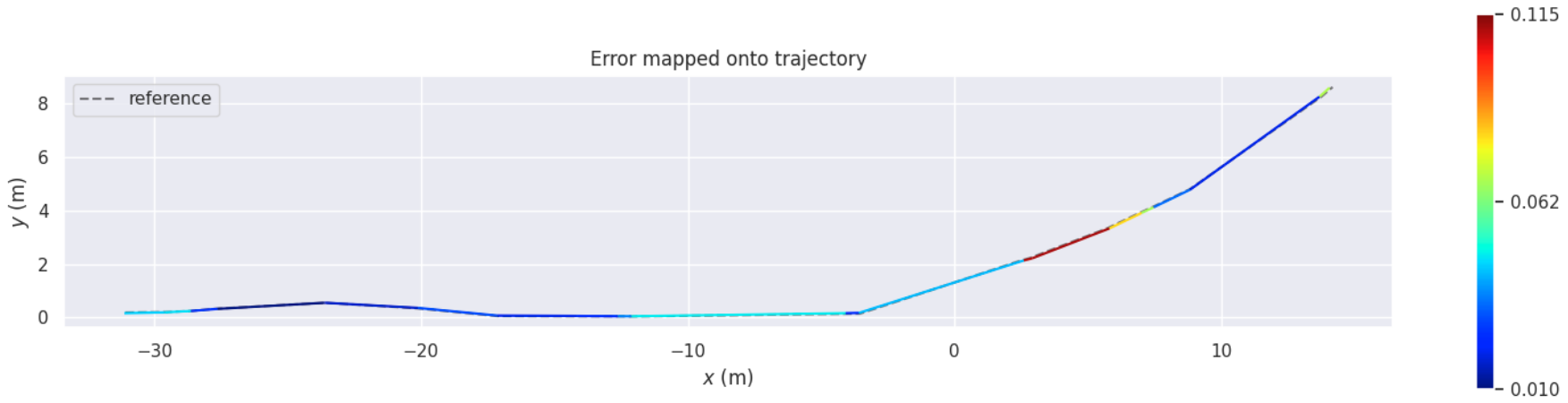

}UMA-VI dataset contains many sequences where images may suddenly darken as a result of turning off the lights. Here are demos on two sequences.

The OIVIO dataset was collected in mines and tunnels with onboard illumination.

We also test AirVO on sequences collected with a Realsense D435I in an environment with continuously changing illumination.

We collect the data in a factory.

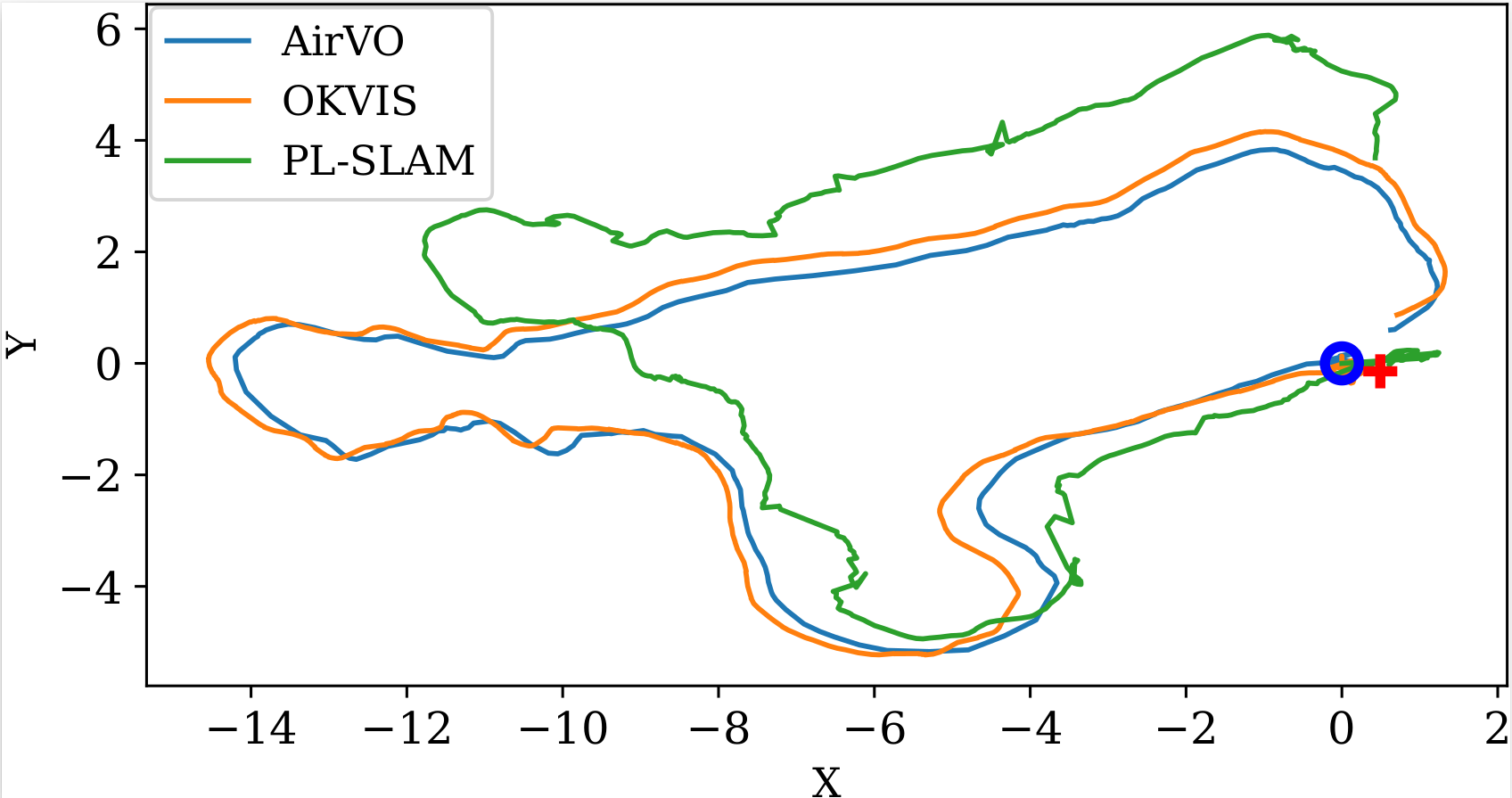

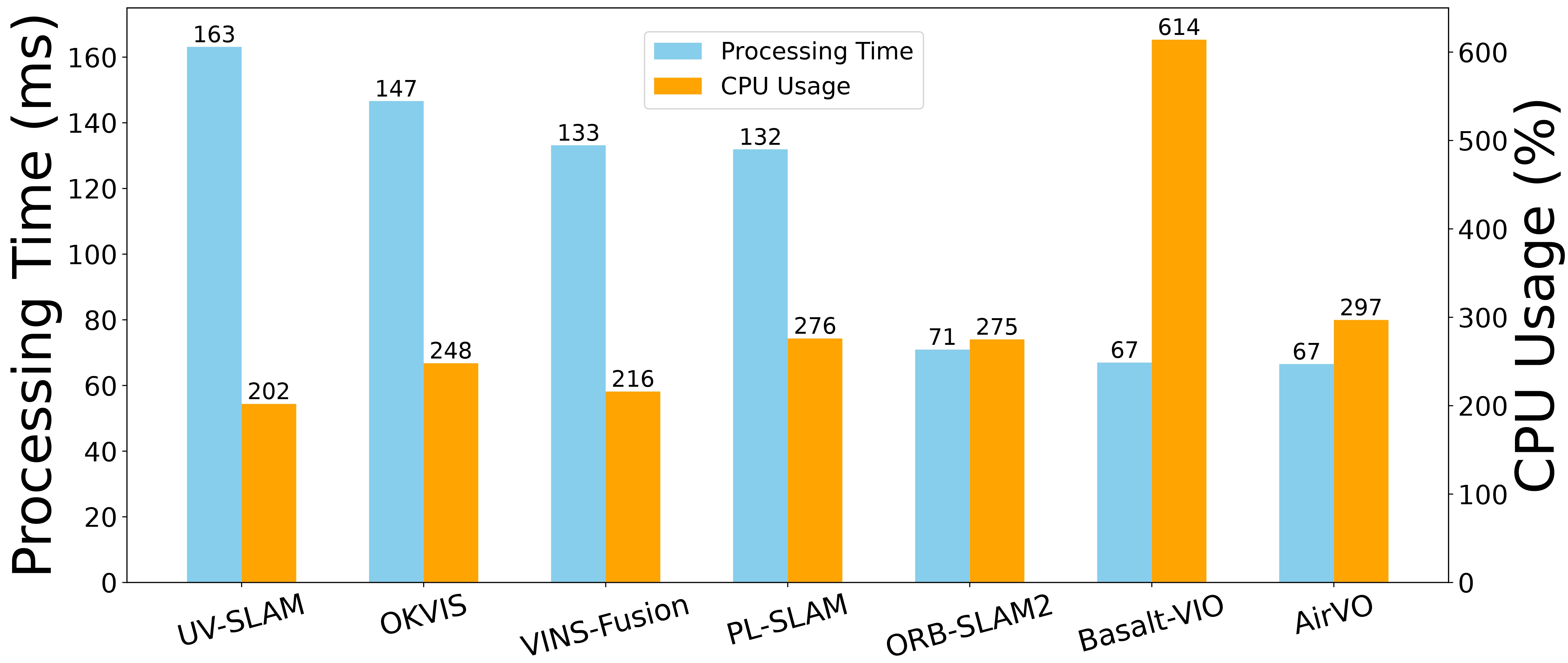

The evaluation is performed on the Nvidia Jetson AGX Xavier (2018), a low-power embedded platform with an 8-core ARM v8.2 64-bit CPU and a low-power 512-core NVIDIA Volta GPU. The resolution of the input image sequence is 640 × 480. We extract 200 points and disable the loop closure, relocalization and visualization parts for all algorithms.

- OpenCV 4.2

- Eigen 3

- Ceres 2.0.0

- G2O (tag:20230223_git)

- TensorRT 8.4

- CUDA 11.6

- python

- onnx

- ROS noetic

- Boost

- Glog

For Nvidia GeForce RTX 40 series, please use TensorRT 8.5 and CUDA 11.8 instead.
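As a small illustration of the version note above, the following sketch maps a GPU name to the TensorRT and CUDA versions listed in this README. The helper name `recommended_stack` and the name-matching pattern are hypothetical; the pattern simply assumes that RTX 40 series cards report a name such as "NVIDIA GeForce RTX 4090".

```python
import re
from typing import Dict

# Versions taken from the dependency list in this README.
DEFAULT_STACK = {"tensorrt": "8.4", "cuda": "11.6"}
RTX40_STACK = {"tensorrt": "8.5", "cuda": "11.8"}

# Assumption: RTX 40 series cards are named "GeForce RTX 40xx".
_RTX40_PATTERN = re.compile(r"GeForce\s+RTX\s*40\d{2}", re.IGNORECASE)


def recommended_stack(gpu_name: str) -> Dict[str, str]:
    """Return the TensorRT/CUDA versions suggested for the given GPU name."""
    if _RTX40_PATTERN.search(gpu_name):
        return dict(RTX40_STACK)
    return dict(DEFAULT_STACK)
```

The GPU name can be obtained, for example, from `nvidia-smi --query-gpu=name --format=csv,noheader` and passed to `recommended_stack`.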

docker pull xukuanhit/air_slam:v1

docker run -it --env DISPLAY=$DISPLAY --volume /tmp/.X11-unix:/tmp/.X11-unix --privileged --runtime nvidia --gpus all --volume ${PWD}:/workspace --workdir /workspace --name air_slam xukuanhit/air_slam:v1 /bin/bash

For Nvidia GeForce RTX 40 series:

docker pull xukuanhit/air_slam:v3

docker run -it --env DISPLAY=$DISPLAY --volume /tmp/.X11-unix:/tmp/.X11-unix --privileged --runtime nvidia --gpus all --volume ${PWD}:/workspace --workdir /workspace --name air_slam xukuanhit/air_slam:v3 /bin/bash

The data should be organized in the Autonomous Systems Lab (ASL) dataset format, as follows:

dataroot
├── cam0
│   └── data
│       ├── 00001.jpg
│       ├── 00002.jpg
│       ├── 00003.jpg
│       └── ......
└── cam1
    └── data
        ├── 00001.jpg
        ├── 00002.jpg
        ├── 00003.jpg
        └── ......
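Before launching, it can help to verify that a dataset follows the layout above. The sketch below checks that `cam0/data` and `cam1/data` exist and contain images. It is not part of AirVO: the function names are hypothetical, and it assumes that the two images of a stereo pair share the same file name, as in the tree shown.

```python
from pathlib import Path
from typing import Dict, List

# Assumption: images are stored as .jpg/.jpeg/.png; adjust as needed.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_images(data_dir: Path) -> List[str]:
    """Return the sorted image file names found directly in data_dir."""
    return sorted(
        p.name for p in data_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def check_stereo_layout(dataroot) -> List[str]:
    """Return a list of problems; an empty list means the layout looks fine."""
    root = Path(dataroot)
    problems: List[str] = []
    names: Dict[str, List[str]] = {}
    for cam in ("cam0", "cam1"):
        data_dir = root / cam / "data"
        if not data_dir.is_dir():
            problems.append(f"missing directory: {cam}/data")
            continue
        names[cam] = list_images(data_dir)
        if not names[cam]:
            problems.append(f"no images found in {cam}/data")
    if len(names) == 2:
        left, right = set(names["cam0"]), set(names["cam1"])
        # Assumption: a stereo pair shares the same file name in both folders.
        if left - right:
            problems.append(f"{len(left - right)} image(s) only in cam0/data")
        if right - left:
            problems.append(f"{len(right - left)} image(s) only in cam1/data")
    return problems
```

For example, `check_stereo_layout("/path/to/dataroot")` returns an empty list when both camera folders are present and hold the same set of image names.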

cd ~/catkin_ws/src

git clone https://github.com/xukuanHIT/AirVO.git

cd ../

catkin_make

source ~/catkin_ws/devel/setup.bash

Note: Generating ".engine" files needs some time on the first run.

roslaunch air_vo oivio.launch

roslaunch air_vo uma_bumblebee_indoor.launch

roslaunch air_vo euroc.launch

We would like to thank the authors of SuperPoint and SuperGlue for making their projects public.