Unsupervised Generative Disentangled Action Embedding Extraction

Unsupervised: We train without action supervision.

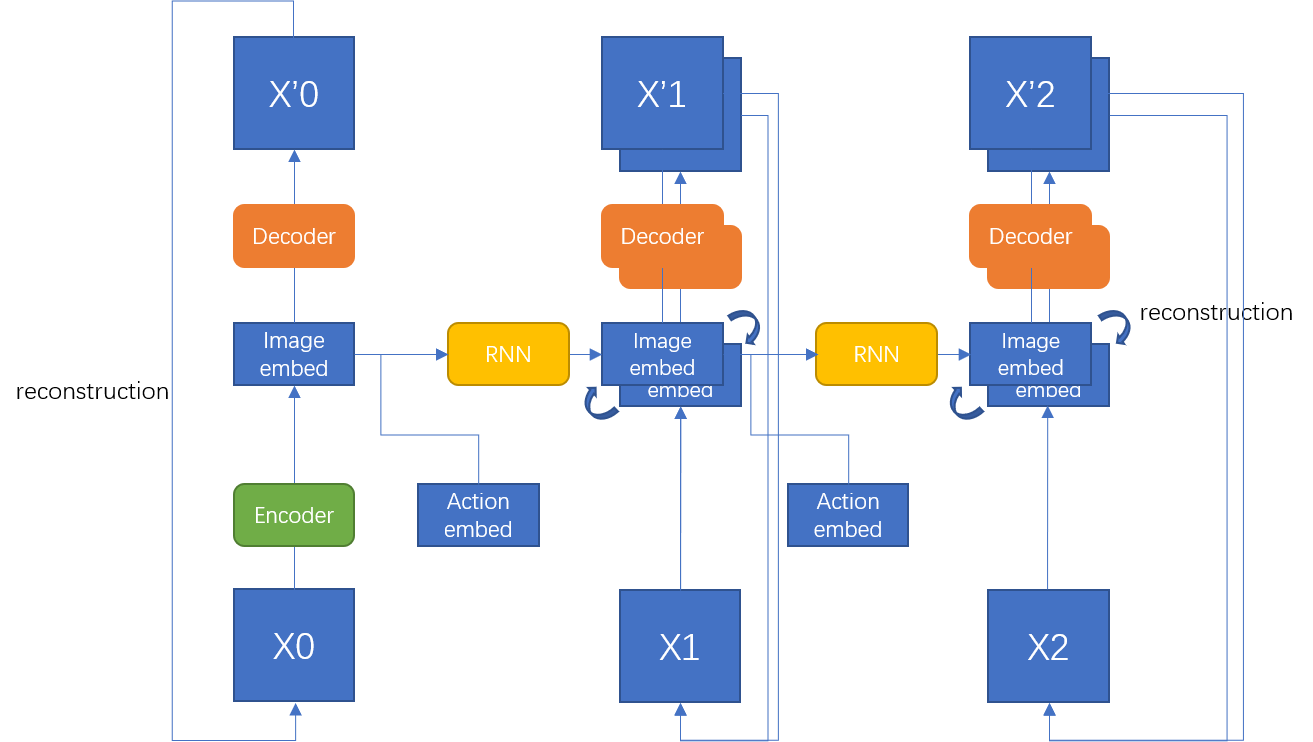

Generative: We use a generative model.

Disentangled: We wish to extract the action embedding only, which is object-agnostic.

Model

Slides

Training and testing

Our framework can be used in several modes. In the motion transfer mode, a static image will be animated using a driving video. In the image-to-video translation mode, given a static image, the framework will predict future frames.

Installation

We support Python 3. To install the dependencies, run:

pip3 install -r requirements.txt

YAML configs

There is one configuration file per dataset (config/dataset_name.yaml). See config/actions.yaml for a description of each parameter.
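To see which datasets have a config, the config folder can be enumerated with a few lines of Python. This is only a sketch: it assumes nothing beyond the config/dataset_name.yaml layout described above, and the helper name `list_dataset_configs` is hypothetical, not part of the repository.

```python
from pathlib import Path


def list_dataset_configs(config_dir="config"):
    """Return {dataset_name: path} for every *.yaml file in config_dir.

    Hypothetical helper, not part of the repository. It only assumes
    the config/dataset_name.yaml layout described in this README.
    """
    root = Path(config_dir)
    if not root.is_dir():
        # No config folder here (e.g. run from the wrong directory).
        return {}
    # The file stem (name without .yaml) is taken as the dataset name.
    return {p.stem: p for p in sorted(root.glob("*.yaml"))}


if __name__ == "__main__":
    for name, path in list_dataset_configs().items():
        print(f"{name}: {path}")
```

Run it from the repository root so that the relative config path resolves.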

Training

To train a model on a specific dataset, run:

CUDA_VISIBLE_DEVICES=0 python3 run.py --config config/dataset_name.yaml

The code will create a new time-stamped folder in the log directory for each run.

Checkpoints will be saved to this folder.

To check the loss values during training, see log.txt.

You can also check training data reconstructions in the train-vis subfolder.
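Because every run gets its own time-stamped folder, it can be handy to locate the newest one and print the end of its log.txt. The sketch below is one possible way to do that. The root folder name "log" and both helper names are assumptions, and the newest run is picked by modification time rather than by parsing the timestamp in the folder name, since the exact name format is not documented here.

```python
from pathlib import Path


def latest_run_dir(log_root="log"):
    """Return the most recently modified run folder under log_root, or None.

    Hypothetical helper; the folder name "log" is an assumption.
    """
    root = Path(log_root)
    if not root.is_dir():
        return None
    runs = [p for p in root.iterdir() if p.is_dir()]
    # Pick by modification time so the timestamp format does not matter.
    return max(runs, key=lambda p: p.stat().st_mtime, default=None)


def tail_log(run_dir, n=10):
    """Return the last n (n >= 1) lines of log.txt in run_dir, or [] if absent."""
    log_file = Path(run_dir) / "log.txt"
    if not log_file.is_file():
        return []
    return log_file.read_text().splitlines()[-n:]


if __name__ == "__main__":
    run = latest_run_dir()
    if run is not None:
        print(f"Latest run: {run}")
        print("\n".join(tail_log(run)))
```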

Image-to-video translation

To perform image-to-video translation, run:

CUDA_VISIBLE_DEVICES=0 python3 run.py --config config/dataset_name.yaml --mode prediction --checkpoint path/to/checkpoint
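When launching several runs from a script, the training and prediction commands can be assembled programmatically. The following is a minimal sketch, not part of the repository: `build_run_command` is a hypothetical wrapper that uses only the --config, --mode and --checkpoint flags shown in this README and sets CUDA_VISIBLE_DEVICES through the environment.

```python
import os


def build_run_command(config, mode=None, checkpoint=None, gpu="0"):
    """Return (argv, env) for invoking run.py as in the commands above.

    Hypothetical wrapper; it only uses flags that appear in this README.
    """
    argv = ["python3", "run.py", "--config", config]
    if mode is not None:
        argv += ["--mode", mode]
    if checkpoint is not None:
        argv += ["--checkpoint", checkpoint]
    # Copy the current environment and select the GPU for the child process.
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu))
    return argv, env


if __name__ == "__main__":
    argv, env = build_run_command(
        "config/dataset_name.yaml",
        mode="prediction",
        checkpoint="path/to/checkpoint",
    )
    print(" ".join(argv))
```

The returned pair can be passed to `subprocess.run(argv, env=env, check=True)` from the repository root to start the run.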

Datasets

- Shapes. This dataset is included in the repository. Training takes about 1 hour.

- Actions. This dataset is also included in the repository. Training takes about 4 hours.

Reference

[1] Animating Arbitrary Objects via Deep Motion Transfer, by Aliaksandr Siarohin, Stéphane Lathuilière, Sergey Tulyakov, Elisa Ricci and Nicu Sebe.