Ziyang Chen, Daniel Geng, Andrew Owens

University of Michigan, Ann Arbor

arXiv 2024

[Paper] [Project Page]

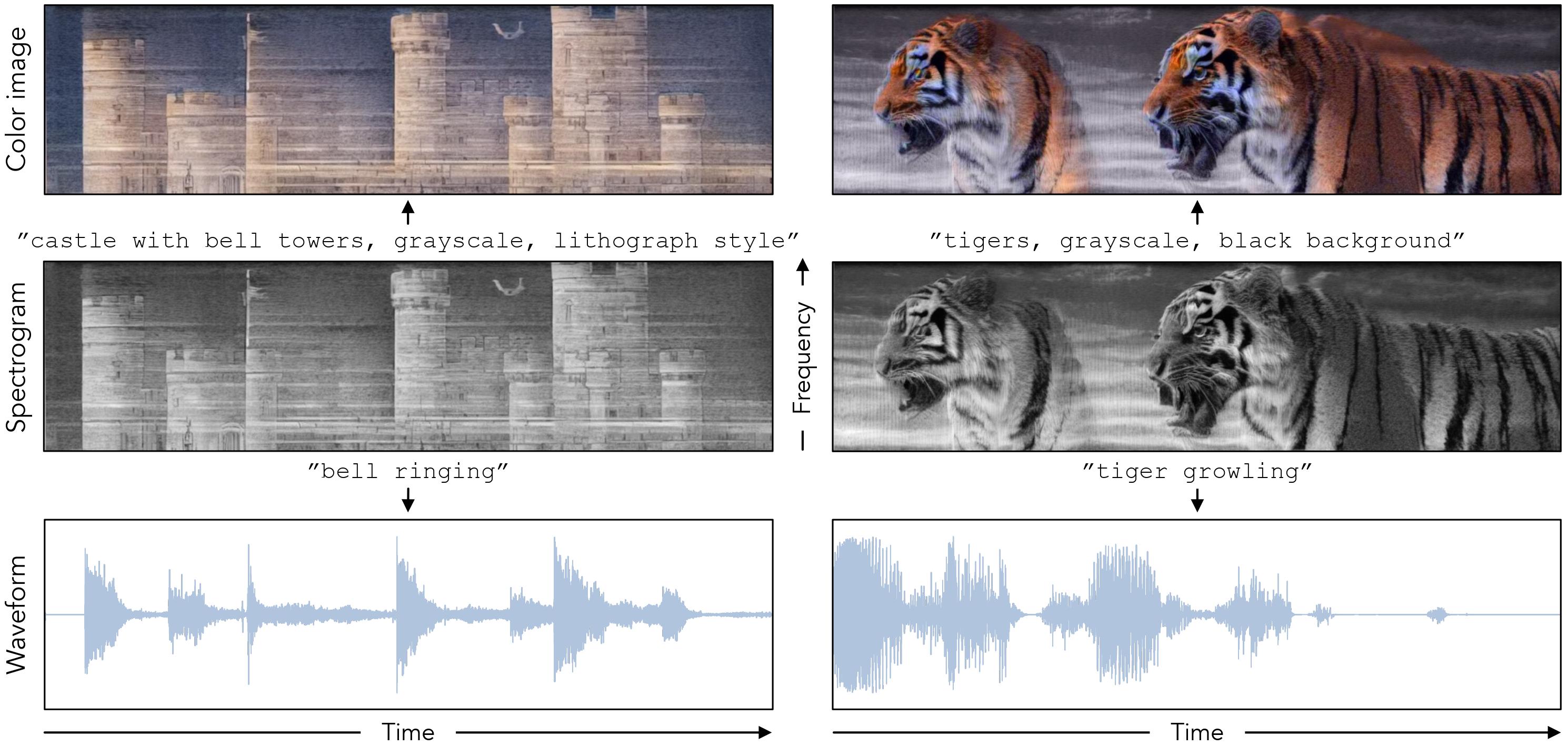

This repository contains the code to generate images that sound: special spectrograms that can be viewed as images and played as sound.

To set up the environment, run:
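To make the idea of a spectrogram that can be "played" concrete, here is a minimal sketch that turns a small grayscale image into audio by additive synthesis: each row drives one sinusoid and each column is one time frame. This is not the repository's audio pipeline. The function names, the linear frequency mapping, and all default parameters are assumptions chosen for illustration only.

```python
import math
import struct
import wave


def image_to_waveform(image, sample_rate=8000, frame_seconds=0.05,
                      f_min=200.0, f_max=3000.0):
    """Play a grayscale image as a spectrogram via additive synthesis.

    image: list of rows, each a list of floats in [0, 1]. The top row is
    treated as the highest frequency; each column is one time frame.
    Returns a list of float samples in [-1, 1].
    All parameters are illustrative assumptions, not values from the repo.
    """
    n_rows, n_cols = len(image), len(image[0])
    samples_per_frame = round(sample_rate * frame_seconds)
    # One sinusoid per row, linearly spaced from f_max (top) to f_min (bottom).
    if n_rows > 1:
        freqs = [f_max - (f_max - f_min) * r / (n_rows - 1)
                 for r in range(n_rows)]
    else:
        freqs = [f_min]
    samples = []
    for n in range(n_cols * samples_per_frame):
        col = n // samples_per_frame  # which image column is "playing" now
        t = n / sample_rate
        samples.append(sum(image[r][col] * math.sin(2 * math.pi * freqs[r] * t)
                           for r in range(n_rows)))
    # Normalize to [-1, 1]; an all-black image stays silent.
    peak = max(abs(s) for s in samples)
    return [s / peak for s in samples] if peak > 0 else samples


def write_wav(path, samples, sample_rate=8000):
    """Write mono 16-bit PCM audio using only the standard library."""
    with wave.open(path, "wb") as f:
        f.setnchannels(1)
        f.setsampwidth(2)
        f.setframerate(sample_rate)
        f.writeframes(b"".join(struct.pack("<h", int(32767 * s))
                               for s in samples))
```

For example, `write_wav("demo.wav", image_to_waveform(pixels))` would save the result, where `pixels` is a hypothetical nested list of brightness values. Bright pixels near the top of the image become loud high tones, and bright pixels near the bottom become loud low tones.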

conda env create -f environment.yml

conda activate soundify

Pro tip: we highly recommend using mamba instead of conda for much faster environment solving and installation.

DeepFloyd: our repo also uses DeepFloyd IF. To use DeepFloyd IF, you must accept its usage conditions. To do so:

- Sign up for or log in to a Hugging Face account.

- Accept the license on the model card of DeepFloyd/IF-I-XL-v1.0.

- Log in locally by running

python huggingface_login.py

and entering your Hugging Face Hub access token when prompted. It does not matter how you answer the "Add token as git credential? (Y/n)" question.

We use the pretrained image latent diffusion model Stable Diffusion v1.5 and the pretrained audio latent diffusion model Auffusion, which is finetuned from Stable Diffusion. We provide code (including visualization) and instructions for our approach (multimodal denoising) and two proposed baselines: Imprint and SDS. Our code is built on Hydra, so you can override any config parameter from the command line using Hydra's key=value syntax.
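As a rough illustration of what a Hydra-style `key=value` override does, the sketch below applies dotted overrides to a nested dictionary. It is a simplified stand-in written for this README, not Hydra's implementation (Hydra builds on OmegaConf and supports a richer grammar), and the config keys in the usage note are hypothetical rather than taken from this repository's config files.

```python
import copy


def parse_value(raw):
    """Convert an override string to bool, int, or float where possible."""
    if raw.lower() in ("true", "false"):
        return raw.lower() == "true"
    for cast in (int, float):
        try:
            return cast(raw)
        except ValueError:
            pass
    return raw  # fall back to a plain string


def apply_overrides(config, overrides):
    """Return a copy of a nested dict with 'a.b=value' overrides applied."""
    result = copy.deepcopy(config)
    for item in overrides:
        key, _, raw = item.partition("=")
        *parents, leaf = key.split(".")
        node = result
        for name in parents:
            # Create intermediate levels if the dotted path does not exist yet.
            node = node.setdefault(name, {})
        node[leaf] = parse_value(raw)
    return result
```

For instance, `apply_overrides({"seed": 0, "sampler": {"steps": 50}}, ["seed=7", "sampler.steps=100"])` returns a new dictionary with both values replaced and leaves the input untouched. The real parameter names are defined in the files under `configs/`.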

To create images that sound using our multimodal denoising method, run the code with config files under configs/main_denoise/experiment:

python src/main_denoise.py experiment=examples/bell

Note: our method does not have a high success rate, since it is zero-shot and depends heavily on the initial random noise. We recommend generating many samples (e.g., N=100) and hand-picking the high-quality results.
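One possible way to generate many candidates for hand-picking is to launch the script repeatedly with a different random seed each time. The sketch below only builds and prints the commands by default. It assumes the config exposes a seed override named `seed`, which is a hypothetical key; check the files under `configs/` for the actual parameter name, and for whether a single run can already produce several samples.

```python
import subprocess
import sys


def build_commands(num_runs, experiment="examples/bell",
                   script="src/main_denoise.py", seed_key="seed"):
    """Build one command per run, each with a different seed override.

    seed_key is a hypothetical config key used for illustration only.
    """
    return [
        [sys.executable, script, f"experiment={experiment}", f"{seed_key}={i}"]
        for i in range(num_runs)
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Calling `run_all(build_commands(100))` prints the 100 commands without running them; pass `dry_run=False` to execute them one after another from the repository root.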

To create images that sound using our proposed imprint baseline method, run the code with config files under configs/main_imprint/experiment:

python src/main_imprint.py experiment=examples/bell

To create images that sound using our proposed multimodal SDS baseline method, run the code with config files under configs/main_sds/experiment:

python src/main_sds.py experiment=examples/bell

Note: we find that Audio SDS doesn't work for many audio prompts. We hypothesize that this is because latent diffusion models don't work as well as pixel-based diffusion models for SDS.

We also provide colorization code under src/colorization, which is adapted from Factorized Diffusion. To directly generate colorized videos with audio, run:

python src/colorization/create_color_video.py \
  --sample_dir /path/to/generated/sample/dir \
  --prompt "a colorful photo of [object]" \
  --num_samples 16 --guidance_scale 10 \
  --num_inference_steps 30 --start_diffusion_step 7

Note: since our generated images are out of distribution, we recommend running multiple trials (num_samples=16) and selecting the best colorized results.

Our code is based on Lightning-Hydra-Template, diffusers, stable-dreamfusion, Diffusion-Illusions, Auffusion, and visual-anagrams. We thank the authors for open-sourcing their code.