pick_place does not work

caishanglei opened this issue · 3 comments

Hello, have you made any changes to the pickplace environment itself? I used DDPG+HER from stable-baselines3 and could not get it to train. The reward of the environment itself is only the distance between the target point and the object, which is very simple.

Hi @caishanglei,

Thanks for your comment.

> have you made any changes to the pickplace environment itself

No, I have not. I've just added this trivial line of code to avoid training the agent by random spawning of the desired_goal and achieved_goal.

> I used DDPG+HER from stable-baselines3 and could not get it to train

I have not used any stable-baselines3 code myself, so I have no idea why it does not perform as it is supposed to. Instead, I encourage you to take a look at this repo

> The reward of the environment itself is only the distance between the target point and the object, which is very simple

I think you're misinterpreting the reward in PickAndPlace; with a dense reward mechanism like the one you described, there would be no need to use HER. Take a look at these lines:
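As a rough illustration of the sparse-versus-dense distinction (a sketch only, not the linked lines and not the environment's actual source), the two reward styles can be written as below. The function names, the `reward_type` argument, and the 0.05 threshold are assumptions modeled on the goal-based Fetch environments.

```python
import math

# Assumed success tolerance in metres; hypothetical value for this sketch.
DISTANCE_THRESHOLD = 0.05


def goal_distance(achieved_goal, desired_goal):
    """Euclidean distance between two 3-D positions."""
    return math.dist(achieved_goal, desired_goal)


def compute_reward(achieved_goal, desired_goal, reward_type="sparse"):
    """Return a sparse (-1 or 0) or dense (negative distance) reward."""
    d = goal_distance(achieved_goal, desired_goal)
    if reward_type == "sparse":
        # 0 only when the object is within the threshold of the goal, else -1.
        return 0.0 if d <= DISTANCE_THRESHOLD else -1.0
    # Dense variant: the closer the object, the higher (less negative) the reward.
    return -d


if __name__ == "__main__":
    obj, goal = (1.0, 0.5, 0.4), (1.3, 0.7, 0.6)
    print(compute_reward(obj, goal, "sparse"))  # far from the goal
    print(compute_reward(obj, goal, "dense"))
    # HER-style relabelling: treat the achieved position as the goal.
    print(compute_reward(obj, obj, "sparse"))
```

With the sparse variant, almost every transition early in training returns -1, which is why relabelling goals with HER helps; with the dense variant the agent gets a learning signal from every step.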

Thank you very much for your reply. I trained again using your code. After how many epochs will the success rate increase significantly? The success rate is 0 for the first 20 epochs; does the gym version affect the training results (gym=0.18)?

> Thank you very much for your reply

My pleasure. 😊

> After how many epochs will the success rate increase significantly?

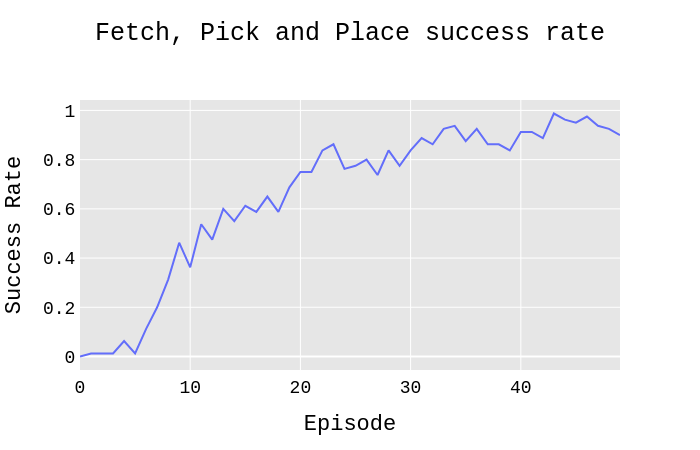

Based on the following plot, if you use 8 parallel agents to train, then it would take 8 epochs to observe a significant change in the success rate:

> The success rate is 0 for the first 20 epochs; does the gym version affect the training results (gym=0.18)?

It seems improbable, but if you suspect it, you can check the issues in the OpenAI Gym repository.