This is a PyTorch implementation of Hindsight Experience Replay (HER).
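For readers new to the method, the core idea of Hindsight Experience Replay is goal relabeling: transitions from an episode are replayed with goals that were actually reached later in that same episode, so even a failed episode yields useful reward signal. The block below is a minimal, standard-library sketch of the "future" relabeling strategy, not the code in this repository. The names `sparse_reward` and `relabel_future`, the transition keys, `replay_k`, and the 0.05 distance threshold are assumptions made for illustration.

```python
import math
import random


def sparse_reward(achieved_goal, goal, threshold=0.05):
    """Return 0.0 if achieved_goal is within `threshold` of goal, else -1.0."""
    distance = math.sqrt(sum((a - g) ** 2 for a, g in zip(achieved_goal, goal)))
    return 0.0 if distance <= threshold else -1.0


def relabel_future(episode, replay_k=4, rng=random):
    """Create replay_k hindsight copies of every transition in one episode.

    Each transition is a dict with the (hypothetical) keys "obs", "action",
    "achieved_goal" (the goal reached after the action) and "goal".
    """
    relabeled = []
    last = len(episode) - 1
    for t, transition in enumerate(episode):
        for _ in range(replay_k):
            # "future" strategy: pick a goal that was actually achieved at
            # step t or later in the same episode.
            future = rng.randint(t, last)
            new_goal = episode[future]["achieved_goal"]
            hindsight = dict(transition)  # copy, so the original is untouched
            hindsight["goal"] = new_goal
            hindsight["reward"] = sparse_reward(transition["achieved_goal"], new_goal)
            relabeled.append(hindsight)
    return relabeled
```

In a typical setup the relabeled transitions would be stored in the replay buffer next to the original ones; how this repository samples them may differ.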

- python=3.5.2

- openai-gym=0.12.5 (mujoco200 is supported, but it requires gym >= 0.12.5 because earlier versions have a bug.)

- mujoco-py=1.50.1.56 (Please use this version. If you use mujoco200, training may fail on FetchSlide-v1.)

- pytorch=1.0.0 (If you use pytorch-0.4.1, you may get data type errors. I will fix this later.)

- mpi4py
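Because several of the versions above are pinned for a reason, it can help to compare an environment against them before training. The following is a small sketch, assuming the packages are installed under the pip distribution names `gym`, `mujoco-py`, `torch`, and `mpi4py`. The helper names `parse_freeze` and `check_pins` are hypothetical, and the check works on the text printed by `pip freeze`.

```python
import re

# Versions pinned in the list above. The pip distribution names are an
# assumption; None means "any version is accepted".
PINNED = {
    "gym": "0.12.5",
    "mujoco-py": "1.50.1.56",
    "torch": "1.0.0",
    "mpi4py": None,
}


def parse_freeze(text):
    """Parse `pip freeze` output into {normalized_name: version}."""
    installed = {}
    for line in text.splitlines():
        match = re.match(r"^([A-Za-z0-9_.\-]+)==(\S+)$", line.strip())
        if match:
            name = match.group(1).lower().replace("_", "-")
            # Drop a local version suffix such as "+cpu" before comparing.
            installed[name] = match.group(2).split("+")[0]
    return installed


def check_pins(installed, pinned=PINNED):
    """Return a list of human-readable mismatches (empty if all pins match)."""
    problems = []
    for name, wanted in sorted(pinned.items()):
        found = installed.get(name)
        if found is None:
            problems.append("{} is not installed".format(name))
        elif wanted is not None and found != wanted:
            problems.append("{} is {} but {} is pinned".format(name, found, wanted))
    return problems
```

One way to use it is to save the output of `python -m pip freeze` to a file, read that file, and print whatever `check_pins(parse_freeze(text))` returns.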

- support GPU acceleration (GPU support has been added, but it is still not recommended unless you have a powerful machine).

- add multi-env per MPI.

- add the plot and demo of the FetchSlide-v1.

If you want to use a GPU, add the flag `--cuda` (not recommended; training on the CPU is the better choice).

- train the FetchReach-v1:

`mpirun -np 1 python -u train.py --env-name='FetchReach-v1' --n-cycles=10 2>&1 | tee reach.log`

- train the FetchPush-v1:

`mpirun -np 8 python -u train.py --env-name='FetchPush-v1' 2>&1 | tee push.log`

- train the FetchPickAndPlace-v1:

`mpirun -np 16 python -u train.py --env-name='FetchPickAndPlace-v1' 2>&1 | tee pick.log`

- train the FetchSlide-v1:

`mpirun -np 8 python -u train.py --env-name='FetchSlide-v1' --n-epochs=200 2>&1 | tee slide.log`

- play the demo:

`python demo.py --env-name=<environment name>`

Please download the saved models from Google Drive, then put the `saved_models` folder under the current folder.
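The training commands above differ only in the number of MPI processes, a few extra flags, and the log file name. As a convenience, they can be generated from a small table. This is a sketch: the helper name `train_command` and the `RUNS` table are hypothetical, and only the flags that appear in this README are used.

```python
import shlex

# (MPI process count, extra train.py flags, log file), copied from the
# training commands listed in this README.
RUNS = {
    "FetchReach-v1": (1, ["--n-cycles=10"], "reach.log"),
    "FetchPush-v1": (8, [], "push.log"),
    "FetchPickAndPlace-v1": (16, [], "pick.log"),
    "FetchSlide-v1": (8, ["--n-epochs=200"], "slide.log"),
}


def train_command(env_name, cuda=False):
    """Build the shell command line for training on one environment."""
    n_procs, extra_flags, log_file = RUNS[env_name]
    args = ["mpirun", "-np", str(n_procs), "python", "-u", "train.py",
            "--env-name={}".format(env_name)] + list(extra_flags)
    if cuda:
        args.append("--cuda")  # GPU training, which this README advises against
    quoted = " ".join(shlex.quote(arg) for arg in args)
    # Merge stderr into stdout and keep a copy of the output in the log file.
    return quoted + " 2>&1 | tee " + shlex.quote(log_file)


if __name__ == "__main__":
    for name in RUNS:
        print(train_command(name))
```

The function only builds strings, so the printed commands can be reviewed before being pasted into a shell.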

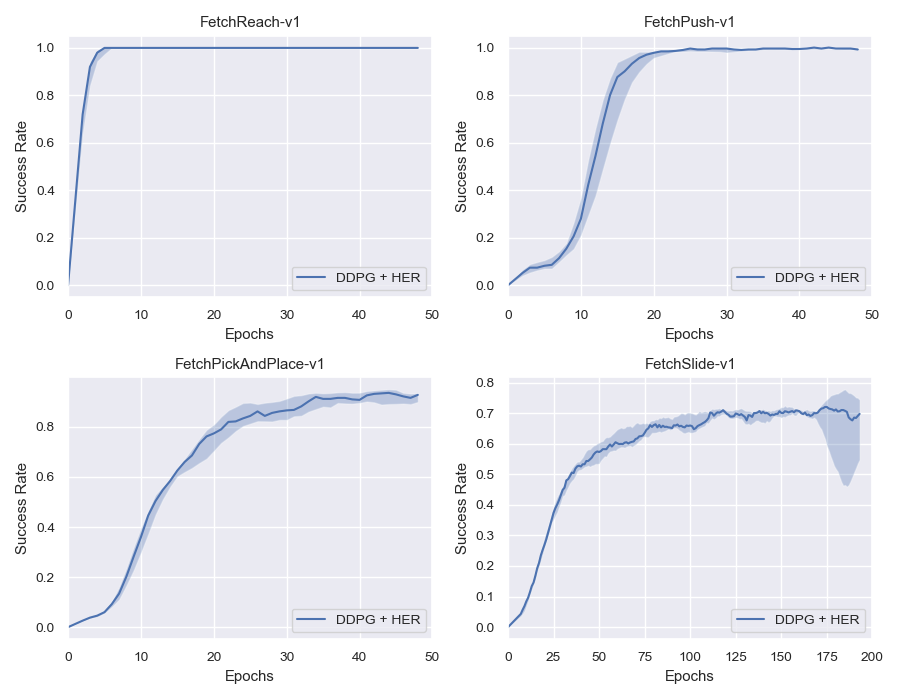

The curves were plotted using 5 different seeds; the solid line is the median value.
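A median curve of this kind can be computed by taking, for every epoch, the median over the per-seed values. The sketch below assumes each seed produced one list of per-epoch values (for example success rates). The function name `median_curve` is hypothetical, and the numbers in the usage example are made up for illustration; they are not results from this repository.

```python
import statistics


def median_curve(runs):
    """Return the per-epoch median over several runs.

    `runs` is a list with one entry per seed; each entry is a list holding
    one value per epoch. Runs are truncated to the shortest one.
    """
    n_epochs = min(len(run) for run in runs)
    return [statistics.median(run[epoch] for run in runs)
            for epoch in range(n_epochs)]


if __name__ == "__main__":
    # Dummy values for 5 seeds and 3 epochs (illustrative only).
    dummy_runs = [
        [0.0, 0.2, 0.9],
        [0.1, 0.4, 1.0],
        [0.0, 0.3, 0.8],
        [0.2, 0.5, 1.0],
        [0.1, 0.1, 0.7],
    ]
    print(median_curve(dummy_runs))  # -> [0.1, 0.3, 0.9]
```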

Tip: while watching the demo, you can press TAB to switch the camera in the MuJoCo viewer.

| FetchPush-v1 | FetchPickAndPlace-v1 | FetchSlide-v1 |
|---|---|---|
| | | |