PyTorch implementation of the paper "Neural Residual Radiance Fields for Streamably Free-Viewpoint Videos", CVPR 2023.

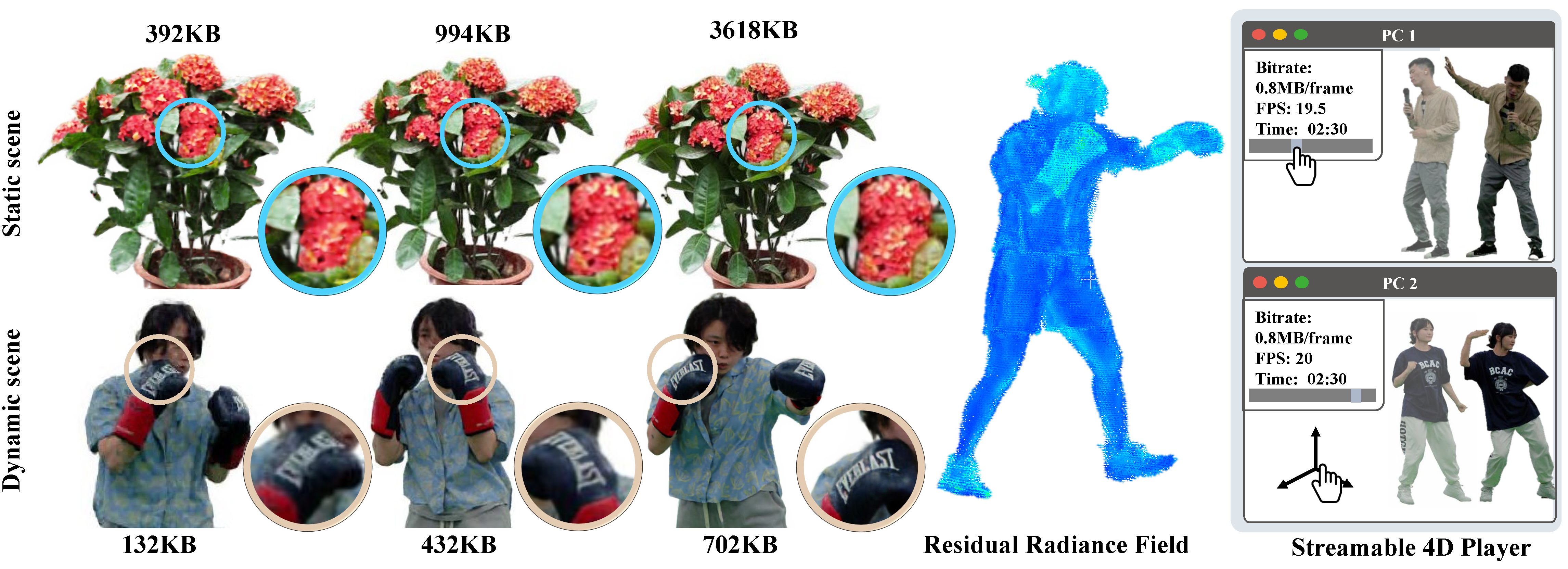

Neural Residual Radiance Fields for Streamably Free-Viewpoint Videos

Liao Wang, Qiang Hu, Qihan He, Ziyu Wang, Jingyi Yu, Tinne Tuytelaars, Lan Xu, Minye Wu

CVPR 2023

git clone git@github.com:aoliao12138/ReRF.git

cd ReRF

conda env create -f environment.yml

conda activate rerf

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu116

pip install torch-scatter==2.0.9

Tested on Ubuntu with RTX 3090.

Follow ReRF_Dataset to download our dataset and model, which are available for non-commercial use only. To use our data for training and rendering, first unzip it into your data folder, then run, for example:

$ python data_util.py --dataset_dir ./data/kpop

You can use, redistribute, and adapt the material for non-commercial purposes, as long as you give appropriate credit by citing our paper and indicating any changes that you've made.

To train the kpop scene, run:

$ LD_LIBRARY_PATH=./ac_dc:$LD_LIBRARY_PATH PYTHONPATH=./ac_dc/:$PYTHONPATH python run.py --config configs/rerf/kpop.py --render_test

To compress each trained frame, run:

$ LD_LIBRARY_PATH=./ac_dc:$LD_LIBRARY_PATH PYTHONPATH=./ac_dc/:$PYTHONPATH python codec/compress.py --model_path ./output/kpop --frame_num 4000 --expr_name rerf

Use --pca to enable PCA and --group_size to enable GOF compression.

To render a video in which both the camera and the frames change, run:

$ LD_LIBRARY_PATH=./ac_dc:$LD_LIBRARY_PATH PYTHONPATH=./ac_dc/:$PYTHONPATH python rerf_render.py --config ./configs/rerf/kpop.py --compression_path ./output/kpop/rerf --render_360 4000

If you compressed with --pca or --group_size, you must pass the same options when rendering.
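Because compression and rendering have to agree on --pca and --group_size, one way to avoid a mismatch is to build both command lines from a single set of options. The following is a minimal sketch of that idea, not part of this repository: the helper names (`codec_env`, `codec_flags`, `compress_cmd`, `render_cmd`) are hypothetical, and only the scripts and flags shown in the commands above are used.

```python
import os

AC_DC_DIR = "./ac_dc"  # directory prepended to the search paths in the commands above


def codec_env(base_env=None):
    """Return a copy of the environment with ./ac_dc prepended to both search paths."""
    env = dict(os.environ if base_env is None else base_env)
    for var in ("LD_LIBRARY_PATH", "PYTHONPATH"):
        old = env.get(var, "")
        env[var] = AC_DC_DIR + (os.pathsep + old if old else "")
    return env


def codec_flags(pca=False, group_size=None):
    """Translate the shared options into the flags named in this README."""
    flags = []
    if pca:
        flags.append("--pca")
    if group_size is not None:
        flags += ["--group_size", str(group_size)]
    return flags


def compress_cmd(model_path, frame_num, expr_name, **codec):
    """Argument list for codec/compress.py (hypothetical helper)."""
    return ["python", "codec/compress.py",
            "--model_path", model_path,
            "--frame_num", str(frame_num),
            "--expr_name", expr_name] + codec_flags(**codec)


def render_cmd(config, compression_path, render_360, **codec):
    """Argument list for rerf_render.py (hypothetical helper)."""
    return ["python", "rerf_render.py",
            "--config", config,
            "--compression_path", compression_path,
            "--render_360", str(render_360)] + codec_flags(**codec)


# One dictionary drives both steps, so the flags cannot drift apart.
codec = dict(pca=True, group_size=20)
print(" ".join(compress_cmd("./output/kpop", 4000, "rerf", **codec)))
print(" ".join(render_cmd("./configs/rerf/kpop.py", "./output/kpop/rerf", 4000, **codec)))
```

The sketch only prints the commands. To execute one, the argument list and `codec_env()` could be passed to `subprocess.run(cmd, env=codec_env(), check=True)` from the repository root.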

Follow ReRF_Dataset to download our compressed files for the kpop scene, which contains 4000 frames. Unzip the archive and render it with:

$ LD_LIBRARY_PATH=./ac_dc:$LD_LIBRARY_PATH PYTHONPATH=./ac_dc/:$PYTHONPATH python rerf_render.py --config ./configs/rerf/kpop.py --compression_path <the folder path you unzip> --render_360 4000 --pca --group_size 20

As in DVGO, check the comments in configs/default.py for the configurable settings.

We use mmcv's config system.

To create a new config, please inherit configs/default.py first and then update the fields you want.

As in DVGO, you can change settings such as N_iters, N_rand, num_voxels, rgbnet_depth, and rgbnet_width to trade off speed against quality.
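In an mmcv config file, inheritance is declared with the `_base_` variable, and any dictionary field you redefine is merged into the inherited one. The sketch below imitates that merge in plain Python to show the effect of overriding only a few fields. It is an illustration under assumptions: `merge_config` is a hypothetical helper rather than mmcv's code, the section names `fine_train` and `fine_model_and_render` are assumed from DVGO's config layout, and all values are placeholders, not this repository's defaults.

```python
import copy


def merge_config(base, overrides):
    """Recursively merge `overrides` into a deep copy of `base`."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Nested sections are merged field by field.
            merged[key] = merge_config(merged[key], value)
        else:
            # Scalars and new keys simply replace or extend the base.
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical stand-in for configs/default.py (placeholder values).
default_cfg = dict(
    expname=None,
    fine_train=dict(N_iters=20000, N_rand=8192),
    fine_model_and_render=dict(num_voxels=160**3, rgbnet_depth=3, rgbnet_width=128),
)

# A faster, lower-quality variant: fewer iterations, coarser grid, narrower MLP.
fast_cfg = merge_config(default_cfg, dict(
    expname="my_scene_fast",
    fine_train=dict(N_iters=5000),
    fine_model_and_render=dict(num_voxels=96**3, rgbnet_width=64),
))

print(fast_cfg["fine_train"])
print(fast_cfg["fine_model_and_render"])
```

Fields that the override does not mention, such as N_rand and rgbnet_depth here, keep their inherited values, which is why a new config only needs to list what differs from configs/default.py.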

The scale of the bbox will greatly affect the final result, so it is recommended to adjust it to fit your camera coordinate system. You can follow DVGO to adjust it.
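To make concrete what adjusting the bbox scale means geometrically, here is a small sketch that grows or shrinks an axis-aligned box about its center. It is only an illustration: `scale_bbox` is a hypothetical helper, the example coordinates are made up, and how the bbox is actually specified in this code base should be taken from the config comments and from DVGO.

```python
def scale_bbox(xyz_min, xyz_max, scale):
    """Scale an axis-aligned bounding box about its center by `scale`."""
    center = [(lo + hi) / 2.0 for lo, hi in zip(xyz_min, xyz_max)]
    half = [(hi - lo) / 2.0 * scale for lo, hi in zip(xyz_min, xyz_max)]
    new_min = [c - h for c, h in zip(center, half)]
    new_max = [c + h for c, h in zip(center, half)]
    return new_min, new_max


# Made-up box in camera-coordinate units; shrink it to half its extent.
tight_min, tight_max = scale_bbox([-1.0, -1.0, 0.0], [1.0, 1.0, 2.0], 0.5)
print(tight_min, tight_max)
```

A box that is too large spends voxels on empty space, while one that is too small crops the subject, so the scale should be chosen relative to the units of your camera poses.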

The code base originated from the DVGO implementation. We borrowed some code from Multi-view Neural Human Rendering (NHR) and torch-dct.

@InProceedings{Wang_2023_CVPR,

author = {Wang, Liao and Hu, Qiang and He, Qihan and Wang, Ziyu and Yu, Jingyi and Tuytelaars, Tinne and Xu, Lan and Wu, Minye},

title = {Neural Residual Radiance Fields for Streamably Free-Viewpoint Videos},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2023},

pages = {76-87}

}