In this repository, we provide the code for ensembling the output of object detection models and for applying test-time augmentation to object detection. This library has been designed to be applicable to any object detection model, independently of the underlying algorithm and the framework employed to implement it. A draft describing the techniques implemented in this repository is available in the following article.

- Installation

- Ensemble of models

- Test-time augmentation for object detection

- Adding new models

- Experiments

- Citation

- Acknowledgements

This library requires Python 3.6 and the packages listed in requirements.txt.

Installation:

- Clone this repository

git clone https://github.com/ancasag/ensembleObjectDetection- Install dependencies

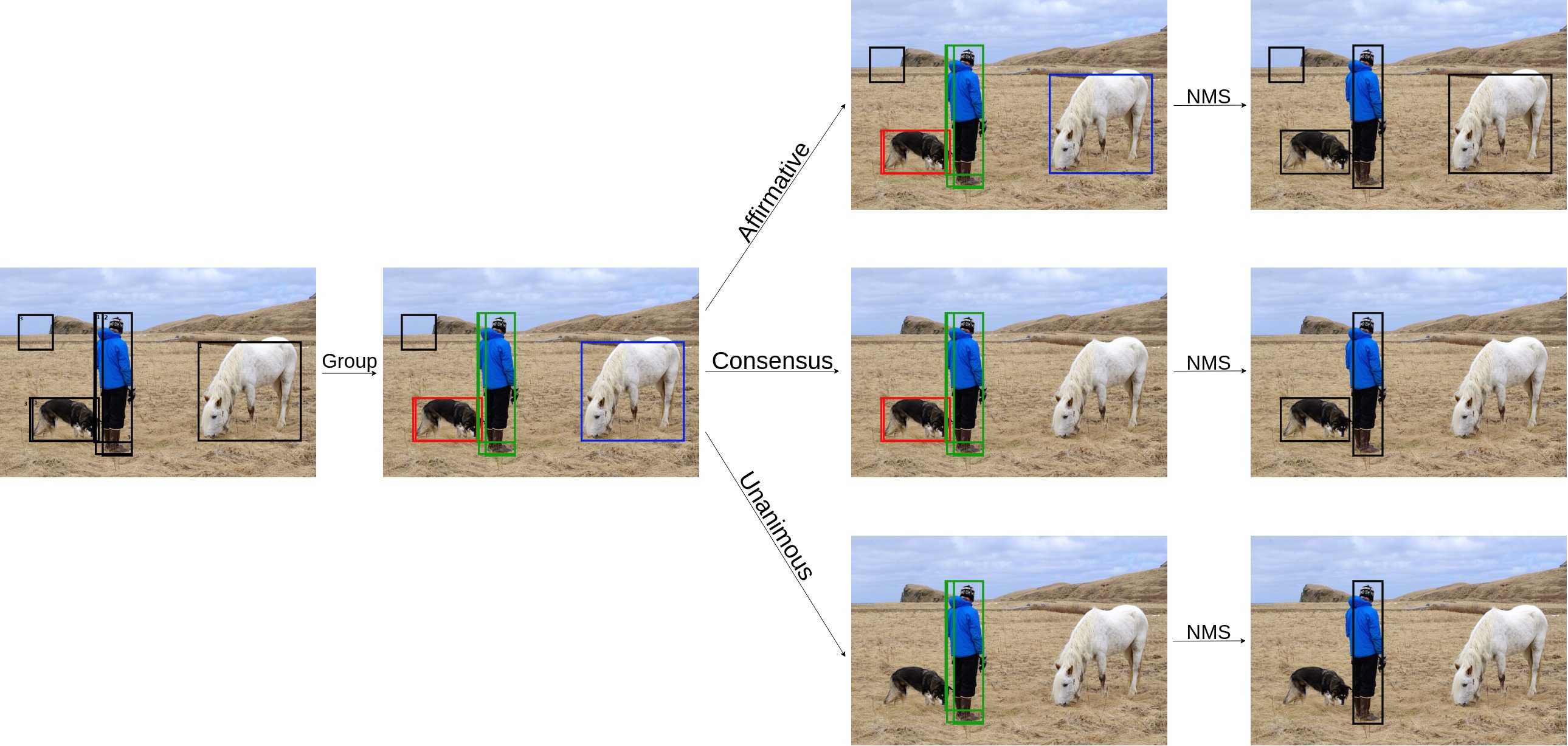

pip3 install -r requirements.txtIn the following image, we show an example of the workflow of our ensemble algorithm. Three methods have been applied to detect the objects in the original image: the first method has detected the person and the horse; the second, the person and the dog; and, the third, the person, the dog, and an undefined region. The first step of our ensemble method groups the overlapping regions. Subsequently, a voting strategy is applied to discard some of those groups. The final predictions are obtained using the NMs algorithm.

Three different voting strategies can be applied with our ensemble algorithm:

- Affirmative. This means that whenever one of the methods that produce the initial predictions says that a region contains an object, such a detection is considered as valid.

- Consensus. This means that the majority of the initial methods must agree to consider that a region contains an object. The consensus strategy is analogous to the majority voting strategy commonly applied in ensemble methods for image classification.

- Unanimous. This means that all the methods must agree to consider that a region contains an object.
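The grouping and voting steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code of this library: the `Detection` record, the function names, the IoU threshold of 0.5, and the reading of "majority" as strictly more than half of the models are all hypothetical. Keeping the highest-scoring box of each surviving group is a simplified stand-in for the final NMS step.

```python
from collections import namedtuple

# Hypothetical detection record: box corners, class label, confidence, and
# the index of the model that produced the detection.
Detection = namedtuple("Detection", "xmin ymin xmax ymax label score model")


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    iw = min(a.xmax, b.xmax) - max(a.xmin, b.xmin)
    ih = min(a.ymax, b.ymax) - max(a.ymin, b.ymin)
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    area_a = (a.xmax - a.xmin) * (a.ymax - a.ymin)
    area_b = (b.xmax - b.xmin) * (b.ymax - b.ymin)
    return inter / (area_a + area_b - inter)


def group_detections(detections, iou_threshold=0.5):
    """Greedily group same-label detections that overlap a group's first box."""
    groups = []
    for det in sorted(detections, key=lambda d: d.score, reverse=True):
        for group in groups:
            seed = group[0]
            if det.label == seed.label and iou(det, seed) > iou_threshold:
                group.append(det)
                break
        else:
            # No existing group matched, so this detection starts a new one.
            groups.append([det])
    return groups


def ensemble(detections, num_models, strategy="consensus", iou_threshold=0.5):
    """Group the detections, vote, and keep the best box of each kept group."""
    required = {
        "affirmative": 1,                  # any single model is enough
        "consensus": num_models // 2 + 1,  # assumed: strictly more than half
        "unanimous": num_models,           # every model must agree
    }[strategy]
    kept = []
    for group in group_detections(detections, iou_threshold):
        voters = {d.model for d in group}
        if len(voters) >= required:
            kept.append(group[0])  # highest score: groups are built in score order
    return kept


# Toy data mirroring the example in the text: three models, one image.
detections = [
    Detection(10, 10, 50, 100, "person", 0.90, 0),
    Detection(12, 11, 52, 98, "person", 0.80, 1),
    Detection(9, 12, 49, 102, "person", 0.85, 2),
    Detection(60, 40, 140, 100, "horse", 0.70, 0),
    Detection(150, 70, 190, 100, "dog", 0.60, 1),
    Detection(152, 72, 191, 101, "dog", 0.65, 2),
    Detection(200, 10, 220, 30, "unknown", 0.30, 2),
]

for strategy in ("affirmative", "consensus", "unanimous"):
    print(strategy, [d.label for d in ensemble(detections, 3, strategy)])
```

With this toy data, the affirmative strategy keeps all four groups, the consensus strategy keeps the person and the dog, and the unanimous strategy keeps only the person.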

In order to run the ensemble algorithm, you can edit the file mainModel.py from the TestTimeAugmentation folder to configure the models to use and then invoke the following command where pathOfDataset is the path where the images are saved, and option is the voting strategy (affirmative, consensus or unanimous).

    python mainModel.py -d pathOfDataset -o option

A simpler way to use this method is provided in the following notebook.

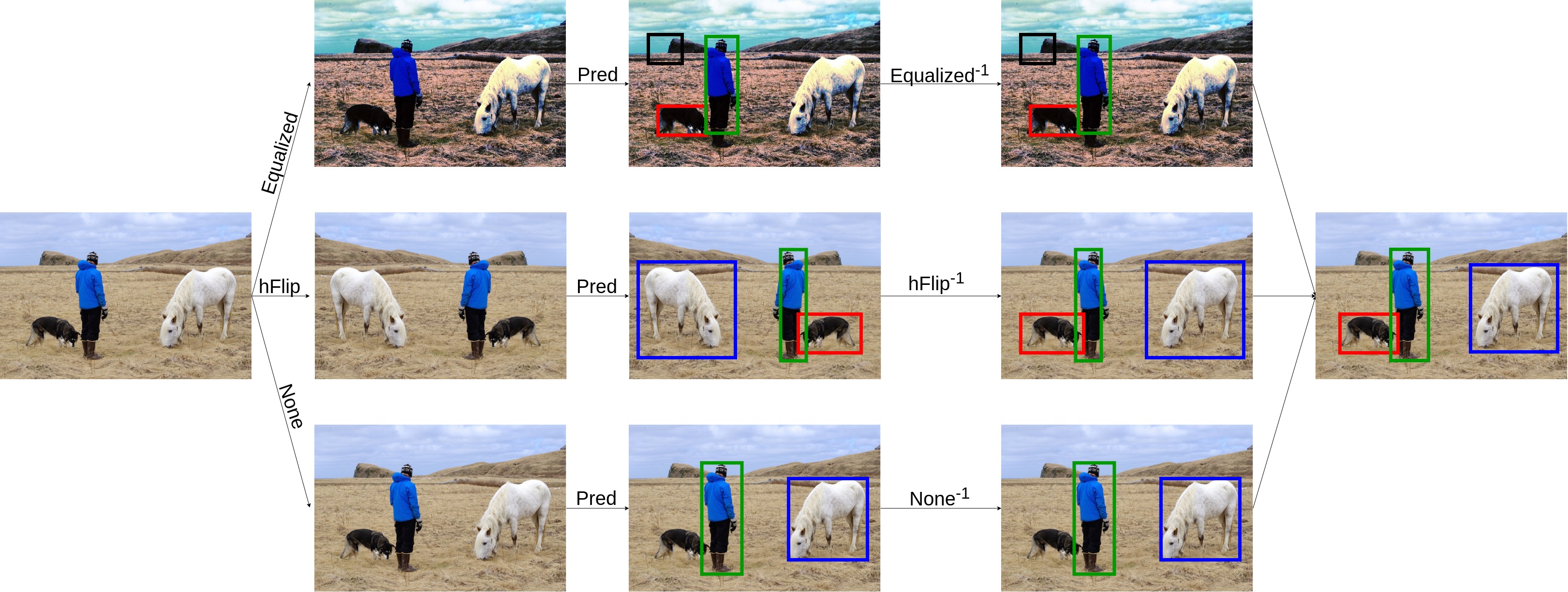

In the following image, we show an example of the workflow of test-time augmentation (from now on, TTA) for object detectors. First, we apply three transformations to the original image: a histogram equalisation, a horizontal flip, and a none transformation (that does not modify the image). Subsequently, we detect the objects in the new images, and apply the corresponding detection transformation to locate the objects in the correct position for the original image. Finally, the detections are ensembled using the consensus strategy.
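The step that maps detections back to the original image can be sketched for the horizontal flip as follows. This is a hedged illustration, not the code of this library: the `TECHNIQUES` registry, the function names, and the toy detector are hypothetical, images are plain lists of pixel rows, and boxes are `(xmin, ymin, xmax, ymax)` tuples in which pixel column `j` spans the interval `[j, j + 1]`. Transformations that only change colours, such as histogram equalisation, would use the identity mapping for the boxes.

```python
def hflip_image(image):
    """Horizontally flip an image stored as a list of pixel rows."""
    return [row[::-1] for row in image]


def hflip_box(box, image_width):
    """Map a box between an image and its horizontal flip (self-inverse)."""
    xmin, ymin, xmax, ymax = box
    return (image_width - xmax, ymin, image_width - xmin, ymax)


def identity_box(box, image_width):
    """Box mapping for transformations that do not move pixels."""
    return box


# Hypothetical registry: technique name -> (image transform, box inverse).
TECHNIQUES = {
    "none": (lambda image: image, identity_box),
    "hflip": (hflip_image, hflip_box),
}


def tta_detections(image, detector, technique_names):
    """Detect on each transformed image; return boxes in original coordinates."""
    width = len(image[0])
    results = []
    for name in technique_names:
        transform, inverse = TECHNIQUES[name]
        for box in detector(transform(image)):
            results.append((name, inverse(box, width)))
    return results


def toy_detector(image):
    """Stand-in detector: the bounding box of all non-zero pixels."""
    cols = [x for row in image for x, value in enumerate(row) if value]
    rows = [y for y, row in enumerate(image) if any(row)]
    if not cols:
        return []
    return [(min(cols), min(rows), max(cols) + 1, max(rows) + 1)]


image = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

print(tta_detections(image, toy_detector, ["none", "hflip"]))
# [('none', (1, 1, 3, 3)), ('hflip', (1, 1, 3, 3))]
```

Both transformations yield the same box in original coordinates, so the two detections would fall into the same group when the voting strategy is applied.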

As indicated previously, three different voting strategies can be applied for TTA:

- Affirmative. This means that whenever one of the methods that produce the initial predictions says that a region contains an object, such a detection is considered as valid.

- Consensus. This means that the majority of the initial methods must agree to consider that a region contains an object. The consensus strategy is analogous to the majority voting strategy commonly applied in ensemble methods for image classification.

- Unanimous. This means that all the methods must agree to consider that a region contains an object.

These are all the techniques that we have defined for use in the TTA process. The first column gives the name assigned to the technique, and the second column describes it.

| Technique | Description |
|---|---|
| "avgBlur" | Average blurring |
| "bilaBlur" | Bilateral blurring |
| "blur" | Blurring |
| "chanHsv" | Change to HSV colour space |
| "chanLab" | Change to Lab colour space |
| "crop" | Crop |
| "dropOut" | Dropout |
| "elastic" | Elastic deformation |
| "histo" | Equalize histogram |
| "vflip" | Vertical flip |
| "hflip" | Horizontal flip |
| "hvflip" | Vertical and horizontal flip |
| "gamma" | Gamma correction |
| "blurGau" | Gaussian blurring |
| "avgNoise" | Add Gaussian noise |
| "invert" | Invert |
| "medianblur" | Median blurring |
| "none" | None |
| "raiseBlue" | Raise blue channel |
| "raiseGreen" | Raise green channel |
| "raiseHue" | Raise hue |
| "raiseRed" | Raise red channel |
| "raiseSatu" | Raise saturation |
| "raiseValue" | Raise value |
| "resize" | Resize |
| "rotation10" | Rotate 10° |
| "rotation90" | Rotate 90° |
| "rotation180" | Rotate 180° |
| "rotation270" | Rotate 270° |
| "saltPeper" | Add salt and pepper noise |
| "sharpen" | Sharpen |
| "shiftChannel" | Shift channel |
| "shearing" | Shearing |
| "translation" | Translation |

In order to run TTA, you can edit the mainTTA.py file from the TestTimeAugmentation folder to configure the model to use and the transformation techniques. Then, you can invoke the following command, where pathOfDataset is the path where the images are saved, and option is the voting strategy (affirmative, consensus or unanimous).

    python mainTTA.py -d pathOfDataset -o option

A simpler way to use this method is provided in the following notebook.

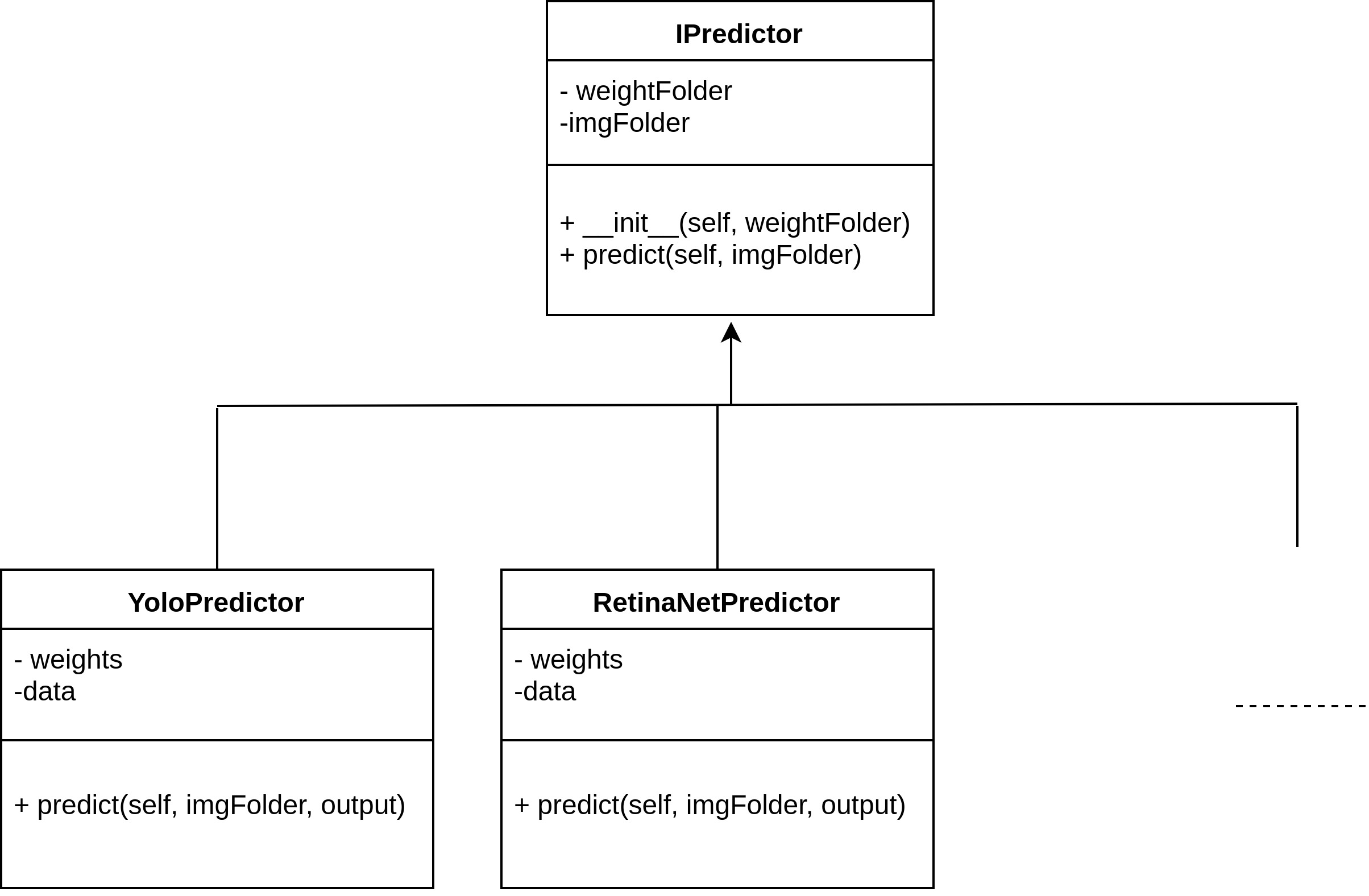

This open source library can be extended to work with any object detection model regardless of the algorithm and framework used to build it. To do this, it is necessary to create a new class that extends the IPredictor class of the following diagram:

Several examples of classes extending the IPredictor class can be seen in the testTimeAugmentation.py file. Namely, it is necessary to define a class with a predict method that takes as input the path to a folder containing the images, and stores the predictions in the Pascal VOC format in the same folder. Once this new class has been created, it can be applied both for the ensemble of models and for TTA.
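As a rough illustration of the contract described above, the following sketch defines a predictor whose predict method walks a folder of images and writes one Pascal VOC XML file per image into the same folder. It rests on several assumptions: the `IPredictor` class shown here is only a stand-in (the real base class in testTimeAugmentation.py may have a different constructor and signature), `ConstantBoxPred` and its `detect` method are hypothetical, and the `confidence` element is not part of the original VOC schema.

```python
import os
import xml.etree.ElementTree as ET


class IPredictor:
    """Stand-in for the library's base class; the real signature may differ."""

    def predict(self, image_folder):
        raise NotImplementedError


class ConstantBoxPred(IPredictor):
    """Toy predictor that reports one fixed box for every image in a folder."""

    def __init__(self, label, box, score=1.0):
        self.label = label
        self.box = box  # (xmin, ymin, xmax, ymax)
        self.score = score

    def detect(self, image_path):
        # A real predictor would load the image and run its model here.
        return [(self.label, self.score, self.box)]

    def predict(self, image_folder):
        for name in sorted(os.listdir(image_folder)):
            stem, ext = os.path.splitext(name)
            if ext.lower() not in (".jpg", ".jpeg", ".png"):
                continue  # skip annotation files and other non-image files
            root = ET.Element("annotation")
            ET.SubElement(root, "folder").text = os.path.basename(image_folder)
            ET.SubElement(root, "filename").text = name
            detections = self.detect(os.path.join(image_folder, name))
            for label, score, box in detections:
                obj = ET.SubElement(root, "object")
                ET.SubElement(obj, "name").text = label
                # Assumption of this sketch: store the score next to the box.
                ET.SubElement(obj, "confidence").text = str(score)
                bndbox = ET.SubElement(obj, "bndbox")
                for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), box):
                    ET.SubElement(bndbox, tag).text = str(value)
            # The annotation is stored next to the image, as the text requires.
            ET.ElementTree(root).write(os.path.join(image_folder, stem + ".xml"))
```

Calling `ConstantBoxPred("stoma", (1, 2, 3, 4)).predict("images")` on a hypothetical `images` folder would leave an `.xml` file beside each image. A real predictor would replace `detect` with a call to its own model.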

Currently, the library can work with models constructed with the following libraries:

- YOLO models constructed with the Darknet library. To use models constructed with this library, create an object of the DarknetYoloPred class. The constructor of this class takes as input the path to the weights of the model, the path to the file with the names of the classes for the model, and the configuration file.

- Faster-RCNN models constructed with the MXNet library. To use models constructed with this library, create an object of the MXnetFasterRCNNPred class. The constructor of this class takes as input the path to the weights of the model and the path to the file with the names of the classes for the model.

- SSD models constructed with the MXNet library. To use models constructed with this library, create an object of the MXnetSSD512Pred class. The constructor of this class takes as input the path to the weights of the model and the path to the file with the names of the classes for the model.

- YOLO models constructed with the MXNet library. To use models constructed with this library, create an object of the MXnetYoloPred class. The constructor of this class takes as input the path to the weights of the model and the path to the file with the names of the classes for the model.

- RetinaNet models with the ResNet 50 backbone constructed with the Keras RetinaNet library. To use models constructed with this library, create an object of the RetinaNetResnet50Pred class. The constructor of this class takes as input the path to the weights of the model and the path to the file with the names of the classes for the model.

- MaskRCNN models with the ResNet 101 backbone constructed with the Keras MaskRCNN library. To use models constructed with this library, create an object of the MaskRCNNPred class. The constructor of this class takes as input the path to the weights of the model and the path to the file with the names of the classes for the model.

You can see several examples of these models in the notebook for ensembling models.

Several experiments were conducted to test this library and the results are presented in the article. Here, we provide the datasets and models used for those experiments.

For the experiments of Section 4.1 of the paper, we employed the test set of the PASCAL Visual Object Classes Challenge and the pre-trained models provided by the MXNet library.

For the experiments of Section 4.2 of the paper, we employed two stomata datasets: a fully annotated dataset and a partially annotated dataset.

Using these datasets, we trained YOLO models using the Darknet framework:

- Model trained using the fully annotated dataset.

- Model trained with the partially annotated dataset using data distillation, all the transformations, and affirmative strategy.

- Model trained with the partially annotated dataset using data distillation, all the transformations, and consensus strategy.

- Model trained with the partially annotated dataset using data distillation, all the transformations, and unanimous strategy.

- Model trained with the partially annotated dataset using data distillation, colour transformations, and affirmative strategy.

- Model trained with the partially annotated dataset using data distillation, colour transformations, and consensus strategy.

- Model trained with the partially annotated dataset using data distillation, colour transformations, and unanimous strategy.

- Model trained with the partially annotated dataset using data distillation, flip transformations, and affirmative strategy.

- Model trained with the partially annotated dataset using data distillation, flip transformations, and consensus strategy.

- Model trained with the partially annotated dataset using data distillation, flip transformations, and unanimous strategy.

For the experiments of Section 4.3 of the paper, we employed two table datasets: the ICDAR 2013 dataset and the Word part of the TableBank dataset.

We have trained several models on the ICDAR 2013 dataset:

- Mask RCNN model trained using the Keras MaskRCNN library.

- SSD model trained using the MXNet framework.

- YOLO model trained using the Darknet framework.

We have also trained several models for the ICDAR 2013 dataset with model distillation, using the images of the Word part of the TableBank dataset:

- Mask RCNN model using the affirmative strategy.

- Mask RCNN model using the consensus strategy.

- Mask RCNN model using the unanimous strategy.

- SSD model using the affirmative strategy.

- SSD model using the consensus strategy.

- SSD model using the unanimous strategy.

- YOLO model using the affirmative strategy.

- YOLO model using the consensus strategy.

- YOLO model using the unanimous strategy.

Use this BibTeX entry to cite this work:

    @misc{CasadoGarcia19,
      title={Ensemble Methods for Object Detection},
      author={A. Casado-García and J. Heras},
      year={2019},
      note={\url{https://github.com/ancasag/ensembleObjectDetection}},
    }

This work was partially supported by Ministerio de Economía y Competitividad [MTM2017-88804-P], Ministerio de Ciencia, Innovación y Universidades [RTC-2017-6640-7], Agencia de Desarrollo Económico de La Rioja [2017-I-IDD-00018], and the computing facilities of Extremadura Research Centre for Advanced Technologies (CETA-CIEMAT), funded by the European Regional Development Fund (ERDF). CETA-CIEMAT belongs to CIEMAT and the Government of Spain. We also thank Álvaro San-Sáez for providing us with the stomata datasets.