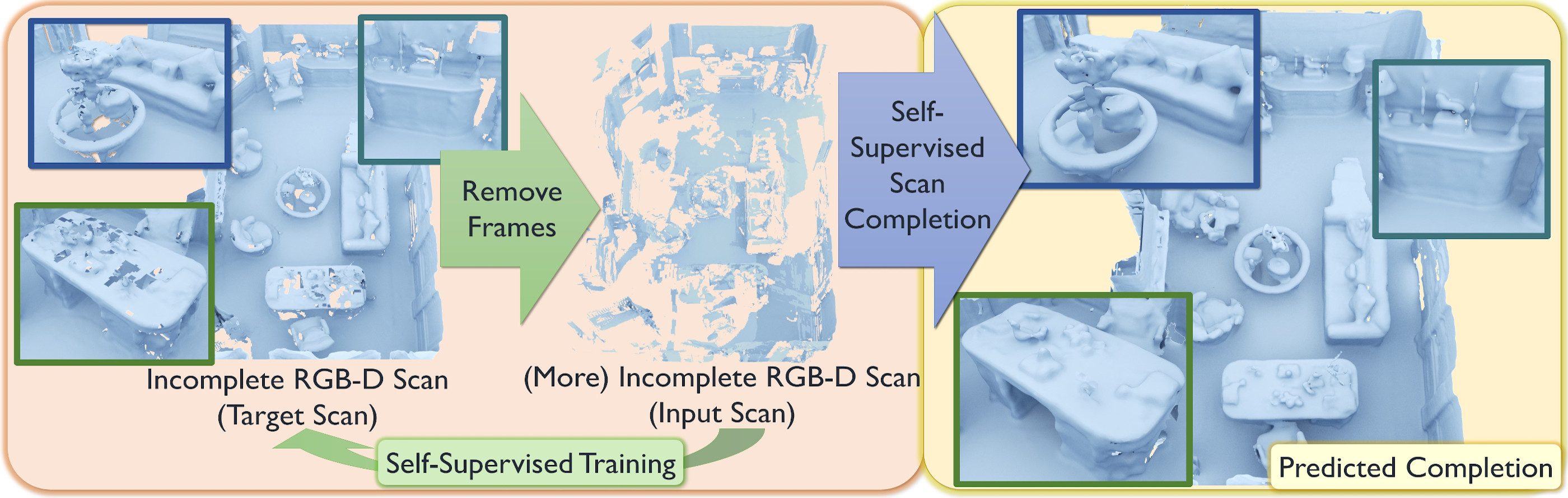

SG-NN presents a self-supervised approach that converts partial and noisy RGB-D scans into high-quality 3D scene reconstructions by inferring unobserved scene geometry. For more details, please see our paper, *SG-NN: Sparse Generative Neural Networks for Self-Supervised Scene Completion of RGB-D Scans*.

Training is implemented with PyTorch. This code was developed under PyTorch 1.1.0, Python 2.7, and uses SparseConvNet.

For visualization, install the marching cubes package by running `python setup.py install` in the `marching_cubes` directory.
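Marching cubes extracts a surface mesh from a volumetric distance field. Its core operation places a vertex wherever the distance value changes sign along a voxel edge, and it finds that vertex by linear interpolation. The sketch below shows only this edge-interpolation step, applied to a single row of samples. It is not the repository's `marching_cubes` package, and the helper names and sample values are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def edge_crossing(p0: Point, p1: Point, d0: float, d1: float, iso: float = 0.0) -> Point:
    """Linearly interpolate the point on edge p0->p1 where the distance equals `iso`.

    Assumes d0 and d1 lie on opposite sides of `iso`, so d1 != d0.
    """
    t = (iso - d0) / (d1 - d0)  # fraction of the way from p0 to p1
    x0, y0, z0 = p0
    x1, y1, z1 = p1
    return (x0 + t * (x1 - x0), y0 + t * (y1 - y0), z0 + t * (z1 - z0))


def crossings_along_x(sdf_row: Sequence[float], voxel_size: float,
                      iso: float = 0.0) -> List[Point]:
    """Surface points along one row of samples spaced `voxel_size` apart on the x axis."""
    points = []
    for i in range(len(sdf_row) - 1):
        d0, d1 = sdf_row[i], sdf_row[i + 1]
        if (d0 - iso) * (d1 - iso) < 0:  # sign change: the surface crosses this edge
            p0 = (i * voxel_size, 0.0, 0.0)
            p1 = ((i + 1) * voxel_size, 0.0, 0.0)
            points.append(edge_crossing(p0, p1, d0, d1, iso))
    return points
```

For example, `crossings_along_x([0.03, 0.01, -0.01, -0.03], voxel_size=0.02)` finds a single crossing midway between the second and third samples, at x ≈ 0.03. Full marching cubes applies this interpolation to the 12 edges of every cube. It then uses a lookup table indexed by the signs at the cube's 8 corners to connect the resulting vertices into triangles.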

- See `python train.py --help` for all training options.
- Example command: `python train.py --gpu 0 --data_path ./data/completion_blocks --train_file_list ../filelists/train_list.txt --val_file_list ../filelists/val_list.txt --save_epoch 1 --save logs/mp --max_epoch 4`
- Trained model: sgnn.pth (7.5M)
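If you script several training runs, for example with different `--max_epoch` values, it can help to build the example command as an argument list. The sketch below uses only the flags from the example command above. `build_train_cmd` is a hypothetical helper. The launcher assumes Python 3.8+ (for `shlex.join`), and it assumes that `python` on the `PATH` points at the Python 2.7 / PyTorch 1.1.0 environment that runs the repository's code.

```python
import shlex
import subprocess
from typing import List


def build_train_cmd(gpu: int, data_path: str, train_list: str, val_list: str,
                    save_dir: str, save_epoch: int = 1, max_epoch: int = 4) -> List[str]:
    """Assemble the README's example training command as an argv list."""
    return [
        "python", "train.py",
        "--gpu", str(gpu),
        "--data_path", data_path,
        "--train_file_list", train_list,
        "--val_file_list", val_list,
        "--save_epoch", str(save_epoch),
        "--save", save_dir,
        "--max_epoch", str(max_epoch),
    ]


if __name__ == "__main__":
    cmd = build_train_cmd(0, "./data/completion_blocks",
                          "../filelists/train_list.txt", "../filelists/val_list.txt",
                          "logs/mp")
    print(shlex.join(cmd))  # inspect the exact command before launching
    # subprocess.run(cmd, check=True)  # uncomment to actually start training
```

Passing a list to `subprocess.run`, rather than one shell string, avoids quoting problems when paths contain spaces.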

- See `python test_scene.py --help` for all test options.
- Example command: `python test_scene.py --gpu 0 --input_data_path ./data/mp_sdf_vox_2cm_input --target_data_path ./data/mp_sdf_vox_2cm_target --test_file_list ../filelists/mp-rooms_val-scenes.txt --model_path sgnn.pth --output ./output --max_to_vis 20`

- Scene data:
  - mp_sdf_vox_2cm_input.zip (44G)
  - mp_sdf_vox_2cm_target.zip (58G)
- Train data:
  - completion_blocks.zip (88G)
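The archives are large. The scene data alone is 44 + 58 = 102G zipped, and all three archives total 190G. The sketch below is a minimal check to run before downloading. It assumes a Python 3 interpreter, separate from the repository's Python 2.7 environment. The helper names are hypothetical, and the check counts only zipped sizes, not the extra space that extraction needs. It reads "G" as GiB (2**30 bytes), which is the more conservative reading and is itself an assumption.

```python
import shutil
from typing import Dict, Iterable

# Zipped archive sizes as listed in this README.
# Assumption: "G" means GiB (2**30 bytes), the more conservative reading.
ARCHIVE_SIZES_GIB: Dict[str, float] = {
    "mp_sdf_vox_2cm_input.zip": 44,
    "mp_sdf_vox_2cm_target.zip": 58,
    "completion_blocks.zip": 88,
}


def required_gib(names: Iterable[str]) -> float:
    """Total zipped size of the selected archives (extraction needs extra space)."""
    return sum(ARCHIVE_SIZES_GIB[name] for name in names)


def has_room_for(names: Iterable[str], path: str = ".") -> bool:
    """True if the filesystem holding `path` has at least the zipped size free."""
    free_gib = shutil.disk_usage(path).free / 2**30
    return free_gib >= required_gib(names)


if __name__ == "__main__":
    scene_data = ["mp_sdf_vox_2cm_input.zip", "mp_sdf_vox_2cm_target.zip"]
    print("scene data:", required_gib(scene_data), "GiB")  # 102
    print("all archives:", required_gib(ARCHIVE_SIZES_GIB), "GiB")  # 190
    print("enough room for scene data here:", has_room_for(scene_data))
```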

- GenerateScans depends on the mLib library.

If you find our work useful in your research, please consider citing:

@inproceedings{dai2020sgnn,
  title = {SG-NN: Sparse Generative Neural Networks for Self-Supervised Scene Completion of RGB-D Scans},
  author = {Dai, Angela and Diller, Christian and Nie{\ss}ner, Matthias},
  booktitle = {Proc. Computer Vision and Pattern Recognition (CVPR), IEEE},
  year = {2020}
}