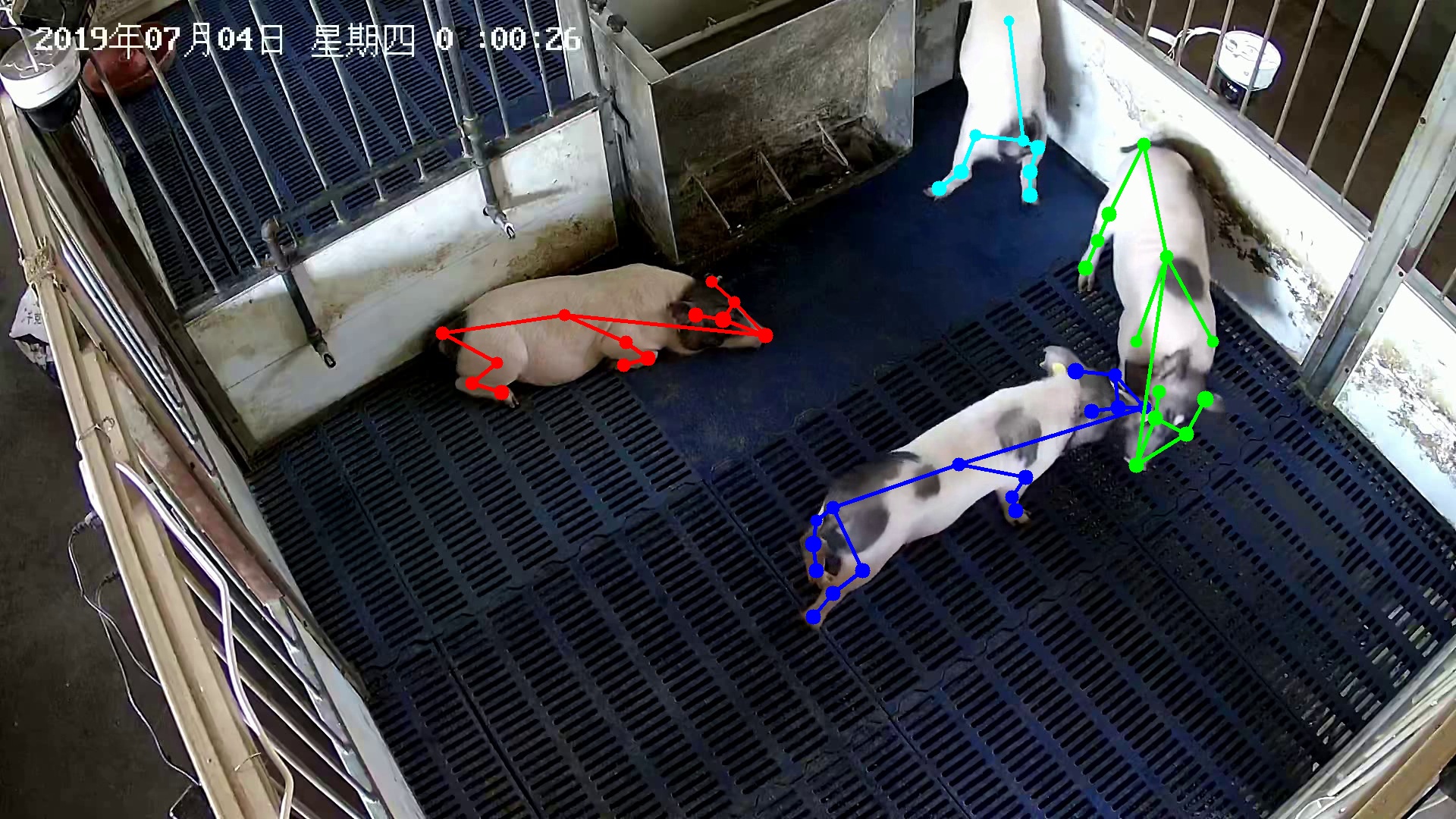

This repository is the official implementation of pig pose detection used in the paper:

An, L., Ren, J., Yu, T., Hai, T., Jia, Y., & Liu, Y. Three-dimensional surface motion capture of multiple freely moving pigs using MAMMAL. bioRxiv (2022).

Other related repositories:

This code is modified from https://github.com/Microsoft/human-pose-estimation.pytorch.

This code has been tested on Ubuntu 20.04, CUDA 11.3 (cuDNN 8.2.1), Python 3.7.13, PyTorch 1.12.0, NVIDIA Titan X. A GPU is currently required.

The following environment was also tested:

- Ubuntu 18.04, cuda 10.1 (cudnn 7.6.5), python 3.6.9, pytorch 1.5.1, NVIDIA RTX 2080 Ti.

1. Clone this repo; we refer to the cloned directory as ${POSE_ROOT}. Go to ${POSE_ROOT} with

cd ${POSE_ROOT}

2. We recommend using conda to manage the virtual environment. After installing conda (follow the official Anaconda instructions), use the commands below to create a virtual Python environment. We have tested the code on Python 3.7 with the newest PyTorch 1.12.0.

conda create -n PIG_POSE python=3.7

conda activate PIG_POSE

3. To install the newest PyTorch (or an older version if you prefer), see https://pytorch.org/ for instructions. We used the command below. Pay close attention to the version of cudatoolkit: it should match the CUDA version of your own machine. In our case, we use CUDA 11.3.

conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

4. To install other packages, run

pip install -r requirements.txt

5. Then go to the lib folder and build the libraries.

cd lib

make

cd ..

Note that before running make, you should edit line 128 of lib/nms/setup_linux.py and change arch=sm_75 to the architecture that matches your own GPU. See https://arnon.dk/matching-sm-architectures-arch-and-gencode-for-various-nvidia-cards/ for more guidance.

6. Build cocoapi. Do NOT use the official version of pycocotools, because we modified cocoeval.py to accommodate our BamaPig2D dataset.

cd lib/cocoapi/PythonAPI

python setup.py install

The installation is finished.
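As a quick sanity check of the installation, the sketch below prints the installed PyTorch version, the CUDA version PyTorch was built with, and the `sm_XX` name of the first GPU, which is the kind of value the `arch` setting in lib/nms/setup_linux.py expects. It is not part of this repository; the helper names `arch_flag` and `report_environment` are hypothetical, and it assumes the first visible GPU is the one you will use.

```python
import importlib.util


def arch_flag(major: int, minor: int) -> str:
    """Format a CUDA compute capability such as (7, 5) as an arch name (sm_75)."""
    return f"sm_{major}{minor}"


def report_environment() -> None:
    """Print PyTorch/CUDA versions and the arch name of the first GPU."""
    if importlib.util.find_spec("torch") is None:
        print("PyTorch is not installed in this environment.")
        return
    import torch

    print("PyTorch version:", torch.__version__)
    print("CUDA version PyTorch was built with:", torch.version.cuda)
    if not torch.cuda.is_available():
        print("No usable GPU found; this code currently requires one.")
        return
    # Assumption: device 0 is the GPU the code will run on.
    major, minor = torch.cuda.get_device_capability(0)
    print("GPU:", torch.cuda.get_device_name(0))
    print("Arch value for this GPU:", arch_flag(major, minor))


if __name__ == "__main__":
    report_environment()
```

If the CUDA version printed here does not match the toolkit installed on your machine, building lib is likely to fail, so it is worth checking before running make.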

Download the pretrained weights (together with the training and testing logs) from Google Drive or Baidu Drive (extract code: 4pw1). Unzip the downloaded output20210225.zip into the ${POSE_ROOT} folder and you will get:

${POSE_ROOT}
`-- output20210225
    `-- pig_univ
        `-- pose_hrnet
            `-- w48_384x384_univ_20210225
                |-- results
                |-- checkpoint.pth
                |-- final_state.pth
                |-- model_best.pth
                |-- pose_hrnet.py
                |-- w48_384x384_univ_20210225_2021-02-25-23-28_train.log

model_best.pth contains the pretrained weights that are actually used.
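To confirm that the archive was unzipped to the right place, a small check like the following can be run from ${POSE_ROOT}. It is only a sketch using the Python standard library; the function name `missing_weight_files` is hypothetical, and the file list is copied from the directory tree above (the log file and results folder are left out).

```python
from pathlib import Path

# Run folder and files taken from the directory tree shown above.
RUN_DIR = Path("output20210225/pig_univ/pose_hrnet/w48_384x384_univ_20210225")
EXPECTED_FILES = ("checkpoint.pth", "final_state.pth", "model_best.pth", "pose_hrnet.py")


def missing_weight_files(pose_root):
    """Return the expected files that are absent under pose_root."""
    run_dir = Path(pose_root) / RUN_DIR
    return [name for name in EXPECTED_FILES if not (run_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_weight_files(".")  # assumes the current directory is ${POSE_ROOT}
    print("All files found." if not missing else f"Missing: {missing}")
```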

- Download the BamaPig3D dataset; its path is denoted as ${YOUR_DATA_PATH}. Note that if you have not run pig_silhouette_det to generate the boxes_pr folder within the dataset, you cannot run this demo, because bounding boxes for the pigs are required. We provide our own detected bounding boxes in the full version of the BamaPig3D dataset; see https://github.com/anl13/MAMMAL_datasets for more details.

- Change the --dataset_folder argument in demo_BamaPig3D.sh to ${YOUR_DATA_PATH}.

- Run demo_BamaPig3D.sh with

bash demo_BamaPig3D.sh

The --vis argument controls whether the program shows the output image with keypoints drawn. If the image panel is shown, press ESC to exit or any other key to move to the next image. Note that only keypoints with a confidence larger than 0.5 are shown. You can change this threshold in pose_estimation/demo_BamaPig3D.py, line 144.

The --write_json argument writes the output keypoints as JSON files into the ${YOUR_DATA_PATH}/keypoints_hrnet folder.
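The confidence threshold mentioned above amounts to a simple filter. The sketch below illustrates the idea on made-up values; it is not the code in demo_BamaPig3D.py, the function name `visible_keypoints` is hypothetical, and it assumes each keypoint is an (x, y, confidence) triplet, which may differ from the actual layout of the JSON files.

```python
def visible_keypoints(keypoints, threshold=0.5):
    """Keep (index, x, y) for keypoints whose confidence exceeds threshold."""
    return [
        (i, x, y)
        for i, (x, y, conf) in enumerate(keypoints)
        if conf > threshold
    ]


# Hypothetical detections for three keypoints of one pig: (x, y, confidence).
sample = [(120.0, 80.5, 0.93), (0.0, 0.0, 0.0), (200.2, 64.0, 0.41)]
print(visible_keypoints(sample))  # prints [(0, 120.0, 80.5)]
```

Keeping the original index alongside the coordinates makes it possible to tell which of the keypoints survived the filter.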

- To train the network, you should first obtain the BamaPig2D dataset. Let's assume your own dataset path is ${YOUR_DATA_2D_PATH}.

- Create a symbolic link to ${YOUR_DATA_2D_PATH} as data/pig_univ. The structure is

${POSE_ROOT}
`-- data
    `-- pig_univ
        |-- images
        |-- annotations

- Download the weights of HRNet pretrained on ImageNet, hrnet_w48-8ef0771d.pth, from the PyTorch model zoo and put the file in the models folder as

${POSE_ROOT}
`-- models
    `-- pytorch
        `-- imagenet
            `-- hrnet_w48-8ef0771d.pth

- Run

bash train.sh

The training log and results are written to the output folder. Training takes about 32 hours on a single GPU; the actual time depends on the performance and number of GPUs.
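Before starting a long training run, it can help to create the dataset link and verify the expected inputs programmatically. The following is a minimal sketch using only the standard library; the function names `link_dataset` and `check_training_inputs` are hypothetical, and the paths follow the layouts described in the steps above.

```python
import os
from pathlib import Path


def link_dataset(data_2d_path, pose_root="."):
    """Create <pose_root>/data/pig_univ as a symlink to the BamaPig2D folder."""
    link = Path(pose_root) / "data" / "pig_univ"
    link.parent.mkdir(parents=True, exist_ok=True)
    if link.is_symlink() or link.exists():
        raise FileExistsError(f"{link} already exists")
    os.symlink(os.path.abspath(data_2d_path), link, target_is_directory=True)
    return link


def check_training_inputs(pose_root="."):
    """Return the required training paths that are missing under pose_root."""
    root = Path(pose_root)
    required = [
        root / "data" / "pig_univ" / "images",
        root / "data" / "pig_univ" / "annotations",
        root / "models" / "pytorch" / "imagenet" / "hrnet_w48-8ef0771d.pth",
    ]
    return [p for p in required if not p.exists()]
```

For example, calling `link_dataset("/path/to/BamaPig2D")` from ${POSE_ROOT} (the path here is a placeholder) and then `check_training_inputs()` should return an empty list once the ImageNet weights are in place.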

Run test.sh as follows to evaluate our pretrained model (see the demo section above).

bash test.sh

This command writes a validation log to output20210225/pig_univ/pose_hrnet/w48_384x384_univ_20210225/. You can also change the cfg file in test.sh to evaluate your own trained model.

As a baseline, our pretrained model performs:

| Arch | AP | AP .5 | AP .75 | AP (M) | AP (L) | AR | AR .5 | AR .75 | AR (M) | AR (L) |
|---|---|---|---|---|---|---|---|---|---|---|
| pose_hrnet | 0.633 | 0.954 | 0.730 | 0.000 | 0.633 | 0.694 | 0.961 | 0.791 | 0.000 | 0.695 |

The output contains 23 keypoints, of which only 19 are valid (non-zero). See the BamaPig2D dataset for more explanation.

If you use this code in your research, please cite the paper

@article{MAMMAL,
  author = {An, Liang and Ren, Jilong and Yu, Tao and Jia, Yichang and Liu, Yebin},
  title = {Three-dimensional surface motion capture of multiple freely moving pigs using MAMMAL},
  journal = {bioRxiv},
  month = {July},
  year = {2022}
}

@article{WangSCJDZLMTWLX19,
  title = {Deep High-Resolution Representation Learning for Visual Recognition},
  author = {Jingdong Wang and Ke Sun and Tianheng Cheng and
            Borui Jiang and Chaorui Deng and Yang Zhao and Dong Liu and Yadong Mu and
            Mingkui Tan and Xinggang Wang and Wenyu Liu and Bin Xiao},
  journal = {TPAMI},
  year = {2019}
}

- Liang An (al17@mails.tsinghua.edu.cn)