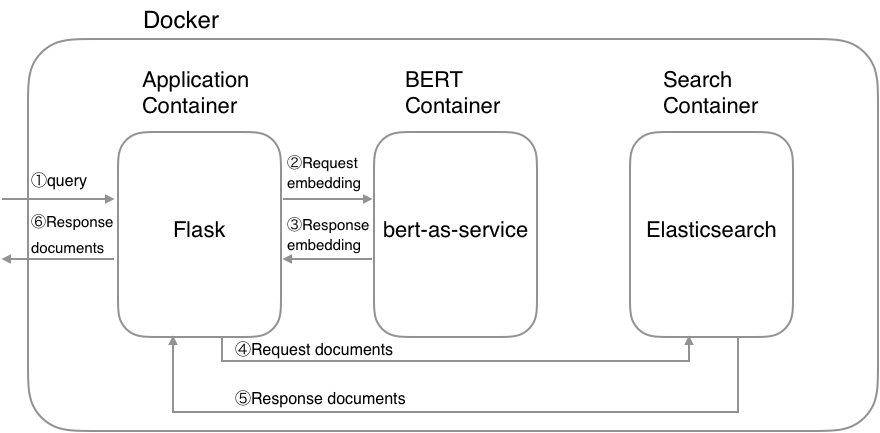

This repository provides the implementation of a neural search engine for RFC documents that use a pre-trained NetBERT model for information retrieval.

The following section lists the requirements in order to start running the project.

This project is based on Docker containers, so ensure to have Docker and docker-compose installed on your machine. In addition, your machine should dispose from a working version of Python 3.6 as well as the following packages:

These libraries can be installed automatically by running the following command in the code/ repository:

pip install -r requirements.txtYou can download the pre-trained NetBERT model with 🤗 transformers as follows:

from transformers import AutoTokenizer, AutoModel

# Load model.

tokenizer = AutoTokenizer.from_pretrained("antoiloui/netbert")

model = AutoModel.from_pretrained("antoiloui/netbert")

# Save it.

model.save_pretrained("path/to/local/folder")

tokenizer.save_pretrained("path/to/local/folder")

Then, you need to convert the 🤗 model checkpoint to the checkpoint format from original BERT repository:

bash convert_model_checkpoint.sh $path/to/local/folderThis will create a new 'tensorflow' repository where you should change the file names that way in order for the docker containers to run properly:

- netbert_config.json -> bert_config.json

- netbert.ckpt.data-00000-of-00001 -> bert_model.ckpt.data-00000-of-00001

- netbert.ckpt.index -> bert_model.ckpt.index

- netbert.ckpt.meta -> bert_model.ckpt.meta

Finally, set the following environnement variable with the path of the folder containing the convert NetBERT checkpoint:

export PATH_MODEL=$path/to/local/folder/tensorflow

In order to run the containers, run the following command:

make installGo into the 'index_creation' repository:

cd index_creation/bash download_data.sh $OUT_DIRbash clean_data.sh $DATA_DIRbash convert_data_format.sh $DATA_DIRYou can use the create index API to add a new index to an Elasticsearch cluster. When creating an index, you can specify the following:

- Settings for the index

- Mappings for fields in the index

- Index aliases

For example, if you want to create rfcsearch index with title, text and text_vector fields, you can create the index by the following command:

$ bash create_index.sh

# index.json

{

"settings": {

"number_of_shards": 2,

"number_of_replicas": 1

},

"mappings": {

"dynamic": "true",

"_source": {

"enabled": "true"

},

"properties": {

"title": {

"type": "text"

},

"text": {

"type": "text"

},

"text_vector": {

"type": "dense_vector",

"dims": 768

}

}

}

}NB: The dims value of text_vector must need to match the dims of a pretrained BERT model.

Once you created an index, you’re ready to index some document. The point here is to convert your document into a vector using BERT. The resulting vector is stored in the text_vector field. Let`s convert your data into a JSON document:

bash create_documents.sh $DATA_DIR $DATA_FILE

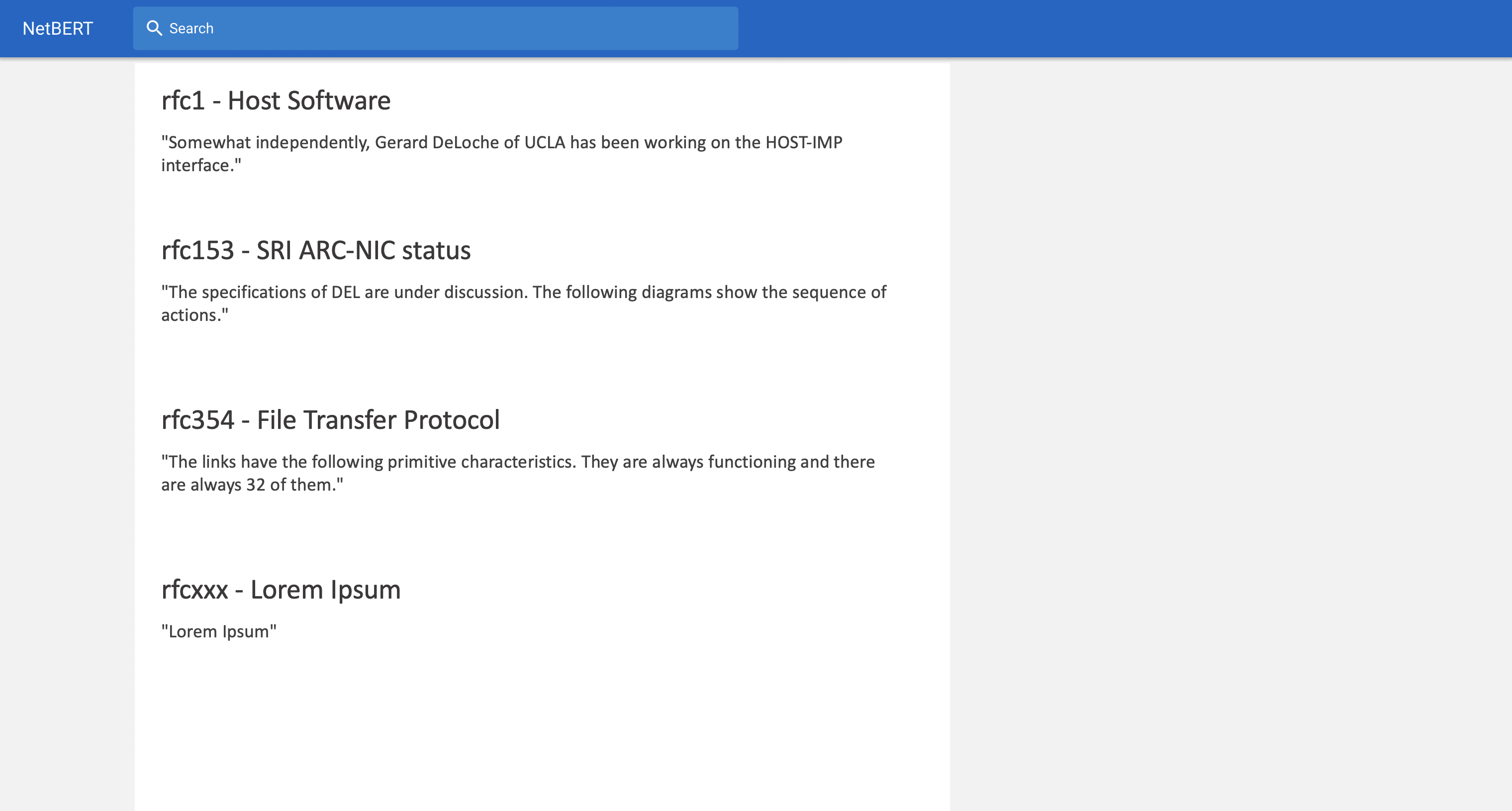

# $DATA_FILE=data/example.csv

"Title","Text"

"rfc1 - Host Software","Somewhat independently, Gerard DeLoche of UCLA has been working on the HOST-IMP interface."

"rfc153 - SRI ARC-NIC status","The specifications of DEL are under discussion. The following diagrams show the sequence of actions."

"rfc354 - File Transfer Protocol","The links have the following primitive characteristics. They are always functioning and there are always 32 of them."

"rfcxxx - Lorem Ipsum","Lorem Ipsum"

...After finishing the script, you get a JSON document as follows:

# documents.json

{"_op_type": "index", "_index": "rfcsearch", "text": "lorem ipsum", "title": "lorem ipsum", "text_vector": [...]}

{"_op_type": "index", "_index": "rfcsearch", "text": "lorem ipsum", "title": "lorem ipsum", "text_vector": [...]}

{"_op_type": "index", "_index": "rfcsearch", "text": "lorem ipsum", "title": "lorem ipsum", "text_vector": [...]}

...

After converting your data into a JSON, you can adds a JSON document to the specified index and makes it searchable:

bash index_documents.sh $DATA_DIROpen your browser and go to http://127.0.0.1:5000.

- Credits: This project was inspired by Hironsan.