DeepVariant is an analysis pipeline that uses a deep neural network to call genetic variants from next-generation DNA sequencing data.

DeepVariant is a suite of Python/C++ programs that run on any Unix-like operating system. For convenience, the documentation refers to building and running DeepVariant on Google Cloud Platform, but the tools themselves can be built and run on any standard Linux computer, including on-premises machines. Note that DeepVariant currently requires Python 2.7 and does not yet work with Python 3.

Pre-built binaries are available at gs://deepvariant/. These are compiled to use SSE4 and AVX instructions, so you'll need a CPU (such as Intel Sandy Bridge) that supports them. (The file /proc/cpuinfo lists these features under "flags".)
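The Python sketch below checks the "flags" line of /proc/cpuinfo for the required instruction sets. It makes two assumptions. First, "SSE4" is taken to mean both the `sse4_1` and `sse4_2` flags, which are the names Linux reports. Second, the function name `missing_cpu_flags` is hypothetical and is not part of DeepVariant. The script avoids Python 3-only syntax, so it should also run under Python 2.7.

```python
# Hypothetical helper, not part of DeepVariant: checks /proc/cpuinfo flags.
# Assumption: "SSE4" in the README means both sse4_1 and sse4_2.
REQUIRED_FLAGS = ("sse4_1", "sse4_2", "avx")


def missing_cpu_flags(cpuinfo_text, required=REQUIRED_FLAGS):
    """Return the required flags absent from the first "flags" line."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            present = set(value.split())
            return [flag for flag in required if flag not in present]
    # No "flags" line at all (e.g. a non-x86 CPU): report everything as missing.
    return list(required)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            missing = missing_cpu_flags(f.read())
    except IOError:
        print("Could not read /proc/cpuinfo (this check assumes Linux).")
    else:
        if missing:
            print("Missing CPU flags: " + ", ".join(missing))
        else:
            print("CPU reports sse4_1, sse4_2 and avx support.")
```

Run it on the target machine. It prints which of the three flags, if any, the kernel does not report.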

Alternatively, see Building and testing DeepVariant for more information on building DeepVariant from source for your platform.

For managed pipeline execution of DeepVariant, see the cost- and speed-optimized, Docker-based pipelines created for Google Cloud Platform.

- DeepVariant release notes

- Building and testing DeepVariant

- DeepVariant quick start

- DeepVariant via Docker

- DeepVariant whole genome case study

- DeepVariant exome case study

- DeepVariant Genomic VCF (gVCF) support

- DeepVariant usage guide

- Advanced Case Study: Train a customized SNP and small indel variant caller for BGISEQ-500 data

- DeepVariant model training (old)

- DeepVariant training data

- Datalab example: visualizing pileup images/tensors

- Getting Started with GCP (running DeepVariant on GCP is not required)

- Google Developer Codelab: Variant Calling on a Rice genome with DeepVariant

- Improve DeepVariant for BGISEQ germline variant calling | slides

For technical details on how DeepVariant works, please see our preprint.

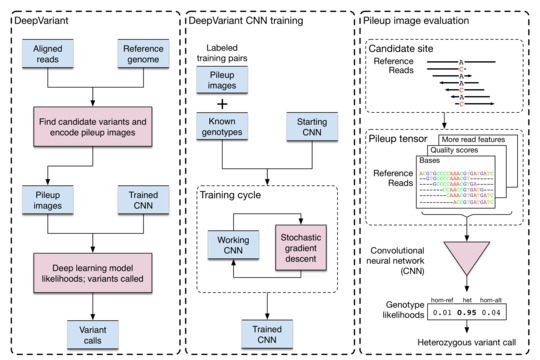

Briefly, we started with some of the reference genomes from Genome in a Bottle, for which there is high-quality ground truth available (or the closest approximation currently possible). Using multiple replicates of these genomes, we produced approximately one hundred million training examples in the form of multi-channel tensors encoding the sequencing instrument data, and then trained a TensorFlow-based image classification model (inception-v3) to assign genotype likelihoods from the experimental data produced by the instrument. Read additional information on the Google Research blog.
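This toy Python sketch illustrates two ideas from the paragraph above: encoding aligned reads as a multi-channel tensor, and turning classifier scores into probabilities over the three diploid genotypes. It is **not** DeepVariant's pileup encoding. The following are all hypothetical simplifications:

- the three channels (base differs from reference, scaled base quality, strand);
- the quality cap of 60;
- the gapless alignment;
- the names `encode_pileup` and `softmax`.

DeepVariant's real encoding uses its own channel set and image layout, and its scores come from the trained inception-v3 network rather than from hand-picked numbers.

```python
import math

GENOTYPES = ("hom-ref (0/0)", "het (0/1)", "hom-alt (1/1)")
MAX_QUAL = 60.0  # hypothetical scaling cap chosen for this illustration


def encode_pileup(ref, reads):
    """Return a nested list shaped [num_reads][len(ref)][3].

    Each read is (bases, base_quals, is_reverse) and is assumed to be
    gaplessly aligned to ref starting at position 0 (a toy simplification).
    Hypothetical channels: 0 = base differs from reference,
    1 = base quality scaled to [0, 1], 2 = read is on the reverse strand.
    """
    tensor = []
    for bases, quals, is_reverse in reads:
        row = []
        for pos, ref_base in enumerate(ref):
            differs = 1.0 if bases[pos] != ref_base else 0.0
            quality = min(quals[pos], MAX_QUAL) / MAX_QUAL
            strand = 1.0 if is_reverse else 0.0
            row.append([differs, quality, strand])
        tensor.append(row)
    return tensor


def softmax(logits):
    """Turn one score per genotype class into probabilities summing to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    reads = [("ACTT", [30, 30, 60, 30], False),  # G>T mismatch at position 2
             ("ACGT", [60, 60, 60, 60], True)]
    pileup = encode_pileup("ACGT", reads)
    print(pileup[0][2])  # [1.0, 1.0, 0.0]
    # Made-up scores standing in for a trained network's output.
    for name, p in zip(GENOTYPES, softmax([0.1, 2.0, -1.0])):
        print("%s: %.3f" % (name, p))
```

The design intent is that each position in each read becomes a small vector of features. This gives the classifier an image-like input, regardless of how the features are chosen.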

Under the hood, DeepVariant relies on Nucleus, a library of Python and C++ code for reading and writing data in common genomics file formats (like SAM and VCF) designed for painless integration with the TensorFlow machine learning framework.

We are delighted to see several external evaluations of the DeepVariant method.

The 2016 PrecisionFDA Truth Challenge, administered by the FDA, assessed several community-submitted variant callsets on the (at the time) blinded evaluation sample, HG002. DeepVariant won the Highest SNP Performance award in the challenge.

DNAnexus posted an extensive evaluation of several variant calling methods, including DeepVariant, using a variety of read sets from HG001, HG002, and HG005. They have also evaluated DeepVariant under a variety of noisy sequencing conditions.

Independent evaluations of DeepVariant v0.6 from both DNAnexus and bcbio are also available. Their analyses support our findings of improved indel accuracy, and also include comparisons to other variant calling tools.

The Genomics team in Google Brain actively supports DeepVariant and is always interested in improving its quality. If you run into an issue, please report the problem on our Issue tracker. Include enough detail in your report for us to reproduce and fix the problem. If possible, include links to snippets of the BAM/VCF/etc. files that trigger the bug. Depending on the severity of the issue, we may patch DeepVariant immediately or roll the fix into the next release.

If you have questions about next-generation sequencing, bioinformatics, or other general topics not specific to DeepVariant we recommend you post your question to a community discussion forum such as BioStars.

Interested in contributing? See CONTRIBUTING.

DeepVariant is licensed under the terms of the BSD-3-Clause license.

DeepVariant happily makes use of many open source packages. We'd like to specifically call out a few key ones:

- abseil-cpp and abseil-py

We thank all of the developers and contributors to these packages for their work.

This is not an official Google product.