How to build an end-to-end Machine Learning pipeline using AWS Glue, Amazon S3, Amazon SageMaker and Amazon Athena.

In this workshop, we will build an end-to-end pipeline to predict the accessibility of playgrounds in NYC based on borough, and tax income information. Our pipeline will combine several different data sources, use AWS Glue for serverless extract-transform-load (ETL) jobs, Amazon SageMaker for Machine learning, Amazon Simple Storage Service (S3) for storage and staging the datasets, and Amazon Athena to make inferences from the trained machine learning model directly using SQL functions.

In addition to training ML models using existing SageMaker functionality, you will learn how to use SageMaker APIs to launch an AutoPilot job from a Jupyter environment, which allows you to automatically build, train and tune ML models.

By the end of this lab, your data scientists and analysts will learn how to directly obtain predictions from ML models using just SQL queries. The inferences can directly then be ingested into a database for OLAP or business intelligence.

The Athena feature at the end of this lab is still in Preview (as of 05-01-2020) and available in "us-east-1". We caution however that APIs can often change between preview to when a feature becomes generally available, so we are including it in the lab for testing purposes only.

The end-to-end architecture we will build in this lab is the following:

Create Glue job for extracting and storing raw data 4

Analyze Raw Data using Athena 9

Create Glue Job to join income, parks, playground data 10

Automate the data processing pipeline using Glue Workflow(Optional) 12

[Machine Learning](#Machine Learning)

[Inferences using Amazon Athena](#Inferences using Amazon Athena)

-

Please use Chrome or Firefox browser to ensure smooth lab experience.

-

Sign into the AWS Management Console

-

Click on S3 from the list of all services. This will bring you to the S3 dashboard page.

-

On the S3 Dashboard, create two buckets for storing Raw and Processed data as follows:

- Click on Create bucket and then enter Bucket name: <<USERID>>-raw and click on Create. Create another bucket for storing processed objects <<USERID>>-processed

In this section, we will be creating two glue jobs:

a. Import_income_data: To extract 2016 income data for all 50 dates in CSV format

b. Import_json_data: To extract parks and playgrounds data in JSON format.

-

Sign into the AWS Management Console https://console.aws.amazon.com/.

-

Click on AWS Glue from the list of all services. This will bring you to the Amazon Glue dashboard page.

-

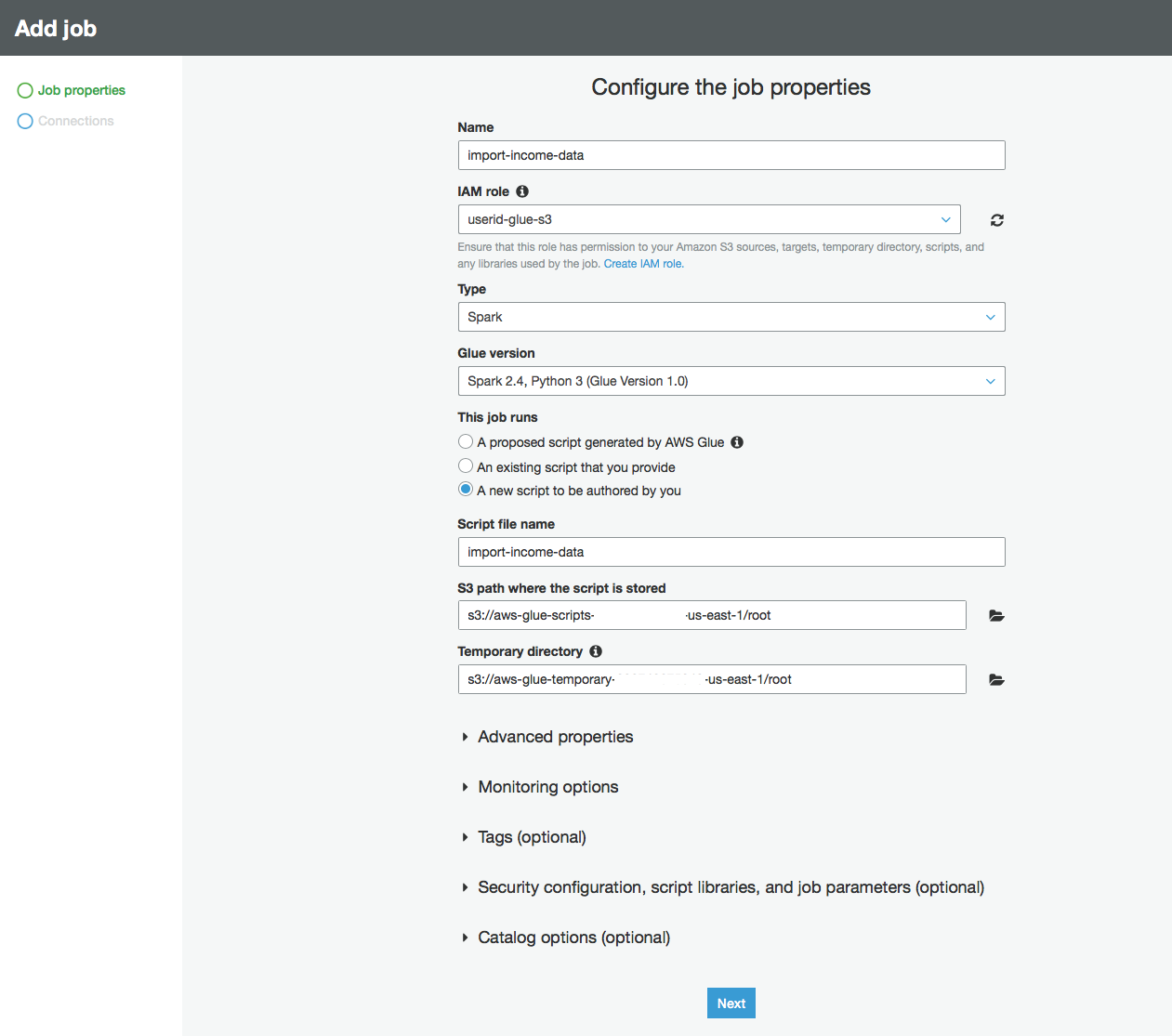

On the Glue Dashboard, click Jobs on the left panel and then click Add Job

-

Enter the following information:

-

Name: import_income_data

-

IAM Role: userid_glue_s3(refer to Appendix for creating this role)

-

This job runs: A new script to be authored by you.

-

-

Click Next in the lower-right corner of the screen.

-

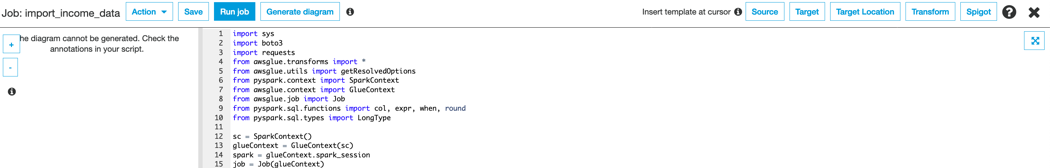

Click Save job and edit script

-

Paste the following source code: Replace <<userid>-raw with the RAW bucket you created in Create S3 buckets section.

The python code below, downloads income data in CSV format from the provided income_url and writes it to s3 using boto3.

- Click on Save and Run Job

-

Repeat steps 1-8 to create a job to import parks and playgrounds data in json format.

-

Name: import_json_data

-

IAM Role: userid_glue_s3(refer to Appendix for creating this role)

-

This job runs: A new script to be authored by you.

-

Replace <<userid>>-raw with your userid in the source code below

-

The python code below, downloads parks & playground data in JSON format from the provided parks_url & playgrounds_url and writes it to S3 in parquet format.

-

Sign into the AWS Management Console https://console.aws.amazon.com/.

-

Click on AWS Glue from the list of all services. This will bring you to the Amazon Glue dashboard page.

-

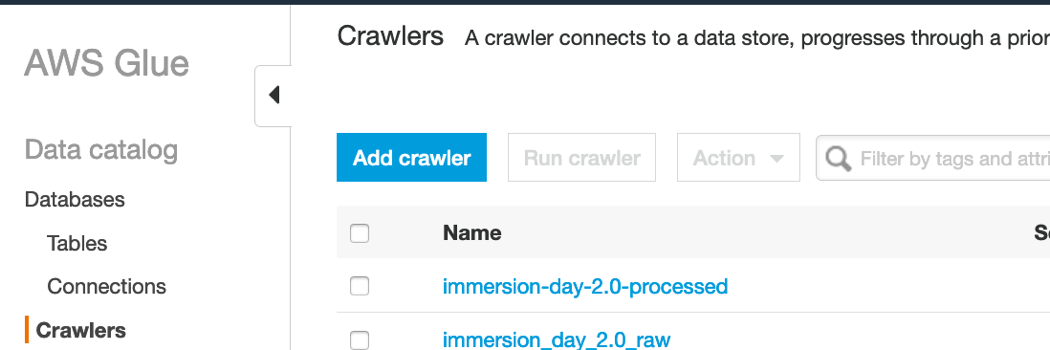

On the Glue Dashboard, click Crawlers on the left panel and then click Add Crawler

Enter the following crawler information:

-

Name: crawl_raw_data

-

Crawler Source Type: Data stores

-

Choose Data Store: S3

-

Crawl data in: Specified path in my account

-

Include Path: <<userid>>-raw

-

Add another datastore: No

-

Choose an existing IAM role and select <<userid>>_glue_s3

-

Frequency: Run on demand

-

Configure Crawler's output

- Add database and enter <<userid>>-raw as database name

and click Create

- Add database and enter <<userid>>-raw as database name

-

Click Finish and Run Crawler

-

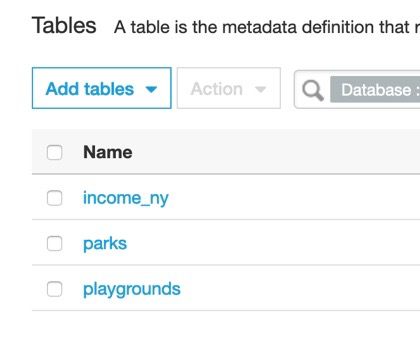

On the left panel, click on Databases and select <<userid>>-raw and click on Tables in <<userid>>-raw.

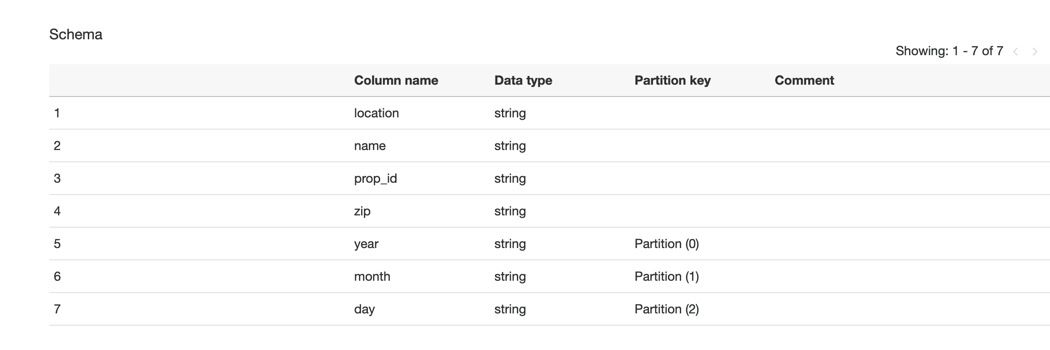

- Click on one of the tables (parks) to view its schema and partitions.

-

Sign into the AWS Management Console https://console.aws.amazon.com/.

-

Click on Athena from the list of all services. This will bring you to the Amazon Athena dashboard page.

-

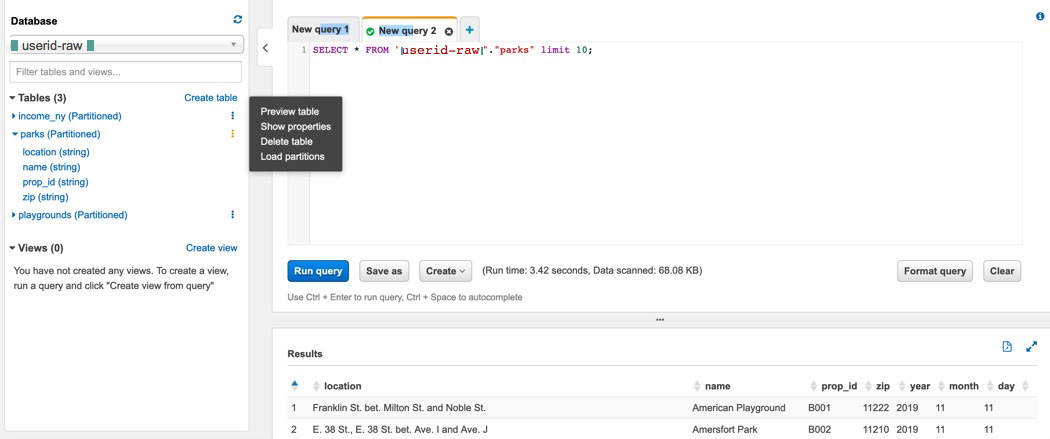

Select <<userid>>-raw from database list and any of the three tables(parks). Clicking on preview table will generate the sample select query. Use Athena to analyze other tables using ANSI SQL syntax.

In this section we will create a glue job to combine the income, parks, playgrounds data into one for ML processing.

-

Name: prep_data_for_ml

-

IAM Role: userid_glue_s3(refer to Appendix for creating this role)

-

This job runs: A new script to be authored by you.

-

Replace <<userid>>-raw with your userid in the source code below

-

Replace processed_dir with your userid in the code where it says "s3://<>-processed/processed/+path"

The python code does the following:

-

Load parks, playground, income data from the glue catalog as dynamic frame

-

Filter income data for NY and include/rename fields required for analysis

-

Join parks and income data using Zipcode

-

Left outer join playgrounds and combined parks_income data using Prop ID.

-

Write combined playgrounds, parks, income data to <<userid>>-processed bucket

-

Click on Save and Run Job. Note: this job might take 10-15 minutes to complete. Feel free to grab a coffee, stretch your legs in the mean time.

-

Create a crawler to catalog and analyze process data as follows:

From the Glue dashboard, click on Crawlers and Add Crawler and enter the following crawler information:

-

Name: crawl_processed_data

-

Crawler Source Type: Data stores

-

Choose Data Store: S3

-

Crawl data in: Specified path in my account

-

Include Path: <<userid>>-processed

-

Add another datastore: No

-

Choose an existing IAM role and select <<userid>>_glue_s3

-

Frequency: Run on demand

-

Configure Crawler's output

- Add database and enter <<userid>>-processed as database

name and click Create

- Add database and enter <<userid>>-processed as database

- Click Finish and Run Crawler

You can analyze this processed data using Athena and SQL as described in prior section: "Analyze raw data using Athena"

-

Sign into the AWS Management Console https://console.aws.amazon.com/.

-

Click on Glue from the list of all services. This will bring you to the Amazon Glue dashboard page.

-

Click on Workflow and add Workflow

-

Workflow name: playgrounds_workflow_for_ml

-

Add trigger to start processing workflow on demand

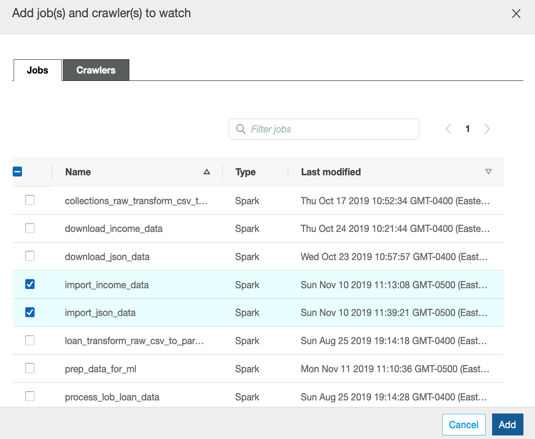

- Add jobs import_json_data and import_income_data to start trigger

- Add trigger to watch the above jobs and to trigger raw_data_crawler

-

Complete the workflow following the above steps

a. Add trigger to watch crawl_raw_data and trigger new event process_data_for_ml job

b. Add trigger to watch process_data_for_ml and trigger crawl_processed_data

- Run workflow, click on History and selct the running job to monitor progress

Next navigate to the s3 bucket where your parquet file is created (<>-processed bucket) and download the file locally on your computer.

Note: For ML Immersion Labs, you don't need to complete the "Inferences using Amazon Athena" Sectoin. Simply upload the parquet file you downloaded to your SageMaker notebook environment and run through the rest of the lab from the notebook entitled "Predict_accessibility_using_ML.ipynb". To download the notebook, git clone this repo locally and upload the notebook from the local folder to your SageMaker Instance. See below for the steps

In the AWS console, search and find Amazon SageMaker, and click Create Notebook Instance.

Give the notebook instance a name such as sagemaker-<>-notebook or any name of your choice which matches the required naming convention.

Leave the defaults for instance type

In Permissions and Encryption, choose Create a new role and enter the name of your S3 bucket (<>-processed bucket). This ensures that SageMaker has access to this bucket for storing model artifacts and processed features.

Click Create Role and Create Notebook Instance.

Once the notebook instance is created, click on Open Jupyter. Download the "Predict_accessibility_using_ML.ipynb" notebook on your local computer. Click Upload in the Jupyter console and upload both the parquet file and the ipynb to Jupyter.

Once uploaded, follow the steps in the Predict_accessibility_using_ML.ipynb Notebook to complete the rest of the lab. Remember: replace the bucket name with your bucket <>-processed bucket, and filename with the name of your parquet file.

Remember: Do not delete your SageMaker endpoints until you complete the Athena portion below. If you delete them by accident, simply recreate the SageMaker endpoint by re-running the code cell shown below

xgb_predictor = xgb.deploy(initial_instance_count=1,

instance_type='ml.m4.xlarge')Once your endpoints are up and running, we will run some inferences using Amazon Athena.

As of 2019 Re:Invent, AWS introduced a new feature whereby analysts and data scientists can directly query their machine learning models using SQL without having to write any custom python code. This enables data scientists and analysts to directly ingest predictions from their ML models into a database of their choice, run SQL queries for further analytics and business intelligence.

Note: As of 05-01-2020, this feature is only available in us-east-1, so only complete the rest of this lab if you did the entire lab in us-east-1.

Here we will show how this works.

Since this is a new feature, as of this Github repo, the feature is still in Preview. This requires one additional stepsin order to get it to work.

Navigate to the Amazon Athena console.

Click Connect to Data Source and Choose S3 and AWS Glue for the Data Catalog.

Select Set up crawler in AWS Glue ... and click on Connect to AWS Glue.

This will open a separate page for AWS Glue. Enter a name for your crawler, choose data stores, keep the default to S3 and using the folder icon, navigate to the folder containing the train.csv file in your bucket.

Caution: it is very important to not enter the full path ending in /train.csv, but rather only up to the folder containing the train.csv file. If you do the former, your Athena queries won't return any results. Make sure to put a "/" after the folder name containing the train.csv file.

Click Next, Next and Create an IAM role. Click Next

Set Frequency to run on demand

For database, choose the database name you chose above <>-raw, Click Next and Finish.

Run the crawler.

Once the crawler has successfully completed, navigate to the Athena Query Editor. You should find a "train" table created containing columns col0 ... col12.

Important While the ML feature is still in preview, you need this additiona step:

Click on Workgroup

Create Workgroup

For workgroup name, Enter: AmazonAthenaPreviewFunctionality

For Query Result Location, use the folder icon to navigate to the <>-processed bucket to store your query artifacts.

Click Create Workgroup

Once the Workgroup is created, ensure that this workgroup is selected, and click Switch Workgroup.

Remember that the XGBoost algorithm requires that all headers are removed before saving the train.csv file, so Glue assigns column names to the columms. Also notice that col0 is the actual labels.

We will now call our SageMaker endpoint and test our predictions on the training data.

In the SageMaker console, navigate to Endpoints, and copy the name of the endpoint you just created for your standalone XGBoost model (not AutoPilot) to the clipboard. It should look something like this: sagemaker-xgboost-####

To do so, in the Query editor, write the following query, but replace where it says :

sagemaker_endpoint = 'your endpoint here': with your endpoint name and replace "sampledb" in below

FROM sampledb.trainwith the name of your database.

USING FUNCTION predict(col1 double, col2 double, col3 double, col4 double, col5 double,

col6 double, col7 bigint, col8 bigint, col9 bigint, col10 bigint, col11 bigint, col12 bigint)

returns double type SAGEMAKER_INVOKE_ENDPOINT

WITH (sagemaker_endpoint='sagemaker-xgboost-2019-12-23-19-06-32-813')

SELECT ROUND(predict(col1, col2, col3, col4, col5, col6, col7, col8, col9, col10, col11, col12)) AS PREDICTED, col0 AS ACTUAL

FROM

(SELECT *

FROM sampledb.train)Click Run.

Once the query is run, the final output should look something like this:

and that's it!

You now have produced inferences using your ML model using Amazon Athena using data in S3, without a single line of python code!

In this lab you have learned how to leverage AWS glue to collect data from different sources, store the raw data on S3 and process and save the result on S3. You analyzed raw and processed data using Athena and also automated the data processing pipeline using Glow workflow.

Following this, you trained used Amazon SageMaker to ingest the parquet files from Glue, perform some data exploration and feature engineering and stage the processed features into an S3 bucket. You then trained an ML model using Amazon SageMaker's built in algorithms as well as SageMaker AutoPilot which automates a lot of the feature engineering tasks common in a data science workflow.

Finally once a trained model was generated, you deployed the model in Amazon SageMaker and performed inferences against that model using Amazon Athena with minimal code. This allows analysts to directly benefit from trained machine learning model using just SQL code.

Create a Role for Glue to have proper permissions

-

Sign into the AWS Management Console https://console.aws.amazon.com/.

-

Click on IAM from the list of all services. This will bring you to the IAM dashboard page.

-

Click Roles on the left hand panel and then click Create role

-

Select Glue

-

Click Next: Permissions.

-

In attach permission policies page, search for Glue and select AWSGlueServiceRole

-

Search for S3 and select AmazonS3FullAccess.

-

Click Next: Tags and Next: Review

-

Enter Userid_glue_s3 for the Role Name and click Create Role

This sample code is made available under a modified MIT-0 license. See the LICENSE file.