We’ve written a blog that describes example use cases including engineering design optimization, scenario analysis, systems-of-systems analysis, and L3/L4 digital twins. TwinFlow provides a unified framework that enables:

- registering different types of predictive models (inductive: data-driven, deductive: simulation, hybrid)

- task orchestration via graphs

- versioning and traceability

- workflow deployment on a scalable compute infrastructure

- mechanisms for self-learning (e.g., triggers that rerun the training and building of predictive models, or probabilistic methods that update model parameters)

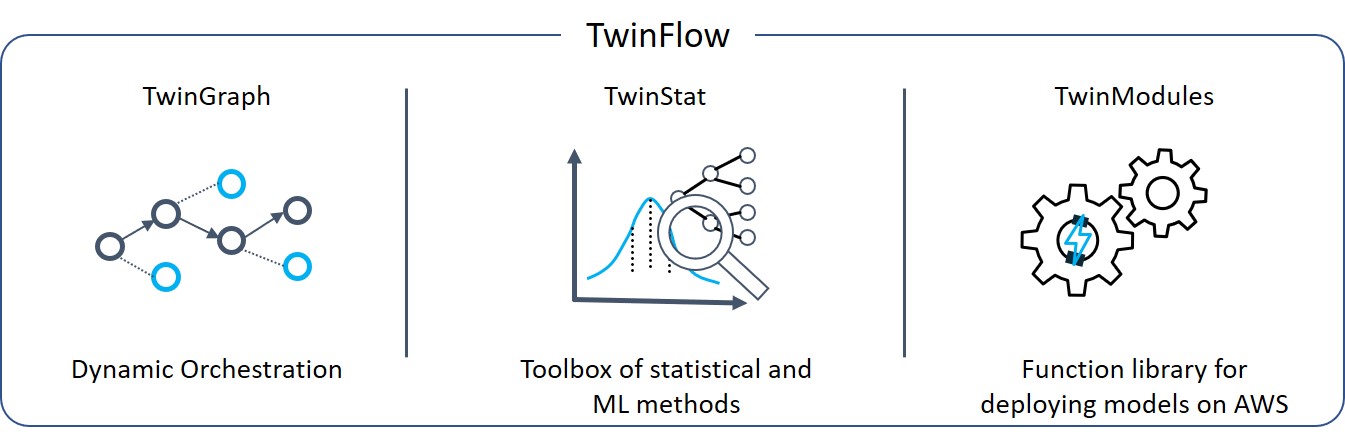

Figure 1: Overview of TwinFlow.

TwinGraph is the core of TwinFlow, providing scalable graph orchestration of containerized compute tasks. A key differentiator of TwinGraph is its ability to chain multiple containers, running on heterogeneous compute nodes, within a dynamic workflow directed acyclic graph (DAG) in an asynchronous, event-driven architecture. Additionally, TwinGraph saves all the required (customizable) artifacts in a scalable graph database such as Amazon Neptune or Apache TinkerGraph.
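The idea of chaining tasks in a DAG and reacting to completion events can be illustrated with a minimal sketch that uses only the Python standard library (`asyncio` and `graphlib`, Python 3.9+). This is not TwinGraph's API: `run_dag`, the task names, and the dependency dictionary are hypothetical, and plain coroutines stand in for containerized tasks.

```python
import asyncio
from graphlib import TopologicalSorter


async def run_dag(tasks, dependencies):
    """Run async tasks in dependency order, starting each once its parents finish.

    tasks: dict mapping node name -> async function taking a dict of parent results
    dependencies: dict mapping every node name -> set of parent node names
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    results = {}
    running = {}  # asyncio.Task -> node name

    while sorter.is_active():
        # Launch every node whose parents have all completed.
        for node in sorter.get_ready():
            parent_results = {p: results[p] for p in dependencies.get(node, ())}
            running[asyncio.create_task(tasks[node](parent_results))] = node

        # Event-driven step: wake up as soon as any running node finishes.
        done, _ = await asyncio.wait(set(running), return_when=asyncio.FIRST_COMPLETED)
        for finished in done:
            node = running.pop(finished)
            results[node] = finished.result()
            sorter.done(node)

    return results


# Hypothetical four-node workflow: "simulate" and "train" can run concurrently.
async def load(_):
    return [1.0, 2.0, 3.0]


async def simulate(parents):
    return sum(parents["load"])


async def train(parents):
    return len(parents["load"])


async def report(parents):
    return {"total": parents["simulate"], "n": parents["train"]}


TASKS = {"load": load, "simulate": simulate, "train": train, "report": report}
DEPS = {
    "load": set(),
    "simulate": {"load"},
    "train": {"load"},
    "report": {"simulate", "train"},
}

if __name__ == "__main__":
    print(asyncio.run(run_dag(TASKS, DEPS)))
```

In this sketch the scheduler never polls: it sleeps until a task completes, then releases whichever downstream nodes have become ready.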

TwinFlow relies on TwinStat for inductive, data-driven predictive modelling, including probabilistic methods for updating model parameters. Other types of predictive models, most notably deductive simulations, must be supplied from external sources (e.g., partners or customers).
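As a generic illustration of what a probabilistic parameter update can look like, the sketch below applies a conjugate Gaussian (normal-normal) Bayesian update to a single scalar parameter with known observation noise. It is an assumption chosen for simplicity, not a description of the methods TwinStat implements; `GaussianBelief` and `update_belief` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GaussianBelief:
    """Gaussian belief about one scalar model parameter."""
    mean: float
    variance: float


def update_belief(prior, observations, noise_variance):
    """Return the posterior belief after noisy direct observations of the parameter.

    Assumes each observation equals the parameter plus zero-mean Gaussian noise
    with known variance `noise_variance`.
    """
    if noise_variance <= 0 or prior.variance <= 0:
        raise ValueError("variances must be positive")
    n = len(observations)
    if n == 0:
        return prior  # no new data, belief is unchanged

    prior_precision = 1.0 / prior.variance
    data_precision = n / noise_variance
    posterior_variance = 1.0 / (prior_precision + data_precision)
    posterior_mean = posterior_variance * (
        prior_precision * prior.mean + sum(observations) / noise_variance
    )
    return GaussianBelief(posterior_mean, posterior_variance)


if __name__ == "__main__":
    prior = GaussianBelief(mean=0.0, variance=1.0)
    print(update_belief(prior, [2.0, 2.0], noise_variance=1.0))
```

Each batch of measurements pulls the mean toward the data and shrinks the variance, so the posterior can serve as the prior for the next batch.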

TwinModules is the automation that provides Python wrappers for AWS tasks, compute and workflow orchestration, and traceability. Users can insert predictive models anywhere within the graph orchestration to complete the Level 4 (L4) digital twin (DT).

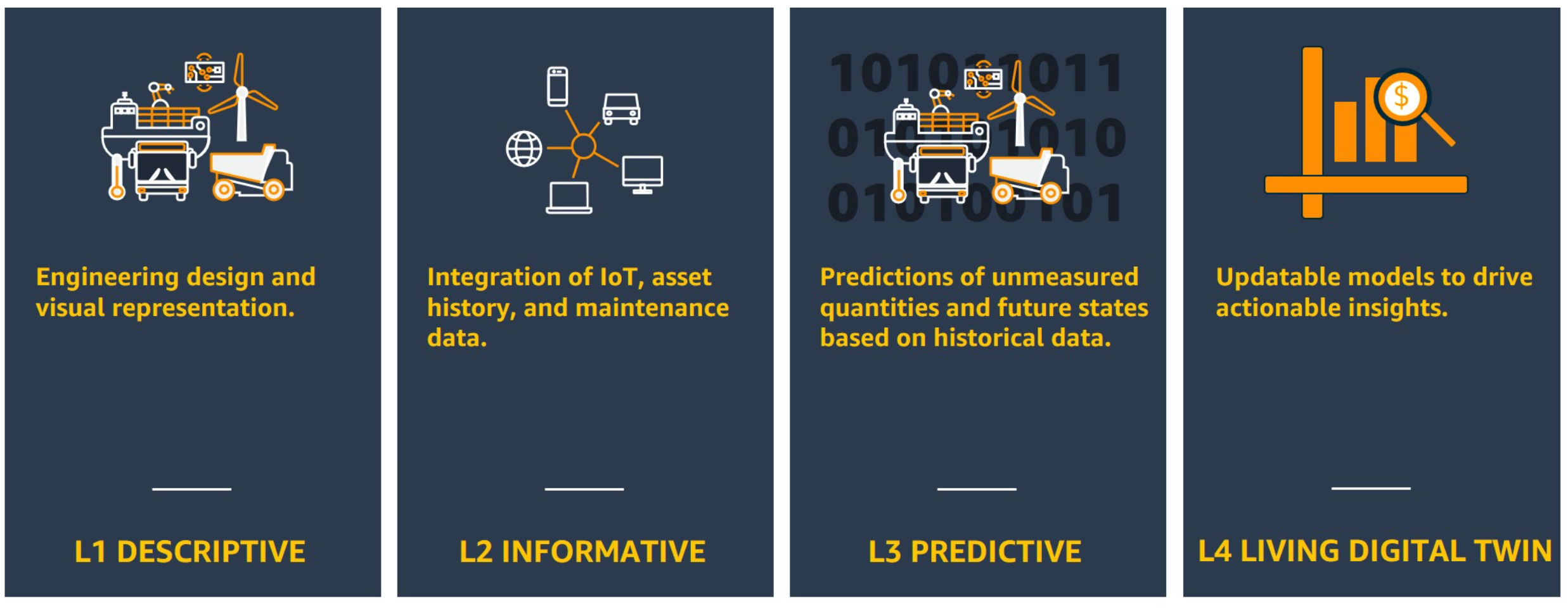

TwinFlow is designed to help create Digital Twins. In prior work, we have described different categories of DTs. These include L1 (descriptive), L2 (informative), L3 (predictive), and L4 (living), which is the focus of this work. This is shown in Figure 2.

Figure 2: Leveling Framework for Digital Twins.

A Level 4 (L4) digital twin (DT) requires the ability to retrain or rebuild any predictive model that the digital twin uses. Predictive models can be inductive (data-driven: statistical, ML/DL), deductive (first-principles-based, spatial simulations), or a hybrid of the two. Most companies that use L4 DTs write custom code for each application. TwinFlow provides tools that minimize the undifferentiated heavy lifting of building L4 DTs, so users can focus on the code specific to their use case. Figure 3 shows how the submodules of TwinFlow (TwinGraph, TwinStat, and TwinModules) fit together to form an L4 DT solution.

Figure 3: Overall Architecture of TwinFlow for Digital Twins.
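The retraining requirement of an L4 DT can be illustrated with a small, hypothetical trigger: it tracks the rolling mean absolute error between predictions and measurements and calls a user-supplied retraining function when that error exceeds a threshold. The class name, the threshold rule, and the window size are assumptions made for this sketch, not part of TwinFlow.

```python
from collections import deque


class RetrainTrigger:
    """Call `retrain` when the rolling mean absolute error exceeds `threshold`."""

    def __init__(self, retrain, threshold, window=20):
        self.retrain = retrain          # user-supplied callable with no arguments
        self.threshold = threshold      # maximum tolerated mean absolute error
        self.errors = deque(maxlen=window)

    def observe(self, predicted, measured):
        """Record one prediction/measurement pair; return True if retraining ran."""
        self.errors.append(abs(predicted - measured))
        window_full = len(self.errors) == self.errors.maxlen
        if window_full and sum(self.errors) / len(self.errors) > self.threshold:
            self.retrain()
            self.errors.clear()  # start a fresh window for the rebuilt model
            return True
        return False


if __name__ == "__main__":
    trigger = RetrainTrigger(lambda: print("retraining model"), threshold=0.5, window=3)
    for predicted, measured in [(1.0, 1.0), (1.0, 1.0), (1.0, 1.1), (1.0, 3.0)]:
        trigger.observe(predicted, measured)
```

Waiting for a full window before firing avoids retraining on a single outlier; a production trigger would likely also rate-limit retraining and log each event for traceability.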

When the TwinFlow project is cloned, the three modules will appear as empty folders.

git clone https://github.com/aws-samples/twinflow.git

In order to use the submodules, you must run two commands:

- `git submodule init` to initialize your local configuration file:

git submodule init

- `git submodule update` to fetch all the data from each submodule project and check out the appropriate commit listed in TwinFlow:

git submodule update
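To confirm that the submodules were actually populated, a short check along the following lines can help. It is a sketch only: `empty_submodules` is a hypothetical helper, and the folder names in the usage example are assumed to match the module names, so adjust them to the folders present in your checkout.

```python
from pathlib import Path


def empty_submodules(repo_root, names):
    """Return the names under repo_root that are missing or contain no entries."""
    root = Path(repo_root)
    unpopulated = []
    for name in names:
        folder = root / name
        # An uninitialized submodule shows up as an empty directory.
        if not folder.is_dir() or not any(folder.iterdir()):
            unpopulated.append(name)
    return unpopulated


if __name__ == "__main__":
    # Assumed folder names; run from the root of the cloned repository.
    print(empty_submodules(".", ["TwinGraph", "TwinStat", "TwinModules"]))
```

An empty list means every listed folder contains files.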

Alternatively, to clone the repository and its submodules in a single step, with each submodule checked out at the commit recorded in TwinFlow, you can use:

git clone --recursive https://github.com/aws-samples/twinflow.git

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.

- TwinFlow Overview & Use Cases

- Digital Twins Leveling Framework

- Level 1 Descriptive DT Overview

- Level 2 Informative DT Overview

- Level 3 Predictive DT Overview

- Level 4 Living DT Overview

- AWS Do-PM Framework: Parts 1, 2, 3

- Level 3 Digital Twin Virtual Sensors with Ansys on AWS

This open-source framework was developed by the Autonomous Computing Team within the Amazon Web Services (AWS) Worldwide Specialist Organization (WWSO). Developers include Ross Pivovar, Vidyasagar Ananthan, Satheesh Maheswaran, and Cheryl Abundo. The authors would like to thank Alex Iankoulski for his detailed guidance and expertise in reviewing the code.