A curated list of resources, including papers, datasets, and relevant links, pertaining to object placement, which aims to learn a plausible spatial transformation (e.g., shifting, scaling, affine transformation, perspective transformation) for the foreground object inserted into a composite image, taking both geometric and semantic information into account. The simplest case is finding a reasonable location and scale for the foreground object. For more complete resources on general image composition (object insertion), please refer to Awesome-Image-Composition.
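To make the simplest case concrete, a location-and-scale placement can be written as a 3x3 matrix in homogeneous coordinates. This is the same form that covers affine and perspective transformations, so the listed transformation types differ only in which matrix entries are free. The sketch below is illustrative only. `placement_matrix`, `apply_transform`, and `placed_box` are hypothetical helpers that do not come from any listed method, and they assume the foreground's top-left corner is its origin.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Point = Tuple[float, float]


def placement_matrix(x: float, y: float, scale: float) -> Matrix:
    """Hypothetical helper: scale the foreground uniformly by `scale`,
    then shift its top-left corner to (x, y) in background coordinates."""
    return [
        [scale, 0.0, x],
        [0.0, scale, y],
        [0.0, 0.0, 1.0],
    ]


def apply_transform(h: Matrix, p: Point) -> Point:
    """Map a 2D point with a 3x3 matrix in homogeneous coordinates.
    The division by w matters only for perspective transforms. For shift,
    scale, and affine matrices the last row is (0, 0, 1), so w == 1."""
    px, py = p
    u = h[0][0] * px + h[0][1] * py + h[0][2]
    v = h[1][0] * px + h[1][1] * py + h[1][2]
    w = h[2][0] * px + h[2][1] * py + h[2][2]
    return (u / w, v / w)


def placed_box(fg_w: float, fg_h: float, h: Matrix) -> List[Point]:
    """Corners of a fg_w x fg_h foreground after placement,
    clockwise from the top-left corner."""
    corners = [(0.0, 0.0), (fg_w, 0.0), (fg_w, fg_h), (0.0, fg_h)]
    return [apply_transform(h, c) for c in corners]


if __name__ == "__main__":
    # A 200x100 foreground shrunk by half with its top-left corner at (50, 80).
    print(placed_box(200, 100, placement_matrix(50, 80, 0.5)))
```

Running the example prints the corners (50, 80), (150, 80), (150, 130), and (50, 130). A method that predicts a full affine or perspective transformation would simply fill in more entries of the matrix.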

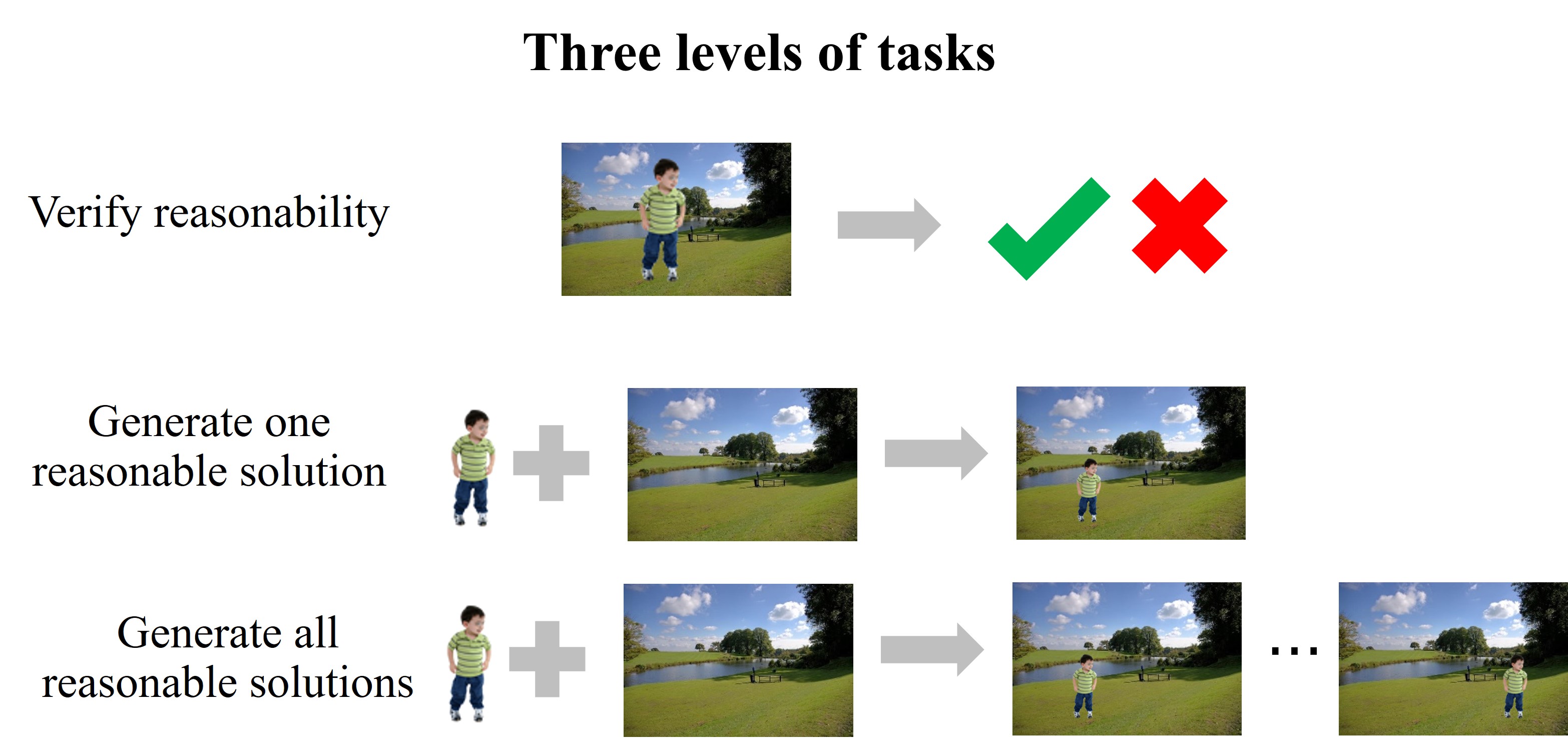

We can define three levels of tasks for object placement. (1) Level 1: given a composite image, verify whether the foreground placement is reasonable. (2) Level 2: given a pair of foreground and background, generate one composite image with reasonable foreground placement. (3) Level 3: given a pair of foreground and background, generate all composite images with reasonable foreground placement.
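One way to see how the three levels relate is to assume a single plausibility scorer over placements (x, y, scale). Level 1 thresholds its score, Level 2 picks one high-scoring placement, and Level 3 keeps every placement that passes. The sketch below is hypothetical: the scorer, the candidate grid, and all function names are illustrative assumptions rather than the formulation of any listed paper. In particular, many Level 2 methods generate a placement directly instead of enumerating candidates.

```python
from typing import Callable, Iterable, List, Tuple

# A placement is (x, y, scale): top-left corner and scale factor of the foreground.
Placement = Tuple[float, float, float]
# Hypothetical plausibility model mapping a placement to a score in [0, 1].
Scorer = Callable[[Placement], float]


def level1_verify(score: Scorer, p: Placement, threshold: float = 0.5) -> bool:
    """Level 1: judge whether one given placement (one composite) is reasonable."""
    return score(p) >= threshold


def level2_generate_one(score: Scorer, candidates: Iterable[Placement]) -> Placement:
    """Level 2: return one reasonable placement, here the highest-scoring candidate
    (ties go to the first candidate encountered)."""
    return max(candidates, key=score)


def level3_generate_all(
    score: Scorer, candidates: Iterable[Placement], threshold: float = 0.5
) -> List[Placement]:
    """Level 3: return every candidate placement judged reasonable."""
    return [p for p in candidates if level1_verify(score, p, threshold)]


def candidate_grid(
    width: int, height: int, step: int, scales: Iterable[float]
) -> List[Placement]:
    """Enumerate placements on a coarse grid over the background."""
    return [
        (x, y, s)
        for s in scales
        for x in range(0, width, step)
        for y in range(0, height, step)
    ]


if __name__ == "__main__":
    # Toy scorer (an assumption for illustration): only placements low in the
    # image (y >= 200) at half scale are considered plausible.
    def toy(p: Placement) -> float:
        return 1.0 if p[1] >= 200 and p[2] == 0.5 else 0.0

    grid = candidate_grid(400, 300, 100, [0.5, 1.0])
    print(level1_verify(toy, (0, 0, 1.0)))
    print(level2_generate_one(toy, grid))
    print(level3_generate_all(toy, grid))
```

With this toy scorer, Level 1 rejects (0, 0, 1.0), Level 2 returns (0, 200, 0.5), and Level 3 returns the four half-scale placements along y = 200. The gap between Level 2 and Level 3 is why Level 3 is the hardest: a model must cover every plausible placement, not just find one.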

Contributions are welcome. If you wish to contribute, feel free to send a pull request. If you have suggestions for new sections to be included, please raise an issue and discuss before sending a pull request.

A brief review of object placement is included in the following survey on image composition:

Li Niu, Wenyan Cong, Liu Liu, Yan Hong, Bo Zhang, Jing Liang, Liqing Zhang: "Making Images Real Again: A Comprehensive Survey on Deep Image Composition." arXiv preprint arXiv:2106.14490 (2021). [arXiv] [slides]

Try this online demo for object placement and have fun!

- Gemma Canet Tarrés, Zhe Lin, Zhifei Zhang, Jianming Zhang, Yizhi Song, Dan Ruta, Andrew Gilbert, John Collomosse, Soo Ye Kim: "Thinking Outside the BBox: Unconstrained Generative Object Compositing." ECCV (2024) [pdf]

- Yaxuan Qin, Jiayu Xu, Ruiping Wang, Xilin Chen: "Think before Placement: Common Sense Enhanced Transformer for Object Placement." ECCV (2024) [pdf] [code]

- Yibin Wang, Yuchao Feng, Jianwei Zheng: "Learning Object Placement via Convolution Scoring Attention." BMVC (2024) [pdf] [code]

- Guosheng Ye, Jianming Wang, Zizhong Yang: "Efficient Object Placement via FTOPNet." Electronics (2023) [pdf]

- Shengping Zhang, Quanling Meng, Qinglin Liu, Liqiang Nie, Bineng Zhong, Xiaopeng Fan, Rongrong Ji: "Interactive Object Placement with Reinforcement Learning." ICML (2023) [pdf]

- Yibin Wang, Yuchao Feng, Jie Wu, Honghui Xu, Jianwei Zheng: "CA-GAN: Object Placement via Coalescing Attention based Generative Adversarial Network." ICME (2023) [pdf]

- Siyuan Zhou, Liu Liu, Li Niu, Liqing Zhang: "Learning Object Placement via Dual-path Graph Completion." ECCV (2022) [arXiv] [code]

- Lingzhi Zhang, Tarmily Wen, Jie Min, Jiancong Wang, David Han, Jianbo Shi: "Learning Object Placement by Inpainting for Compositional Data Augmentation." ECCV (2020) [pdf]

- Shashank Tripathi, Siddhartha Chandra, Amit Agrawal, Ambrish Tyagi, James M. Rehg, Visesh Chari: "Learning to Generate Synthetic Data via Compositing." CVPR (2019) [arXiv]

- Chen-Hsuan Lin, Ersin Yumer, Oliver Wang, Eli Shechtman, Simon Lucey: "ST-GAN: Spatial Transformer Generative Adversarial Networks for Image Compositing." CVPR (2018) [arXiv] [code]

- Sijie Zhu, Zhe Lin, Scott Cohen, Jason Kuen, Zhifei Zhang, Chen Chen: "TopNet: Transformer-based Object Placement Network for Image Compositing." CVPR (2023) [pdf]

- Li Niu, Qingyang Liu, Zhenchen Liu, Jiangtong Li: "Fast Object Placement Assessment." arXiv:2205.14280 (2022) [arXiv] [code]

- Liu Liu, Zhenchen Liu, Bo Zhang, Jiangtong Li, Li Niu, Qingyang Liu, Liqing Zhang: "OPA: Object Placement Assessment Dataset." arXiv:2107.01889 (2021) [arXiv] [code]

- Rishubh Parihar, Harsh Gupta, Sachidanand VS, R. Venkatesh Babu: "Text2Place: Affordance-aware Text Guided Human Placement." ECCV (2024) [pdf] [code]

- Jieteng Yao, Junjie Chen, Li Niu, Bin Sheng: "Scene-aware Human Pose Generation using Transformer." ACM MM (2023) [pdf]

- Donghoon Lee, Sifei Liu, Jinwei Gu, Ming-Yu Liu, Ming-Hsuan Yang, Jan Kautz: "Context-Aware Synthesis and Placement of Object Instances." NeurIPS (2018) [pdf]

- Fuwen Tan, Crispin Bernier, Benjamin Cohen, Vicente Ordonez, Connelly Barnes: "Where and Who? Automatic Semantic-Aware Person Composition." WACV (2018) [arXiv] [code]

- Anna Volokitin, Igor Susmelj, Eirikur Agustsson, Luc Van Gool, Radu Timofte: "Efficiently Detecting Plausible Locations for Object Placement Using Masked Convolutions." ECCV Workshop (2020) [pdf]

- Nikita Dvornik, Julien Mairal, Cordelia Schmid: "On the Importance of Visual Context for Data Augmentation in Scene Understanding." T-PAMI (2019) [arXiv]

- Nikita Dvornik, Julien Mairal, Cordelia Schmid: "Modeling Visual Context is Key to Augmenting Object Detection Datasets." ECCV (2018) [pdf]

- OPAZ: it contains 7 distinct categories of foreground objects with 15 images per category, as well as 10 background images per category. OPAZ comprises 8,160 generated images, of which 1,390 are labeled as rational and 6,770 as irrational. [pdf] [link]

- OPA: it contains 62,074 training images and 11,396 test images, and the foregrounds/backgrounds in the training set and the test set do not overlap. The training (resp., test) set contains 21,376 (resp., 3,588) positive samples and 40,698 (resp., 7,808) negative samples. In addition, the training (resp., test) set contains 2,701 (resp., 1,436) distinct foreground objects and 1,236 (resp., 153) distinct background images. [pdf] [link]
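The OPA split sizes above are internally consistent: in each split, the positive (reasonable placement) and negative samples add up to the total, and roughly one third of the samples are positive. The short check below uses only the numbers quoted in the OPA description; the `OPA` dictionary layout and `positive_ratio` helper are illustrative, not part of the dataset's release.

```python
# Split statistics copied from the OPA description above.
OPA = {
    "train": {"total": 62_074, "positive": 21_376, "negative": 40_698},
    "test": {"total": 11_396, "positive": 3_588, "negative": 7_808},
}


def positive_ratio(split: dict) -> float:
    """Fraction of samples in a split labeled as positive."""
    return split["positive"] / split["total"]


for name, split in OPA.items():
    # Every sample is either positive or negative, so the counts must add up.
    assert split["positive"] + split["negative"] == split["total"], name
    print(f"{name}: {positive_ratio(split):.1%} positive")
```

This prints about 34.4% positive for the training set and 31.5% for the test set, so a classifier for Level 1 assessment faces a moderately imbalanced label distribution.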

- STRAT: it contains three subdatasets, STRAT-glasses, STRAT-hat, and STRAT-tie, which correspond to "glasses try-on", "hat try-on", and "tie try-on", respectively. The accessory image (resp., human face or portrait image) is treated as the foreground (resp., background). In each subdataset, the training set has 2,000 foreground-background pairs and the test set has 1,000 foreground-background pairs. [pdf] [link]

- Philipp Bomatter, Mengmi Zhang, Dimitar Karev, Spandan Madan, Claire Tseng, Gabriel Kreiman: "When Pigs Fly: Contextual Reasoning in Synthetic and Natural Scenes." ICCV (2021) [paper] [dataset&code]

- Manoj Acharya, Anirban Roy, Kaushik Koneripalli, Susmit Jha, Christopher Kanan, Ajay Divakaran: "Detecting Out-Of-Context Objects Using Graph Context Reasoning Network." IJCAI (2022) [paper]