This repository applies reinforcement learning (RL) to the Aw-Rascle-Zhang (ARZ) traffic model using proximal policy optimization (PPO). The implementation is based on "PyTorch Implementations of Reinforcement Learning Algorithms" and is modified to work with the ARZ simulator.
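For readers unfamiliar with PPO, the sketch below shows the clipped surrogate objective that the algorithm is built around. It is a minimal, dependency-free illustration and not the code used in this repository: the function name `ppo_clip_loss`, its arguments, and the default `clip_eps=0.2` are assumptions chosen for the example.

```python
import math


def ppo_clip_loss(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Return the mean PPO clipped surrogate loss (a quantity to minimize).

    All names and defaults here are illustrative assumptions, not taken
    from the repository's main.py.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio between the updated policy and the old policy.
        ratio = math.exp(new_lp - old_lp)
        # Restrict the ratio to the interval [1 - eps, 1 + eps].
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Take the pessimistic (smaller) of the two surrogate terms.
        total += min(ratio * adv, clipped * adv)
    # Negate because optimizers minimize, while PPO maximizes the surrogate.
    return -total / len(advantages)
```

The clipping removes the incentive to move the policy far from the one that collected the data, which is what lets PPO reuse each batch for several gradient steps.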

- Python 3 (it might work with Python 2, but I didn't test it)

- ARZ Simulator

- PyTorch

- OpenAI baselines


The parameters of the ARZ simulator are defined in "settings_file.py". The hyperparameters for RL training are defined in "main.py".
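For orientation, the ARZ model is commonly written in the literature as the following pair of equations for traffic density $\rho(x,t)$ and velocity $v(x,t)$. This is the textbook form with a relaxation term, and it is not necessarily the exact variant or notation used in "settings_file.py". The pressure function $p(\rho)$, the equilibrium velocity $V(\rho)$, and the relaxation time $\tau$ are the kinds of quantities a simulator of this model needs to be given.

$$
\begin{aligned}
\partial_t \rho + \partial_x (\rho v) &= 0, \\
\partial_t \bigl(v + p(\rho)\bigr) + v\,\partial_x \bigl(v + p(\rho)\bigr) &= \frac{V(\rho) - v}{\tau}.
\end{aligned}
$$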


To reproduce the results, run the following:

# Training

python main.py

- After training the RL agent, evaluate the performance of the RL controller by running:

# Evaluation & Visualization

python evaluation_RL.py

- The model-based baselines (open-loop, backstepping, P, and PI controllers) can be run as follows:

# Openloop control

# Evaluation & Visualization

python evaluation_Openloop.py

# Backstepping control

# Evaluation & Visualization

python evaluation_Backstep.py

# P control

# Evaluation & Visualization

python evaluation_P_control_inlet.py

# PI control

# Evaluation & Visualization

python evaluation_PI_control_Inlet_N_Outlet.py
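If you want to run all four baseline evaluations in one go, a small wrapper along the following lines may help. It is a convenience sketch and not part of the repository: the helper names `build_commands` and `run_all` are hypothetical, and it assumes the evaluation scripts listed above sit in the current working directory.

```python
import subprocess
import sys

# Script names as listed in this README.
EVALUATION_SCRIPTS = [
    "evaluation_Openloop.py",
    "evaluation_Backstep.py",
    "evaluation_P_control_inlet.py",
    "evaluation_PI_control_Inlet_N_Outlet.py",
]


def build_commands(scripts=EVALUATION_SCRIPTS, python=sys.executable):
    """Return one argument list per script, e.g. [python, "evaluation_Openloop.py"]."""
    return [[python, script] for script in scripts]


def run_all(commands):
    """Run each command in order and stop at the first failure."""
    for cmd in commands:
        print("Running:", " ".join(cmd))
        # check=True raises CalledProcessError if a script exits with an error.
        subprocess.run(cmd, check=True)
```

Calling `run_all(build_commands())` from the repository root runs the baselines sequentially with the same interpreter that launched the wrapper.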

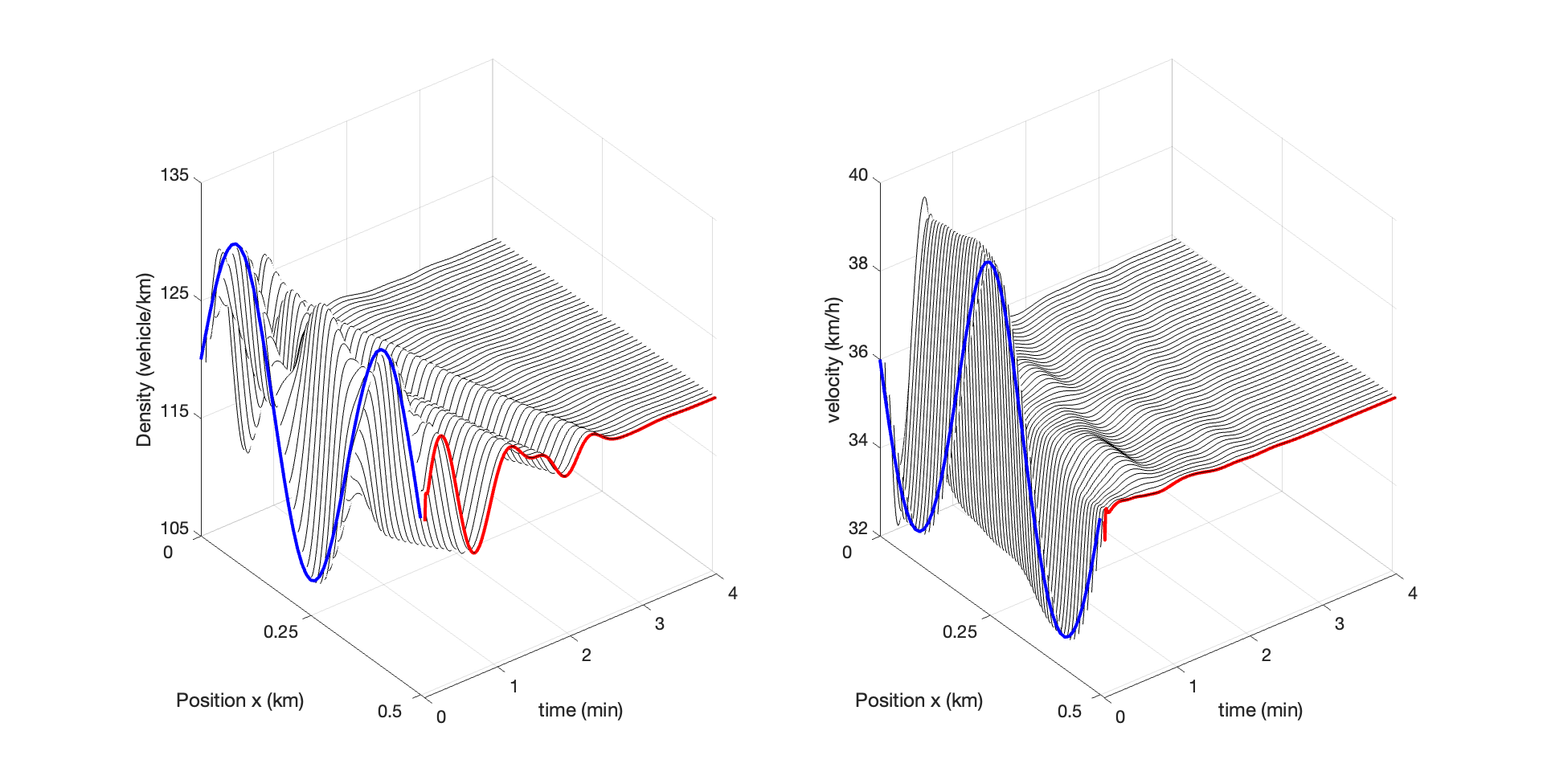

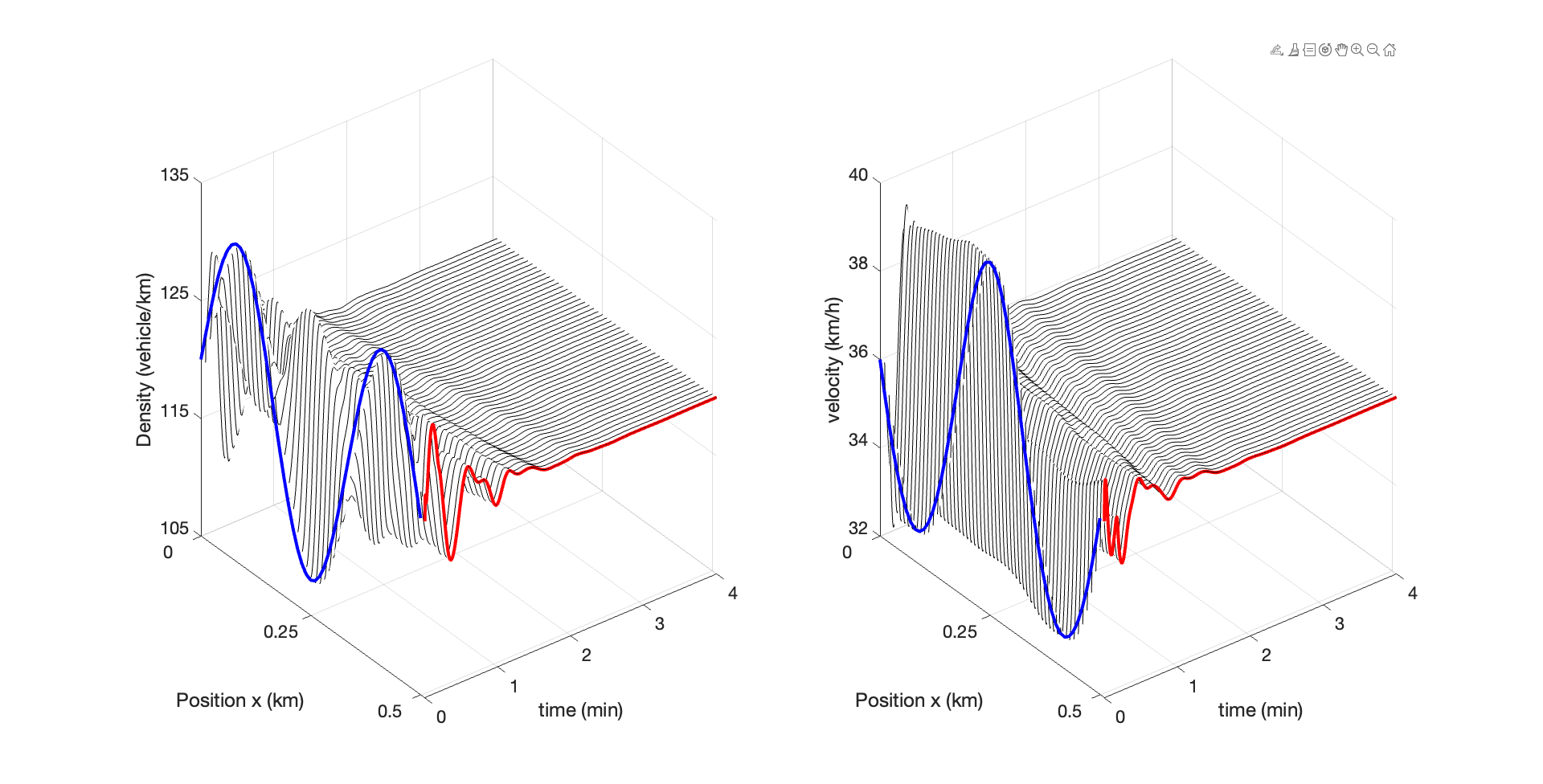

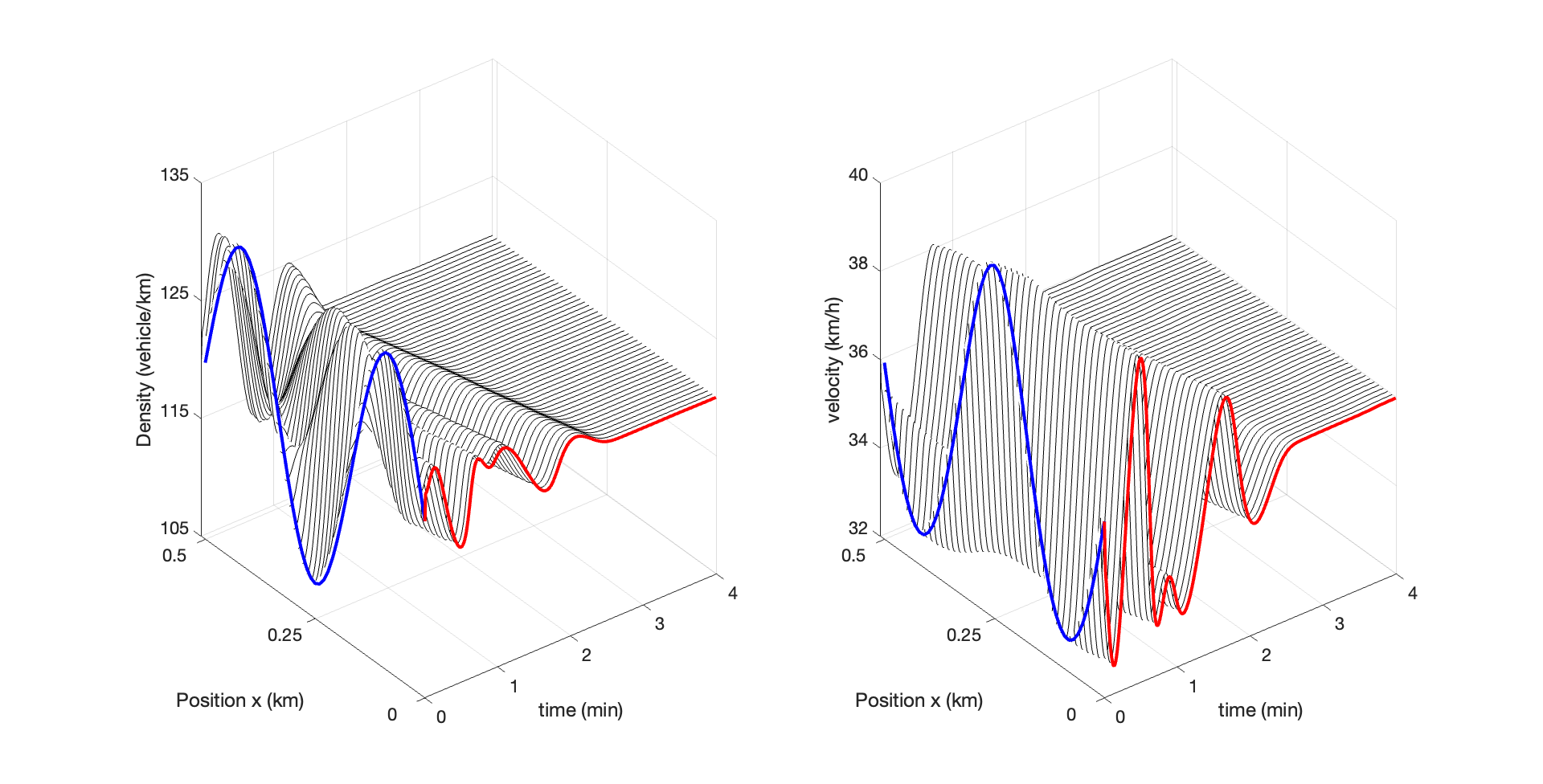

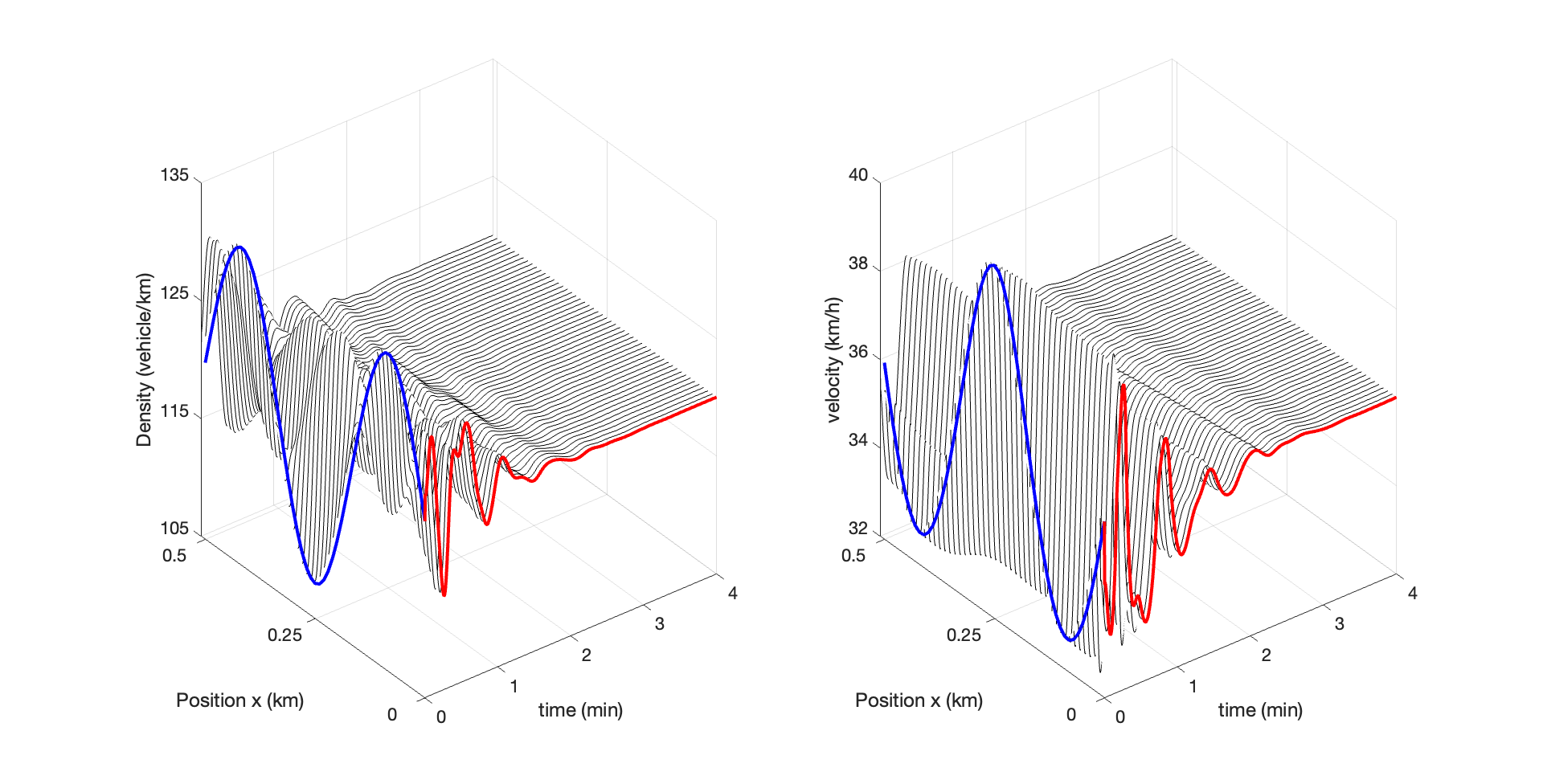

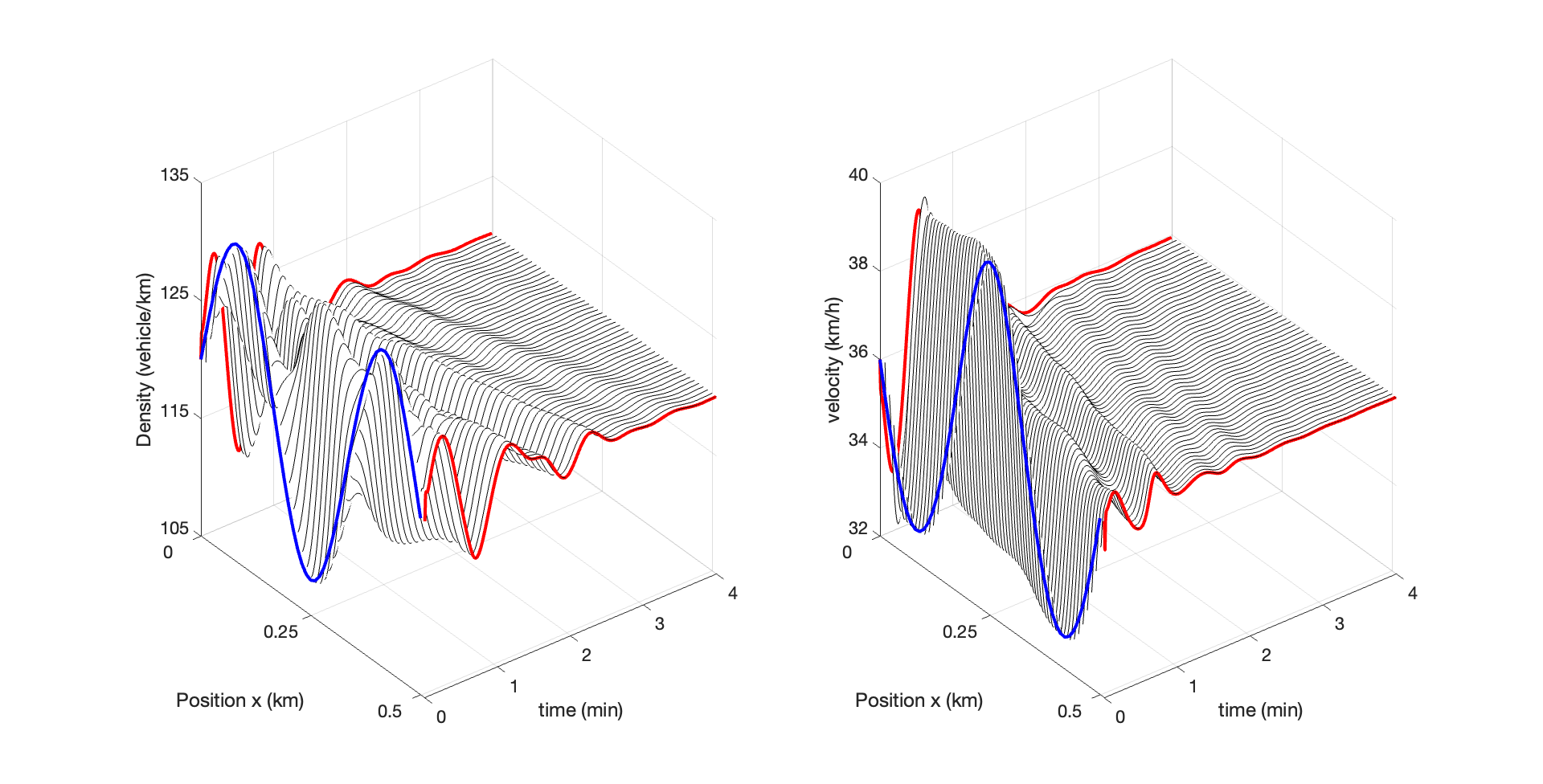

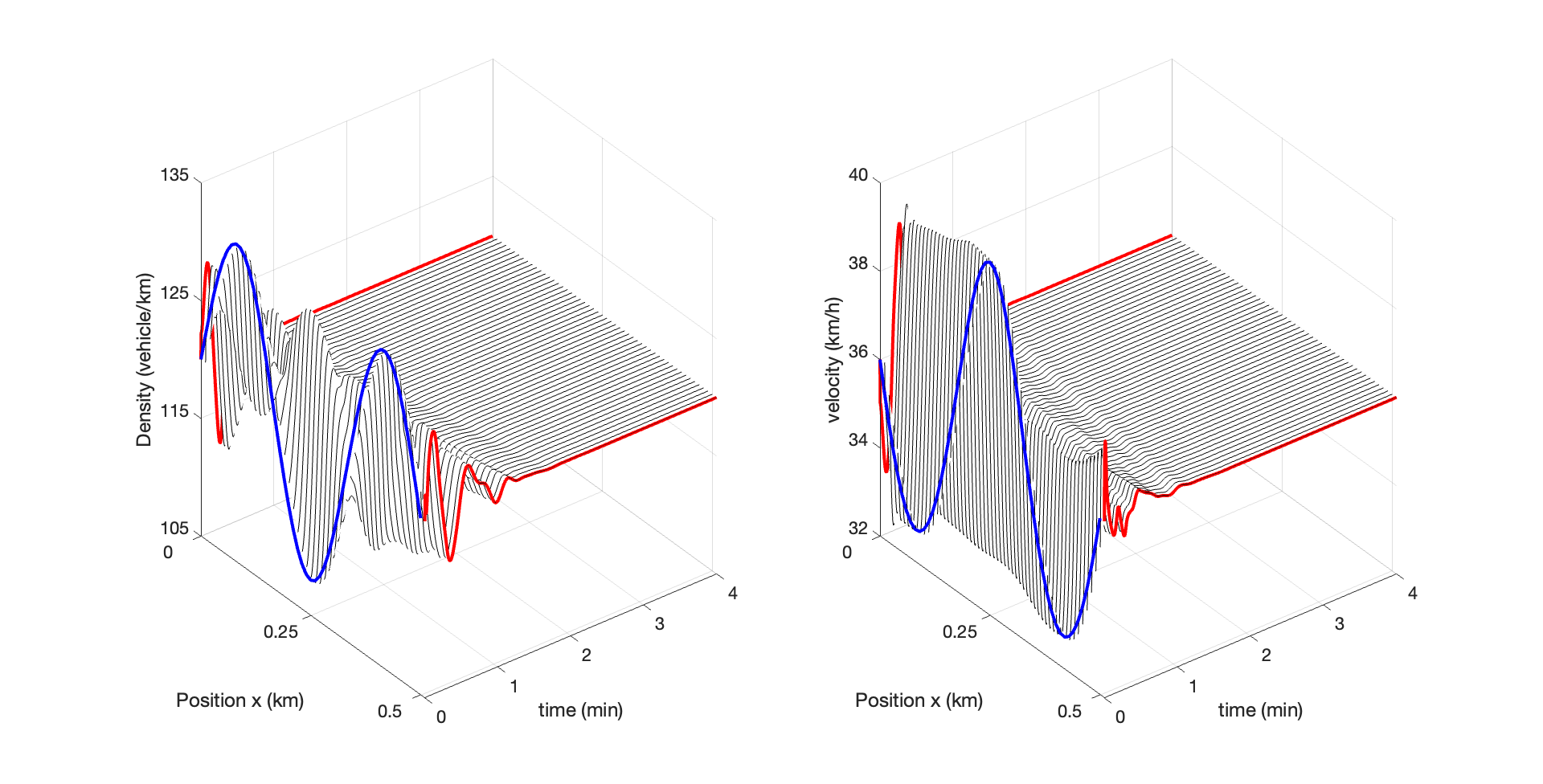

Figures and results are saved in the "save_plot_results" and "save_mat" folders of the repository. The figures in the manuscript are generated by running "Matlab_states_plot.m" in the "save_mat" folder.

Saehong Park: sspark@berkeley.edu

Huan Yu: huy015@ucsd.edu