This ROS package provides an immersive projection mapping system for interactively teaching assembly operations.

This project has the following associated paper:

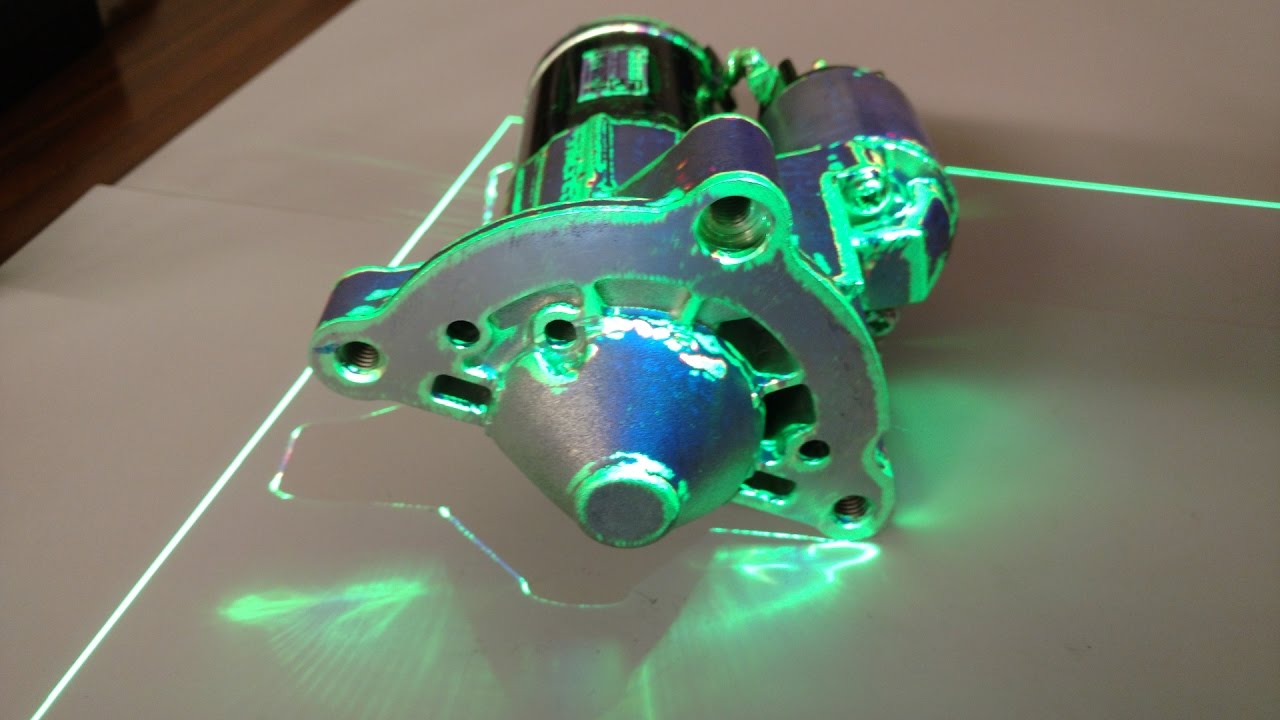

It currently ships with assembly instructions for a Mitsubishi M000T20873 starter motor, but it can be reconfigured for other tasks by adding the new content to the media folder and editing the yaml/assembly.yaml file.

Video 1: Assisted assembly of a starter motor

Video 2: Immersive natural interaction for assisted assembly operations

Video 3: Object pose estimation for assisted assembly operations

Video 4: Projection mapping for assisted assembly operations

Video 5: Disassembly of a starter motor

Quick overview of the main installation steps:

- Install ROS

- Upgrade Gazebo to at least version 9.12, which includes this pull request

- Install the catkin-tools build system

- Create a catkin tools workspace using this script for building all the libraries and executables

- Compile my fork of gazebo_ros and gpm:

  - gazebo_ros_pkgs

  - gazebo_projection_mapping

- Compile using the catkin build command

- Install or compile the 3D perception system:

- Compile the teaching system:

Before compiling the packages, check that all the required dependencies are installed (rosdep speeds up this task):

    cd ~/catkin_ws
    rosdep check --from-paths src --ignore-src

This package was tested with an Asus Xtion Pro Live for object recognition and a Kinect 2 for bare-hand human-machine interaction.

You will need to install the hardware drivers and calibrate them.

Sensor drivers:

Sensor calibration:

You will also need to calibrate the projector (for example, using this tool) and update the intrinsic parameters of the rendering camera in worlds/assembly.world.
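As a rough illustration of how calibrated intrinsics relate to a rendering camera, the sketch below converts pinhole focal lengths (in pixels) into fields of view. It assumes the principal point is at the image center, which is often not true for projectors with lens shift, and the numeric values are hypothetical. Whether worlds/assembly.world expects a field of view or the raw focal lengths is not stated here, so check the file before copying any values.

```python
import math


def fov_from_intrinsics(fx, fy, width, height):
    """Return (horizontal_fov, vertical_fov) in radians for a pinhole model.

    Assumes the principal point lies at the image center.
    """
    hfov = 2.0 * math.atan(width / (2.0 * fx))
    vfov = 2.0 * math.atan(height / (2.0 * fy))
    return hfov, vfov


# Hypothetical calibration result for a 1920x1080 projector
hfov, vfov = fov_from_intrinsics(fx=960.0, fy=960.0, width=1920, height=1080)
print("horizontal fov [rad]:", hfov)
print("vertical fov [rad]:", vfov)
```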

Finally, you will need to compute the extrinsics (position and rotation relative to the chessboard origin) of the sensors and the projector (using the charuco_detector) and update launch/assembly_tfs.launch accordingly.
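If the detector reports the board pose in the sensor frame (an assumption to verify against the charuco_detector output), the sensor pose relative to the chessboard origin is the inverse of that rigid transform. A minimal pure-Python sketch of the inversion follows; the function name and the sample pose are hypothetical.

```python
def invert_pose(rotation, translation):
    """Invert a rigid transform given as a 3x3 rotation (nested lists) and a 3-vector.

    For x_sensor = R * x_board + t, the inverse is x_board = R^T * x_sensor - R^T * t.
    """
    # The inverse of a rotation matrix is its transpose
    r_inv = [[rotation[c][r] for c in range(3)] for r in range(3)]
    # The inverse translation is -R^T * t
    t_inv = [-sum(r_inv[r][c] * translation[c] for c in range(3)) for r in range(3)]
    return r_inv, t_inv


# Hypothetical board pose in the sensor frame: 90 degree rotation about z
board_rotation = [[0.0, -1.0, 0.0],
                  [1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0]]
board_translation = [0.1, 0.2, 1.5]

sensor_rotation, sensor_translation = invert_pose(board_rotation, board_translation)
print(sensor_rotation)
print(sensor_translation)
```

The resulting position, with the rotation converted to the representation used by the launch file, is what would go into launch/assembly_tfs.launch.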

The teaching system can be started with the following launch file:

    roslaunch assembly_projection_mapping_teaching assembly.launch

Several modules of the system (such as sensors, rendering, and perception) can also be started individually. See the launch folder and tests.txt.

After the system is started, you can navigate between the textual and video instructions using the projected buttons, and you can also pause, play, and seek the video. In the last step, the outline of the 3D model is projected into the workspace for visual inspection and assembly validation. Check the videos above for a demonstration of the system functionality.

- command (std_msgs::String) | Topic for processing string commands (listed below) for changing the content being projected (# symbol corresponds to a number)

  - "next_step"

  - "previous_step"

  - "first_step"

  - "last_step"

  - "step: #"

  - "play_video"

  - "pause_video"

- change_step (std_msgs::Int32) | Topic for changing the projection content to the specified assembly step

- current_step (std_msgs::Int32) | Latched topic informing the assembly step currently being projected

- status (std_msgs::String) | Latched status topic informing the changes being performed to the projected content

  - "next_step"

  - "previous_step"

  - "first_step"

  - "last_step"

  - "move_to_step"

  - "step_number: #"

  - "running"

  - "paused"

  - "seek_video: #"
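To make the command strings listed above concrete, here is a minimal sketch of how a node might map them to a target step. It is not the package's implementation: the function name, the zero-based step numbering, and the clamping to the valid range are all assumptions made for illustration.

```python
def apply_command(command, current_step, number_of_steps):
    """Return the step selected by a command string.

    Assumes zero-based steps and clamps the result to [0, number_of_steps - 1].
    """
    last = number_of_steps - 1
    command = command.strip()
    if command == "next_step":
        target = current_step + 1
    elif command == "previous_step":
        target = current_step - 1
    elif command == "first_step":
        target = 0
    elif command == "last_step":
        target = last
    elif command.startswith("step:"):
        # "step: #" carries the requested step number after the colon
        target = int(command.split(":", 1)[1])
    else:
        # "play_video", "pause_video", and unknown commands keep the current step
        return current_step
    return max(0, min(last, target))


step = 0
for cmd in ["next_step", "step: 3", "previous_step", "last_step", "pause_video"]:
    step = apply_command(cmd, step, number_of_steps=5)
    print(cmd, "->", step)
```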