This project trains a Convolutional Neural Network to drive a car in a simulator by replicating the driving behaviour of a human player.

During training, the neural net is fed three image streams from cameras fixed on the car, along with the current steering angle.
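As a rough illustration of how three camera streams and one steering angle can be combined into training samples, here is a minimal sketch. It assumes a CSV log with columns named `center`, `left`, `right` and `steering`, and it applies a fixed steering offset to the side cameras. Both the `build_samples` helper and the `SIDE_CAMERA_CORRECTION` value are hypothetical and are not taken from this repository's code.

```python
import csv
import io

# Hypothetical offset added to the steering angle for the side cameras.
# The value 0.2 is an assumption for illustration, not the project's setting.
SIDE_CAMERA_CORRECTION = 0.2


def build_samples(log_file, correction=SIDE_CAMERA_CORRECTION):
    """Turn each log row into three (image_path, steering_angle) pairs."""
    samples = []
    for row in csv.DictReader(log_file, skipinitialspace=True):
        angle = float(row["steering"])
        # The center camera uses the recorded angle unchanged.
        samples.append((row["center"].strip(), angle))
        # The left camera sees the road as if the car were too far left,
        # so its label is nudged toward the right (and vice versa).
        samples.append((row["left"].strip(), angle + correction))
        samples.append((row["right"].strip(), angle - correction))
    return samples


# Tiny in-memory log standing in for a real driving log (assumed layout).
example_log = io.StringIO(
    "center,left,right,steering\n"
    "IMG/center_1.jpg, IMG/left_1.jpg, IMG/right_1.jpg, 0.1\n"
)
samples = build_samples(example_log)
for path, angle in samples:
    print(path, round(angle, 3))
```

Treating the side cameras as extra, slightly corrected samples is one common way to teach a model to recover toward the center of the lane; whether this project does exactly that is described in its notebook rather than here.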

After training, the model is able to send appropriate steering angles to the car so that it stays on the track.

The car simulator used to gather training data is made by Udacity for their Self-Driving Car Nanodegree program. Download it here:

To run the neural net, use Docker:

docker run -p 4567:4567 -it --rm -v `pwd`:/src madhorse/behavioral-cloning python3 drive.py model.h5

Then open your simulator and enter Autonomous Mode. This allows the neural net to receive images and send steering angles.

To train the model, use nvidia-docker so that training runs on the GPU. Use the following commands:

git clone https://github.com/Charles-Catta/Behavioral-Cloning.git

cd Behavioral-Cloning

wget https://d17h27t6h515a5.cloudfront.net/topher/2016/December/584f6edd_data/data.zip

unzip data.zip

rm data.zip

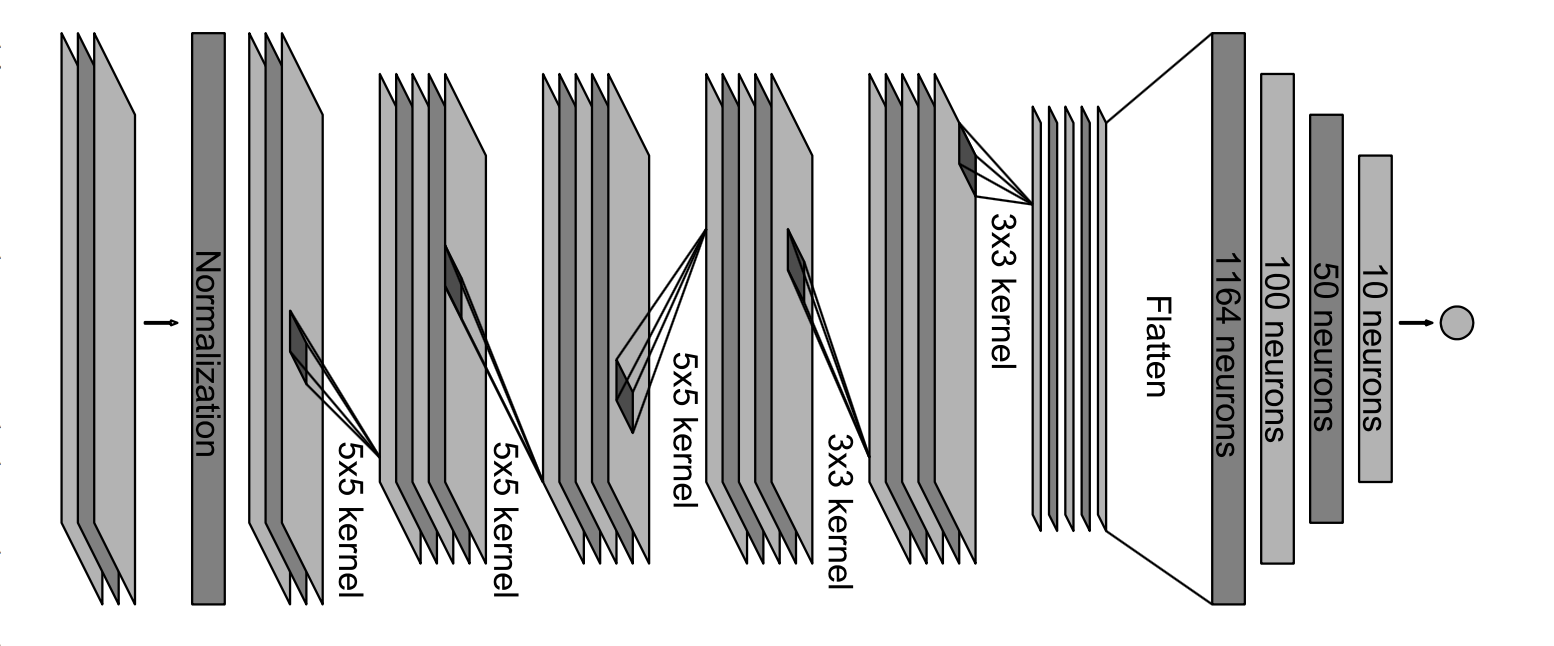

nvidia-docker run -it --rm -v `pwd`:/src madhorse/behavioral-cloning python3 model.py

The model architecture for this project is based on Nvidia's paper "End to End Learning for Self-Driving Cars".
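To give a feel for the network described in the Nvidia paper, the sketch below traces how the feature map shrinks through its five unpadded convolution layers, starting from the paper's 66x200 input. The layer sizes come from the paper. Whether `model.py` in this repository uses exactly these layers is an assumption, and the `conv_output` and `trace_shapes` helpers are hypothetical names introduced here.

```python
def conv_output(size, kernel, stride):
    """Output length of an unpadded ('valid') convolution along one axis."""
    return (size - kernel) // stride + 1


# (filters, kernel size, stride) for each convolution layer in the paper.
CONV_LAYERS = [(24, 5, 2), (36, 5, 2), (48, 5, 2), (64, 3, 1), (64, 3, 1)]
# Fully connected layers that follow; the last one outputs the steering value.
DENSE_LAYERS = [100, 50, 10, 1]


def trace_shapes(height=66, width=200):
    """Return the (height, width, channels) shape after each conv layer."""
    shapes = []
    for filters, kernel, stride in CONV_LAYERS:
        height = conv_output(height, kernel, stride)
        width = conv_output(width, kernel, stride)
        shapes.append((height, width, filters))
    return shapes


shapes = trace_shapes()
last_h, last_w, last_c = shapes[-1]
flattened = last_h * last_w * last_c  # size of the vector fed to the dense layers

for shape in shapes:
    print(shape)
print("flattened:", flattened, "-> dense:", DENSE_LAYERS)
```

The single output unit is what makes this a regression network: it predicts one continuous steering value per image rather than a class.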

All of the data preprocessing steps are outlined in the Jupyter notebook.

Read the writeup