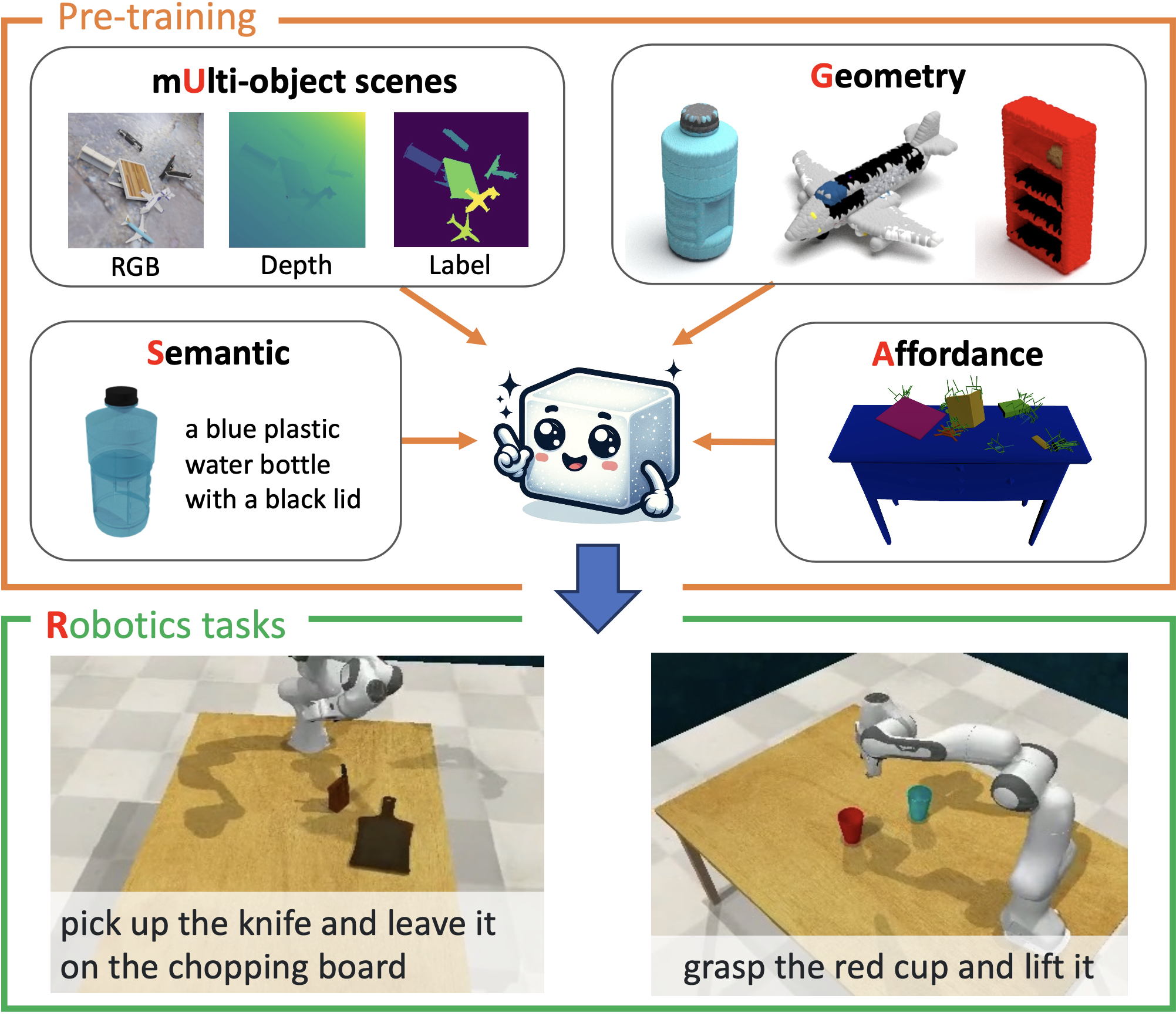

This repository is the official implementation of SUGAR: Pre-training 3D Visual Representations for Robotics (CVPR 2024).

See INSTALL.md for detailed installation instructions.

See DATASET.md for detailed instructions on preparing the datasets.

The pretrained checkpoints are available here.

- Pre-training on single-object datasets:

sbatch scripts/slurm/pretrain_shapenet_singleobj.slurm

sbatch scripts/slurm/pretrain_ensemble_singleobj.slurm

- Pre-training on multi-object datasets:

sbatch scripts/slurm/pretrain_shapenet_multiobj.slurm

sbatch scripts/slurm/pretrain_ensemble_multiobj.slurm

Evaluate zero-shot classification on the ModelNet, ScanObjectNN and Objaverse-LVIS datasets with the pretrained checkpoints.

sbatch scripts/slurm/downstream_cls_zeroshot.slurm

Train and evaluate on the OCID-Ref and RoboRefIt datasets. The trained models can be downloaded here.

sbatch scripts/slurm/downstream_ocidref.slurm

sbatch scripts/slurm/downstream_roborefit.slurm

Train a multi-task model on 10 RLBench tasks and evaluate it on the validation and test splits. The trained model can be downloaded here.
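If you train the RLBench model yourself, the two evaluation jobs can only start after training has finished. Instead of waiting and then submitting the three `sbatch` commands below one at a time, you can submit them together and let Slurm hold the evaluations until training succeeds. The function below is a minimal sketch of that approach and is not part of the repository. `submit_rlbench_pipeline` is a hypothetical helper name. The sketch assumes that it is run from the repository root and that both evaluation scripts already read the checkpoint written by `rlbench_train_multitask_10tasks.slurm`, so check the paths inside the `.slurm` files first. It uses only the standard `sbatch` options `--parsable` and `--dependency=afterok:<jobid>`.

```shell
# Hypothetical helper (not part of the SUGAR repository): submit RLBench
# training plus both evaluation splits as one Slurm dependency chain.
# Assumptions: run from the repository root, and the eval scripts read the
# checkpoint that the training script writes.
submit_rlbench_pipeline() {
  local train_id val_id tst_id

  # --parsable makes sbatch print only "jobid" (or "jobid;cluster").
  train_id=$(sbatch --parsable scripts/slurm/rlbench_train_multitask_10tasks.slurm) || return 1
  train_id=${train_id%%;*}  # strip the optional ";cluster" suffix

  # afterok: each evaluation starts only if training exits with code 0.
  val_id=$(sbatch --parsable --dependency="afterok:${train_id}" \
    scripts/slurm/rlbench_eval_val_split.slurm) || return 1
  tst_id=$(sbatch --parsable --dependency="afterok:${train_id}" \
    scripts/slurm/rlbench_eval_tst_split.slurm) || return 1

  echo "train=${train_id} val=${val_id%%;*} tst=${tst_id%%;*}"
}
```

Calling `submit_rlbench_pipeline` prints the three job IDs. While training is running, `squeue -u "$USER"` lists both evaluation jobs as pending with the reason `Dependency`. `afterok` is used rather than `afterany` so that a failed training run does not start evaluations on a missing checkpoint. In that case the evaluation jobs typically stay pending with the reason `DependencyNeverSatisfied` and can be removed with `scancel`.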

sbatch scripts/slurm/rlbench_train_multitask_10tasks.slurm

sbatch scripts/slurm/rlbench_eval_val_split.slurm

sbatch scripts/slurm/rlbench_eval_tst_split.slurm

If you find this work useful, please consider citing:

@InProceedings{Chen_2024_SUGAR,
    author    = {Chen, Shizhe and Garcia, Ricardo and Laptev, Ivan and Schmid, Cordelia},
    title     = {SUGAR: Pre-training 3D Visual Representations for Robotics},
    booktitle = {CVPR},
    year      = {2024}
}