This is the official repository of the IEEE Robotics and Automation Letters (RA-L) 2022 paper:

What's in the Black Box? The False Negative Mechanisms Inside Object Detectors

Dimity Miller, Peyman Moghadam, Mark Cox, Matt Wildie, Raja Jurdak

If you use this repository, please cite:

@article{miller2022s,
  title={What's in the Black Box? The False Negative Mechanisms Inside Object Detectors},
  author={Miller, Dimity and Moghadam, Peyman and Cox, Mark and Wildie, Matt and Jurdak, Raja},
  journal={IEEE Robotics and Automation Letters},
  year={2022},
  volume={7},
  number={3},
  pages={8510-8517},
  doi={10.1109/LRA.2022.3187831}
}

This code was developed with Python 3.8 on Ubuntu 20.04.

Our paper is implemented for Faster R-CNN and RetinaNet object detectors from the detectron2 repository.

Clone and Install Detectron2 Repository

- Clone detectron2 inside the fn_mechanisms folder.

cd fn_mechanisms

git clone https://github.com/facebookresearch/detectron2.git

- Follow the detectron2 installation instructions. We use PyTorch 1.12 with CUDA 11.6 and build detectron2 from source. Other PyTorch and CUDA versions should also work, provided they meet detectron2's listed requirements.

- The following command should run without errors. If it fails, the problem lies with your detectron2 installation; debug it or raise an issue on the detectron2 repository.

cd detectron2/demo

python demo.py --config-file ../configs/COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml --input ../../images/test_im.jpg --opts MODEL.WEIGHTS https://dl.fbaipublicfiles.com/detectron2/COCO-Detection/faster_rcnn_R_50_FPN_3x/137849458/model_final_280758.pkl

Quick Setup:

COCO data can be downloaded from the official COCO website (cocodataset.org). From the fn_mechanisms folder, the following commands quickly download the COCO val2017 images and annotations used for evaluation.

mkdir data

cd data

mkdir coco

cd coco

wget http://images.cocodataset.org/zips/val2017.zip

wget http://images.cocodataset.org/annotations/annotations_trainval2017.zip

unzip -q val2017.zip

unzip -q annotations_trainval2017.zip

Other Datasets:

The scripts expect two inputs: a folder containing only the images to be tested (no other file types), and an annotation file in the COCO Object Detection format. See the COCO data format documentation for details on how to format the annotation file. The annotation file does not need segmentations or any segmentation-related information, but all other fields are required.
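As a rough illustration, a minimal annotation file for a single image might look like the Python dictionary below. The image name, box values and the `missing_fields` helper are hypothetical, and the required-key sets are an assumption based on the standard COCO detection fields, not on what `identify_fn.py` actually reads.

```python
import json

# Hypothetical minimal COCO-style detection annotations for one image.
# Segmentation fields are omitted, as the README says they are not needed.
annotations = {
    "images": [
        {"id": 1, "file_name": "test_im.jpg", "width": 640, "height": 480},
    ],
    "annotations": [
        {
            "id": 1,
            "image_id": 1,
            "category_id": 62,                     # COCO category id for "chair"
            "bbox": [100.0, 120.0, 80.0, 150.0],   # [x, y, width, height] in pixels
            "area": 80.0 * 150.0,
            "iscrowd": 0,
        },
    ],
    "categories": [
        {"id": 62, "name": "chair", "supercategory": "furniture"},
    ],
}

# Assumed required keys per section (standard COCO detection fields).
REQUIRED = {
    "images": {"id", "file_name", "width", "height"},
    "annotations": {"id", "image_id", "category_id", "bbox", "area", "iscrowd"},
    "categories": {"id", "name"},
}


def missing_fields(coco):
    """Return (section, index, sorted_missing_keys) for every incomplete entry."""
    problems = []
    for section, required in REQUIRED.items():
        for i, entry in enumerate(coco.get(section, [])):
            missing = required - entry.keys()
            if missing:
                problems.append((section, i, sorted(missing)))
    return problems


# Serialise to the JSON text you would save as the annotation file.
annotation_json = json.dumps(annotations, indent=2)
```

An empty list from `missing_fields` only means the assumed keys are present; it does not validate box coordinates or category ids.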

We use pre-trained models (trained on COCO) from the Detectron2 Model Zoo.

python identify_fn.py --input image_folder --gt annotation_file --opts MODEL.WEIGHTS weights_file

where:

- image_folder is the path to the folder containing all images to be tested. No other files should be in this folder.
- annotation_file is the path to the JSON file containing annotations for all images, in the COCO Object Detection format (see above).
- weights_file is the path (or URL) to the weights file of the detector to be tested.
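Because the image folder must contain nothing but the test images, it can be worth checking it before a long run. The sketch below is a hypothetical pre-flight check, not part of this repository; the accepted image extensions and the helper names are assumptions.

```python
import json
import os

# Assumed image extensions; the README only requires "no other file types".
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def check_image_files(filenames, coco):
    """Split file names into (non_images, unannotated).

    non_images:  files whose extension is not in IMAGE_EXTENSIONS.
    unannotated: image files with no matching "file_name" entry in coco["images"].
    """
    annotated = {img["file_name"] for img in coco["images"]}
    non_images, unannotated = [], []
    for name in sorted(filenames):
        ext = os.path.splitext(name)[1].lower()
        if ext not in IMAGE_EXTENSIONS:
            non_images.append(name)
        elif name not in annotated:
            unannotated.append(name)
    return non_images, unannotated


def check_image_folder(folder, annotation_path):
    """Hypothetical helper: load the COCO json and check every entry in `folder`."""
    with open(annotation_path) as f:
        coco = json.load(f)
    return check_image_files(os.listdir(folder), coco)
```

For example, `check_image_folder("data/coco/val2017/", "data/coco/annotations/instances_val2017.json")` should return two empty lists for a correctly prepared folder.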

Optional arguments:

- --config-file config_path, where config_path is the path to the detectron2 detector config file. Default is "detectron2/configs/COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml".
- --confidence-threshold c, where c is a float giving the minimum class confidence score for a detection to be considered valid. Default is 0.3.
- --vis True to visualise the detections for each image.
- --visFN True to visualise each image's false negative objects, together with a visualisation of the false negative mechanism responsible.
- --opts can also be used to alter config file options. See the detectron2 documentation for more information.
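Conceptually, --confidence-threshold decides which detections count as valid. A minimal sketch of that filtering step follows; the `Detection` record and the inclusive (>=) comparison are assumptions for illustration, not taken from identify_fn.py.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
    label: str                              # predicted class name
    score: float                            # class confidence score


def filter_by_confidence(detections: List[Detection], c: float = 0.3) -> List[Detection]:
    """Keep detections scoring at least c (0.3 mirrors the documented default).

    The inclusive >= comparison is an assumption for illustration.
    """
    return [d for d in detections if d.score >= c]
```

Raising c removes more low-confidence detections, so more ground-truth objects may end up unmatched and counted as false negatives.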

Testing Faster R-CNN (R50 FPN 3x) on COCO

After following the instructions above for downloading the COCO val2017 data:

python identify_fn.py --input data/coco/val2017/ --gt data/coco/annotations/instances_val2017.json --opts MODEL.WEIGHTS https://dl.fbaipublicfiles.com/detectron2/COCO-Detection/faster_rcnn_R_50_FPN_3x/137849458/model_final_280758.pkl

Testing RetinaNet (R50 FPN 3x) on COCO

After following the instructions above for downloading the COCO val2017 data:

python identify_fn.py --input data/coco/val2017/ --gt data/coco/annotations/instances_val2017.json --config-file detectron2/configs/COCO-Detection/retinanet_R_50_FPN_3x.yaml --opts MODEL.WEIGHTS https://dl.fbaipublicfiles.com/detectron2/COCO-Detection/retinanet_R_50_FPN_3x/190397829/model_final_5bd44e.pkl

Results

Running the two commands above should generate the following results:

False negative mechanisms (percentage of each detector's false negatives):

| Detector | # of False Negatives | Proposal Process | Regressor | Interclass Classification | Background Classification | Classifier Calibration |
|---|---|---|---|---|---|---|
| Faster R-CNN (R50 FPN 3x) | 10464 | 20.01% | 2.48% | 12.19% | 58.22% | 7.10% |
| RetinaNet (R50 FPN 3x) | 11869 | 5.87% | 0.07% | 9.52% | 77.86% | 6.68% |
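The mechanism percentages in each row sum to 100%, so they can be converted back into approximate false negative counts. The short script below does that arithmetic using only the numbers in the table; the variable and function names are illustrative.

```python
# Numbers copied from the results table above.
MECHANISMS = ["Proposal Process", "Regressor", "Interclass Classification",
              "Background Classification", "Classifier Calibration"]
RESULTS = {
    "Faster R-CNN (R50 FPN 3x)": (10464, [20.01, 2.48, 12.19, 58.22, 7.10]),
    "RetinaNet (R50 FPN 3x)": (11869, [5.87, 0.07, 9.52, 77.86, 6.68]),
}


def approx_counts(total, percentages):
    """Convert percentage shares of `total` into approximate integer counts."""
    return [round(total * p / 100) for p in percentages]


for detector, (total, pcts) in RESULTS.items():
    # Each row's mechanism shares should add up to (almost exactly) 100%.
    print(f"{detector}: shares sum to {sum(pcts):.2f}%")
    for name, count in zip(MECHANISMS, approx_counts(total, pcts)):
        print(f"  {name}: ~{count} false negatives")
```

For example, background classification accounts for roughly 6092 of Faster R-CNN's 10464 false negatives. Because of rounding, a row's counts can differ from its total by one (Faster R-CNN's sum to 10465).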

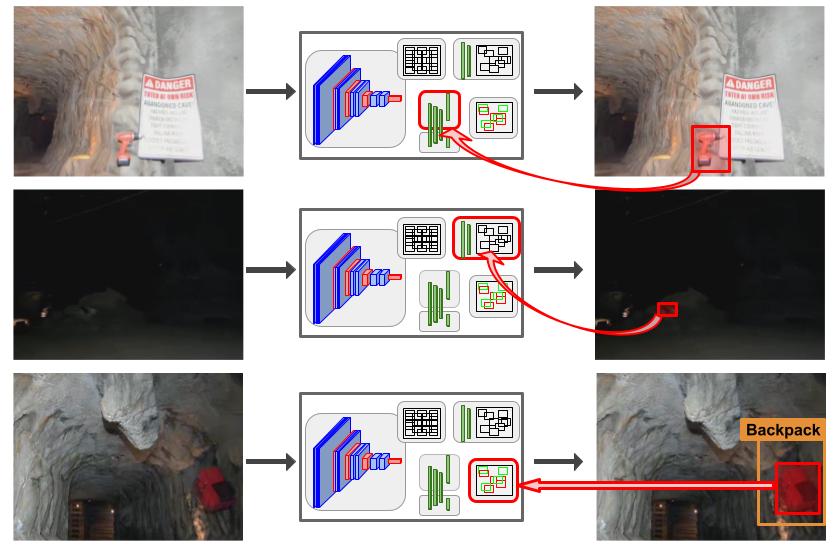

When running the identify_fn.py script, you can set --visFN True to visualise false negative mechanisms. This section explains what is being visualised, with some examples. These examples are from the COCO dataset when testing with the Detectron2 COCO-trained Faster R-CNN (R50 FPN 3x).

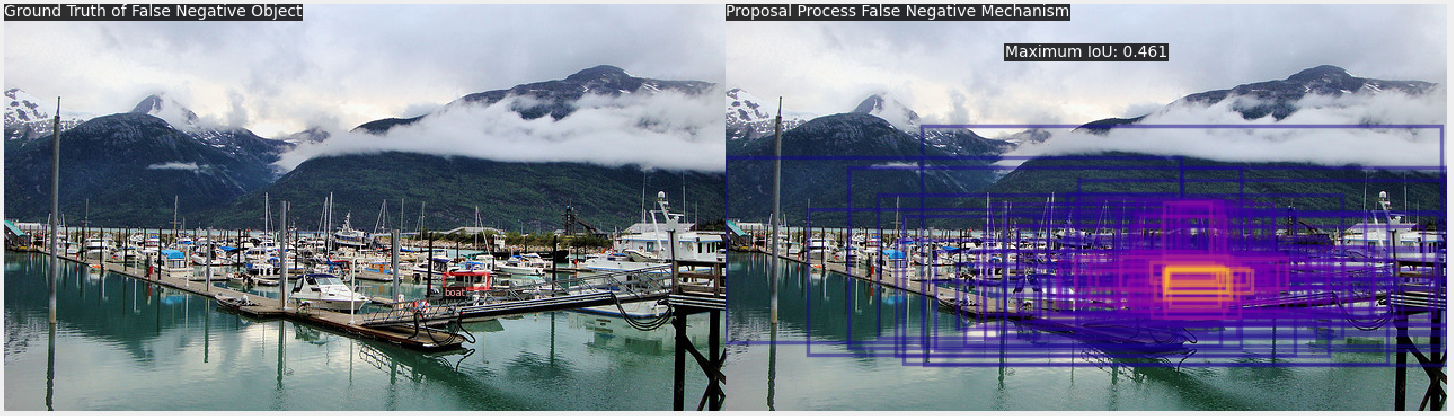

Proposal Process Mechanism

On the left, a red box and label show the false negative object (e.g. boat). On the right, there is a visualisation of the detector proposals, where warmer-toned boxes show a greater IoU with the FN object and cooler-toned boxes show a lower IoU with the FN object. The maximum IoU of any proposal with the FN object is also printed on the image (e.g. Maximum IoU: 0.461). To de-clutter the image, we only visualise the proposals that have an IoU greater than 0 with the FN object.
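The quantities in this visualisation are plain IoU computations between the FN box and each proposal. A minimal sketch, assuming boxes in (x1, y1, x2, y2) format; `proposals_to_draw` is a hypothetical helper, and drawing the highest-IoU boxes last is an assumed choice, not necessarily what the repository does. The IoU threshold at which the proposal process is held responsible is not stated here, so it is left out.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def proposals_to_draw(fn_box, proposals):
    """Return (max_iou, overlapping) for a false negative box.

    overlapping holds (iou, proposal) pairs with IoU > 0, sorted by ascending IoU
    so the warmest (highest-IoU) boxes would be drawn last, on top.
    """
    scored = [(iou(fn_box, p), p) for p in proposals]
    max_iou = max((s for s, _ in scored), default=0.0)
    overlapping = sorted((pair for pair in scored if pair[0] > 0), key=lambda pair: pair[0])
    return max_iou, overlapping
```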

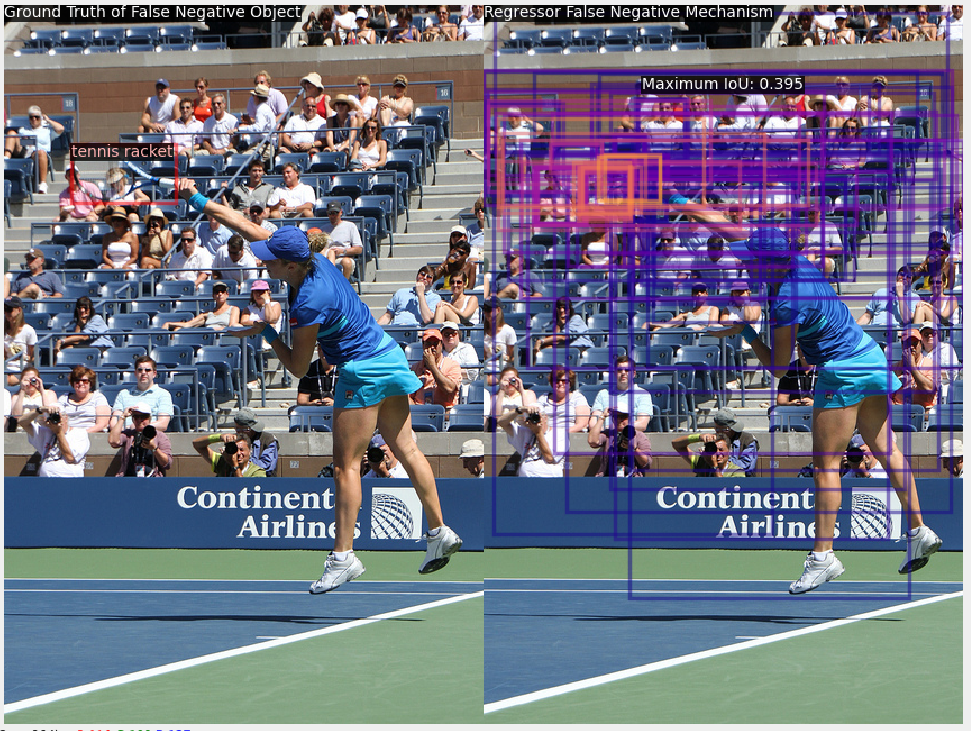

Regressor Mechanism

On the left, a red box and label show the false negative object (e.g. tennis racket). On the right, there is a visualisation of the detector's regressed bounding boxes, where warmer-toned boxes show a greater IoU with the FN object and cooler-toned boxes show a lower IoU with the FN object. The maximum IoU of any regressed box with the FN object is also printed on the image (e.g. Maximum IoU: 0.395). To de-clutter the image, we only visualise the regressed boxes that have an IoU greater than 0 with the FN object.
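One way to picture a regressor failure is to compare the best IoU before and after box regression. The sketch below assumes a hypothetical 0.5 IoU threshold for "localised" and a helper named `regressor_summary`; it illustrates the idea only and is not the paper's exact definition.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def regressor_summary(fn_box, proposals, regressed, iou_thresh=0.5):
    """Compare localisation before and after box regression.

    Returns (best_proposal_iou, best_regressed_iou, degraded), where degraded is
    True when some proposal reached iou_thresh but no regressed box did.
    The 0.5 threshold is an illustrative assumption.
    """
    best_prop = max((iou(fn_box, p) for p in proposals), default=0.0)
    best_reg = max((iou(fn_box, r) for r in regressed), default=0.0)
    return best_prop, best_reg, best_prop >= iou_thresh and best_reg < iou_thresh
```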

Interclass Classification Mechanism

On the left, a red box and label show the false negative object (e.g. elephant). On the right, we draw the most confident interclass misclassification that had localised the FN object. In this case, we show a regressed object proposal that had localised the elephant with an IoU of 0.63, but had been misclassified as a surfboard with confidence 0.43.
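Selecting "the most confident interclass misclassification" can be sketched as a search over boxes that localise the FN object for the highest score given to a wrong, non-background class. The candidate structure, the "background" label and the 0.5 IoU threshold are assumptions for illustration.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def most_confident_interclass(fn_box, fn_label, candidates, iou_thresh=0.5):
    """Find the most confident wrong-class score among boxes that localise fn_box.

    candidates: list of (box, {class_name: score}) pairs (hypothetical format).
    Returns (score, label, iou, box), or None if no such box exists.
    """
    best = None
    for box, scores in candidates:
        overlap = iou(fn_box, box)
        if overlap < iou_thresh:
            continue  # box does not localise the FN object
        for label, score in scores.items():
            if label in (fn_label, "background"):
                continue  # only interclass (wrong object class) confusions count
            if best is None or score > best[0]:
                best = (score, label, overlap, box)
    return best
```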

Background Classification Mechanism

On the left, a red box and label show the false negative object (e.g. chair). On the right, we draw the regressed proposal that had best localised the object, but was misclassified as background. In this case, we show a regressed object proposal that had localised the chair with an IoU of 0.91, but had been misclassified as background with confidence 0.91. Note that RetinaNet has no explicit background class, so no background confidence score is shown for RetinaNet false negatives.
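Faster R-CNN's box classifier produces a softmax over the object classes plus a background class, which is where a background confidence comes from. The sketch below picks the best-localised box whose top class is background; the logit layout is an assumption, so the background index is passed in explicitly, and the helper names are hypothetical.

```python
import math


def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def best_background_box(fn_box, candidates, background_index):
    """Return (iou, background_prob, box) for the highest-IoU box classified as background.

    candidates: list of (box, logits), where logits include a background entry
    at background_index. Returns None if no box has background as its top class.
    """
    best = None
    for box, logits in candidates:
        probs = softmax(logits)
        if max(range(len(probs)), key=probs.__getitem__) != background_index:
            continue  # top class is an object class, not background
        overlap = iou(fn_box, box)
        if best is None or overlap > best[0]:
            best = (overlap, probs[background_index], box)
    return best
```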

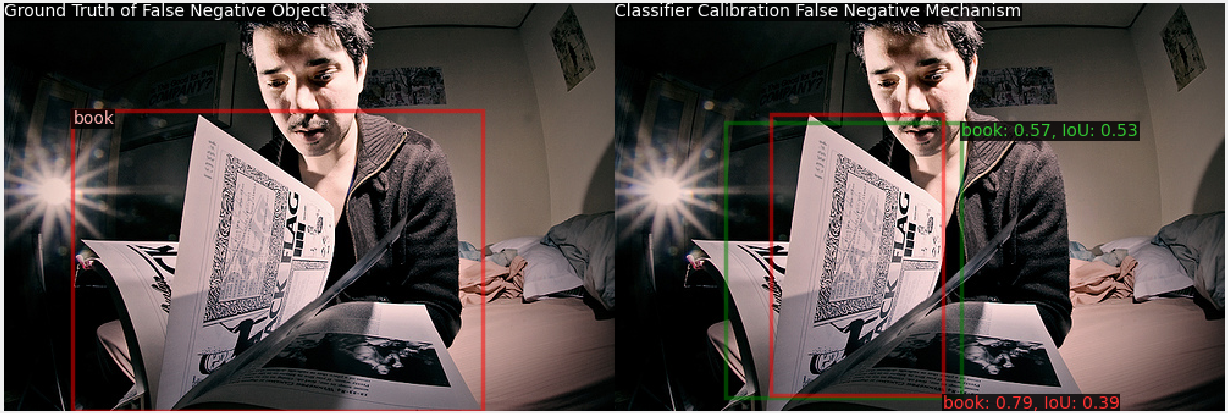

Classifier Calibration Mechanism

On the left, a red box and label show the false negative object (e.g. book). On the right, we draw the confident but poorly-localised detection output by the detector (in red), and the well-localised detection suppressed by NMS (in green). In this case, the red detection predicted by the detector has a high confidence of 0.79, but only has an IoU of 0.39 with the FN object. We also draw a green detection which was less confident (0.57), but had localised the object with an IoU of 0.53. Due to the lower confidence, the green detection was suppressed by the red detection during NMS.
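The calibration failure above arises from greedy non-maximum suppression: the higher-scoring box wins even when it localises the object worse. The sketch below reproduces that situation with illustrative boxes (not the ones in the figure), a single class, and assumed NMS and matching thresholds of 0.5; detectron2 itself applies NMS per class, and `calibration_failure` is a hypothetical helper.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy single-class NMS; returns (kept indices, {suppressed: suppressor})."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep, suppressed_by = [], {}
    for i in order:
        if i in suppressed_by:
            continue
        keep.append(i)
        for j in order:
            if j == i or j in suppressed_by or j in keep:
                continue
            if iou(boxes[i], boxes[j]) > iou_thresh:
                suppressed_by[j] = i
    return keep, suppressed_by


def calibration_failure(gt_box, boxes, scores, nms_thresh=0.5, match_thresh=0.5):
    """Return (suppressor, suppressed) if NMS removed the only well-localised box."""
    keep, suppressed_by = nms(boxes, scores, nms_thresh)
    if any(iou(gt_box, boxes[k]) >= match_thresh for k in keep):
        return None  # a surviving detection localises the object: not a false negative
    good = [(iou(gt_box, boxes[j]), j) for j in suppressed_by
            if iou(gt_box, boxes[j]) >= match_thresh]
    if not good:
        return None
    _, j = max(good)
    return suppressed_by[j], j


gt = (0, 0, 100, 100)
red = (50, 0, 150, 100)    # confident, IoU with gt = 1/3
green = (30, 0, 130, 100)  # less confident, IoU with gt ~ 0.54
print(calibration_failure(gt, [red, green], [0.79, 0.57]))
```

Here red and green overlap each other with an IoU of about 0.67, so the more confident red box suppresses green, and the only box that localises the object is lost.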

If you have any questions or comments, please contact Dimity Miller.

This code builds upon the detectron2 repository. Please also acknowledge detectron2 if you use their repository.