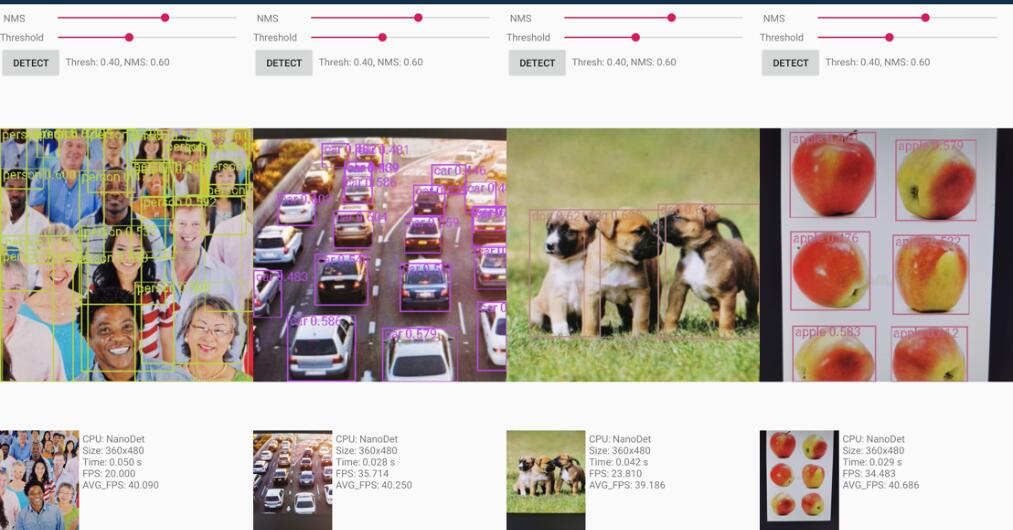

A super fast, high-accuracy, lightweight anchor-free object detection model that runs in real time on mobile devices.

- ⚡ Super lightweight: The model file is only 980KB (INT8) or 1.8MB (FP16).

- ⚡ Super fast: 97fps (10.23ms) on a mobile ARM CPU.

- 👍 High accuracy: Up to 34.3 mAP (val, 0.5:0.95) while still running in real time on CPU.

- 🤗 Training friendly: Much lower GPU memory cost than other models. Batch-size=80 is available on a GTX1060 6G.

- 😎 Easy to deploy: Supports various backends including ncnn, MNN and OpenVINO. An Android demo based on the ncnn inference framework is also provided.
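The fps figure quoted above is the reciprocal of the per-image latency. The sketch below is only a worked arithmetic example: the helper name `latency_ms_to_fps` is made up for illustration, and the latencies are copied from the ARM column of the benchmark table in this README.

```python
def latency_ms_to_fps(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# ARM latencies (ms) copied from the benchmark table in this README.
arm_latency_ms = {
    "NanoDet-m 320": 10.23,
    "NanoDet-Plus-m 320": 11.97,
    "NanoDet-Plus-m 416": 19.77,
    "NanoDet-Plus-m-1.5x 416": 25.49,
}

for name, ms in arm_latency_ms.items():
    print(f"{name}: {latency_ms_to_fps(ms):.1f} fps")
```

This assumes one image per forward pass and ignores pre- and post-processing time, so end-to-end throughput in an application will usually be lower.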

NanoDet is an FCOS-style one-stage anchor-free object detection model that uses Generalized Focal Loss as its classification and regression loss.

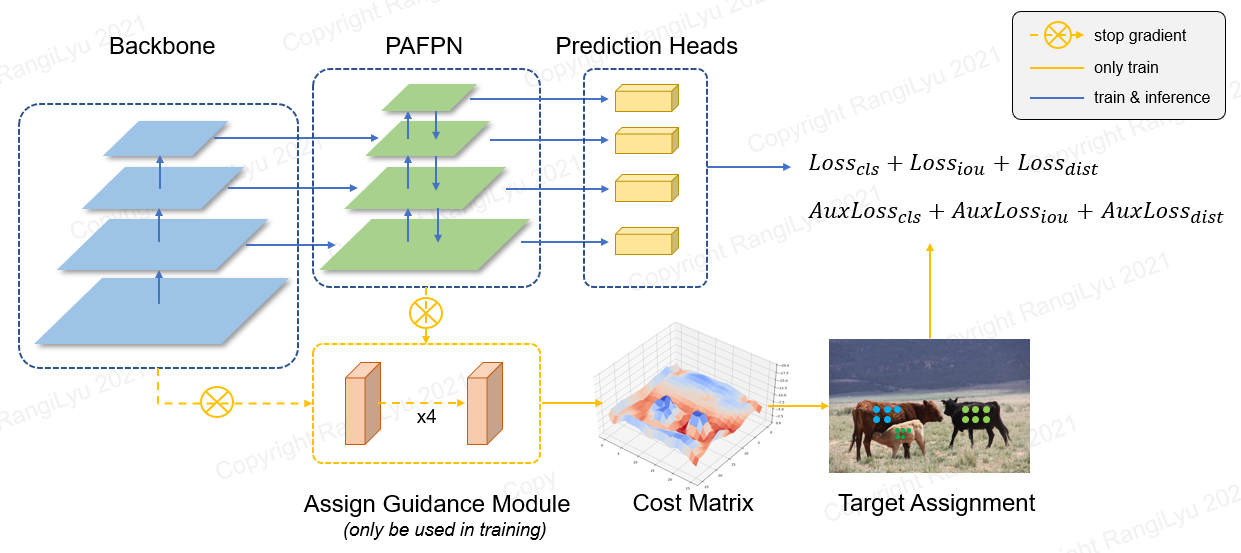

In NanoDet-Plus, we propose a novel label assignment strategy with a simple assign guidance module (AGM) and a dynamic soft label assigner (DSLA) to solve the optimal label assignment problem in lightweight model training. We also introduce a light feature pyramid called Ghost-PAN to enhance multi-layer feature fusion. These improvements boost detection accuracy over the previous NanoDet by 7 mAP on the COCO dataset.

QQ discussion group: 908606542 (answer to the join question: 炼丹)

| Model | Resolution | mAP val 0.5:0.95 | CPU Latency (i7-8700) | ARM Latency (4xA76) | FLOPS | Params | Model Size |
|---|---|---|---|---|---|---|---|
| NanoDet-m | 320*320 | 20.6 | 4.98ms | 10.23ms | 0.72G | 0.95M | 1.8MB(FP16) / 980KB(INT8) |
| NanoDet-Plus-m | 320*320 | 27.0 | 5.25ms | 11.97ms | 0.9G | 1.17M | 2.3MB(FP16) / 1.2MB(INT8) |
| NanoDet-Plus-m | 416*416 | 30.4 | 8.32ms | 19.77ms | 1.52G | 1.17M | 2.3MB(FP16) / 1.2MB(INT8) |
| NanoDet-Plus-m-1.5x | 320*320 | 29.9 | 7.21ms | 15.90ms | 1.75G | 2.44M | 4.7MB(FP16) / 2.3MB(INT8) |
| NanoDet-Plus-m-1.5x | 416*416 | 34.1 | 11.50ms | 25.49ms | 2.97G | 2.44M | 4.7MB(FP16) / 2.3MB(INT8) |
| YOLOv3-Tiny | 416*416 | 16.6 | - | 37.6ms | 5.62G | 8.86M | 33.7MB |
| YOLOv4-Tiny | 416*416 | 21.7 | - | 32.81ms | 6.96G | 6.06M | 23.0MB |
| YOLOX-Nano | 416*416 | 25.8 | - | 23.08ms | 1.08G | 0.91M | 1.8MB(FP16) |
| YOLOv5-n | 640*640 | 28.4 | - | 44.39ms | 4.5G | 1.9M | 3.8MB(FP16) |
| FBNetV5 | 320*640 | 30.4 | - | - | 1.8G | - | - |
| MobileDet | 320*320 | 25.6 | - | - | 0.9G | - | - |

Download pre-trained models and find more models in Model Zoo or in Release Files

Notes

- ARM performance is measured on a Kirin 980 (4xA76+4xA55) ARM CPU with ncnn. You can test latency on your phone with ncnn_android_benchmark.

- Intel CPU performance is measured on an Intel Core i7-8700 with OpenVINO.

- NanoDet mAP (0.5:0.95) is validated on the COCO val2017 dataset with no test-time augmentation.

- YOLOv3 and YOLOv4 mAP figures are taken from Scaled-YOLOv4: Scaling Cross Stage Partial Network.

- [2022.08.26] Upgrade to pytorch-lightning-1.7. The minimum PyTorch version is upgraded to 1.9. To use a previous version of PyTorch, please install NanoDet <= v1.0.0-alpha-1.

- [2021.12.25] NanoDet-Plus release! Adds AGM (Assign Guidance Module) & DSLA (Dynamic Soft Label Assigner) to improve accuracy by 7 mAP at only a small cost.

Find more update notes in Update notes.

Android demo project is in demo_android_ncnn folder. Please refer to Android demo guide.

Here is a better implementation 👉 ncnn-android-nanodet

C++ demo based on ncnn is in demo_ncnn folder. Please refer to Cpp demo guide.

Inference using Alibaba's MNN framework is in demo_mnn folder. Please refer to MNN demo guide.

Inference using OpenVINO is in demo_openvino folder. Please refer to OpenVINO demo guide.

https://nihui.github.io/ncnn-webassembly-nanodet/

First, install the requirements and set up NanoDet following the installation guide. Then download the COCO pre-trained weight from here.

The pre-trained weight was trained with the config config/nanodet-plus-m_416.yml.

- Inference images

python demo/demo.py image --config CONFIG_PATH --model MODEL_PATH --path IMAGE_PATH

- Inference video

python demo/demo.py video --config CONFIG_PATH --model MODEL_PATH --path VIDEO_PATH

- Inference webcam

python demo/demo.py webcam --config CONFIG_PATH --model MODEL_PATH --camid YOUR_CAMERA_ID

Besides, we provide a notebook here to demonstrate how to make it work with PyTorch.
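When the demo has to be launched from another script, the three command lines above can be assembled programmatically. This is a minimal sketch that only uses the flags shown in this README. The helper `build_demo_command` and the model file name are hypothetical, and the command is printed rather than executed.

```python
import shlex


def build_demo_command(mode, config_path, model_path, source):
    """Build the argument list for demo/demo.py using the flags listed above."""
    if mode not in ("image", "video", "webcam"):
        raise ValueError(f"unknown demo mode: {mode!r}")
    cmd = ["python", "demo/demo.py", mode,
           "--config", str(config_path),
           "--model", str(model_path)]
    # The webcam mode takes a camera id; image and video modes take a path.
    if mode == "webcam":
        cmd += ["--camid", str(source)]
    else:
        cmd += ["--path", str(source)]
    return cmd


# Hypothetical file names, for illustration only.
cmd = build_demo_command(
    "image",
    "config/nanodet-plus-m_416.yml",
    "nanodet-plus-m_416.pth",
    "test.jpg",
)
print(shlex.join(cmd))
# To actually run it from the repository root:
#   import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list avoids shell quoting problems when paths contain spaces.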

- Linux or MacOS

- CUDA >= 10.0

- Python >= 3.6

- PyTorch >= 1.9

- Experimental support for Windows (note: Windows does not support distributed training before PyTorch 1.7)
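The version requirements above can be checked before installing anything else. The following is a rough sketch, not part of NanoDet: `parse_version` and `meets_minimum` are hypothetical helpers that compare only the leading numeric components of a version string, and the PyTorch check is skipped if PyTorch is not installed.

```python
import sys


def parse_version(text):
    """Return the leading numeric components, e.g. '1.9.0+cu111' -> (1, 9, 0)."""
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare two version strings after padding them to the same length."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


print("Python >= 3.6:", sys.version_info >= (3, 6))
try:
    import torch
    print("PyTorch >= 1.9:", meets_minimum(torch.__version__, "1.9"))
    print("CUDA available:", torch.cuda.is_available())
except ImportError:
    print("PyTorch is not installed yet")
```

The CUDA toolkit version itself is not checked here; `torch.cuda.is_available()` only reports whether PyTorch can see a usable GPU.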

- Create a conda virtual environment and then activate it.

conda create -n nanodet python=3.8 -y

conda activate nanodet

- Install pytorch

conda install pytorch torchvision cudatoolkit=11.1 -c pytorch -c conda-forge

- Clone this repository

git clone https://github.com/RangiLyu/nanodet.git

cd nanodet

- Install requirements

pip install -r requirements.txt

- Setup NanoDet

python setup.py develop

NanoDet supports a variety of backbones. Go to the config folder to see the sample training config files.

| Model | Backbone | Resolution | COCO mAP | FLOPS | Params | Pre-train weight |
|---|---|---|---|---|---|---|
| NanoDet-m | ShuffleNetV2 1.0x | 320*320 | 20.6 | 0.72G | 0.95M | Download |
| NanoDet-Plus-m-320 (NEW) | ShuffleNetV2 1.0x | 320*320 | 27.0 | 0.9G | 1.17M | Weight / Checkpoint |
| NanoDet-Plus-m-416 (NEW) | ShuffleNetV2 1.0x | 416*416 | 30.4 | 1.52G | 1.17M | Weight / Checkpoint |
| NanoDet-Plus-m-1.5x-320 (NEW) | ShuffleNetV2 1.5x | 320*320 | 29.9 | 1.75G | 2.44M | Weight / Checkpoint |
| NanoDet-Plus-m-1.5x-416 (NEW) | ShuffleNetV2 1.5x | 416*416 | 34.1 | 2.97G | 2.44M | Weight / Checkpoint |

Notice: The difference between Weight and Checkpoint is that the weight file only provides the parameters needed at inference time, while the checkpoint also contains training-time parameters.
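To make the Weight/Checkpoint distinction concrete, the sketch below inspects two toy dictionaries shaped like saved files. It is an illustration under assumptions, not NanoDet's actual file layout: the key names (`state_dict`, `optimizer_states`, `epoch`, `global_step`) follow the usual PyTorch Lightning checkpoint layout, the layer names are made up, and `describe_saved_file` is a hypothetical helper.

```python
def describe_saved_file(saved: dict) -> dict:
    """Summarize a dict such as one returned by torch.load(path, map_location="cpu").

    Assumes model parameters live under "state_dict" and that any other
    top-level key holds training-time state (an assumption, see above).
    """
    if "state_dict" in saved:
        state_dict = saved["state_dict"]
        extras = sorted(key for key in saved if key != "state_dict")
    else:
        # A bare parameter mapping with no wrapper dict.
        state_dict = saved
        extras = []
    return {
        "num_parameter_tensors": len(state_dict),
        "training_time_keys": extras,
        "has_training_state": bool(extras),
    }


# Toy stand-ins with made-up layer names; real files hold tensors.
weight_only = {"state_dict": {"backbone.conv.weight": [0.0], "head.cls.weight": [0.0]}}
checkpoint = {
    "state_dict": {"backbone.conv.weight": [0.0], "head.cls.weight": [0.0]},
    "optimizer_states": [{}],
    "epoch": 12,
    "global_step": 3400,
}

print(describe_saved_file(weight_only))
print(describe_saved_file(checkpoint))
```

In practice, a weight file is enough for the demos and for export, while a checkpoint is the file to keep if training may need to be resumed.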

Legacy Model Zoo

| Model | Backbone | Resolution | COCO mAP | FLOPS | Params | Pre-train weight |
|---|---|---|---|---|---|---|
| NanoDet-m-416 | ShuffleNetV2 1.0x | 416*416 | 23.5 | 1.2G | 0.95M | Download |
| NanoDet-m-1.5x | ShuffleNetV2 1.5x | 320*320 | 23.5 | 1.44G | 2.08M | Download |
| NanoDet-m-1.5x-416 | ShuffleNetV2 1.5x | 416*416 | 26.8 | 2.42G | 2.08M | Download |
| NanoDet-m-0.5x | ShuffleNetV2 0.5x | 320*320 | 13.5 | 0.3G | 0.28M | Download |
| NanoDet-t | ShuffleNetV2 1.0x | 320*320 | 21.7 | 0.96G | 1.36M | Download |
| NanoDet-g | Custom CSP Net | 416*416 | 22.9 | 4.2G | 3.81M | Download |
| NanoDet-EfficientLite | EfficientNet-Lite0 | 320*320 | 24.7 | 1.72G | 3.11M | Download |
| NanoDet-EfficientLite | EfficientNet-Lite1 | 416*416 | 30.3 | 4.06G | 4.01M | Download |
| NanoDet-EfficientLite | EfficientNet-Lite2 | 512*512 | 32.6 | 7.12G | 4.71M | Download |
| NanoDet-RepVGG | RepVGG-A0 | 416*416 | 27.8 | 11.3G | 6.75M | Download |

- Prepare dataset

  If your dataset annotations are in Pascal VOC XML format, refer to config/nanodet_custom_xml_dataset.yml.

  Otherwise, convert your dataset annotations to MS COCO format (COCO annotation format details).

- Prepare config file

  Copy and modify an example yml config file in the config/ folder.

  Change save_dir to where you want to save the model.

  Change num_classes in model->arch->head.

  Change the image path and annotation path in both data->train and data->val.

  Set the GPU ids, number of workers and batch size in device to fit your device.

  Set total_epochs, lr and lr_schedule according to your dataset and batch size.

  If you want to modify the network, data augmentation or other things, please refer to Config File Detail.

- Start training

  NanoDet now uses PyTorch Lightning for training.

  For both single-GPU and multi-GPU training, run:

  python tools/train.py CONFIG_FILE_PATH

- Visualize Logs

  TensorBoard logs are saved in the save_dir that you set in the config file.

  To visualize the TensorBoard logs, run:

  cd <YOUR_SAVE_DIR>

  tensorboard --logdir ./
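The config edits listed under "Prepare config file" can be pictured as a handful of overrides on a nested mapping. The sketch below uses a plain Python dict instead of a real yml file. `save_dir`, `num_classes`, `total_epochs` and `lr` are named in the steps above, while `img_path`, `ann_path`, `gpu_ids`, `workers_per_gpu`, `batchsize_per_gpu`, the `schedule`/`optimizer` nesting and all values are assumptions for illustration. Check them against the example files in config/ before relying on them.

```python
import copy


def apply_overrides(config, overrides):
    """Return a deep copy of config with dotted-path overrides applied."""
    result = copy.deepcopy(config)
    for dotted_key, value in overrides.items():
        *parents, leaf = dotted_key.split(".")
        node = result
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value
    return result


# Skeleton of a training config; key names and values are placeholders.
base_config = {
    "save_dir": "workspace/example",
    "model": {"arch": {"head": {"num_classes": 80}}},
    "data": {
        "train": {"img_path": "coco/train2017", "ann_path": "coco/train.json"},
        "val": {"img_path": "coco/val2017", "ann_path": "coco/val.json"},
    },
    "device": {"gpu_ids": [0], "workers_per_gpu": 8, "batchsize_per_gpu": 64},
    "schedule": {"total_epochs": 300, "optimizer": {"lr": 0.001}},
}

# Hypothetical custom dataset with 3 classes on a single small GPU.
custom = apply_overrides(base_config, {
    "save_dir": "workspace/my_dataset",
    "model.arch.head.num_classes": 3,
    "data.train.img_path": "my_dataset/train/images",
    "data.train.ann_path": "my_dataset/train/annotations.json",
    "data.val.img_path": "my_dataset/val/images",
    "data.val.ann_path": "my_dataset/val/annotations.json",
    "device.batchsize_per_gpu": 32,
    "schedule.total_epochs": 100,
})
print(custom["model"]["arch"]["head"]["num_classes"], custom["save_dir"])
```

Working on a copy keeps the base config untouched, which makes it easy to derive several experiment configs from one template.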

NanoDet provides multi-backend C++ demos for ncnn, OpenVINO and MNN. There is also an Android demo based on the ncnn library.

To convert a NanoDet PyTorch model to ncnn, you can use this path: pytorch->onnx->ncnn

To export the ONNX model, run tools/export_onnx.py.

python tools/export_onnx.py --cfg_path ${CONFIG_PATH} --model_path ${PYTORCH_MODEL_PATH}

Please refer to demo_ncnn.

Please refer to demo_openvino.

Please refer to demo_mnn.

Please refer to android_demo.

If you find this project useful in your research, please consider citing it:

@misc{nanodet,
    title={NanoDet-Plus: Super fast and high accuracy lightweight anchor-free object detection model.},
    author={RangiLyu},
    howpublished = {\url{https://github.com/RangiLyu/nanodet}},
    year={2021}
}

https://github.com/Tencent/ncnn

https://github.com/open-mmlab/mmdetection

https://github.com/implus/GFocal