PyTorch implementation of Neural Bounding, using neural networks to represent bounding volumes and learning conservative space classification.

Neural Bounding

Stephanie Wenxin Liu¹, Michael Fischer², Paul D. Yoo¹, Tobias Ritschel²

¹Birkbeck, University of London, ²University College London

Published at SIGGRAPH 2024 (Conference Track)

[Paper] [Project Page]

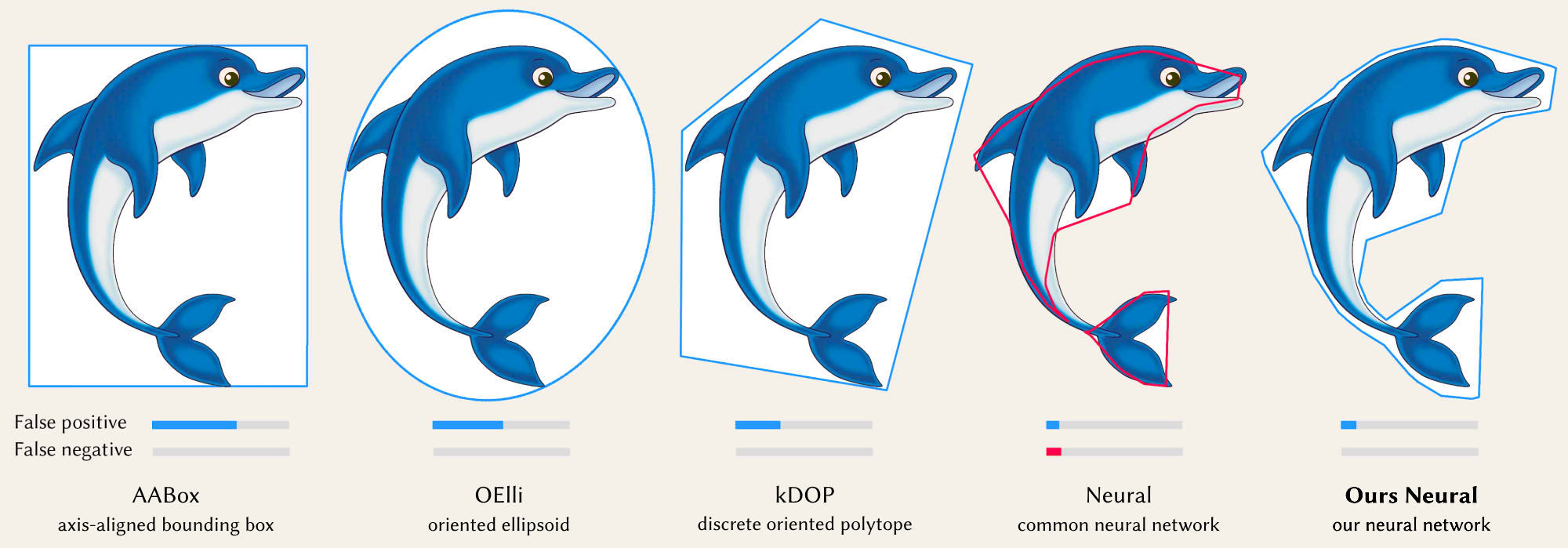

Bounding volumes are an established concept in computer graphics and vision tasks but have seen little change since their early inception. In this work, we study the use of neural networks as bounding volumes. Our key observation is that bounding, which so far has primarily been considered a problem of computational geometry, can be redefined as a problem of learning to classify space into free or occupied. This learning-based approach is particularly advantageous in high-dimensional spaces, such as animated scenes with complex queries, where neural networks are known to excel. However, unlocking neural bounding requires a twist: allowing -- but also limiting -- false positives, while ensuring that the number of false negatives is strictly zero. We enable such tight and conservative results using a dynamically-weighted asymmetric loss function. Our results show that our neural bounding produces up to an order of magnitude fewer false positives than traditional methods. In addition, we propose an extension of our bounding method using early exits that accelerates query speeds by 25%. We also demonstrate that our approach is applicable to non-deep learning models that train within seconds. Our project page is at: https://wenxin-liu.github.io/neural_bounding/.
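The conservative requirement described above (no false negatives, as few false positives as possible) can be illustrated with an asymmetrically weighted binary cross-entropy. This is a minimal sketch and not the loss used in the paper: the function names, the fixed `fn_weight`, and the NumPy formulation are assumptions made for illustration, whereas the paper describes a dynamically-weighted variant.

```python
import numpy as np


def asymmetric_bce(pred, target, fn_weight=100.0, fp_weight=1.0, eps=1e-7):
    """Weighted binary cross-entropy that penalizes false negatives more.

    pred      : predicted occupancy probabilities in (0, 1)
    target    : ground-truth indicator values (1 = occupied, 0 = free)
    fn_weight : hypothetical weight on the occupied-but-missed term
    fp_weight : hypothetical weight on the free-but-flagged term
    """
    pred = np.clip(np.asarray(pred, dtype=np.float64), eps, 1.0 - eps)
    target = np.asarray(target, dtype=np.float64)
    fn_term = -target * np.log(pred)                # grows when occupied space is missed
    fp_term = -(1.0 - target) * np.log(1.0 - pred)  # grows when free space is flagged
    return float(np.mean(fn_weight * fn_term + fp_weight * fp_term))


def count_errors(pred, target, threshold=0.5):
    """Return (false_negatives, false_positives) after thresholding."""
    occupied_pred = np.asarray(pred) >= threshold
    occupied_true = np.asarray(target).astype(bool)
    false_neg = int(np.sum(~occupied_pred & occupied_true))
    false_pos = int(np.sum(occupied_pred & ~occupied_true))
    return false_neg, false_pos


target = np.array([1, 1, 0, 0])
missed = np.array([0.1, 0.9, 0.1, 0.1])     # one occupied sample is missed
overcover = np.array([0.9, 0.9, 0.9, 0.1])  # one free sample is flagged

print(count_errors(missed, target))     # (1, 0): not conservative
print(count_errors(overcover, target))  # (0, 1): conservative
print(asymmetric_bce(missed, target) > asymmetric_bce(overcover, target))  # True
```

With these weights, missing occupied space costs far more than over-covering free space, which pushes a model toward conservative bounds. A fixed weight alone does not guarantee zero false negatives.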

To get started with running experiments and calculating results, make sure you have the following prerequisites:

- Anaconda: You will need Anaconda to create a virtual environment and manage the required dependencies. If you don't have Anaconda installed, you can download it from the official Anaconda website and follow the installation instructions for your platform.

Once you have the prerequisites in place, follow these steps to set up the environment and run the experiments:

- Clone this repository to your local machine:

      git clone https://github.com/wenxin-liu/neural_bounding.git

- Navigate to the project's root directory:

      cd neural_bounding

- Create a Conda environment to manage dependencies. You can use the provided environment.yml file:

      conda env create -f environment.yml

- Activate the Conda environment:

      conda activate neural_bounding

- Run the experiments and calculate the results:

      ./run.sh

  This produces all experiment results as absolute values, per task (indicator dimension and query), method, and object. It then calculates Table 1 of the paper from those results. All results are saved as CSV files in `<project_root>/results`.
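Because the results are written as CSV files, they can be inspected with a few lines of Python. The following is a minimal sketch that assumes only that the files end in `.csv` and sit directly in the results directory. The helper name `load_results` is hypothetical, and nothing is assumed about the columns the experiments write.

```python
import csv
from pathlib import Path


def load_results(results_dir):
    """Read every CSV file in results_dir into a dict of row lists.

    Keys are file names; values are lists of rows (each row a list of strings).
    Returns an empty dict if the directory does not exist yet.
    """
    root = Path(results_dir)
    if not root.is_dir():
        return {}
    tables = {}
    for csv_path in sorted(root.glob("*.csv")):
        with csv_path.open(newline="") as handle:
            tables[csv_path.name] = list(csv.reader(handle))
    return tables


if __name__ == "__main__":
    # Assumes the script is run from the project root after ./run.sh.
    for name, rows in load_results("results").items():
        print(f"{name}: {len(rows)} rows")
```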

- 2D Data: For 2D experiments (2D point, ray, plane, and box queries), we use PNG images of single natural objects in front of a transparent background. In these images, the alpha channel serves as a mask, defining the indicator function. We use a total of 9 such images.

- 3D Data: In 3D experiments (3D point, ray, plane, and box queries), we use 3D voxelizations in binvox format. We use 9 such voxelizations.

- 4D Data: For 4D experiments (4D point, ray, plane, and box queries), we investigate sets of animated objects. In these experiments, we randomly select shapes from our 3D data and create time-varying occupancy data by rotating them around their center. This results in a 4D tensor, where each element represents a 3D voxelization of the object at a specific point in time, saved in .npy format.
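The 4D construction described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the repository's data-generation code: the rotation axis (z), nearest-neighbor sampling, the number of frames, the time-first axis order, and the function names `rotate_occupancy_z` and `make_4d_occupancy` are all hypothetical choices.

```python
import numpy as np


def rotate_occupancy_z(voxels, angle):
    """Rotate a boolean voxel grid about its center around the z-axis.

    Uses inverse mapping with nearest-neighbor sampling; output voxels whose
    source falls outside the grid are treated as free space.
    """
    nx, ny, nz = voxels.shape
    cx, cy = (nx - 1) / 2.0, (ny - 1) / 2.0
    x, y, z = np.meshgrid(np.arange(nx), np.arange(ny), np.arange(nz), indexing="ij")
    # Inverse rotation: find the source voxel for every output voxel.
    cos_a, sin_a = np.cos(angle), np.sin(angle)
    xs = cos_a * (x - cx) + sin_a * (y - cy) + cx
    ys = -sin_a * (x - cx) + cos_a * (y - cy) + cy
    xi, yi = np.rint(xs).astype(int), np.rint(ys).astype(int)
    inside = (xi >= 0) & (xi < nx) & (yi >= 0) & (yi < ny)
    rotated = np.zeros(voxels.shape, dtype=bool)
    rotated[inside] = voxels[xi[inside], yi[inside], z[inside]]
    return rotated


def make_4d_occupancy(voxels, num_frames):
    """Stack rotated copies of a 3D grid into a (time, x, y, z) tensor."""
    angles = np.linspace(0.0, 2.0 * np.pi, num_frames, endpoint=False)
    return np.stack([rotate_occupancy_z(voxels, a) for a in angles])


grid = np.zeros((16, 16, 16), dtype=bool)
grid[4:12, 6:10, 5:11] = True  # toy box standing in for a voxelized shape
animated = make_4d_occupancy(grid, num_frames=8)
print(animated.shape)  # (8, 16, 16, 16)
```

An array built this way could then be written with `np.save` to obtain a .npy file.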

If you wish to apply this code to your own datasets, you can follow these steps:

- Ensure that your data is in a format compatible with the dimension you are working with (2D, 3D, or 4D).

- Place your custom data in the appropriate directories:
  - For 2D data, add your PNG images to the `data/2D` directory.
  - For 3D data, add your binvox voxelizations to the `data/3D` directory.
  - For 4D data, prepare your 4D tensor in .npy format and save it in the `data/4D` directory.

- Modify the `run.sh` script to include the file names of your custom data.
  - For example, if you have a 2D image named "bunny.png" and placed in the `data/2D` directory, modify the 2D section of `run.sh` to include `"bunny"` in the list of objects.

- Follow the installation instructions in the above section.
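Before editing `run.sh`, it can help to list the object names that are actually present in the data directories. The following is a minimal sketch: the helper `list_object_names` is hypothetical and not part of the repository, and the file extensions `.png`, `.binvox`, and `.npy` are assumed from the data description.

```python
from pathlib import Path

# Directory names as given in the text; extensions assumed from the data description.
DATA_LAYOUT = {"2D": ".png", "3D": ".binvox", "4D": ".npy"}


def list_object_names(data_root="data"):
    """Return {dimension: sorted object names} for files found under data_root.

    An object name is the file name without its extension, e.g. "bunny"
    for data/2D/bunny.png. Missing directories yield an empty list.
    """
    names = {}
    for dim, ext in DATA_LAYOUT.items():
        folder = Path(data_root) / dim
        if folder.is_dir():
            names[dim] = sorted(p.stem for p in folder.glob(f"*{ext}"))
        else:
            names[dim] = []
    return names


if __name__ == "__main__":
    # Assumes the script is run from the project root.
    for dim, objects in list_object_names().items():
        print(dim, objects)
```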

@inproceedings{liu2024neural,
  author = {Stephanie Wenxin Liu and Michael Fischer and Paul D. Yoo and Tobias Ritschel},
  title = {Neural Bounding},
  booktitle = {Proceedings of the Special Interest Group on Computer Graphics and Interactive Techniques Conference Papers '24 (SIGGRAPH Conference Papers '24)},
  year = {2024},
  location = {Denver, CO, USA},
  publisher = {ACM},
  address = {New York, NY, USA},
  pages = {10},
  doi = {10.1145/3641519.3657442}
}