[Project Page] [Blog Post] Official code base for IQ-Learn: Inverse soft-Q Learning for Imitation, NeurIPS '21 Spotlight

IQ-Learn is a simple, stable, and data-efficient algorithm that's a drop-in replacement for methods like Behavior Cloning and GAIL, to boost your imitation learning pipelines!

Update: IQ-Learn was recently used to create the best AI agent for playing Minecraft, placing #1 in the NeurIPS MineRL Basalt Challenge using only recorded human player demos. (IQ-Learn also competed against methods that use human-in-the-loop interactions and, surprisingly, still achieved Overall Rank #2.)

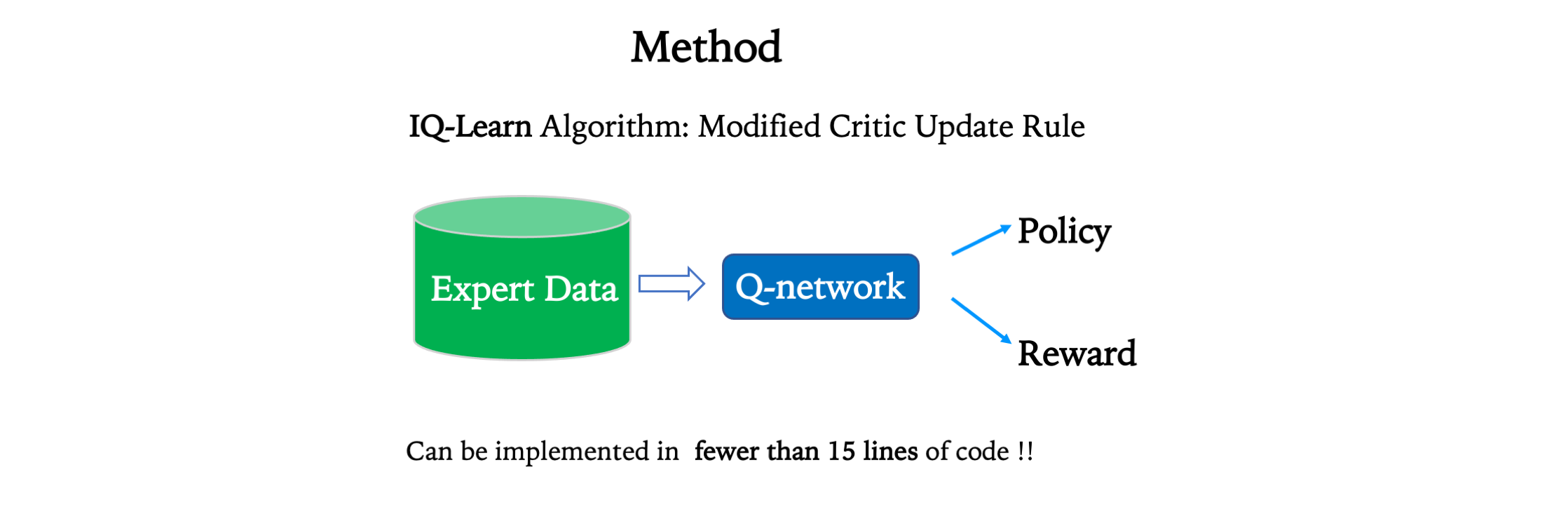

We introduce Inverse Q-Learning (IQ-Learn), a novel, state-of-the-art framework for Imitation Learning (IL) that directly learns soft Q-functions from expert data. IQ-Learn enables non-adversarial imitation learning and works in both offline and online IL settings. It is performant even with very sparse expert data, and it scales to complex image-based environments, surpassing prior methods by more than 3x. It is very simple to implement, requiring ~15 lines of code on top of existing RL methods.
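To give a sense of why the change on top of an RL method is small, here is a minimal sketch of an offline IQ-Learn-style loss for discrete actions with a chi-squared regularizer, written in plain Python over a tabular Q. It is an illustration under stated assumptions, not the code in this repository: the names `soft_value` and `iq_loss`, the `q[state][action]` table layout, and the default values of `gamma` and `alpha` are all hypothetical.

```python
import math


def soft_value(q_row, temperature=1.0):
    """Soft value V(s) = t * log(sum_a exp(Q(s, a) / t)), computed stably."""
    m = max(q_row)
    total = sum(math.exp((q - m) / temperature) for q in q_row)
    return m + temperature * math.log(total)


def iq_loss(q, expert_batch, gamma=0.99, alpha=0.5):
    """Loss to minimize over Q, using expert transitions only.

    q            -- table of Q-values, indexed as q[state][action] (assumed layout)
    expert_batch -- list of (s, a, s_next, done) tuples from expert demos
    """
    reward_term = value_term = chi2_term = 0.0
    for s, a, s_next, done in expert_batch:
        v_next = 0.0 if done else soft_value(q[s_next])
        y = gamma * v_next
        r = q[s][a] - y                      # implied reward Q(s, a) - gamma * V(s')
        reward_term += r                     # push implied expert reward up
        value_term += soft_value(q[s]) - y   # V(s) - gamma * V(s') on expert states
        chi2_term += r * r                   # chi-squared penalty on the reward
    n = len(expert_batch)
    return (-reward_term + value_term + chi2_term / (4 * alpha)) / n


# Toy usage: 2 states, 2 actions, one expert transition (s=0, a=0, s'=1).
q_table = [[0.0, 0.0], [0.0, 0.0]]
loss = iq_loss(q_table, [(0, 0, 1, False)], gamma=0.5, alpha=0.5)
```

In a deep RL setting the table would be a Q-network and the loop a batched tensor operation, with the gradient of this loss replacing the usual TD loss; there is no discriminator or separate reward model to train.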

Inverse Q-Learning is theoretically equivalent to Inverse Reinforcement Learning, i.e. learning rewards from expert data. However, it is much more powerful in practice. It admits very simple non-adversarial training and works in completely offline IL settings (without any access to the environment), greatly exceeding Behavior Cloning.
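The link to reward learning can be made concrete: once a soft Q-function is learned, a reward estimate follows from the soft Bellman relation r(s, a) = Q(s, a) - gamma * V(s'), where V is the log-sum-exp of Q over actions. Below is a minimal tabular sketch in plain Python. The function names, the `q[state][action]` layout, and the example Q-table are hypothetical, and the estimate uses a single sampled next state rather than an expectation over next states.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) for a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def recover_reward(q, s, a, s_next, gamma=0.99, done=False):
    """Single-sample estimate of r(s, a) = Q(s, a) - gamma * V(s')."""
    v_next = 0.0 if done else logsumexp(q[s_next])
    return q[s][a] - gamma * v_next


# Hypothetical learned Q-table for a 2-state, 2-action problem.
q_table = [[1.0, 0.0], [0.0, 0.0]]
r = recover_reward(q_table, s=0, a=0, s_next=1, gamma=0.9)
r_terminal = recover_reward(q_table, s=0, a=0, s_next=1, gamma=0.9, done=True)
```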

IQ-Learn is the successor to Adversarial Imitation Learning methods like GAIL (coming from the same lab).

It extends the theoretical framework for Inverse RL to non-adversarial and scalable learning, showing guaranteed convergence for the first time.

@inproceedings{garg2021iqlearn,
  title={IQ-Learn: Inverse soft-Q Learning for Imitation},
  author={Divyansh Garg and Shuvam Chakraborty and Chris Cundy and Jiaming Song and Stefano Ermon},
  booktitle={Thirty-Fifth Conference on Neural Information Processing Systems},
  year={2021},
  url={https://openreview.net/forum?id=Aeo-xqtb5p}
}

✅ Drop-in replacement to Behavior Cloning

✅ Non-adversarial online IL (Successor to GAIL & AIRL)

✅ Simple to implement

✅ Performant with very sparse data (single expert demo)

✅ Scales to Complex Image Envs (SOTA on Atari and playing Minecraft)

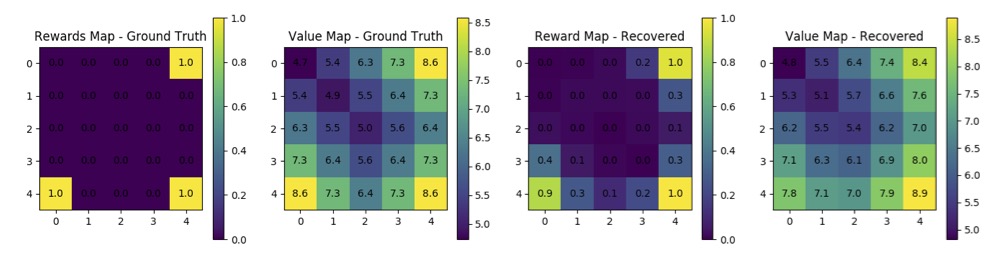

✅ Recover rewards from envs

To install and use IQ-Learn, check the instructions provided in the iq_learn folder.

Reaching human-level performance on Atari with pure imitation:

Recovering environment rewards on GridWorld:

Please feel free to email us if you have any questions.

Div Garg (divgarg@stanford.edu)