Trying to implement YoloV4 in ML.NET but running into some trouble (I'm quite new to all of this)

devedse opened this issue · 14 comments

System information

- OS version/distro: Windows 10 20H2

- .NET Version (eg., dotnet --info): 3.1.9

Introduction

I'm currently trying to use the YoloV4 model inside ML.NET but whenever I try to put an image through the model I get very strange results from the model. (It mainly finds backpacks, handbags, some apples and some other unexpected results). The image I'm using:

I created a repository to reproduce my issue as well:

https://github.com/devedse/DeveMLNetTest

Disclaimer: I'm quite new to all of this 😄

Steps I've taken

I first wanted to obtain a pre-trained model which I was sure worked so I did the following steps:

- Cloned: https://github.com/Tianxiaomo/pytorch-YOLOv4

- Installed requirements + pytorch + cuda etc.

- Downloaded the Yolov4_epoch1.pth model and put it in checkpoints\Yolov4_epoch1.pth

- Ran demo_pytorch2onnx.py with debug enabled.

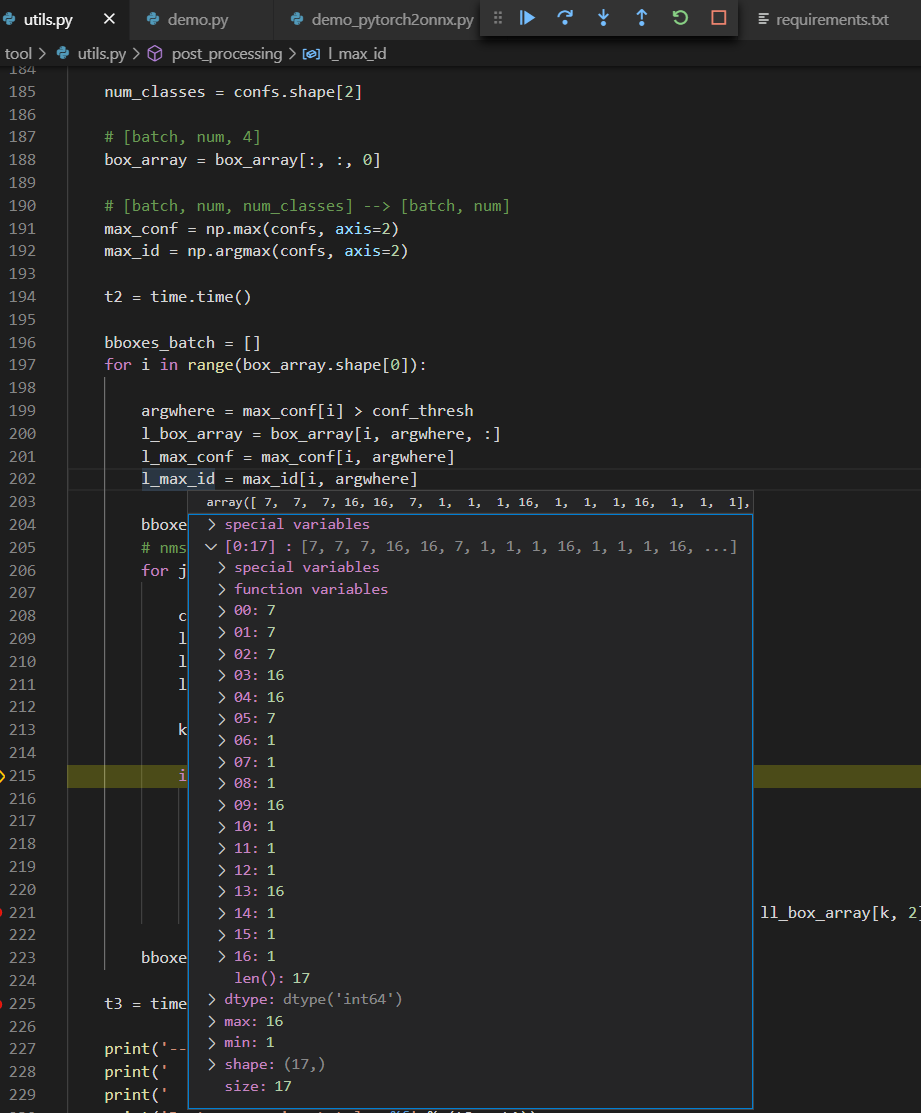

When you do this you can step through the code where the output from the model is parsed (this shows the labels for all boxes with a confidence higher than 0.6):

When we look up these labels, we can see that (all +1 since it's a 0-index array):

1: bicycle

8: truck

16: dog

So the results in Python seem to be correct. This python script first converts the model to an ONNX model and then uses that model to do the inference.

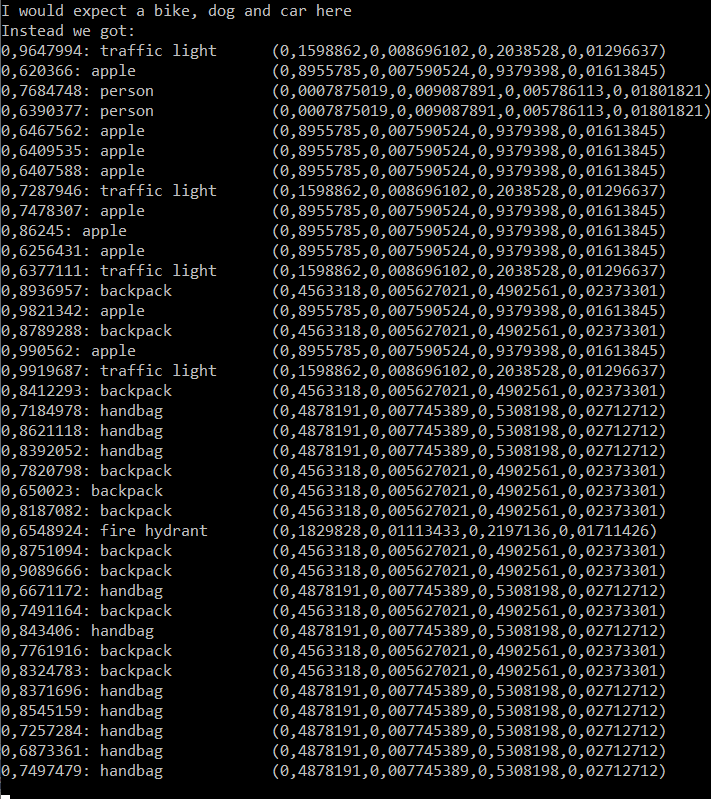

When I now start using the model inside C# though and try to parse through the results in exactly the same way I find completely different results:

Could it be that the model is somehow creating garbage data?

Anyway, here's the code that I'm using.

MLContext:

    var pipeline = mlContext.Transforms.LoadImages(outputColumnName: "image", imageFolder: "", inputColumnName: nameof(ImageNetData.ImagePath))
        .Append(mlContext.Transforms.ResizeImages(outputColumnName: "image", imageWidth: ImageNetSettings.imageWidth, imageHeight: ImageNetSettings.imageHeight, inputColumnName: "image"))
        .Append(mlContext.Transforms.ExtractPixels(outputColumnName: "input", inputColumnName: "image"))
        .Append(mlContext.Transforms.ApplyOnnxModel(
            modelFile: modelLocation,
            outputColumnNames: new[] { "boxes", "confs" },
            inputColumnNames: new[] { "input" }
        ));

Code to parse model output:

    public IList<YoloBoundingBox> ParseOutputs(float[] boxes, float[] confs, float threshold = .6F)
    {
        var boxesUnflattened = new List<BoundingBoxDimensions>();
        for (int i = 0; i < boxes.Length; i += 4)
        {
            boxesUnflattened.Add(new BoundingBoxDimensions()
            {
                X = boxes[i],
                Y = boxes[i + 1],
                Width = boxes[i + 2] - boxes[i],
                Height = boxes[i + 3] - boxes[i + 1],
                OriginalStuff = boxes.Skip(i).Take(4).ToArray()
            });
        }

        var confsUnflattened = new List<float[]>();
        for (int i = 0; i < confs.Length; i += 80)
        {
            confsUnflattened.Add(confs.Skip(i).Take(80).ToArray());
        }

        var maxConfidencePerBox = confsUnflattened.Select(t => t.Select((n, i) => (Number: n, Index: i)).Max()).ToList();

        var boxesNumbered = boxesUnflattened.Select((b, i) => (Box: b, Index: i)).ToList();

        var boxesIndexWhichHaveHighConfidence = maxConfidencePerBox.Where(t => t.Number > threshold).ToList();

        var allBoxesThemselvesWithHighConfidence = boxesIndexWhichHaveHighConfidence.Join(boxesNumbered, t => t.Index, t => t.Index, (l, r) => (Box: r, Conf: l)).ToList();

        Console.WriteLine("I would expect a bike, dog and car here");
        Console.WriteLine("Instead we got:");

        foreach (var b in allBoxesThemselvesWithHighConfidence)
        {
            var startString = $"{b.Conf.Number}: {labels[b.Conf.Index]}";
            Console.WriteLine($"{startString.PadRight(30, ' ')}({string.Join(",", b.Box.Box.OriginalStuff)})");
        }

        throw new InvalidOperationException("Everything below this doesn't work anyway");
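One thing that may be worth checking in the snippet above: the Index stored in maxConfidencePerBox appears to be the position inside each 80-value slice (the class index), while the Index in boxesNumbered is the box index, so the Join seems to pair class N with box N rather than each box with its own best class. The following is a minimal sketch of a decode step that keeps the two indices separate. It is written in Python (the language of the reference demo) for brevity; decode and NUM_CLASSES are hypothetical names, and the flat layout (N x 4 corners, N x 80 scores) is assumed from the loops above.

```python
from typing import List, Tuple

NUM_CLASSES = 80  # assumed: matches the stride of 80 used in the C# loop


def decode(boxes: List[float], confs: List[float], threshold: float = 0.6
           ) -> List[Tuple[int, int, float, List[float]]]:
    """Return (box_index, class_index, score, [x1, y1, x2, y2]) for each kept box."""
    num_boxes = len(boxes) // 4
    if len(confs) != num_boxes * NUM_CLASSES:
        raise ValueError("boxes and confs describe a different number of boxes")

    kept = []
    for box_index in range(num_boxes):
        # Scores of this box for every class.
        scores = confs[box_index * NUM_CLASSES:(box_index + 1) * NUM_CLASSES]
        # Best class for this box; the box index is tracked separately.
        class_index = max(range(NUM_CLASSES), key=scores.__getitem__)
        score = scores[class_index]
        if score > threshold:
            corners = boxes[box_index * 4:box_index * 4 + 4]
            kept.append((box_index, class_index, score, corners))
    return kept
```

Each result carries both indices, so the label lookup can use class_index and the box lookup can use box_index.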

Can someone give me some hints / ideas on why I could be running into this issue?

Also feel free to clone the repo and press F5. It should simply build / run.

https://github.com/devedse/DeveMLNetTest

@Lynx1820 I'm debugging through the code. This is the screenshot with all labels:

So you can see the array with 7, 16 and 1 (which are dog / bicycle / truck)

@Lynx1820 , I see the input and output layers are different from the YOLO v4 model available in onnx/models.

I did an implementation using this model here: YOLO v4 in ML.Net.

A few comments:

- depending on your model, you might have to use scaleImage: 1f / 255f in the ExtractPixels() step (for this, you'd need to understand how your input image is transformed in the Python code).
EDIT: image pre-processing is happening here and here, so you indeed need to scale your image by 1f / 255f.

- have a look at the post processing done here: YOLO v3 in ML.Net, as it seems the output shapes of your v4 model are similar to the outputs of the v3 I've used.

- I see here that you'd need to do NMS in post processing. This is done in my v3 implementation above (EDIT: this is also done in your code).
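For readers unfamiliar with the NMS step mentioned above, here is a minimal sketch of greedy, class-agnostic non-maximum suppression in Python. It is not taken from either repository: iou and nms are hypothetical helper names, boxes are assumed to be (x1, y1, x2, y2) corners, and the 0.5 overlap threshold is only an illustrative default. Real pipelines often run this per class.

```python
from typing import List, Sequence


def iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Sequence[float]], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

With two heavily overlapping boxes and one distant box, only the higher-scoring box of the overlapping pair and the distant box survive.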

Also, I think this bit of code in your repos is not correct:

    if (boxes.Count != confs.Count)
    {
        throw new InvalidOperationException("Errrorrrr");
    }

@Lynx1820 , the file is not corrupted, it's checked into Github using Git LFS. If you use the latest version of git then it will automatically checkout files from Git LFS as well. (Without LFS you can only commit files up to a maximum of 50mb to Github).

So if you want the model to work you need to either manually download it from Github or update your git command line tools :).

@BobLd , thanks for that elaborate answer.

Using your fix mentioned in the first comment I finally managed to get some data from the model that makes sense (the dog, bicycle and truck are all detected):

What I can't figure out though is why the boxes seem to be at the wrong location. When I check the Python code, there seems to be no post processing whatsoever on the boxes. They just pass them to cv2 to draw a rectangle:

cv2.rectangle(img, (x1, y1), (x2, y2), rgb, 1)

In my code, rather than two corner coordinates, we need the width and height, so I'm doing a tiny bit of post processing:

    for (int i = 0; i < boxes.Length; i += 4)
    {
        boxesUnflattened.Add(new BoundingBoxDimensions()
        {
            X = boxes[i],
            Y = boxes[i + 1],
            Width = boxes[i + 2] - boxes[i],
            Height = boxes[i + 3] - boxes[i + 1],
            OriginalStuff = boxes.Skip(i).Take(4).ToArray()
        });
    }

The OriginalStuff array however also contains values at position 1 and 3 that don't really make sense. The first value (y) is -0.000948109664 which is a negative y value.
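A small negative value like the one quoted above can simply mean a box extends slightly past the image edge. The following is a minimal Python sketch of turning corner coordinates into a drawable pixel rectangle, under the assumption (not confirmed in this thread) that the model emits corners normalized to the 0..1 range; to_pixel_rect is a hypothetical helper. It is worth checking how the Python demo scales the boxes before drawing them.

```python
from typing import Sequence, Tuple


def to_pixel_rect(box: Sequence[float], image_width: int, image_height: int
                  ) -> Tuple[float, float, float, float]:
    """Convert normalized (x1, y1, x2, y2) corners to pixel (x, y, width, height)."""
    # Clamp to the image so slightly out-of-range values do not produce
    # negative positions or oversized rectangles.
    x1, y1, x2, y2 = (min(max(v, 0.0), 1.0) for v in box)
    x = x1 * image_width
    y = y1 * image_height
    width = (x2 - x1) * image_width
    height = (y2 - y1) * image_height
    return x, y, width, height
```

The width and height are scaled by the size of the image being drawn on, not by the 416 x 416 network input, since normalized coordinates are independent of the resize.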

Could it be that I need to do RGB -> BGR conversion or something?

Besides my own problems I do have some questions about your comment / code though 😄:

- In your code you're using a predictionengine whereas in my code (that I got from the Microsoft samples) I call pipeline.Fit(...) somewhere and then use model.Transform(...). Do you know the difference?

- What is the use of the shapeDictionary you're using?

- In your code you're doing all kinds of stuff with anchors. From what I understood, the model I'm using already has that part of the post processing inside the model itself. Do I understand this correctly?

- In your model you seem to have 3 outputs that are all the same. Why doesn't the model just generate one output then?

- I thought Yolov4 was simply Yolov4, but apparently there's numerous models that are called Yolov4. Any ideas?

- When I run the Yolov4 model I got through C# and Python. Should the output of the model be exactly the same? (Or can there be some difference in the way C# executes the .onnx file and the way Python does it?)

TLDR:

Try using resizing: ResizingKind.Fill in ResizeImages() and orderOfExtraction: ColorsOrder.ABGR in ExtractPixels().

More details:

Could it be that I need to do RGB -> BGR conversion or something?

My first impression was no, as they extract it using the following (cv2 reads in BGR afaik, and they convert to RGB):

    sized = cv2.cvtColor(sized, cv2.COLOR_BGR2RGB)

but looking in more detail at the preprocessed input image:

so we see that the first value is [245, 255, 255] (in RGB order).

but then they do a transpose, and the values seem to appear in BGR order:

So do try to extract in BGR order using orderOfExtraction: Microsoft.ML.Transforms.Image.ImagePixelExtractingEstimator.ColorsOrder.ABGR in ExtractPixels().
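To make the preprocessing under discussion concrete, here is a minimal Python sketch of the two steps involved: scaling 8-bit values by 1/255 and laying the pixels out as channel planes (CHW) in a chosen channel order. to_chw is a hypothetical helper, not part of either code base, and the nested-list image is only a stand-in for a real bitmap. Switching channel_order between (0, 1, 2) and (2, 1, 0) is a quick way to test both the RGB and BGR hypotheses.

```python
from typing import List, Sequence, Tuple


def to_chw(pixels: Sequence[Sequence[Sequence[int]]],
           channel_order: Tuple[int, int, int] = (0, 1, 2),
           scale: float = 1.0 / 255.0) -> List[float]:
    """Flatten an HWC image (rows of [R, G, B] ints) into a CHW list of floats."""
    flat: List[float] = []
    for channel in channel_order:       # one full plane per channel
        for row in pixels:              # top to bottom
            for pixel in row:           # left to right
                flat.append(pixel[channel] * scale)
    return flat
```

For a 1 x 2 image the result has six values: both pixels of the first plane, then both of the second, then both of the third.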

Also, I think you need to use resizing: ResizingKind.Fill in ResizeImages() because of this line of code:

    sized = cv2.resize(img, (416, 416))

EDIT:

1. In your code you're using a predictionengine whereas in my code (that I got from the Microsoft samples) I call pipeline.Fit(...) somewhere and then use model.Transform(...). Do you know the difference?

No big difference I think, using a predictionengine seems more convenient (but not sure, as I am also new to that)

2. What is the use of the shapeDictionary you're using?

The Onnx pipeline was crashing because some tensor shapes were not well defined. I'm not sure if it was with this model or with another.

What I can't figure out though is why the boxes seem to be at the wrong location. When I check the python code, there seems to be no post processing whatsoever on the boxes. They pass just use it to call cv2 to draw a rectangle:

3. In your code you're doing all kinds of stuff with anchors. From what I understood, the model I'm using already has that part of the post processing inside the model itself. Do I understand this correctly?

It seems you are correct. From this line, I see that some post-processing is done here. I didn't have a look at the details but it seems the post-processing done here is very basic. So I guess you don't need to do stuff with anchors. Let's see what are the results with the new preprocessing steps.

4. In your model you seem to have 3 outputs that are all the same. Why doesn't the model just generate one output then?

Hehe, no the outputs are not all the same. If you are talking about the Yolo v4 model, the outputs are the following:

What is a bit misleading are those unknown dimensions. They are in fact:

{ "Identity:0", new[] { 1, 52, 52, 3, 85 } },

{ "Identity_1:0", new[] { 1, 26, 26, 3, 85 } },

{ "Identity_2:0", new[] { 1, 13, 13, 3, 85 } },

These outputs are merged in your model (and this is why you don't need to deal with anchors I guess). You can find more info here. The part of interest is:

For an image of size 416 x 416, YOLO predicts ((52 x 52) + (26 x 26) + (13 x 13)) x 3 = 10647 bounding boxes.
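The arithmetic in that quote can be checked in a few lines of Python. The strides 8, 16 and 32 are an assumption chosen because they reproduce the 52, 26 and 13 grids listed above for a 416 x 416 input; the variable names are illustrative only.

```python
INPUT_SIZE = 416
STRIDES = (8, 16, 32)      # assumed downsampling factors: 416/8, 416/16, 416/32
ANCHORS_PER_CELL = 3       # the "3" in the output shapes above

grid_sizes = [INPUT_SIZE // stride for stride in STRIDES]
total_boxes = sum(size * size for size in grid_sizes) * ANCHORS_PER_CELL

print(grid_sizes, total_boxes)  # prints: [52, 26, 13] 10647
```

So a merged output with 10647 rows is consistent with the three separate grid outputs being concatenated.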

5. I thought Yolov4 was simply Yolov4, but apparently there's numerous models that are called Yolov4. Any ideas?

Again, I'm new to that so not sure, but I think you are right: Yolov4 is simply Yolov4. Here we are dealing with the ONNX exported models, and I think they may be different 😄

6. When I run the Yolov4 model I got through C# and Python. Should the output of the model be exactly the same? (Or can there be some difference in the way C# executes the .onnx file and the way Python does it?)

I would expect the output of the exported ONNX model to be the same in C# and Python. One difference is that ML.Net returns 1D arrays.
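One way to test that expectation is to dump the flattened output from both runtimes for the same preprocessed input and compare them element by element. Here is a minimal Python sketch; max_abs_diff and outputs_match are hypothetical helpers, and the 1e-4 tolerance is an arbitrary assumption, since small floating-point differences between runtimes are plausible.

```python
from typing import Sequence


def max_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest element-wise absolute difference between two flat outputs."""
    if len(a) != len(b):
        raise ValueError(f"length mismatch: {len(a)} vs {len(b)}")
    return max((abs(x - y) for x, y in zip(a, b)), default=0.0)


def outputs_match(a: Sequence[float], b: Sequence[float],
                  tolerance: float = 1e-4) -> bool:
    """True when both outputs agree within the given tolerance."""
    return max_abs_diff(a, b) <= tolerance
```

A large difference with identical model files usually points at preprocessing (scaling, channel order, resize mode) rather than at the runtime itself.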

@BobLd thanks again for your amazingly elaborate answer. I'll check it out later once I'm at my pc.

@BobLd , I just managed to implement your fixes / ideas. The confidence is a bit higher now, but the boxes however still don't line up.

amazing! Great to see you managed to make it work!

I do have a few more questions, not sure if you know the answer though:

- Do you know where the actual inference happens? Is that with the .Fit(...) method or the .Transform(...) method?

- Do you know if ML.Net is using GPU for inference?

Thank you for your elaborate answer @BobLd!

- The Fit method is used to train, and the Transform method is used to run inference, i.e. to apply a transformation to a DataView.

- ML.NET doesn't use GPU, but you can use GPU for inference in Onnx models in C#. Take a look at this.

Closing this issue, but feel free to follow up if you have more questions.