Our paper has been published, please refer to the link.

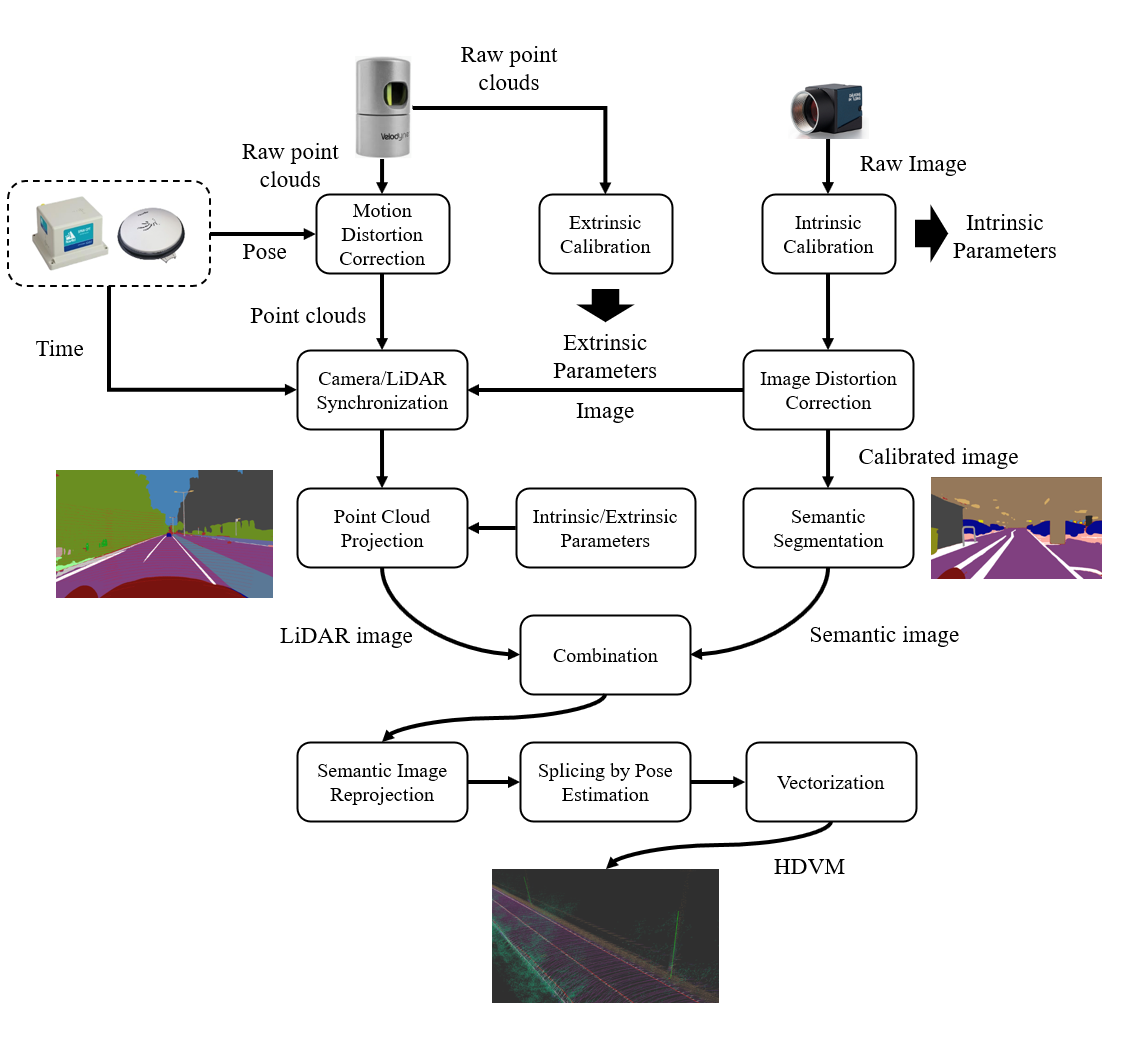

This repository hosts an open-source HDVM (high-definition vector map) generation pipeline designed for autonomous vehicles, especially in intricate urban environments. Traditional HDVM creation methods often operate on a planar assumption, causing inaccuracies in real-world scenarios. Our solution, however, integrates data from GNSS (global navigation satellite system), INS (inertial navigation system), LiDAR, and cameras.

The process starts with the extraction of semantic data from raw images using advanced architectures like Vision Transformer (ViT) and Swin Transformer. We then acquire the absolute 3D data of these semantic objects from 3D LiDAR depth and derive high-precision pose estimates from GNSS real-time kinematic (GNSS-RTK) and an INS navigation system. This semantic data aids in the extraction of vector information, such as lane markings, which forms the HDVM.

A significant feature of this repo is its focus on HDVM accuracy. We've examined the impact of two primary error sources: segmentation discrepancies and LiDAR-camera extrinsic parameter deviations. An error propagation scheme is provided to showcase how these sources can affect the HDVM's precision.

For ease of setup and consistency, a Docker version of the pipeline is available and is the recommended method for deployment.

This repo offers proof of our pipeline's efficacy through its open-source code and has been tested on various datasets, from indoor parking facilities to intricate urban landscapes. Explore the repository, and you'll find a comprehensive solution for HDVM generation in complex scenarios. Link to the repository.

|  |

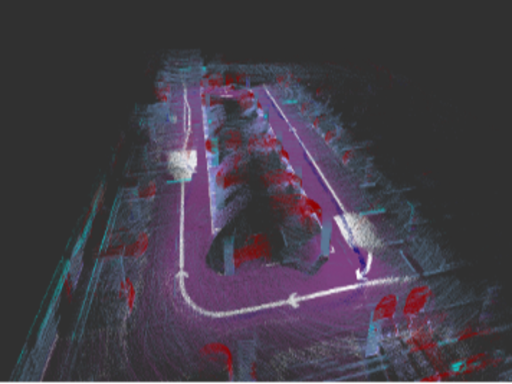

| The progress of indoor map construction | The 3D semantic map of the indoor scenario |

|  |

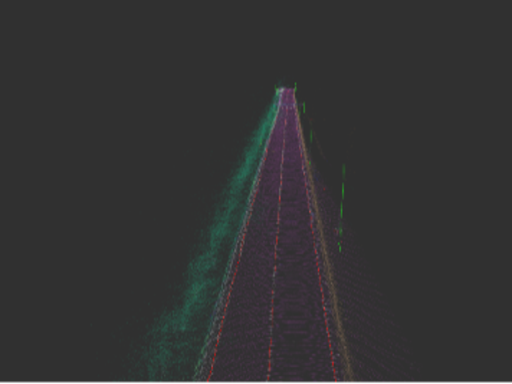

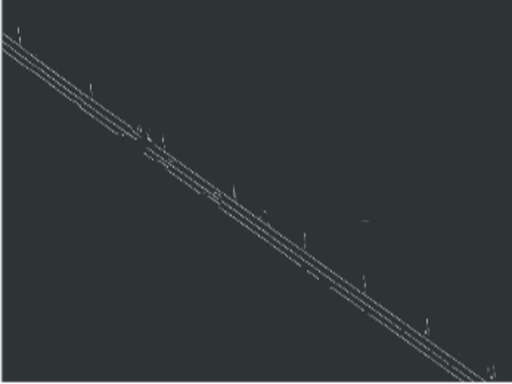

| The 3D semantic map of the outdoor scenario | The 3D vector map of the outdoor scenario |

Our tool supports below features:

- Soft time synchronization

- Image undistortion

- LiDAR motion distortion correction

- Image semantic segmentation

- Lane lines and poles vectorization

For more details, please refer to our paper

This tool needs lots of dependencies, thus, we urge all of you to use docker to run our map builder.

- Check that your grphics driver and docker are properly installed, especially the nvidia-docker suit.

- Clone our git repository to local disk.

git clone https://github.com/ebhrz/HDMap.git

- Open the docker folder and start the docker via the start script.

You can run this script repeatedly to open several command windows to run roscore, rviz and the map builder.

cd HDMap && cd docker sudo ./start.sh

- Use the stop script to stop the docker.

sudo ./stop.sh

- (optional) You can build the docker image by yourself by the build script:

sudo ./build.sh

- ROS

Our tool utilizes ROS to store the recording data and do the visualization. ROS installation refers to here- Novatel Msgs

Map construction requires accurate localization. In our sensorkit, a span-CPT is employed to be the ground truth. Please install its message type viasudo apt install ros-(your ROS version)-novatel-gps-msgs

- Novatel Msgs

- Pandas and Numpy

Please use the commands below to install:

pip install numpy

pip install pandas- OpenCV

Our tool also requires OpenCV. Install it via:

pip install opencv-python- PyTorch

Please refer to PyTorch installation guide at here- Detectron2

This is a image segmentation framework from Facebook using PyTorch. Our tool directly uses the pretrained Swin2Transformer model. Please refer to its installation guide at here - MMSegmentation

This is a image segmentation framework by OpenMMLab using PyTorch. Our tool can also support the pretrained model in their model zoo. Please refer to its installation guide at here

- Detectron2

- Scikit-learn

This is a powerful tool for machine learning. Our tool uses DBSCAN from it to do the cluster. Install it via:

pip install scikit-learn- Pclpy

Since we'll process the point cloud, we choose this python wrapper for PCL which uses pybind11 to maintain the same API. Installation refers to here

.

├── LICENSE

├── README.md

├── config

│ ├── ade20k.json

│ ├── indoor_config.json

│ ├── outdoor_config.json

│ └── outdoor_distortion_config.json

├── data

│ ├── indoor

│ │ ├── indoor.bag # place your indoor bag file here

│ │ └── pose6d.csv # the prior made pose estimation result

│ └── outdoor

│ └── outdoor.bag # place your outdoor bag file here

├── result # this folder will be gererated automatically

├── imseg

│ ├── mask2former

│ │ ├── class.json

│ │ ├── config

│ │ ├── config.py

│ │ ├── model

│ │ │ └── model.pkl # place the pretrainied swin2transformer model

│ │ └── mask2former

│ └── mmseg

│ ├── _base_

│ ├── configs

│ └── model

│ └── model.pth # place the pretrainied mmsegmentation model

├── indoor.py

├── outdoor.py

├── make_vector.py

├── make_pcl.py

├── predict.py

├── util.py

└── vis.rviz

If you want to run an example, please click the link to download the data.

For the outdoor bag file, here's an example:

path: outdoor.bag

version: 2.0

duration: 34:38s (2078s)

start: Dec 23 2021 11:43:52.57 (1640231032.57)

end: Dec 23 2021 12:18:31.01 (1640233111.01)

size: 64.5 GB

messages: 327941

compression: none [41569/41569 chunks]

types: novatel_msgs/INSPVAX [b5d66747957184042a6cca9b7368742f]

sensor_msgs/CompressedImage [8f7a12909da2c9d3332d540a0977563f]

sensor_msgs/PointCloud2 [1158d486dd51d683ce2f1be655c3c181]

topics: /acc_pose 207936 msgs : novatel_msgs/INSPVAX

/image 57732 msgs : sensor_msgs/CompressedImage

/origin_cloud 41515 msgs : sensor_msgs/PointCloud2

And for the indoor bag file, here's an example:

path: garage.bag

version: 2.0

duration: 6:59s (419s)

start: Feb 04 2022 18:04:18.39 (1643969058.39)

end: Feb 04 2022 18:11:18.08 (1643969478.08)

size: 70.1 GB

messages: 342273

compression: none [29148/29148 chunks]

types: sensor_msgs/Image [060021388200f6f0f447d0fcd9c64743]

sensor_msgs/PointCloud2 [1158d486dd51d683ce2f1be655c3c181]

topics: /velodyne_points 4193 msgs : sensor_msgs/PointCloud2

/zed2/camera/left/image_raw 12477 msgs : sensor_msgs/Image

Ros topic will be configured in config file.

So far, we should configure the ros topics in outdoor.py and indoor.py manually, but as the config file is supported, it can be defined in the config file.

Please make your own config file refer to the config files in config folder. Here are two example config files for indoor and outdoor. The explanations of each field are below the config files.

outdoor config file

{

"bag_file":"data/outdoor/outdoor.bag",

"start_time":120,

"play_time":5,

"GNSS_topic":"/acc_pose",

"LiDAR_topic":"/dedistortion_cloud",

"cloud_distortion":false,

"camera_topic":"/zed2/camera/left/image_raw/compressed",

"image_compressed":true,

"extrinsic":

[

[ 1.0102, -0.0026, -0.0087, 0.1135],

[-0.0033, -0.0030, -0.9963, -0.1617],

[ 0.0049, 0.9962, -0.0287, 0.0516],

[ 0.0000, 0.0000, 0.0000, 1.0000]

],

"intrinsic":

[

[543.5046, 0, 630.7183],

[0, 540.5383, 350.9063],

[0, 0, 1]

],

"distortion_matrix":[-1.05873889e-01, 1.32265629e-01, -8.55667814e-05,-1.04098281e-03, -7.01241428e-02],

"save_folder":"result/outdoor",

"mode":"outdoor",

"model_config":"imseg/mask2former/config/swin/maskformer2_swin_large_IN21k_384_bs16_300k.yaml",

"model_file":"imseg/mask2former/model/model.pkl",

"lane_class":24,

"pole_class":45,

"vector":true,

"predict_func":"get_predict_func",

"cmap":"mapillary"

}indoor config file

{

"bag_file":"data/indoor/indoor.bag",

"pose_file":"data/indoor/pose6d.csv",

"start_time":0,

"play_time":-1,

"LiDAR_topic":"/velodyne_points",

"camera_topic":"/zed2/zed_node/left/image_rect_color/compressed",

"image_compressed":true,

"extrinsic":

[

[ 1.0102, -0.0026, -0.0087, 0.1135],

[-0.0033, -0.0030, -0.9963, -0.1617],

[ 0.0049, 0.9962, -0.0287, 0.0516],

[ 0.0000, 0.0000, 0.0000, 1.0000]

],

"intrinsic":

[

[543.5046, 0, 630.7183],

[0, 540.5383, 350.9063],

[0, 0, 1]

],

"distortion_matrix":[-1.05873889e-01, 1.32265629e-01, -8.55667814e-05,-1.04098281e-03, -7.01241428e-02],

"save_folder":"result/indoor",

"mode":"indoor",

"model_config":"imseg/mmseg/configs/swin/upernet_swin_large_patch4_window12_512x512_pretrain_384x384_22K_160k_ade20k.py",

"model_file":"imseg/mmseg/model/upernet_swin_large_patch4_window12_512x512_pretrain_384x384_22K_160k_ade20k_20220318_091743-9ba68901.pth",

"lane_class":24,

"pole_class":45,

"predict_func":"get_predict_func_mmsegmentation",

"cmap":"ade20k"

}- bag_file

The path of the bag file - pose_file

The path of the pose file, format is (x,y,z,order,q,w,e,r,where q,w,e,r are the quaternion)(indoor only, outdoor ignored) - start_time

From where to read the bag, or skip how long of the bag - play_time

How long to play the bag, -1 for the whole bag - GNSS_topic

The GNSS topic name in Ros bag, (outdoor only, indoor ignored) - LiDAR_topic

The LiDAR topic name in Ros bag - cloud_distortion

To do the LiDAR motion distortion fix or not - camera_topic

The camera topic name in Ros bag - image_compressed

Whether the image format in the Ros bag is compressed or not - extrinsic

LiDAR and camera extrinsic matrix - intrinsic

Camera intrinsic matrix - distortion_matrix

Camera distortion matrix - save_folder

Save folder name - mode

Use indoor mode or outdoor mode - model_config

The path of the segmentation model config file - model_file

The path of the segmentation model file - lane_class

The ordinal number of the lane line in the segmentation model - pole_class

The ordinal number of the pole in the segmentation model - vector

Whether to generate the vectormap. Used by make_vector.py. - predict_func

This is the function name of the prediction function in file predict.py. There are already two function get_predict_func_detectron and get_predict_func_mmlab. One is for the pretrained models from Facebook and the other is for the models from MMLab. If you want to use your own models, please write your own prediction function, which is required to return a numpy array with the shape of W*H. The value of each pixel is the segmentation class. For example an image with the size 3*3 and 3 types after the segmentation:

[ [1,1,2], [1,2,1], [0,1,2] ] - cmap

The color map scheme, ade20k, cityscapes and mapillary are available.

For indoor scenario, there is no GNSS signal to provide global localization. Thus, pose estimation should be performed at first. Also, We recommond you to use LiDAR SLAM algorithm such as A-LOAM, LIO-SAM, etc. to estimate the pose. Here is an implementation of LIO-SAM with several changes to generate the pose for all the LiDAR messages.

outdoor.py

-h, --help show this help message and exit

-c CONFIG, --config CONFIG The config file path, recommand use this method to start the tool

-b BAG, --bag BAG The recorded ros bag file

-f FASTFOWARD, --fastfoward FASTFOWARD Start to play at the nth seconds

-d DURATION, --duration DURATION Time to play

-u UNDISTORTION, --undistortion UNDISTORTION Do LiDAR points undistortion

indoor.py

-h, --help show this help message and exit

-c CONFIG, --config CONFIG The config file path, recommand use this method to start the tool

-b BAG, --bag BAG The recorded ros bag

-f FASTFOWARD, --fastfoward FASTFOWARD Start to play at the nth seconds

-d DURATION, --duration DURATION Time to play

-p POSE, --pose POSE Pose file for the construction

make_pcl.py

-h, --help show this help message and exit

-c CONFIG, --config CONFIG The config file path, recommand use this method to start the tool

-i INPUT, --input INPUT

-m {indoor,outdoor}, --mode {indoor,outdoor} Depend on the way to store the pickle file

-f FILTERS [FILTERS ...], --filters FILTERS [FILTERS ...] Default to show all the classes, the meaning of each class refers to class.json

-s SAVE, --save SAVE Save to pcd file

-t TRAJECTORY, --trajectory TRAJECTORY Trajectory file, use to follow the camera

--semantic SEMANTIC Semantic photos folder

--origin ORIGIN Origin photos folder

make_vector.py

-h, --help show this help message and exit

-c CONFIG, --config CONFIG The config file path, recommand use this method to start the tool

-i INPUT, --input INPUT

-m {outdoor,indoor}, --mode {outdoor,indoor} Depend on the way to store the pickle file

-f FILTERS [FILTERS ...], --filters FILTERS [FILTERS ...] Default to show all the classes, the meaning of each class refers to class.json

-s SAVE, --save SAVE Save to pcd file

-t TRAJECTORY, --trajectory TRAJECTORY Trajectory file, use to follow the camera

--semantic SEMANTIC Semantic photos folder

--origin ORIGIN Origin photos folder

--vector Do the vectorization, only available when filters are accepted

To run the map builder, please first start the ros core

$ roscore

And you can open the rviz to see the visualization

rviz -d vis.rviz

- First synchronize and segment.

python3 indoor.py -c config/indoor_config.json- Make the global 3D semantic map.

python3 make_pcl.py -c config/indoor_config.json

- Make the global 3D vector map.

python3 vector.py -c config/indoor_config.json

- First synchronize and segment.

python3 outdoor.py -c config/outdoor_config.json- Make the global 3D semantic map.

python3 make_pcl.py -c config/outdoor_config.json

- Make the global 3D vector map.

python3 vector.py -c config/vector_config.json

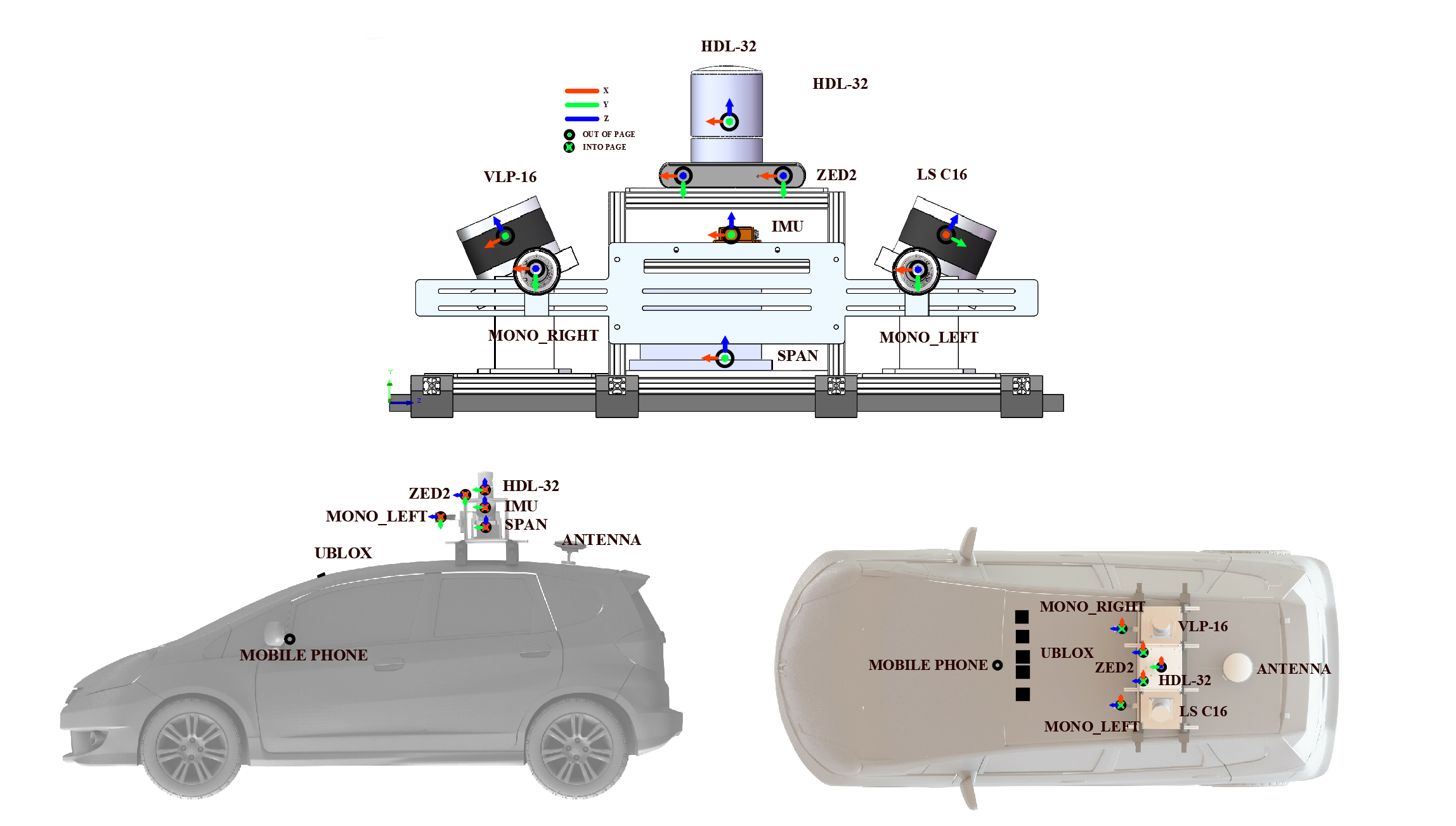

We use part of the sensor kit in UrbanNavDataset, they are:

- 3D LiDAR sensor: (HDL 32E Velodyne): (360 HFOV, +10~-30 VFOV, 80m range, 10Hz),

- Camera: ZED2 Stereo (30 Hz)

- SPAN-CPT: (RTK GNSS/INS, RMSE: 5cm, 100Hz)

In outdoor scenario, all the three sensors are used. And in indoor scenario, GNSS is not available, thus only LiDAR and camera are used. For the detail of the sensor kit, please refer to our UrbanNavDataset

If you find this tool useful, we would appreciate it if you cite our paper.

@article{https://doi.org/10.1049/itr2.12524,

author = {Hu, Runzhi and Bai, Shiyu and Wen, Weisong and Xia, Xin and Hsu, Li-Ta},

title = {Towards high-definition vector map construction based on multi-sensor integration for intelligent vehicles: Systems and error quantification},

journal = {IET Intelligent Transport Systems},

volume = {n/a},

number = {n/a},

pages = {},

keywords = {automated driving and intelligent vehicles, autonomous driving, navigation, sensor fusion},

doi = {https://doi.org/10.1049/itr2.12524},

url = {https://ietresearch.onlinelibrary.wiley.com/doi/abs/10.1049/itr2.12524},

eprint = {https://ietresearch.onlinelibrary.wiley.com/doi/pdf/10.1049/itr2.12524}

}