HardNet model implementation in PyTorch for the NIPS 2017 paper "Working hard to know your neighbor's margins: Local descriptor learning loss" (poster, slides).

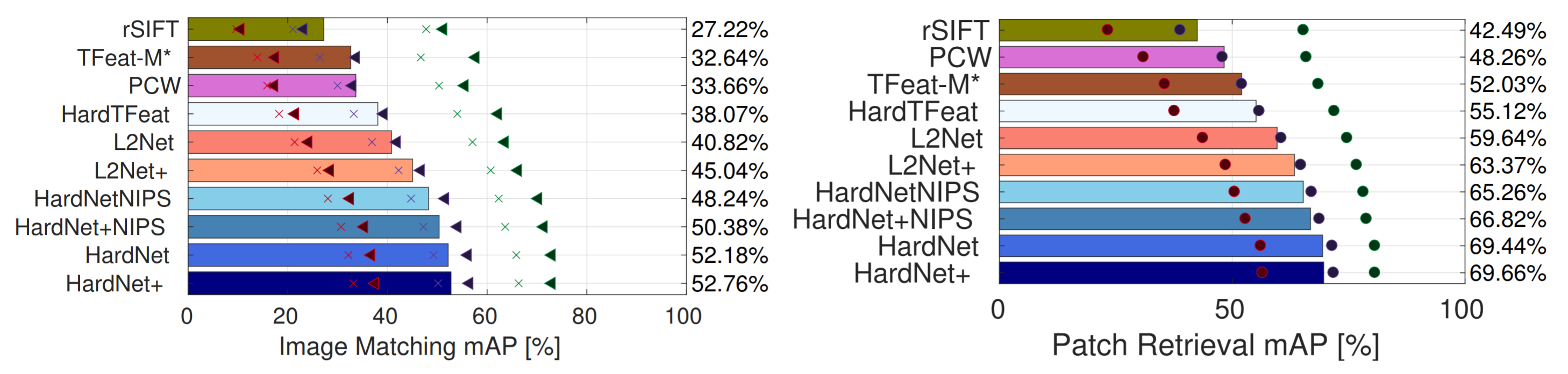

After two major updates of the PyTorch library, we were no longer able to reproduce the original results. We therefore ran a hyperparameter search and found that increasing the learning rate to 10 and the dropout rate to 0.3 leads to better results (see the figure). We obtained the same results on different machines. We will update the arXiv version soon.

Pretrained weights in PyTorch format have been updated.

We also release a version trained on all subsets of the Brown dataset, called HardNet6Brown.

Benchmark on HPatches, mAP

| Descriptor | BoW | BoW + SV | BoW + SV + QE | HQE + MA |
|---|---|---|---|---|
| TFeatLib | 46.7 | 55.6 | 72.2 | n/a |
| RootSIFT | 55.1 | 63.0 | 78.4 | 88.0 |
| L2NetLib+ | 59.8 | 67.7 | 80.4 | n/a |
| HardNetLibNIPS+ | 59.8 | 68.6 | 83.0 | 88.2 |
| HardNet++ | 60.8 | 69.6 | 84.5 | 88.3 |
| HesAffNet + HardNet++ | 68.3 | 77.8 | 89.0 | 89.5 |

Please use Python 2.7 and install OpenCV and the additional libraries listed in requirements.txt.

To download the datasets and start training the descriptor:

    git clone https://github.com/DagnyT/hardnet
    cd hardnet
    ./run_me.sh

Logs are stored in TensorBoard format in the logs/ directory.

Pre-trained models can be found in folder pretrained.

Rahul Mitra presented a new large-scale patch dataset, the PS-dataset, and trained an even better HardNet on it. Original weights in torch format are here.

Converted PyTorch version is here.

For practical applications, we recommend HardNet++.

For comparison with other descriptors trained on the Liberty Brown dataset, we recommend HardNetLib+.

For the best descriptor that is NOT trained on the HPatches dataset, we recommend the model by Mitra et al.; see the link in the section above.

We provide an example of how to describe patches with HardNet. The script expects patches in HPatches format, i.e. a grayscale image with w = patch_size and h = n_patches * patch_size:

    cd examples
    python extract_hardnet_desc_from_hpatches_file.py imgs/ref.png out.txt

or with Caffe:

    cd examples/caffe
    python extract_hardnetCaffe_desc_from_hpatches_file.py ../imgs/ref.png hardnet_caffe.txt
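To make the HPatches patch layout concrete, here is a minimal sketch of how such a strip could be split into individual square patches with NumPy. The function name `split_patch_strip` is hypothetical and is not part of this repository. The sketch assumes the patches are stacked vertically and that the patch size equals the image width, as described above. It does not show how the provided scripts are implemented.

```python
import numpy as np


def split_patch_strip(strip):
    """Split a vertical strip of square patches into an array of patches.

    Hypothetical helper (not part of this repository). Assumes `strip` is a
    single-channel image of shape (n_patches * patch_size, patch_size).
    Returns an array of shape (n_patches, patch_size, patch_size).
    """
    if strip.ndim != 2:
        raise ValueError("expected a single-channel (grayscale) image")
    height, width = strip.shape
    patch_size = width  # assumption: patches are square, one per row block
    if height % patch_size != 0:
        raise ValueError("image height is not a multiple of the patch size")
    n_patches = height // patch_size
    return strip.reshape(n_patches, patch_size, patch_size)


# Synthetic strip of three 32x32 patches filled with 0, 1 and 2.
# A real file could be loaded with cv2.imread(path, cv2.IMREAD_GRAYSCALE).
strip = np.vstack([np.full((32, 32), i, dtype=np.uint8) for i in range(3)])
patches = split_patch_strip(strip)
print(patches.shape)
```

Each `patches[i]` is then one patch, ready to be normalized and passed to a descriptor network in batches.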

AffNet -- learned local affine shape estimator.

Please cite us if you use this code:

    @inproceedings{HardNet2017,
      author = {Anastasiya Mishchuk and Dmytro Mishkin and Filip Radenovic and Jiri Matas},
      title = "{Working hard to know your neighbor's margins: Local descriptor learning loss}",
      booktitle = {Proceedings of NIPS},
      year = 2017,
      month = dec}