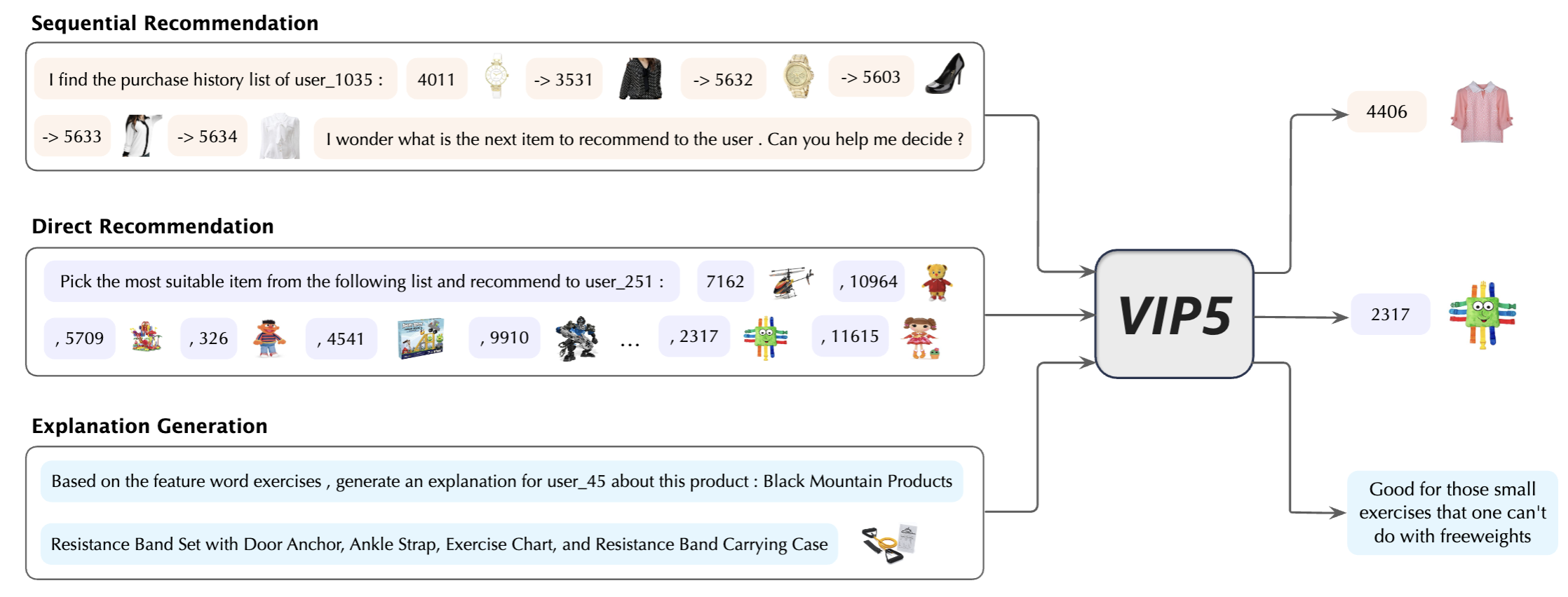

VIP5: Towards Multimodal Foundation Models for Recommendation

- PyTorch 1.12

- transformers

- tqdm

- numpy

- sentencepiece

- pyyaml

- CLIP, installed with: pip install git+https://github.com/openai/CLIP.git
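One quick way to confirm the environment is ready is to check that each dependency can be imported. The sketch below assumes the usual import names for these packages (`torch` for PyTorch, `yaml` for pyyaml, `clip` for the OpenAI CLIP package). The helper `missing_modules` is a hypothetical name used for illustration and is not part of this repo.

```python
from importlib.util import find_spec

# Import names assumed for the dependencies listed above
# (pyyaml is imported as "yaml", the CLIP package as "clip").
REQUIRED_MODULES = [
    "torch",
    "transformers",
    "tqdm",
    "numpy",
    "sentencepiece",
    "yaml",
    "clip",
]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```

This only checks that the modules can be located. It does not verify versions, so the PyTorch 1.12 requirement still needs to be checked separately.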

- Clone this repo

- Download preprocessed data and image features from this Google Drive link, then unzip them into the data and features folders

- Create snap and log folders to store VIP5 checkpoints and training logs:

mkdir snap log

- Conduct parameter-efficient tuning with the scripts in the scripts folder, for example:

CUDA_VISIBLE_DEVICES=0,1,2,3 bash scripts/train_VIP5.sh 4 toys 13579 vitb32 2 8 20
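The setup and launch steps above can be sketched as a small Python helper. This is an illustration under stated assumptions, not part of the repo: `prepare_folders` and `build_launch` are hypothetical names, and the sketch assumes that the first positional argument of `scripts/train_VIP5.sh` is the number of GPUs (it matches the four devices listed in the example command). The remaining arguments are passed through unchanged, since their meaning is defined by the script itself.

```python
import os
from pathlib import Path


def prepare_folders(root="."):
    """Create snap/ and log/ under root and report whether data/ and features/ exist."""
    root = Path(root)
    for name in ("snap", "log"):
        (root / name).mkdir(exist_ok=True)
    return {name: (root / name).is_dir() for name in ("data", "features")}


def build_launch(gpu_ids, rest_args, script="scripts/train_VIP5.sh"):
    """Return (env, argv) for launching the training script.

    Assumption: the first positional argument is the GPU count, so it is
    derived from gpu_ids. All other arguments are forwarded as given.
    """
    visible = ",".join(str(g) for g in gpu_ids)
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=visible)
    argv = ["bash", script, str(len(gpu_ids))] + [str(a) for a in rest_args]
    return env, argv


if __name__ == "__main__":
    # Rebuild the example command from this README without running it.
    env, argv = build_launch([0, 1, 2, 3], ["toys", 13579, "vitb32", 2, 8, 20])
    print("CUDA_VISIBLE_DEVICES=" + env["CUDA_VISIBLE_DEVICES"], " ".join(argv))
```

From the repo root, calling `prepare_folders()` first creates the two output folders and shows whether the downloaded data and features are in place. The command can then be started with `subprocess.run(argv, env=env, check=True)`.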

If you use this repository, please cite the corresponding paper:

@inproceedings{geng2023vip5,
  title={VIP5: Towards Multimodal Foundation Models for Recommendation},
  author={Geng, Shijie and Tan, Juntao and Liu, Shuchang and Fu, Zuohui and Zhang, Yongfeng},
  booktitle={Findings of the Association for Computational Linguistics: EMNLP 2023},
  year={2023}
}