The implementation is written for environments with continuous action spaces. Currently, only single-agent learning is supported.

The code was written as an exercise while exploring Reinforcement Learning concepts. The algorithm is based on the description in the original Proximal Policy Optimization paper by OpenAI. However, to get a working version of the algorithm, important code-level details were added from The 32 Implementation Details of Proximal Policy Optimization (PPO) Algorithm and from this implementation. For more information, see Implementation details.

- Save GIFs from rendered episodes; the files are saved in the `/images` directory (`render=True`)
- TensorBoard logs for monitoring score and episode length (`tensorboard_logging=True`)
- Load a pretrained model to evaluate it or continue training (`pretrained=True`)

All dependencies are listed in the `requirements.txt` file.

The implementation uses PyTorch for training and Gym for environments. imageio is an optional dependency, needed only to save GIFs from the rendered environment.

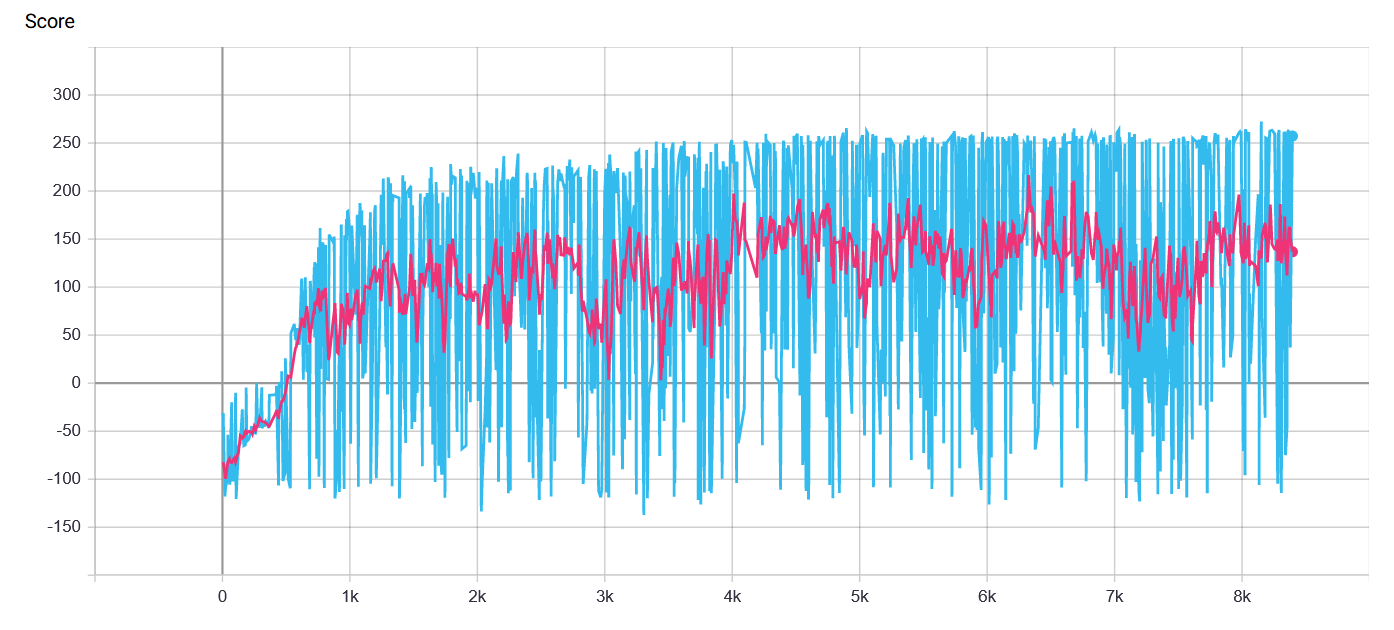

Training progress of an agent in the BipedalWalker-v3 environment.

| 500 episodes | 2000 episodes | 5000 episodes |
|---|---|---|

Training chart with the score averaged over 20 consecutive episodes (marked in red).

The weights of the last layer of the policy network are rescaled by 0.01 after initialization. This enforces more random action choices at the beginning of training and thus improves training performance. It is one of the suggestions from What Matters In On-Policy Reinforcement Learning? A Large-Scale Empirical Study.
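A minimal PyTorch sketch of this initialization, assuming a small MLP actor. The class name `PolicyNetwork`, the hidden size of 64 and the tanh activations are illustrative assumptions, not taken from this repository; only the 0.01 rescaling of the last layer's weights comes from the text above.

```python
import torch
import torch.nn as nn


class PolicyNetwork(nn.Module):
    """Hypothetical actor network; layer sizes and activations are assumptions."""

    def __init__(self, state_dim: int, action_dim: int, hidden: int = 64):
        super().__init__()
        self.body = nn.Sequential(
            nn.Linear(state_dim, hidden),
            nn.Tanh(),
            nn.Linear(hidden, hidden),
            nn.Tanh(),
        )
        self.head = nn.Linear(hidden, action_dim)
        # Rescale the default-initialized weights of the last layer by 0.01,
        # so the initial action means are close to zero for every state.
        with torch.no_grad():
            self.head.weight.mul_(0.01)

    def forward(self, state: torch.Tensor) -> torch.Tensor:
        # Returns the mean of the action distribution.
        return self.head(self.body(state))
```

Only the weights are rescaled here, matching the description; the bias keeps PyTorch's default initialization.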

The standard deviation (std) of the distribution from which actions are sampled is set to 0.5.
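The sketch below shows how a fixed std of 0.5 can be combined with the mean predicted by the policy network. It assumes independent Normal components per action dimension (equivalent to a diagonal-covariance Gaussian); the helper names `action_distribution`, `sample_action` and `evaluate_actions` are hypothetical.

```python
import torch
from torch.distributions import Normal

ACTION_STD = 0.5  # fixed standard deviation, as stated above


def action_distribution(action_mean: torch.Tensor) -> Normal:
    """Independent Normal per action dimension, centred on the policy output."""
    return Normal(action_mean, torch.full_like(action_mean, ACTION_STD))


def sample_action(action_mean: torch.Tensor):
    """Sample an action and return it together with its log-probability."""
    dist = action_distribution(action_mean)
    action = dist.sample()
    # Sum over action dimensions: joint log-prob of independent components.
    log_prob = dist.log_prob(action).sum(dim=-1)
    return action, log_prob


def evaluate_actions(action_mean: torch.Tensor, actions: torch.Tensor):
    """Log-probabilities and entropies of stored actions, used in the update."""
    dist = action_distribution(action_mean)
    return dist.log_prob(actions).sum(dim=-1), dist.entropy().sum(dim=-1)
```

During rollouts `sample_action` would typically run under `torch.no_grad()`, while `evaluate_actions` recomputes log-probabilities and entropy for the stored actions at each update iteration.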

Advantages are calculated from normalized discounted rewards and are recomputed at every iteration of the policy update.
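A possible sketch of this step, assuming the advantage is the normalized discounted return minus the critic's state-value estimate, with discounting reset at episode boundaries. The formula, the default discount factor of 0.99, and the helper names `discounted_returns`, `normalize` and `compute_advantages` are assumptions for illustration.

```python
import torch


def discounted_returns(rewards, dones, gamma=0.99):
    """Discounted reward-to-go for each step, reset at episode ends."""
    returns = []
    running = 0.0
    for reward, done in zip(reversed(rewards), reversed(dones)):
        if done:
            # Terminal step: rewards from the next episode must not leak in.
            running = 0.0
        running = reward + gamma * running
        returns.insert(0, running)
    return torch.tensor(returns, dtype=torch.float32)


def normalize(x: torch.Tensor, eps: float = 1e-8) -> torch.Tensor:
    """Zero mean, unit std; eps guards against division by zero."""
    return (x - x.mean()) / (x.std() + eps)


def compute_advantages(returns: torch.Tensor, state_values: torch.Tensor):
    # Called at every update iteration with the current critic estimates;
    # detach so the policy loss does not backpropagate into the critic.
    return normalize(returns) - state_values.detach()
```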

The loss is the sum of three components:

- Clipped Surrogate Objective from the PPO paper, with epsilon = 0.2
- MSE loss between the estimated state value and the discounted reward (coefficient 0.5)
- Entropy of the action distribution (coefficient -0.01)
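The three terms can be combined as in the sketch below. It assumes per-sample tensors of equal shape and averages the loss over the batch; the function name `ppo_loss` and its arguments are hypothetical. Because optimizers minimize, the clipped surrogate objective (which PPO maximizes) enters the loss with a minus sign.

```python
import torch
import torch.nn.functional as F

CLIP_EPS = 0.2       # epsilon of the clipped surrogate objective
VALUE_COEF = 0.5     # weight of the MSE value loss
ENTROPY_COEF = -0.01 # weight of the entropy term


def ppo_loss(new_log_probs, old_log_probs, advantages, state_values, returns, entropy):
    # Probability ratio pi_new / pi_old, computed in log space for stability.
    ratios = torch.exp(new_log_probs - old_log_probs.detach())
    surr1 = ratios * advantages
    surr2 = torch.clamp(ratios, 1 - CLIP_EPS, 1 + CLIP_EPS) * advantages
    # Negate the clipped objective so that minimizing the loss maximizes it.
    policy_loss = -torch.min(surr1, surr2)
    value_loss = F.mse_loss(state_values, returns, reduction="none")
    loss = policy_loss + VALUE_COEF * value_loss + ENTROPY_COEF * entropy
    return loss.mean()
```

Since the entropy coefficient is negative, minimizing this loss pushes the entropy up, which encourages exploration.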