Table of Contents:

- Introduction

- Reinforcement Learning Laboratory

- Inspiration

- Development Tools

- Custom Env

- Learning Paradigm

- Results

- Solution Analysis

- Dev Challenges

- Further Considerations

This project was made possible thanks to the Reinforcement Learning laboratory that is part of the Master's course in "Artificial Intelligence Engineering" at the University of Modena and Reggio Emilia. The laboratory itself was managed and coordinated by Professor Simone Calderara.

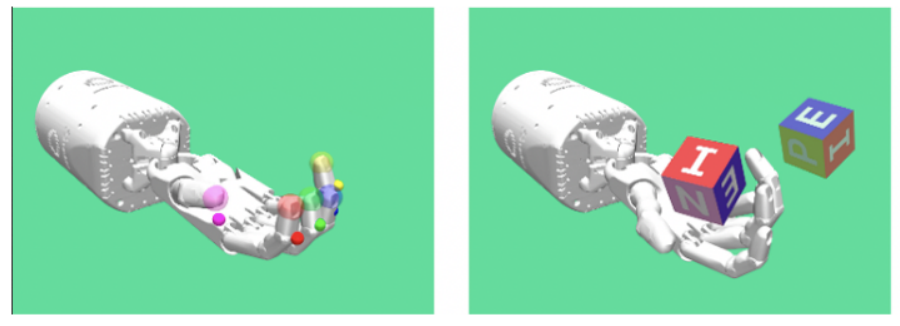

During the laboratory, we delved into the fundamentals of Reinforcement Learning and their implementation. We used various algorithms, both value-based and policy-based. The simpler ones, such as Q-learning and Deep Q-learning, were implemented from scratch, while for the more complex ones, like A2C and PPO, we used the implementations from Stable-Baselines3. We also used environment libraries such as Gymnasium, PettingZoo, and PyBullet. Furthermore, we developed some custom environments, one of which was used in this project. Below, you can see some of our implementations:

For the sake of completeness, we provide the materials upon which our studies were based, with the hope that they will serve as a useful reference for all readers to better understand and delve into Reinforcement Learning:

- Fundamentals of Reinforcement Learning

- Deep Mind Introduction to Reinforcement Learning (1)

- Deep Mind Introduction to Reinforcement Learning (2)

- Deep Mind Introduction to Reinforcement Learning (3)

- Reinforcement Learning Italian Lectures

- Spinning up

- Hugging Face Deep Reinforcement Learning Course

- Sutton and Barto RL Book

This work is inspired by TossingBot: Learning to Throw Arbitrary Objects with Residual Physics (Princeton University, Google, Columbia University, MIT), shown below.

To make the task a little easier from the exploration point of view, we replaced the fingers with a plate. In this setting, the robot needs to learn to throw an object using just a flat surface.

- MuJoCo

- Gymnasium-Robotics

- Stable-Baselines3

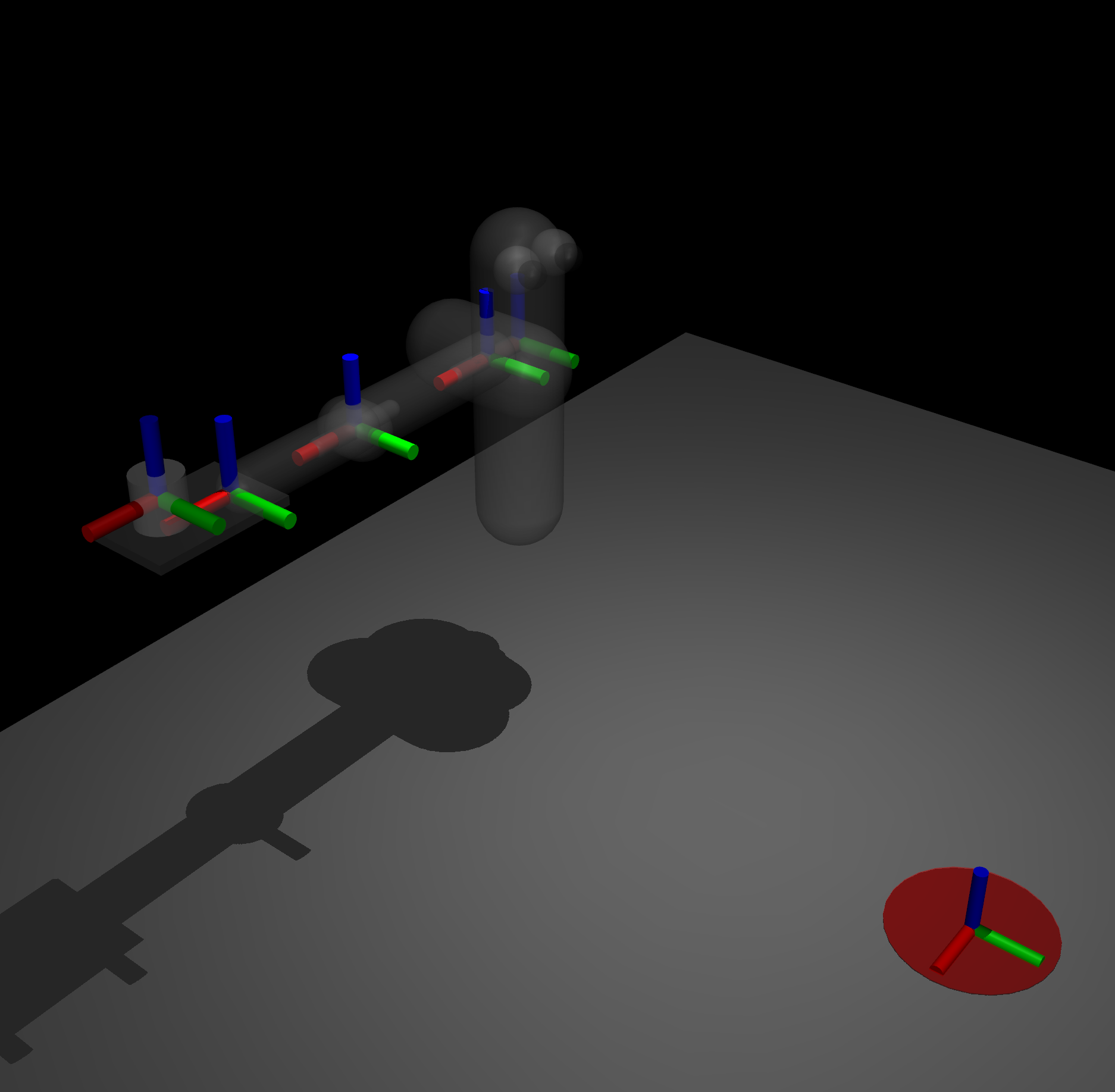

There are 19 observations:

- Shoulder rotation (rad)

- Elbow rotation (rad)

- Wrist rotation (rad)

- Shoulder angular velocity (rad/s)

- Elbow angular velocity (rad/s)

- Wrist angular velocity (rad/s)

- Wrist coordinate (m)

- Object coordinate (m)

- Goal coordinate (m)

Observation range: [-inf, inf]

Actions:

- Shoulder joint 2 DoF

- Elbow joint 1 DoF

- Wrist joint 2 DoF

Control range: [-2, 2] (N·m)
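The observation and action layout described above can be sketched as follows. This is a minimal illustration, not the project's environment code: it assumes the 19 observations split into 5 joint angles, 5 joint velocities, and three 3D coordinates (matching the 5 actuated DoF listed under Actions), and the ordering and the names `build_observation` and `clip_action` are hypothetical.

```python
from typing import List, Sequence

CTRL_LOW, CTRL_HIGH = -2.0, 2.0  # control range in N·m, from the text above
N_JOINTS = 5  # assumption: 2 (shoulder) + 1 (elbow) + 2 (wrist) DoF


def build_observation(
    joint_pos: Sequence[float],   # joint angles (rad)
    joint_vel: Sequence[float],   # joint angular velocities (rad/s)
    wrist_xyz: Sequence[float],   # wrist position (m)
    object_xyz: Sequence[float],  # object position (m)
    goal_xyz: Sequence[float],    # goal position (m)
) -> List[float]:
    """Concatenate the state into a flat 19-dimensional vector (assumed order)."""
    assert len(joint_pos) == N_JOINTS and len(joint_vel) == N_JOINTS
    assert len(wrist_xyz) == len(object_xyz) == len(goal_xyz) == 3
    return [*joint_pos, *joint_vel, *wrist_xyz, *object_xyz, *goal_xyz]


def clip_action(action: Sequence[float]) -> List[float]:
    """Clamp each joint torque to the control range."""
    assert len(action) == N_JOINTS
    return [min(max(a, CTRL_LOW), CTRL_HIGH) for a in action]


obs = build_observation(
    [0.0] * 5, [0.0] * 5, [0.1, 0.2, 0.3], [0.1, 0.2, 0.35], [1.0, 0.0, 0.0]
)
torques = clip_action([3.0, -0.5, 0.0, -4.0, 1.5])
```

Here `obs` has 19 entries and the out-of-range torques are clamped to ±2 N·m.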

The first term forces the learning process to focus on throwing the object effectively. The second term forces the learning process to develop an efficient motion of the robotic arm. The 0.1 weight scales down the second term so that the agent learns the efficient motion only after it is able to solve the main task.
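A two-term reward of this kind could look like the sketch below. Only the 0.1 weight comes from the text; the concrete form of each term (negative object-to-goal distance and negative squared torque) is an assumption for illustration, and `throw_reward` is a hypothetical name.

```python
import math
from typing import Sequence

CTRL_WEIGHT = 0.1  # weight of the second term, from the text above


def throw_reward(
    object_xyz: Sequence[float],
    goal_xyz: Sequence[float],
    action: Sequence[float],
) -> float:
    # First term (assumed form): penalize the distance between object and goal.
    dist_term = -math.dist(object_xyz, goal_xyz)
    # Second term (assumed form): penalize large torques to favor efficient motion.
    ctrl_term = -sum(a * a for a in action)
    return dist_term + CTRL_WEIGHT * ctrl_term


# Distance 5 m and squared torque 6 give approximately -5.6.
reward = throw_reward([0.0, 0.0, 0.0], [3.0, 4.0, 0.0], [1.0, -1.0, 0.0, 0.0, 2.0])
```

With the small weight, the distance term dominates the total until the object lands near the goal, after which the torque penalty becomes the main remaining signal.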

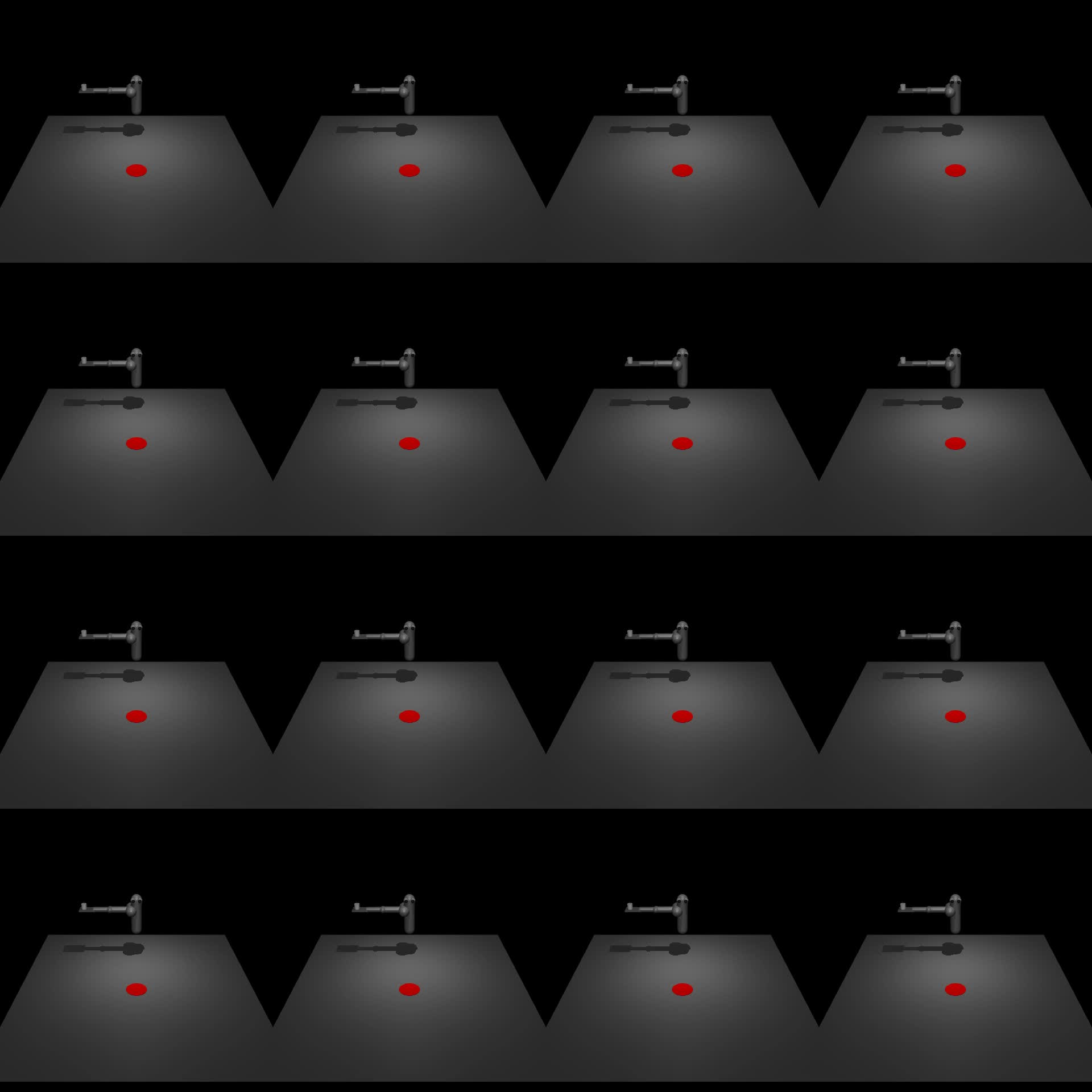

Vectorized environments allow multiple instances of the same environment to run in parallel, which leads to a more efficient utilization of computing resources. This setting also enhances exploration, as the agent can explore different parts of the state space simultaneously. Every instance has its own separate state.

We used 16 parallel environments during training.
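The idea of independent instances behind one batched interface can be sketched in plain Python. `ToyEnv` and `ToyVecEnv` are hypothetical stand-ins, not the Gymnasium or Stable-Baselines3 API, and this toy steps the instances one after another, whereas a real vectorized environment may run them in separate processes.

```python
import random
from typing import List, Tuple

N_ENVS = 16  # number of parallel environments reported above


class ToyEnv:
    """Hypothetical stand-in for the throwing environment (not the real one)."""

    def __init__(self, seed: int) -> None:
        self.rng = random.Random(seed)  # each instance owns its RNG and state
        self.state = 0.0

    def reset(self) -> float:
        self.state = self.rng.uniform(-1.0, 1.0)
        return self.state

    def step(self, action: float) -> Tuple[float, float]:
        self.state += action
        reward = -abs(self.state)
        return self.state, reward


class ToyVecEnv:
    """Exposes several independent environments through one batched call."""

    def __init__(self, n_envs: int) -> None:
        self.envs = [ToyEnv(seed=i) for i in range(n_envs)]

    def reset(self) -> List[float]:
        return [env.reset() for env in self.envs]

    def step(self, actions: List[float]) -> Tuple[List[float], List[float]]:
        results = [env.step(a) for env, a in zip(self.envs, actions)]
        states, rewards = zip(*results)
        return list(states), list(rewards)


vec_env = ToyVecEnv(N_ENVS)
states = vec_env.reset()
next_states, rewards = vec_env.step([0.1] * N_ENVS)
```

Because every instance is seeded differently, the 16 environments start from different states, which is what lets the agent see different parts of the state space in a single batch.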

Reinforcement Learning is the third paradigm of Machine Learning, alongside supervised and unsupervised learning. In this setting, the agent learns to take actions by exploring the environment, observing the outcomes, and adjusting its strategy (policy) to maximize the total reward.

Deep Reinforcement Learning is a specific approach within RL that uses deep neural networks to approximate complex decision-making functions, i.e. to handle high-dimensional and intricate state and action spaces.

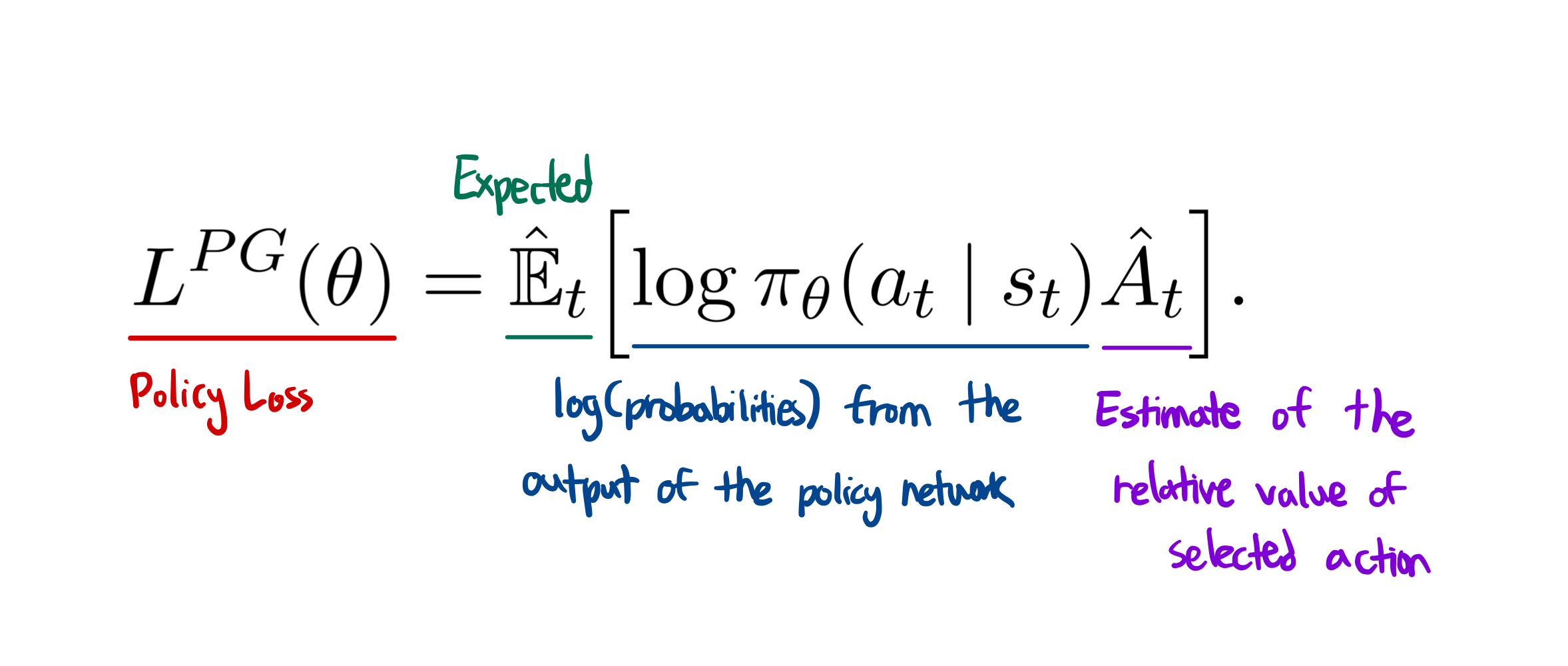

Policy gradient methods aim to directly learn a policy that solves the given task.
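A common form of the policy gradient, written with the advantage $A_t$ and given here as an assumption about the exact form used in this project, is:

$$
\nabla_\theta J(\theta) = \mathbb{E}_t\left[\nabla_\theta \log \pi_\theta(a_t \mid s_t)\, A_t\right]
$$

Here $\pi_\theta(a_t \mid s_t)$ is the probability that the policy with parameters $\theta$ assigns to action $a_t$ in state $s_t$.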

where:

- $A_t$ is called the 'Advantage' and is defined as $A_t = G_t - V_t$

- $G_t$ is the return, i.e. the discounted sum of rewards in a single episode

- $V_t$ is the value function, i.e. the estimate of $G_t$ made at time $t$
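The definitions above can be made concrete with a short sketch that computes $G_t$ and $A_t$ for one episode. The discount factor, rewards, and value estimates are made-up numbers chosen for easy arithmetic, and the function names are hypothetical.

```python
from typing import List, Sequence


def discounted_returns(rewards: Sequence[float], gamma: float) -> List[float]:
    """G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for each step t."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk the episode backwards so each G_t reuses G_{t+1}.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def advantages(returns: Sequence[float], values: Sequence[float]) -> List[float]:
    """A_t = G_t - V_t."""
    return [g - v for g, v in zip(returns, values)]


rewards = [0.0, 0.0, 1.0]   # illustrative episode: reward only at the end
values = [0.5, 0.5, 0.5]    # illustrative value estimates V_t
returns = discounted_returns(rewards, gamma=0.5)  # [0.25, 0.5, 1.0]
adv = advantages(returns, values)                 # [-0.25, 0.0, 0.5]
```

A positive advantage means the episode went better than the value function predicted at that step, so the actions taken there are made more likely.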

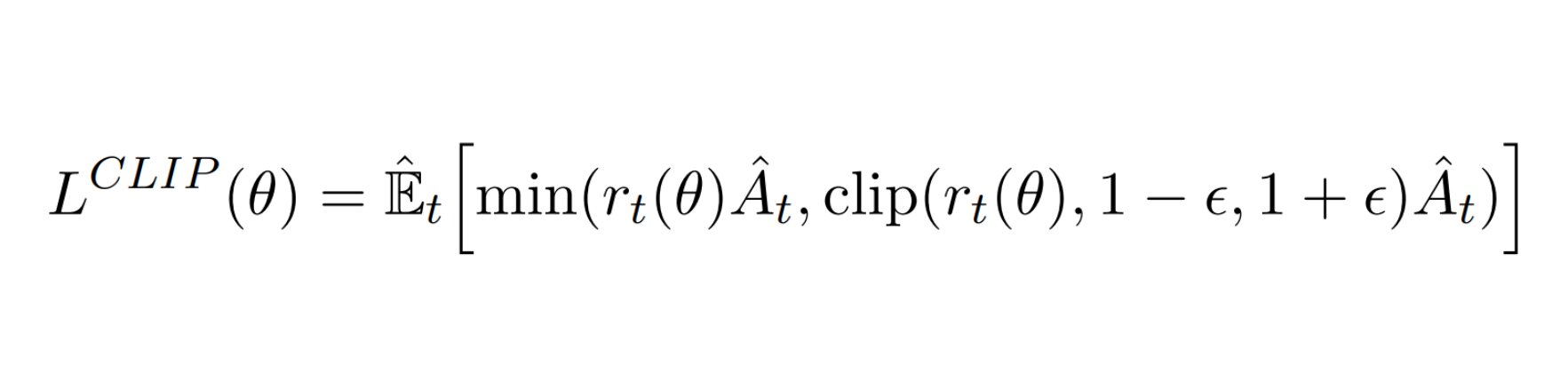

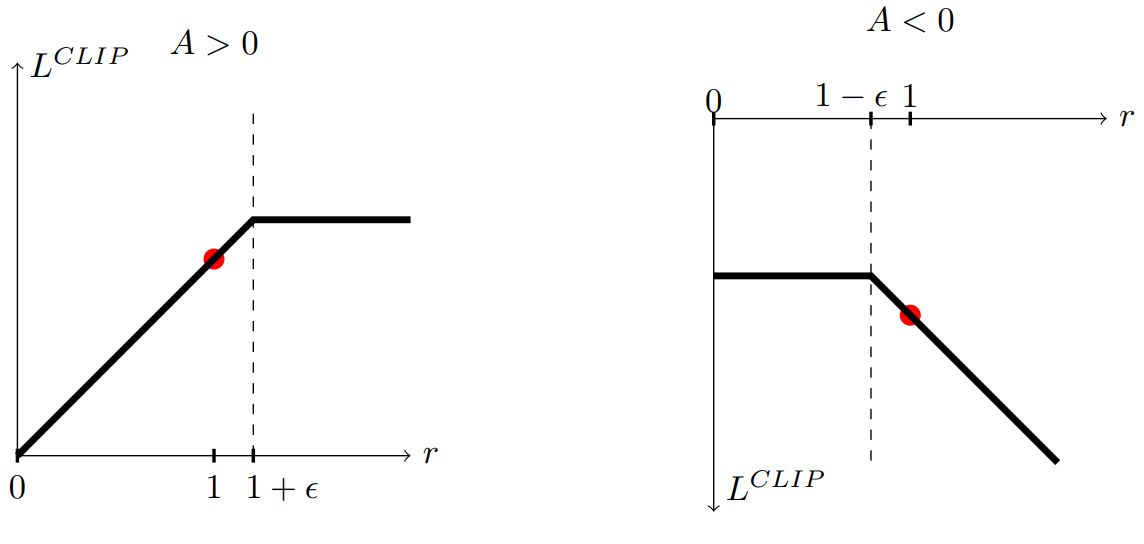

Policy gradient methods are trained on-policy, i.e. the policy is optimized on a history of observations sampled by the policy itself. This leads to major instabilities in the learning process, which sometimes cause the policy to diverge because it keeps chasing a constantly shifting data distribution. When this happens, the agent is no longer working towards the main goal and stops learning. To solve this issue, OpenAI engineered Proximal Policy Optimization (PPO). In short, this policy gradient method forces the agent to learn a "proximal" policy, i.e. a policy that is not too different from the one before the update. The plots below show how the objective is clipped in order to avoid extreme changes to the main strategy.

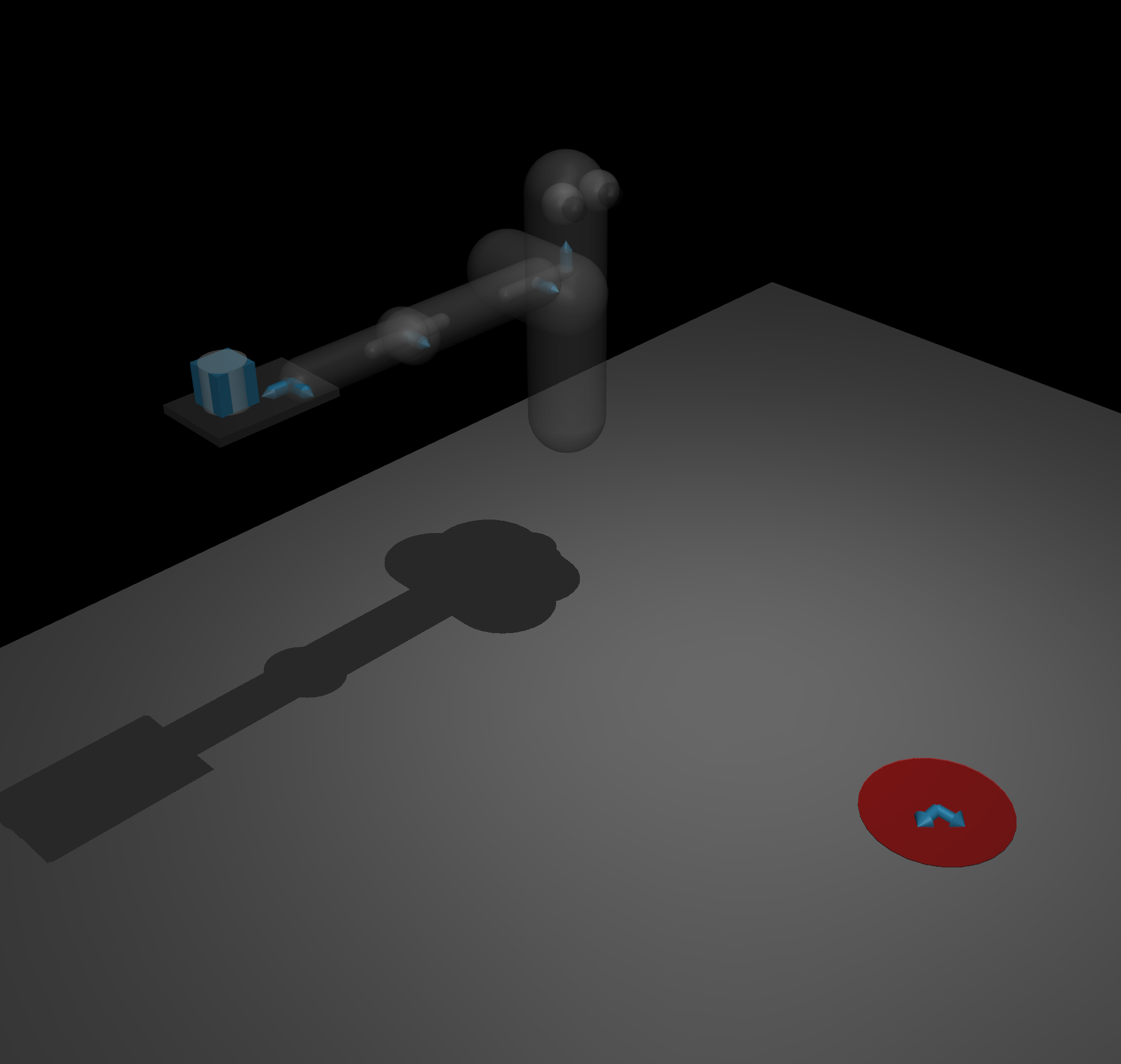
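The clipping can be sketched for a single sample as follows. This is the per-sample clipped surrogate objective from the PPO paper, not the Stable-Baselines3 implementation; the clip range of 0.2 is the value suggested in the paper and is an assumption for this project.

```python
import math


def ppo_clip_objective(
    logp_new: float, logp_old: float, advantage: float, clip_eps: float = 0.2
) -> float:
    """Per-sample PPO objective: min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    # Probability ratio between the new policy and the one that collected the data.
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    # Taking the minimum keeps the more pessimistic of the two estimates.
    return min(ratio * advantage, clipped_ratio * advantage)


# Ratio 1.5 with a positive advantage: the gain is capped at 1.2 * A.
capped_gain = ppo_clip_objective(math.log(1.5), 0.0, advantage=1.0)
# Ratio 0.5 with a negative advantage: the objective is held at 0.8 * A.
capped_loss = ppo_clip_objective(math.log(0.5), 0.0, advantage=-1.0)
# Ratio 1.5 with a negative advantage: not clipped, the full penalty applies.
full_penalty = ppo_clip_objective(math.log(1.5), 0.0, advantage=-1.0)
```

Once the ratio leaves the interval $[1 - \epsilon, 1 + \epsilon]$ in the direction that would improve the objective, the gradient for that sample vanishes, so a single batch cannot push the policy far from the one that collected the data.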

Curriculum learning is the concept of decomposing a complex task into simpler handcrafted subtasks in order to reach the overall goal effectively.

We investigated this approach in order to teach the agent both to throw the object and to track the target. The first curriculum task was to learn the throwing motion towards a static target. The next two curriculum tasks focused on the tracking skill by gradually increasing the spawn offset of the target.

We trained the agent for 12M steps on each task.
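The three-stage schedule can be sketched as a simple loop. The 12M steps per task and the 16 environments come from the text; the spawn offset values, the stage names, and the `train_fn` callback are hypothetical, and the sketch assumes the same agent continues training from one stage to the next.

```python
from dataclasses import dataclass
from typing import Callable, List

STEPS_PER_TASK = 12_000_000  # from the text above
N_ENVS = 16                  # parallel environments reported earlier


@dataclass(frozen=True)
class CurriculumStage:
    name: str
    max_spawn_offset_m: float  # hypothetical values; not given in the text


STAGES: List[CurriculumStage] = [
    CurriculumStage("static target", 0.0),
    CurriculumStage("small spawn offset", 0.25),
    CurriculumStage("large spawn offset", 0.5),
]


def run_curriculum(
    stages: List[CurriculumStage],
    train_fn: Callable[[CurriculumStage, int], None],
) -> int:
    """Train on each stage in order and return the total number of steps."""
    total = 0
    for stage in stages:
        # Assumption: the same agent keeps its weights across stages.
        train_fn(stage, STEPS_PER_TASK)
        total += STEPS_PER_TASK
    return total


log = []
total_steps = run_curriculum(STAGES, lambda stage, steps: log.append((stage.name, steps)))
steps_per_env = STEPS_PER_TASK // N_ENVS  # 750_000 steps per environment per task
```

Under these numbers the full curriculum amounts to 36M environment steps, or 750k steps per parallel environment for each task.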

We realized that, in order to solve the task, the agent learned to "slap" the object. This resembles the behavior of the human hand during a basketball shot.

- Does not require any explicit model of the kinematics, neither direct nor inverse. This is a big plus when dealing with very complex dexterous robots.

- Does not require any model of the dynamics, neither direct nor inverse.

- No explicit control model.

- Does not require multiple control models for different interaction patterns (such as interaction and interaction-free tasks). Just one comprehensive controller.

- Online adaptation to variations (e.g. the mathematical model of the electric actuators changes over time).

- Scalable, fast training on complex tasks thanks to vectorized environments.

- Lowers the entry-level skills required of the operator.

- Short time to deploy.

- Broken video recording library for GPU-parallel vectorized environments.

- Poor documentation of the MuJoCo library.

- Poor documentation of Stable-Baselines3 regarding curriculum learning.

- Different base environment classes between tasks, which are very library dependent. This makes it hard to integrate a custom pipeline.

This section describes some considerations we made to further improve the flexibility and the effectiveness of this learning approach.

We realized that, by introducing vision-based control feedback, this solution could be adopted more easily in a real environment.

- End-to-End Training: The observations are extracted by a Neural Network instead of being retrieved from the simulator API.

Automatic Curriculum Learning (ACL) is a process that automatically identifies the correct sequence of tasks used to train the agent, instead of defining them by hand.

Parallel Multitask RL is a training pattern where multiple pretext tasks are trained together in order to share useful knowledge for the main task. It is similar to ACL (above), but the tasks are solved in parallel and not sequentially.

Honorable mention to DeXtreme: Transfer of Agile In-Hand Manipulation from Simulation to Reality, a work by NVIDIA. This work shows that it is possible to go from simulation to reality effectively, even on a complex task like robotic hand dexterity.