Francis Engelmann¹, Siyu Tang¹

¹ETH Zurich, ²RWTH Aachen University. *,† equal contribution

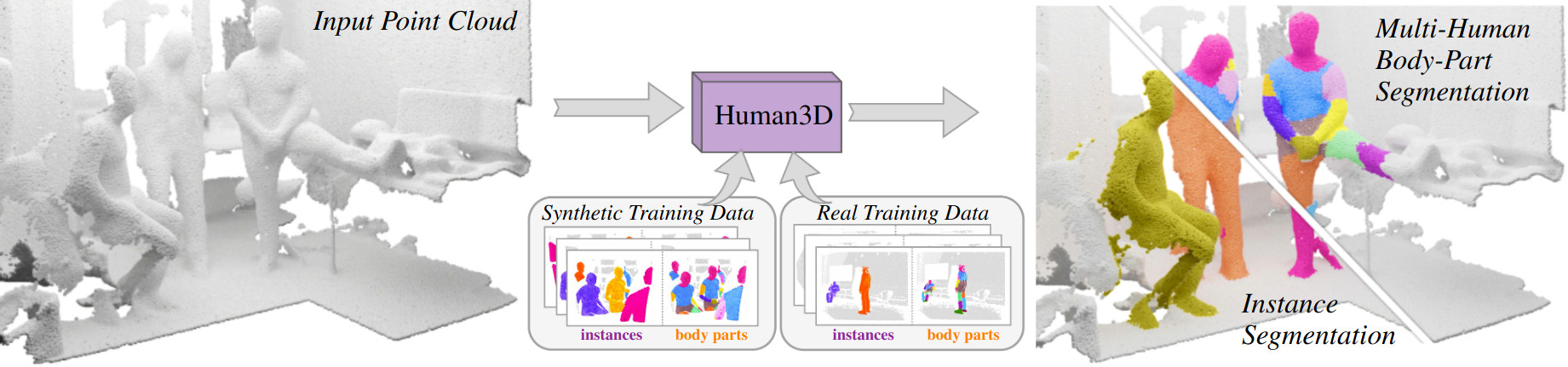

We propose the first multi-human body-part segmentation model, called Human3D 🧑‍🤝‍🧑, that operates directly on 3D scenes. In an extensive analysis, we validate the benefits of training on synthetic data across multiple baselines and tasks.

[Project Webpage] [Paper]

We adapt the codebases of Mix3D and Mask3D, which provide a highly modularized framework for 3D scene understanding tasks based on the MinkowskiEngine.

├── mix3d

│ ├── main_instance_segmentation.py <- the main file

│ ├── conf <- hydra configuration files

│ ├── datasets

│ │ ├── preprocessing <- folder with preprocessing scripts

│ │ ├── semseg.py <- indoor dataset

│ │ └── utils.py

│ ├── models <- Human3D modules

│ ├── trainer

│ │ ├── __init__.py

│ │ └── trainer.py <- train loop

│ └── utils

├── data

│ ├── processed <- folder for preprocessed datasets

│ └── raw <- folder for raw datasets

├── scripts <- train scripts

├── docs

├── README.md

└── saved <- folder that stores models and logs

The main dependencies of the project are the following:

python: 3.10.9

cuda: 11.3

You can set up a conda environment as follows:

# Some users experienced issues on Ubuntu with an AMD CPU

# Install libopenblas-dev (issue #115, thanks WindWing)

# sudo apt-get install libopenblas-dev

export TORCH_CUDA_ARCH_LIST="6.0 6.1 6.2 7.0 7.2 7.5 8.0 8.6"

conda env create -f environment.yaml

conda activate human3d_cuda113

pip3 install torch==1.12.1+cu113 torchvision==0.13.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu113

pip3 install torch-scatter -f https://data.pyg.org/whl/torch-1.12.1+cu113.html

pip3 install 'git+https://github.com/facebookresearch/detectron2.git@710e7795d0eeadf9def0e7ef957eea13532e34cf' --no-deps

cd third_party

git clone --recursive "https://github.com/NVIDIA/MinkowskiEngine"

cd MinkowskiEngine

git checkout 02fc608bea4c0549b0a7b00ca1bf15dee4a0b228

python setup.py install --force_cuda --blas=openblas

cd ../pointnet2

python setup.py install

cd ../../

pip3 install pytorch-lightning==1.7.2
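
After running the commands above, it can help to confirm that the pinned versions actually ended up in the active environment. The following is a minimal sketch using only the Python standard library; the `EXPECTED` table is copied from the `pip3 install` lines above, while the helper names `base_version` and `check_versions` are hypothetical and not part of this repository. It does not check CUDA or MinkowskiEngine.

```python
from importlib import metadata

# Version pins copied from the install commands above. Local build tags
# such as "+cu113" are ignored when comparing.
EXPECTED = {
    "torch": "1.12.1",
    "torchvision": "0.13.1",
    "pytorch-lightning": "1.7.2",
}


def base_version(version):
    """Strip a local build suffix, e.g. '1.12.1+cu113' -> '1.12.1'."""
    return version.split("+", 1)[0]


def check_versions(expected, lookup=metadata.version):
    """Return {package: (expected, found)} for each missing or mismatched pin."""
    problems = {}
    for package, wanted in expected.items():
        try:
            found = base_version(lookup(package))
        except metadata.PackageNotFoundError:
            found = None  # package is not installed at all
        if found != wanted:
            problems[package] = (wanted, found)
    return problems


if __name__ == "__main__":
    issues = check_versions(EXPECTED)
    for package, (wanted, found) in issues.items():
        print(f"{package}: expected {wanted}, found {found or 'not installed'}")
    if not issues:
        print("All pinned packages match.")
```

Running the script inside the activated conda environment prints one line per mismatch, which is usually quicker to read than a failed import later in training.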

After installing the dependencies, we preprocess the datasets.

Please refer to the instructions to obtain the synthetic dataset and the dataset based on EgoBody.

Put the datasets in data/raw/.

python datasets/preprocessing/humanseg_preprocessing.py preprocess \
--data_dir="../../data/raw/egobody" \
--save_dir="../../data/processed/egobody" \
--dataset="egobody"

python datasets/preprocessing/humanseg_preprocessing.py preprocess \
--data_dir="../../data/raw/synthetic_humans" \
--save_dir="../../data/processed/synthetic_humans" \
--dataset="synthetic_humans" \
--min_points=20000 \
--min_instances=1
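
Before launching the preprocessing commands, a quick check that the raw datasets are where the commands expect them can save a failed run. The sketch below assumes the two folder names used in the `--data_dir` arguments above (`egobody` and `synthetic_humans`) sit directly under `data/raw/`; the function `missing_datasets` is a hypothetical helper, not part of the repository, and it only tests that each folder exists and is non-empty.

```python
from pathlib import Path

# Folder names taken from the --data_dir arguments above.
DATASETS = ("egobody", "synthetic_humans")


def missing_datasets(raw_root, names=DATASETS):
    """Return the dataset folders under raw_root that are absent or empty."""
    root = Path(raw_root)
    missing = []
    for name in names:
        folder = root / name
        # A dataset counts as present only if its folder exists and has content.
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(name)
    return missing


if __name__ == "__main__":
    absent = missing_datasets("data/raw")
    if absent:
        print("Missing or empty raw datasets:", ", ".join(absent))
    else:
        print("Both raw datasets found.")
```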

Training and evaluation scripts are located in the scripts/ folder.

We provide detailed scores and network configurations with trained checkpoints.

We pre-trained with synthetic data and fine-tuned on EgoBody.

Both checkpoints can be conveniently downloaded into the checkpoint/ folder with ./download_checkpoints.sh.

| Method | Task | Config | Checkpoint 💾 | Visualizations 🔭 |
|---|---|---|---|---|
| Mask3D | Human Instance | config | checkpoint | visualizations |
| Human3D | MHBPS | config | checkpoint | visualizations |

Tip: Setting data.save_visualizations=true saves the MHBPS predictions using PyViz3D.
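
The dotted key in the tip uses the Hydra override syntax that goes with the configuration files in conf/. As a rough illustration of how such an override addresses a nested option, the toy function below applies one `key=value` string to a plain dictionary. It is a simplified stand-in written for this example, not Hydra's own parser, and the starting value `False` is an assumption about the default.

```python
def apply_override(config, override):
    """Apply one 'a.b.c=value' override to a nested dict, in place."""
    dotted_key, _, raw_value = override.partition("=")
    *parents, leaf = dotted_key.split(".")
    node = config
    for part in parents:
        # Walk down the nesting, creating intermediate groups if needed.
        node = node.setdefault(part, {})
    # Interpret true/false as booleans; keep any other value as a string.
    node[leaf] = {"true": True, "false": False}.get(raw_value.lower(), raw_value)
    return config


# Assumed default: visualizations are off until the override is applied.
config = {"data": {"save_visualizations": False}}
apply_override(config, "data.save_visualizations=true")
print(config)  # {'data': {'save_visualizations': True}}
```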

@inproceedings{takmaz23iccv,

title = {{3D Segmentation of Humans in Point Clouds with Synthetic Data}},

author = {Takmaz, Ay\c{c}a and Schult, Jonas and Kaftan, Irem and Ak\c{c}ay, Mertcan

and Leibe, Bastian and Sumner, Robert and Engelmann, Francis and Tang, Siyu},

booktitle = {{International Conference on Computer Vision}},

year = {2023}

}

This repository is based on the Mix3D and Mask3D codebases. The Mask Transformer implementation largely follows Mask2Former.