- 需要获取相机曝光时间和传输时间,补偿给图像。获取相机曝光时间和传输时间,补偿给图像。

- 我选择了在视觉部分只更新Bias,camera和lidar不同步触发的话好像不能更新state。

- 传输时间计算方法:以RGB,网口为例。每一万个像素大约0.0286MB,我使用的相机为155w像素,一张图片4.45MB 网口传输速度在海康威视的可视化软件最下面一栏(带宽)可以读到,377Mbps,换算过来是47.125MB/s,传输时间为0.0944s 也就是94ms,这个数字我感觉很不合理,但是把这个时间补偿进去效果还是最好的。

- 更新了大恒相机的驱动,里面有估算传输时间的代码

感谢JJ哥的指导,后面可能还是要换成硬件触发方案。

如果有更合理的方案还望能够指点一下,感谢!。

- 测试雷达退化环境

- iVox替换iKdtree (因为ikdtree长时间运行内存会持续上涨)

FAST-LIVO is a fast LiDAR-Inertial-Visual odometry system, which builds on two tightly-coupled and direct odometry subsystems: a VIO subsystem and a LIO subsystem. The LIO subsystem registers raw points (instead of feature points on e.g., edges or planes) of a new scan to an incrementally-built point cloud map. The map points are additionally attached with image patches, which are then used in the VIO subsystem to align a new image by minimizing the direct photometric errors without extracting any visual features (e.g., ORB or FAST corner features).

Contributors: Chunran Zheng 郑纯然, Qingyan Zhu 朱清岩, Wei Xu 徐威

Our paper has been accepted to IROS2022, which is now available on arXiv: FAST-LIVO: Fast and Tightly-coupled Sparse-Direct LiDAR-Inertial-Visual Odometry.

If our code is used in your project, please cite our paper following the bibtex below:

@article{zheng2022fast,

title={FAST-LIVO: Fast and Tightly-coupled Sparse-Direct LiDAR-Inertial-Visual Odometry},

author={Zheng, Chunran and Zhu, Qingyan and Xu, Wei and Liu, Xiyuan and Guo, Qizhi and Zhang, Fu},

journal={arXiv preprint arXiv:2203.00893},

year={2022}

}

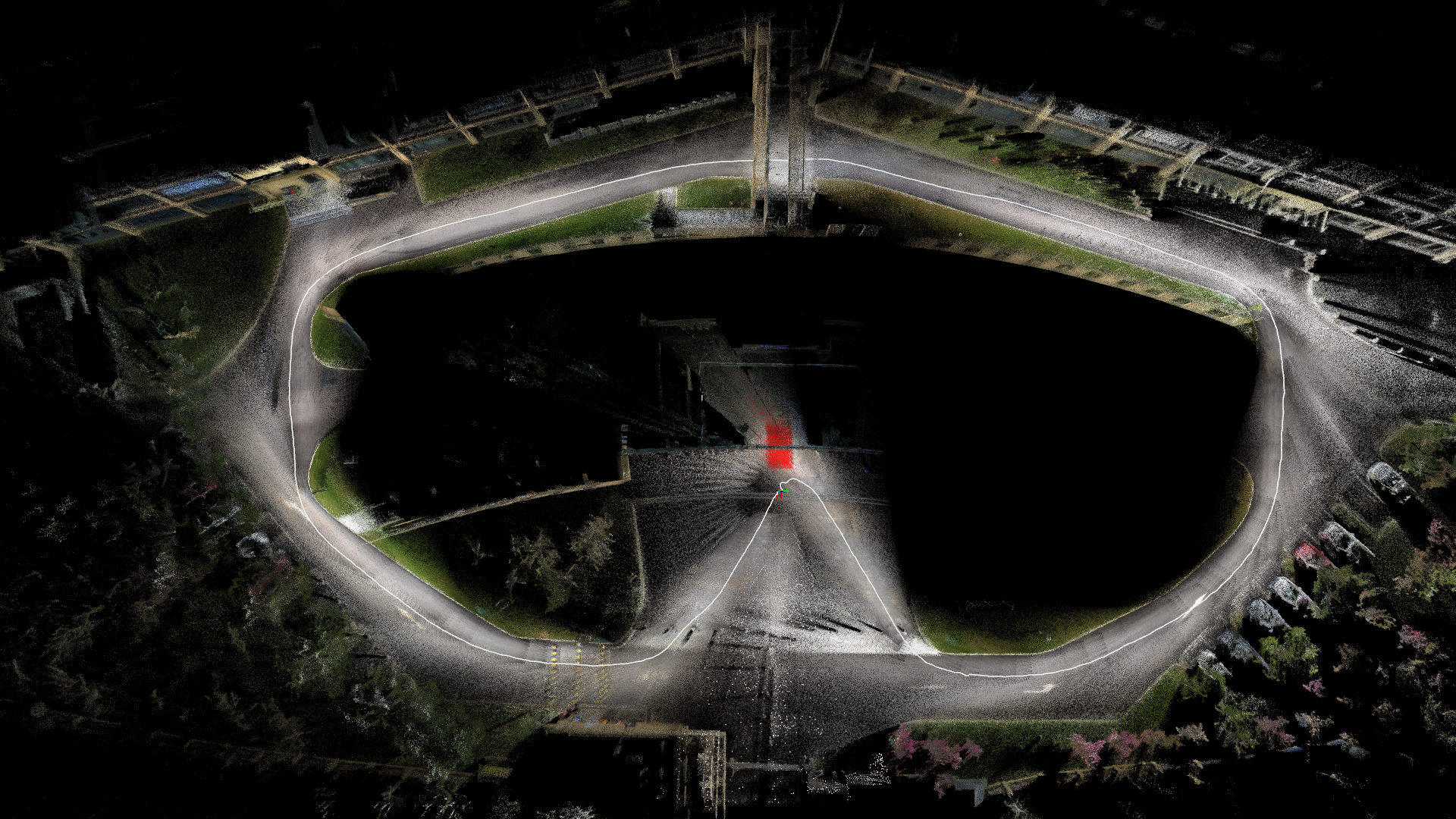

Our accompanying videos are now available on YouTube (click below images to open) and Bilibili.

Ubuntu 16.04~20.04. ROS Installation.

PCL>=1.6, Follow PCL Installation.

Eigen>=3.3.4, Follow Eigen Installation.

OpenCV>=3.2, Follow Opencv Installation.

Sophus Installation for the non-templated/double-only version.

git clone https://github.com/strasdat/Sophus.git

cd Sophus

git checkout a621ff

mkdir build && cd build && cmake ..

make

sudo make installVikit contains camera models, some math and interpolation functions that we need. Vikit is a catkin project, therefore, download it into your catkin workspace source folder.

cd catkin_ws/src

git clone https://github.com/uzh-rpg/rpg_vikit.gitFollow livox_ros_driver Installation.

Clone the repository and catkin_make:

cd ~/catkin_ws/src

git clone https://github.com/hku-mars/FAST-LIVO

cd ../

catkin_make

source ~/catkin_ws/devel/setup.bash

Please note that our system can only work in the hard synchronized LiDAR-Inertial-Visual dataset at present due to the unestimated time offset between the camera and IMU. The frame headers of the camera and the LiDAR are at the same phisical trigger time.

Edit config/xxx.yaml to set the below parameters:

lid_topic: The topic name of LiDAR data.imu_topic: The topic name of IMU data.img_topic: The topic name of camera data.img_enable: Enbale vio submodule.lidar_enable: Enbale lio submodule.point_filter_num: The sampling interval for a new scan. It is recommended that3~4for faster odometry,1~2for denser map.outlier_threshold: The outlier threshold value of photometric error (square) of a single pixel. It is recommended that50~250for the darker scenes,500~1000for the brighter scenes. The smaller the value is, the faster the vio submodule is, but the weaker the anti-degradation ability is.img_point_cov: The covariance of photometric errors per pixel.laser_point_cov: The covariance of point-to-plane redisual per point.filter_size_surf: Downsample the points in a new scan. It is recommended that0.05~0.15for indoor scenes,0.3~0.5for outdoor scenes.filter_size_map: Downsample the points in LiDAR global map. It is recommended that0.15~0.3for indoor scenes,0.4~0.5for outdoor scenes.

After setting the appropriate topic name and parameters, you can directly run FAST-LIVO on the dataset.

Download our collected rosbag files via OneDrive (FAST-LIVO-Datasets) containing 4 rosbag files.

roslaunch fast_livo mapping_avia.launch

rosbag play YOUR_DOWNLOADED.bag

roslaunch fast_livo mapping_avia_ntu.launch

rosbag play YOUR_DOWNLOADED.bag

In order to make it easier for our users to reproduce our work and benefit the robotics community, we also release a simple version of our handheld device, where you can access the CAD source files in our_sensor_suite. The drivers of various components in our hardware system are available in Handheld_ws.

Thanks for FAST-LIO2 and SVO2.0. Thanks for Livox_Technology for equipment support.

Thanks Jiarong Lin for the helps in the experiments.

The source code of this package is released under GPLv2 license. We only allow it free for academic usage. For commercial use, please contact Dr. Fu Zhang fuzhang@hku.hk.

For any technical issues, please contact me via email zhengcr@connect.hku.hk.